5 Structured Thinking Techniques for Data Scientists

Try 1 of these 5 structured thinking techniques as you wrestle with your next data science project.

Structured thinking is a framework for solving unstructured problems, which covers just about all data science problems. A structured approach not only helps you solve problems faster but also helps you identify the parts of a problem that may need extra attention.

Think of structured thinking like the map of a city you’re visiting for the first time. Without a map, you’ll probably find it difficult to reach your destination. Even if you did eventually reach it, it would probably take you at least twice as long.

What Is Structured Thinking?

Here’s where the analogy breaks down: Structured thinking is a framework and not a fixed mindset; you can modify these techniques based on the problem you’re trying to solve. Let’s look at five structured thinking techniques to use in your next data science project.

- Six Step Problem Solving Model

- Eight Disciplines of Problem Solving

- The Drill Down Technique

- The Cynefin Framework

- The 5 Whys Technique

More From Sara A. Metwalli 3 Reasons Data Scientists Need Linear Algebra

1. Six Step Problem Solving Model

This technique is the simplest and easiest to use. As the name suggests, this technique uses six steps to solve a problem, which are:

1. Have a clear and concise problem definition.

2. Study the roots of the problem.

3. Brainstorm possible solutions to the problem.

4. Examine the possible solutions and choose the best one.

5. Implement the solution effectively.

6. Evaluate the results.

This model follows the mindset of continuous development and improvement. So, on step six, if your results didn’t turn out the way you wanted, go back to step four and choose another solution (or to step one and try to define the problem differently).
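The feedback loop described above (evaluate, fall back to the next-best solution, and redefine the problem if every option fails) can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a prescribed implementation: `six_step_solve`, `brainstorm`, `score`, `try_solution`, and `redefine` are hypothetical names standing in for the human work done in each step.

```python
from typing import Callable, List, Optional


def six_step_solve(
    problem: str,
    brainstorm: Callable[[str], List[str]],   # step 3 (hypothetical helper)
    score: Callable[[str], float],            # step 4: rank candidate solutions
    try_solution: Callable[[str], bool],      # steps 5-6: implement, then evaluate
    redefine: Callable[[str], str],           # back to step 1 when nothing works
    max_rounds: int = 3,
) -> Optional[str]:
    """Return the first solution whose evaluation succeeds, or None."""
    for _ in range(max_rounds):
        # Step 4: examine the candidates and order them best-first.
        candidates = sorted(brainstorm(problem), key=score, reverse=True)
        for solution in candidates:
            # Steps 5-6: implement and evaluate; on failure, fall back to
            # the next-best candidate (i.e. return to step 4).
            if try_solution(solution):
                return solution
        # Every candidate failed: return to step 1 and redefine the problem.
        problem = redefine(problem)
    return None


# Hypothetical usage: candidates scored by name length, only "index" works.
answer = six_step_solve(
    "slow reports",
    brainstorm=lambda p: ["cache", "index", "rewrite"],
    score=len,
    try_solution=lambda s: s == "index",
    redefine=lambda p: p + " (redefined)",
)
print(answer)  # index
```

The `max_rounds` cap is a design choice to keep the loop from running forever; in practice you decide when to stop redefining the problem.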

My favorite part about this simple technique is how easy it is to alter based on the specific problem you’re attempting to solve.


2. Eight Disciplines of Problem Solving

The eight disciplines of problem solving offers a practical plan to solve a problem using an eight-step process. You can think of this technique as an extended, more detailed version of the six-step problem-solving model.

Each of the eight disciplines in this process should move you a step closer to finding the optimal solution to your problem. So, once you’ve gathered the prerequisites for your problem, you can work through disciplines D1 to D8.

D1: Put together your team. Having a team with the skills the project requires can make moving forward much easier.

D2: Define the problem. Describe the problem in quantifiable terms: the who, what, where, when, why and how.

D3: Develop a working plan.

D4: Determine and identify root causes. Identify the root causes of the problem using cause-and-effect diagrams to map causes against their effects.

D5: Choose and verify permanent corrections. Based on the root causes, assess the work plan you developed earlier and edit it as needed.

D6: Implement the corrected action plan.

D7: Assess your results.

D8: Congratulate your team. After the end of a project, it’s essential to take a step back and appreciate the work you’ve all done before jumping into a new project.

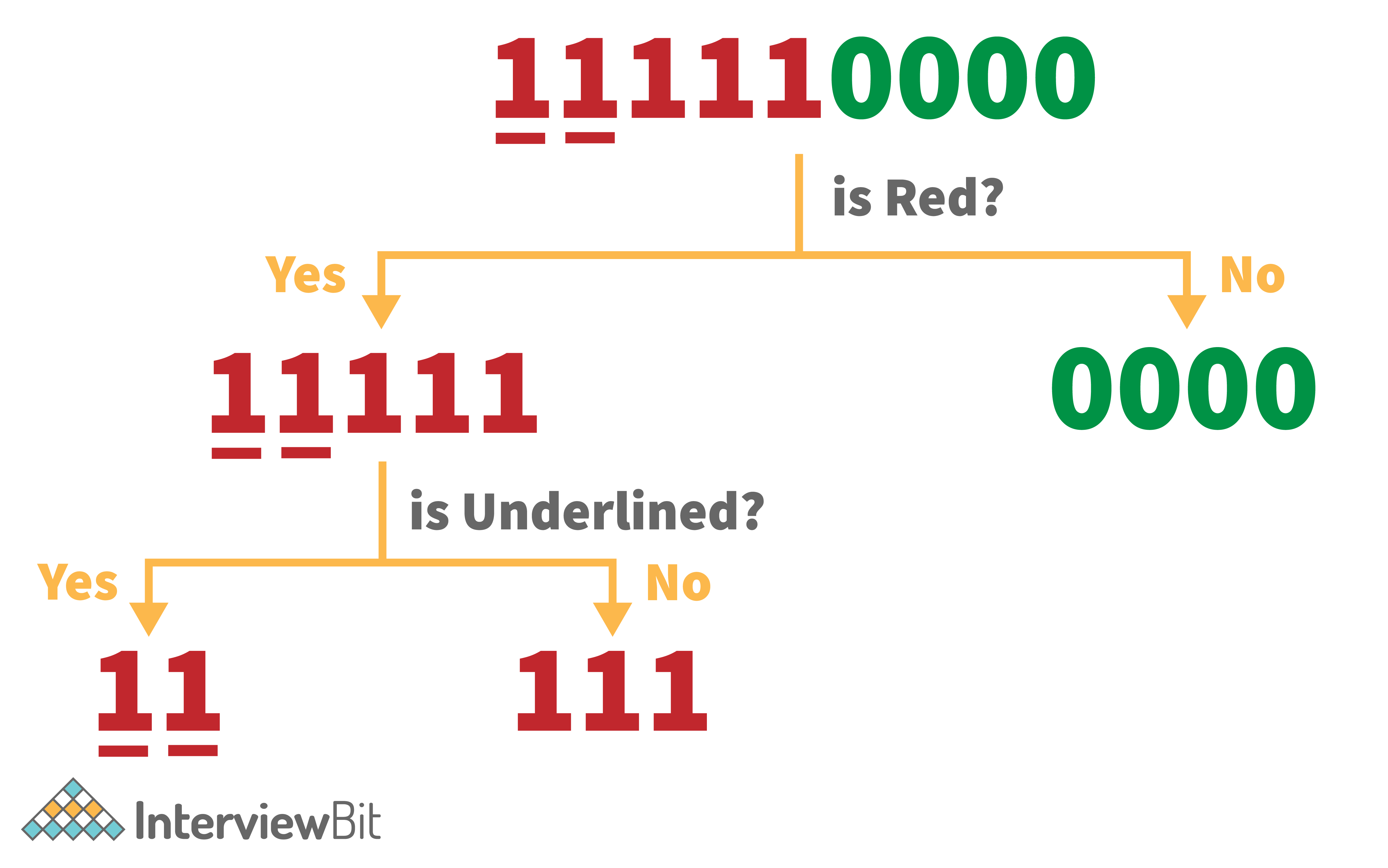

3. The Drill Down Technique

The drill down technique is more suitable for large, complex problems with multiple collaborators. The whole purpose of using this technique is to break down a problem to its roots to make finding solutions that much easier. To use the drill down technique, you first need to create a table. The first column of the table will contain the outlined definition of the problem, followed by a second column containing the factors causing this problem. Finally, the third column will contain the cause of the second column's contents, and you’ll continue to drill down on each column until you reach the root of the problem.

Once you reach the root causes of the symptoms, you can begin developing solutions for the bigger problem.
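To make the table idea concrete, here is a minimal Python sketch that stores a drill-down table as a nested dictionary (problem, then contributing factors, then their causes, and so on) and walks it to list every path from the problem to a root cause. The example problem and causes are hypothetical, and `root_cause_paths` is an illustrative helper, not part of any library.

```python
from typing import Dict, Iterator, Tuple

# Each key is an entry in one column; its value holds the entries in the
# next column that explain it. An empty dict marks a root cause.
DrillDown = Dict[str, "DrillDown"]

table: DrillDown = {
    "Monthly churn rose": {                 # column 1: problem definition
        "More support tickets": {           # column 2: contributing factor
            "Slow page loads": {},          # column 3: cause of that factor
        },
        "Price increase": {},               # a factor that is itself a root cause
    }
}


def root_cause_paths(tree: DrillDown, path: Tuple[str, ...] = ()) -> Iterator[Tuple[str, ...]]:
    """Yield each chain from the problem down to a root cause."""
    for entry, deeper in tree.items():
        chain = path + (entry,)
        if deeper:
            # Keep drilling down into the next column.
            yield from root_cause_paths(deeper, chain)
        else:
            yield chain


for chain in root_cause_paths(table):
    print(" -> ".join(chain))
```

Each printed chain ends at a root cause, which is where solution development would start.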


4. The Cynefin Framework

The Cynefin framework, like the rest of the techniques, works by breaking down a problem into its root causes to reach an efficient solution. We consider the Cynefin framework a higher-level approach because it requires you to place your problem into one of five contexts.

- Obvious Contexts. In this context, your options are clear, and the cause-and-effect relationships are apparent and easy to point out.

- Complicated Contexts. In this context, the problem might have several correct solutions. In this case, a clear relationship between cause and effect may exist, but it’s not equally apparent to everyone.

- Complex Contexts. If it’s impossible to find a direct answer to your problem, then you’re looking at a complex context. Complex contexts are problems with unpredictable answers, and the best approach here is trial and error.

- Chaotic Contexts. In this context, there is no apparent relationship between cause and effect, and the main goal is to establish a correlation between the causes and effects.

- Disorder. The final context is disorder, the most difficult of the contexts to categorize. The only way to diagnose disorder is to eliminate the other contexts and gather further information.


5. The 5 Whys Technique

Our final technique is the 5 Whys or, as I like to call it, the curious child approach. I think this is the most well-known and natural approach to problem solving.

This technique follows the simple approach of asking “why” five times — like a child would. First, you start with the main problem and ask why it occurred. Then you keep asking why until you reach the root cause of said problem. (Fair warning, you may need to ask more than five whys to find your answer.)
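The "keep asking why" loop can be sketched in Python as following a chain of answers until no deeper cause is known. This is a minimal illustration, assuming the answers have already been collected into a lookup; `ask_whys` and the example answers are hypothetical. The default cap is ten rather than five, reflecting the warning that five is a rule of thumb.

```python
from typing import Callable, List, Optional


def ask_whys(problem: str, why: Callable[[str], Optional[str]], max_whys: int = 10) -> List[str]:
    """Follow 'why?' answers from the problem until no deeper cause is known."""
    chain = [problem]
    for _ in range(max_whys):
        cause = why(chain[-1])
        if cause is None:
            # No further answer: treat the last entry as the root cause.
            break
        chain.append(cause)
    return chain


# Hypothetical answers gathered by asking "why?" at each step.
answers = {
    "Dashboard shows stale numbers": "Nightly ETL job failed",
    "Nightly ETL job failed": "Database disk was full",
    "Database disk was full": "Old logs were never rotated",
}

chain = ask_whys("Dashboard shows stale numbers", answers.get)
print(chain[-1])  # Old logs were never rotated
```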


5 Steps on How to Approach a New Data Science Problem

Many companies struggle to reorganize their decision making around data and implement a coherent data strategy. The problem certainly isn’t lack of data but inability to transform it into actionable insights. Here's how to do it right.


Introduction

Data has become the new gold. 85 percent of companies are trying to be data-driven, according to last year’s survey by NewVantage Partners, and the global data science platform market is expected to reach $128.21 billion by 2022, up from $19.75 billion in 2016.

Clearly, data science is not just another buzzword with limited real-world use cases. Yet, many companies struggle to reorganize their decision making around data and implement a coherent data strategy. The problem certainly isn’t lack of data.

In the past few years alone, 90 percent of all of the world’s data has been created, and our current daily data output has reached 2.5 quintillion bytes, a number so mind-bogglingly large that it’s difficult to fully appreciate the breakneck pace at which we generate new data.

The real problem is the inability of companies to transform the data they have at their disposal into actionable insights that can be used to make better business decisions, stop threats, and mitigate risks.

In fact, there’s often too much data available to make a clear decision, which is why it’s crucial for companies to know how to approach a new data science problem and understand what types of questions data science can answer.

What types of questions can data science answer?

“Data science and statistics are not magic. They won’t magically fix all of a company’s problems. However, they are useful tools to help companies make more accurate decisions and automate repetitive work and choices that teams need to make,” writes Seattle Data Guy, a data-driven consulting agency.

The questions that can be answered with the help of data science fall under the following categories:

- Identifying themes in large data sets: Which server in my server farm needs maintenance the most?

- Identifying anomalies in large data sets: Is this combination of purchases different from what this customer has ordered in the past?

- Predicting the likelihood of something happening: How likely is this user to click on my video?

- Showing how things are connected to one another: What is the topic of this online article?

- Categorizing individual data points: Is this an image of a cat or a mouse?

Of course, this is by no means a complete list of all the questions data science can answer. Even if it were, data science is evolving at such a rapid pace that the list would most likely be outdated within a year or two of its publication.

Now that we’ve established the types of questions that can be reasonably expected to be answered with the help of data science, it’s time to lay down the steps most data scientists would take when approaching a new data science problem.

Step 1: Define the problem

First, it’s necessary to accurately define the data problem to be solved. The problem should be clear, concise, and measurable. Many companies are too vague when defining data problems, which makes it difficult or even impossible for data scientists to translate them into concrete technical tasks.

Here are some basic characteristics of a well-defined data problem:

- The solution to the problem is likely to have enough positive impact to justify the effort.

- Enough data is available in a usable format.

- Stakeholders are interested in applying data science to solve the problem.

Step 2: Decide on an approach

There are many data science algorithms that can be applied to data, and they can be roughly grouped into the following families:

- Two-class classification: useful for any question that has just two possible answers.

- Multi-class classification: answers a question that has multiple possible answers.

- Anomaly detection: identifies data points that are not normal.

- Regression: gives a real-valued answer and is useful when looking for a number instead of a class or category.

- Multi-class classification as regression: useful for questions that occur as rankings or comparisons.

- Two-class classification as regression: useful for binary classification problems that can also be reformulated as regression.

- Clustering: answers questions about how data is organized by seeking to separate a data set into intuitive chunks.

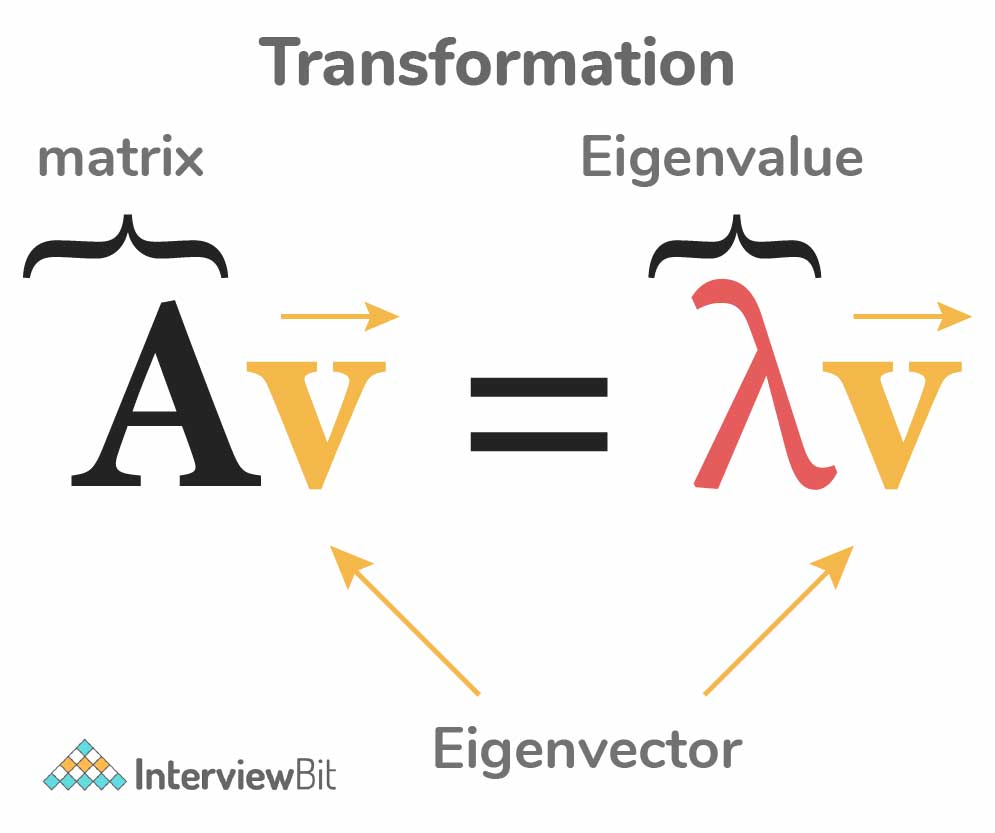

- Dimensionality reduction: reduces the number of random variables under consideration by obtaining a set of principal variables.

- Reinforcement learning algorithms: focus on taking action in an environment so as to maximize some notion of cumulative reward.
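As one concrete instance of the anomaly detection family, the sketch below flags values whose z-score (distance from the mean, measured in standard deviations) exceeds a threshold. It uses only Python’s standard library; `zscore_anomalies`, the readings, and the threshold are illustrative assumptions, and z-scores are just one of many anomaly detection techniques.

```python
from statistics import mean, stdev
from typing import List, Sequence


def zscore_anomalies(values: Sequence[float], threshold: float = 3.0) -> List[int]:
    """Return indices of values more than `threshold` standard deviations from the mean."""
    mu = mean(values)
    sigma = stdev(values)  # sample standard deviation; needs at least two values
    if sigma == 0:
        return []  # all values identical: nothing stands out
    return [i for i, v in enumerate(values) if abs(v - mu) / sigma > threshold]


# Hypothetical daily server response times (ms); the last one looks suspicious.
readings = [10, 11, 9, 10, 12, 10, 11, 9, 10, 95]
print(zscore_anomalies(readings, threshold=2.5))  # [9]
```

With only ten points, the outlier itself inflates the standard deviation, so no z-score here can exceed (n − 1)/√n ≈ 2.85; that is why the example lowers the threshold. Median-based variants are often preferred for small samples.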

Step 3: Collect data

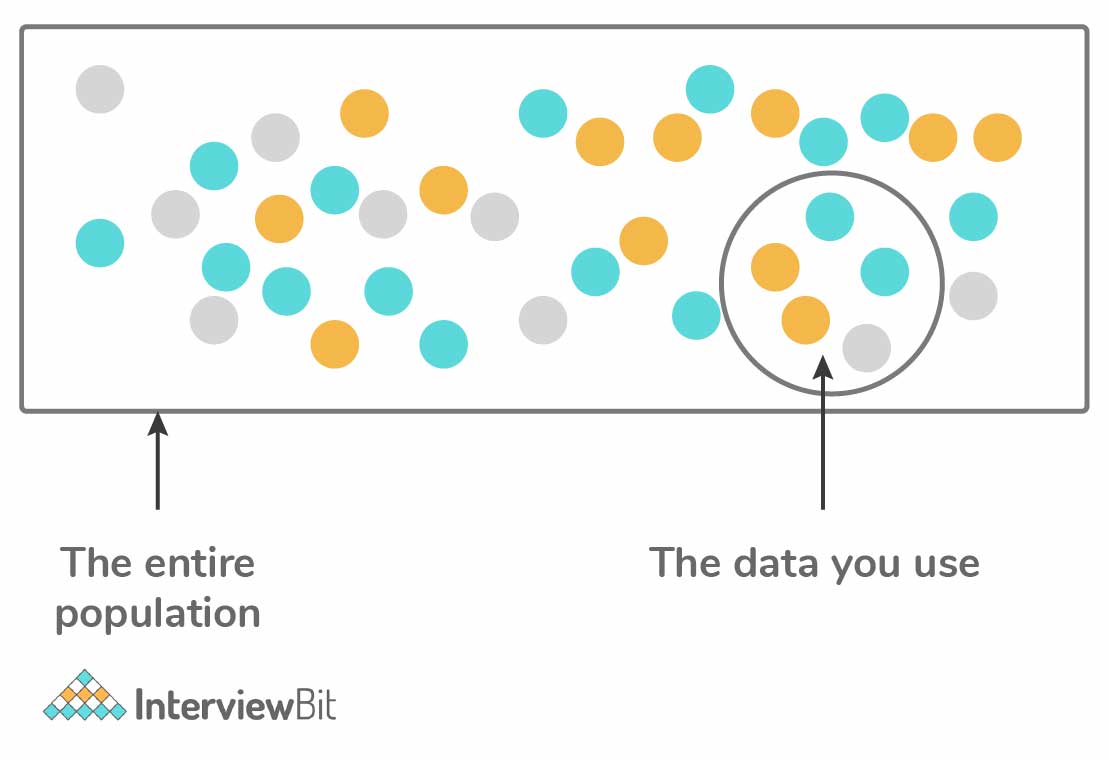

With the problem clearly defined and a suitable approach selected, it’s time to collect data. All collected data should be organized in a log along with collection dates and other helpful metadata.

It’s important to understand that collected data is seldom ready for analysis right away. Most data scientists spend much of their time on data cleaning, which includes removing missing values, identifying duplicate records, and correcting incorrect values.
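The three cleaning tasks just mentioned can be illustrated on a tiny list of records. This is a minimal sketch in plain Python; `clean_records`, the field names, and especially the rule that negative amounts are sign errors are hypothetical assumptions, since what counts as an "incorrect value" always depends on the domain.

```python
from typing import Dict, List, Optional

Record = Dict[str, Optional[float]]


def clean_records(records: List[Record], required=("id", "amount")) -> List[Record]:
    """Drop incomplete rows, skip duplicate ids, and fix one known kind of bad value."""
    seen_ids = set()
    cleaned: List[Record] = []
    for rec in records:
        # 1. Missing values: drop rows lacking any required field.
        if any(rec.get(field) is None for field in required):
            continue
        # 2. Duplicates: keep only the first record for each id.
        if rec["id"] in seen_ids:
            continue
        seen_ids.add(rec["id"])
        # 3. Incorrect values: hypothetical rule that negative amounts are sign errors.
        fixed = dict(rec)
        if fixed["amount"] < 0:
            fixed["amount"] = -fixed["amount"]
        cleaned.append(fixed)
    return cleaned


raw = [
    {"id": 1, "amount": 10.0},
    {"id": 2, "amount": None},   # missing value
    {"id": 1, "amount": 10.0},   # duplicate
    {"id": 3, "amount": -5.0},   # sign error
]
print(clean_records(raw))  # [{'id': 1, 'amount': 10.0}, {'id': 3, 'amount': 5.0}]
```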

Step 4: Analyze data

The next step after data collection and cleanup is data analysis. At this stage, there’s some chance that the selected data science approach won’t work. This is to be expected and accounted for. Generally, it’s recommended to start with the basic machine learning approaches, as they have fewer parameters to tune.

There are many excellent open source data science libraries that can be used to analyze data. Most data science tools are written in Python, Java, or C++.

<blockquote><p>“Tempting as these cool toys are, for most applications the smart initial choice will be to pick a much simpler model, for example using scikit-learn and modeling techniques like simple logistic regression,” – advises Francine Bennett, the CEO and co-founder of Mastodon C.</p></blockquote>
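In the spirit of that advice, the sketch below fits a one-feature logistic regression with plain batch gradient descent. It is a from-scratch stand-in written so it runs with the standard library alone; in practice you would more likely reach for scikit-learn’s `LogisticRegression`. The function names, data, learning rate, and epoch count are assumptions chosen for illustration.

```python
import math
from statistics import mean, pstdev
from typing import List, Tuple

Model = Tuple[float, float, float, float]  # (weight, bias, feature mean, feature std)


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def fit_logistic(xs: List[float], ys: List[int], lr: float = 0.5, epochs: int = 500) -> Model:
    """Minimise log-loss for p(y=1|x) = sigmoid(w * z + b), z being standardised x."""
    mu, sd = mean(xs), pstdev(xs) or 1.0
    zs = [(x - mu) / sd for x in xs]  # standardising keeps gradient descent well conditioned
    w = b = 0.0
    n = len(zs)
    for _ in range(epochs):
        grad_w = grad_b = 0.0
        for z, y in zip(zs, ys):
            err = sigmoid(w * z + b) - y  # derivative of log-loss w.r.t. the logit
            grad_w += err * z
            grad_b += err
        w -= lr * grad_w / n
        b -= lr * grad_b / n
    return w, b, mu, sd


def predict_proba(model: Model, x: float) -> float:
    w, b, mu, sd = model
    return sigmoid(w * (x - mu) / sd + b)


# Hypothetical toy data: small x values are class 0, large x values are class 1.
xs = [1, 2, 3, 4, 6, 7, 8, 9]
ys = [0, 0, 0, 0, 1, 1, 1, 1]
model = fit_logistic(xs, ys)
print(predict_proba(model, 2) < 0.5)  # True
```

A simple baseline like this is quick to inspect, which makes it easier to tell whether a more complex model later on is actually adding value.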

Step 5: Interpret results

After data analysis, it’s finally time to interpret the results. The most important thing to consider is whether the original problem has been solved. You might discover that your model works but produces subpar results. One way to deal with this is to add more data and keep retraining the model until you’re satisfied with it.

Most companies today are drowning in data. The global leaders are already using the data they generate to gain competitive advantage, and others are realizing that they must do the same or perish. While transforming an organization to become data-driven is no easy task, the reward is more than worth the effort.

The 5 steps on how to approach a new data science problem we’ve described in this article are meant to illustrate the general problem-solving mindset companies must adopt to successfully face the challenges of our current data-centric era.


Our promise

Every year, Brainhub helps 750,000+ founders, leaders and software engineers make smart tech decisions. We earn that trust by openly sharing our insights based on practical software engineering experience.

A serial entrepreneur, passionate R&D engineer, with 15 years of experience in the tech industry. Shares his expert knowledge about tech, startups, business development, and market analysis.


Navigating the Data Science Learning Curve: 6 Essential Tips for Beginners

Ready to dive into data science? Follow these six tips, take action, and unleash your potential in the field

A few weeks ago, I found myself in a conversation that led to an interesting question. It went something like this:

Say, Hans, you’re actually quite active in the field of data science. But when you were studying, data science hadn’t even been invented yet. Still, over the past few years, you’ve gained knowledge and skills in data analytics and data science. So, you must know a thing or two about it. That’s why I have a question for you. What would you recommend if someone wants to learn data science themselves and they have to start from scratch?

Whew, I didn’t have an immediate answer to this question. But it did make me think. What would my advice be? It wasn’t a complex question, but one that triggered many different thoughts and ideas in my mind. How do you go about “learning data science from scratch?” As I pondered, I came up with a list of six tips. And I’m happy to share those tips with you here.

Tip 1: Don’t start with programming, opt for a low-code solution

It might look super cool, typing all sorts of complex Python statements in dark mode, but that’s not what makes me happy. My preference lies with a low-code solution like KNIME. With the help of KNIME, I’ve been able to accelerate my data science career tremendously. In KNIME, the process is central, not the code. And that comes with several advantages. A KNIME canvas is organized, allowing you to zoom in and out. You can encapsulate nodes into so-called metanodes, and with annotations, the (data science) process you execute in your workflow is supported and made understandable, turning it into a story that you can share and communicate.

Especially if you’re new to data science and don’t have a background or training in programming, it’s important to focus on the problem you want to solve or the insight you want to gain. How do you translate the business problem into a data science problem? That, to me, is the challenge in data science. As a data scientist, you need to be able to focus on the choices you need to make to arrive at a good solution. Which algorithm fits best, which records do I include, which variables should be considered, what metrics do I use to assess the quality of my solution? Those kinds of things. And as a beginner, you don’t want to get stuck every time because you misplaced a comma in your code or forgot a parenthesis, etc.

An additional advantage of KNIME is that during node configuration, all options are presented. Many choices are configurable but also have a default value. This allows you to configure each node thoughtfully or simply see what happens with the default values.

The added value of a data scientist doesn’t lie in writing good code (that’s what an LLM will do for you later) but in conceptualizing, implementing, and making choices to arrive at a process that turns input data into valuable output data. To have a good plan and make the right choices, you do need some knowledge. So how do you acquire that knowledge?

Tip 2: Get started, just do it

You can read books, watch YouTube videos, browse blogs, take an online course, but if you only consume that content, your skills won’t improve, and your knowledge will only increase to a limited extent. To truly make progress in data science, it’s best to just start and build up knowledge around the data science activities you are doing. Get to work.

Suppose you want to learn how to create a predictive model, and you realize that you need to split your dataset into training and testing (and validation) sets. Dive into this topic and try to figure out the best way to split your dataset for your specific use case. Once you’ve set up partitioning to your satisfaction, move on to the next step in the process. You don’t need to know all the options, but it’s important to understand what you’re doing (and why). Build your workflow or code in small and manageable steps. Try to create a minimum viable product with as few nodes or lines as possible.
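For readers working in code rather than KNIME, a basic random three-way split can be sketched with Python’s standard library. This is a minimal illustration, not the author’s workflow; `train_val_test_split` and its 70/15/15 default proportions are assumptions. A fixed seed makes the split reproducible.

```python
import random
from typing import List, Sequence, Tuple, TypeVar

T = TypeVar("T")


def train_val_test_split(
    rows: Sequence[T], val_frac: float = 0.15, test_frac: float = 0.15, seed: int = 42
) -> Tuple[List[T], List[T], List[T]]:
    """Shuffle once with a fixed seed, then cut into train/validation/test partitions."""
    shuffled = list(rows)
    random.Random(seed).shuffle(shuffled)  # a local RNG keeps the split reproducible
    n_test = round(len(shuffled) * test_frac)
    n_val = round(len(shuffled) * val_frac)
    test = shuffled[:n_test]
    val = shuffled[n_test:n_test + n_val]
    train = shuffled[n_test + n_val:]
    return train, val, test


train, val, test = train_val_test_split(range(100))
print(len(train), len(val), len(test))  # 70 15 15
```

A random split assumes the rows are independent. For time series, or data with several rows per customer, splitting by time or by group is usually the better choice for your specific use case.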

It’s clear that I choose KNIME as my environment for data science and analytics projects. But that choice is based on personal preference, and it is not the key to successfully learning data science. Regardless of the approach chosen, the most important factor is consistent practice and hands-on experience in solving real-world data science problems. And yes, in my opinion, KNIME facilitates that best.

Tip 3: Define a real-world use case with a familiar dataset

Overall, hands-on practice with real-world projects is a fundamental step in the learning journey of data science from scratch. It provides you with practical experience, fosters critical thinking and problem-solving skills, and builds a strong foundation for further exploration and growth in the field of analytics and data science.

If you want to gradually master data science skills, the choice of topic, use case, and datasets is important. It’s better to choose a use case and dataset you’re familiar with than standard datasets (like the Iris dataset) often seen in tutorials. If you don’t have a dataset at hand, check out the Kaggle Open Datasets.

Working on a topic you’re familiar with and a real dataset associated with it helps evaluate the outcomes of your steps accurately. For instance, if your predictive model for football match outcomes predicts a draw in 80% of the matches, you, as a football expert, know this is incorrect (on average, 25% of matches end in a draw). That means going back to the drawing board. Or if you encounter outliers, such as a team scoring more than 15 goals in a match, you can use your domain knowledge of football to decide if this might be incorrectly entered data or a valid value. Therefore, it’s recommended to work with a real dataset because these datasets confront you with deviations and noise that require attention. On the other hand, the advantage of working with “pre-existing datasets” like the wine dataset, the Iris dataset, or the Boston housing dataset is that they yield consistent results and sometimes seem too good to be true. You can use them effectively to get your workflow “working.” However, you’re not challenged to think about the outcomes.
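The two domain-knowledge checks in the football example can also be written down as code, so they run every time the model or data changes. This is a minimal sketch: the 25% draw rate and the 15-goal limit come from the text above, while the function names, the `"D"` label for a draw, and the 10-percentage-point tolerance are hypothetical assumptions.

```python
from typing import Dict, List, Sequence, Tuple

DRAW_RATE_BASELINE = 0.25  # roughly 25% of matches end in a draw
MAX_PLAUSIBLE_GOALS = 15   # a team scoring more than this deserves a second look


def draw_rate_check(predictions: Sequence[str], tolerance: float = 0.10) -> Tuple[bool, float]:
    """Compare the predicted share of draws ('D') with the domain baseline."""
    rate = sum(p == "D" for p in predictions) / len(predictions)
    return abs(rate - DRAW_RATE_BASELINE) <= tolerance, rate


def suspicious_matches(matches: List[Dict[str, int]]) -> List[Dict[str, int]]:
    """Flag matches where either team scored more than the plausible maximum."""
    return [m for m in matches if max(m["home_goals"], m["away_goals"]) > MAX_PLAUSIBLE_GOALS]


# Hypothetical model output: draws predicted for 8 of 10 matches.
ok, rate = draw_rate_check(["D"] * 8 + ["H", "A"])
print(ok, rate)  # False 0.8

matches = [{"home_goals": 2, "away_goals": 1}, {"home_goals": 16, "away_goals": 0}]
print(suspicious_matches(matches))  # [{'home_goals': 16, 'away_goals': 0}]
```

The code only flags results for review; deciding whether a 16-0 score is a data-entry error or a valid value still takes domain knowledge.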

But problem-solving skills are also part of data science. Therefore, you have to approach problems analytically, question assumptions, and think creatively to find innovative solutions, which in turn sharpens your critical thinking and decision-making abilities.

Tip 4: Take small, manageable steps

A data science use case like creating a predictive model can be accomplished with a limited number of nodes (see figure).

You probably won’t have the best model right away, but you’ll have a workflow that you can improve by adding functionalities (KNIME nodes) simply and step by step. Pause with each node addition to consider how to best configure it. Do I accept the default settings, or do I investigate the effect of deviating from the standard settings? Expanding the workflow provides opportunities to seek information by reading a blog on the topic, following a YouTube tutorial, or maybe taking a short training session, all specifically focused on the subject you’re currently working on in your workflow and want to learn more about. Reflection allows you to assess your growth, identify areas for improvement, and track your journey in mastering data science.

Tip 5: When stuck, don’t panic

One of the beautiful aspects of working on a data science use case is that it’s not a straight line to the finish. I often feel there’s always room for improvement or doing things differently. This means lots of testing and experimenting to arrive at a good, acceptable solution. However, reaching that good solution often involves overcoming various obstacles. It’s good to know that help is always nearby. If you search smartly on the internet, someone has likely found a solution to the problem you’re facing. And if you get stuck in KNIME, there’s the KNIME Forum, KNIME videos, and the KNIME Learning Centre.

But perhaps most importantly, don’t give up; keep trying. It won’t always be easy. It’s an illusion to become a full-fledged data scientist in a week. Learning new things happens in steps, and it’s faster when you combine practice with theory. But do it in moderation. It’s better to spend one hour a day for 8 days learning something new than trying to do it all in one day for 8 hours.

Tip 6: Stay motivated and curious, keep on learning

Becoming more proficient in data science doesn’t happen overnight. It takes time. Additionally, data science is more than just programming. It requires knowledge of methods and techniques, as well as the domain in which the data science use case operates.

Seek collaboration and networking within the data science community. Participate in forums, attend meetups, and connect with peers and professionals in the field. Collaborating with others can provide you with valuable insights, feedback, and opportunities for growth.

Your learning journey never ends. Try to stay updated with the latest trends, tools, and technologies in data science. Explore new areas, take advanced courses, and participate in workshops or conferences to expand your knowledge and expertise.

I will never tell you that it is easy to learn data science from scratch. Sometimes it’s easy, sometimes you get stuck. And occasionally your project will fail completely. Therefore, embrace failure as part of the process and let it fuel your motivation to keep learning and growing.

Starting your journey to learn data science from scratch? Here are six tips to guide you along the way.

1. Start with a low-code solution like KNIME to ease into the field without getting bogged down in programming syntax.

2. Dive into hands-on projects, applying your knowledge to real-world datasets and problems.

3. Choose familiar use cases and datasets to better understand the outcomes of your analysis and hone your problem-solving skills.

4. Take small, manageable steps, building on your skills iteratively as you progress.

5. Don’t panic when you encounter obstacles; seek help, stay persistent, and keep trying.

6. Embrace failure, stay motivated, curious, and committed to lifelong learning, continuously expanding your knowledge and staying updated with the latest trends and technologies in data science.

Ready to dive into data science? Follow these six tips, take action, and unleash your potential in the field. Your data science adventure awaits!

This blog post was inspired by my contribution to a KNIME webinar, “How to teach yourself data science from scratch”.


Key skills for aspiring data scientists: Problem solving and the scientific method

This blog is part two of our ‘Data science skills’ series, which takes a detailed look at the skills aspiring data scientists need to ace interviews, get exciting projects, and progress in the industry. You can find the other blogs in our series under the ‘Data science career skills’ tag.

One of the things that attracts a lot of aspiring data scientists to the field is a love of problem solving, more specifically problem solving using the scientific method. The scientific method has been around for hundreds of years, but the vast volume of data available today offers new and exciting ways to test all manner of different hypotheses; it is called data science, after all.

If you’re a PhD student, you’ll probably be fairly used to using the scientific method in an academic context, but problem solving means something slightly different in a commercial context. To succeed, you’ll need to learn how to solve problems quickly, effectively and within the constraints of your organisation’s structure, resources and time frames.

Why is problem solving essential for data scientists?

Problem solving is involved in nearly every aspect of a typical data science project from start to finish. Indeed, almost all data science projects can be thought of as one long problem solving exercise.

To make this clear, let’s consider the following case study: you have been asked to help optimise a company’s direct marketing, which consists of weekly catalogues.

Defining the right question

The first aim of most data science projects is to properly specify the question or problem you wish to tackle. This might sound trivial, but it can often be one of the most challenging parts of any project, and how successful you are at this stage can come to define how successful you are by the finish.

In an academic context, your problem is usually very clearly defined. But as a data scientist in industry it’s rare for your colleagues or your customer to know exactly which problem they’re trying to solve.

In this example, you have been asked to “optimise a company’s direct marketing”. There are numerous translations of this problem statement into the language of data science. You could create a model which helps you contact customers who would get the biggest uplift in purchase propensity or spend from receiving direct marketing. Or you could simply work out which customers are most likely to buy and focus on contacting them.
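The difference between the two framings can be shown with a few made-up numbers. In the sketch below, each customer has two hypothetical model estimates: the probability of buying if mailed and the probability of buying if not mailed. Ranking by propensity favours customers who would buy anyway, while ranking by uplift favours those whose behaviour the mailing actually changes. The customer segments and probabilities are illustrative assumptions, not data.

```python
from typing import Dict, List, Tuple

# Hypothetical estimates per customer: (P(buy | mailed), P(buy | not mailed)).
customers: Dict[str, Tuple[float, float]] = {
    "loyal": (0.90, 0.85),        # buys anyway; mailing adds little
    "persuadable": (0.40, 0.10),  # mailing makes a real difference
    "lost_cause": (0.05, 0.04),   # unlikely to buy either way
}


def rank_by_propensity(c: Dict[str, Tuple[float, float]]) -> List[str]:
    """Second framing: contact whoever is most likely to buy."""
    return sorted(c, key=lambda k: c[k][0], reverse=True)


def rank_by_uplift(c: Dict[str, Tuple[float, float]]) -> List[str]:
    """First framing: contact whoever the mailing changes the most."""
    return sorted(c, key=lambda k: c[k][0] - c[k][1], reverse=True)


print(rank_by_propensity(customers))  # ['loyal', 'persuadable', 'lost_cause']
print(rank_by_uplift(customers))      # ['persuadable', 'loyal', 'lost_cause']
```

Estimating the "not mailed" probability requires data on people who were not contacted, which is why the feasibility of the uplift framing depends on what the company has done so far.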

While most marketers and data scientists would agree that the first approach is better in theory, whether or not you can answer this question through data depends on what the company has been doing up to this point. A robust analysis of the company’s data and previous strategy is therefore required, even before deciding on which specific problem to focus on.

This example makes clear the importance of properly defining your question up front; both options here would lead you on very different trajectories and it is therefore crucial that you start off on the right one. As a data scientist, it will be your job to help turn an often vague direction from a customer or colleague into a firm strategy.

Formulating and evaluating hypotheses

Once you’ve decided on the question that will deliver the best results for your company or your customer, the next step is to formulate hypotheses to test. These can come from many places, whether it be the data, business experts, or your own intuition.

Suppose in this example you’ve had to settle for finding customers who are most likely to buy. Clearly you’ll want to ensure that your new process is better than the company’s old one; indeed, if you’re making better data-driven decisions than the company’s previous process, you would expect this to be the case.

There is a challenge here, though: you can’t directly test the effect of changing historical mailing decisions, because those decisions have already been made. However, you can test it indirectly by looking at the people who were mailed, and then at who bought something and who didn’t. If your new process is superior to the previous one, it should suggest mailing most of the people in the first group, since people missed here could indicate potential lost revenue. It should also omit most of the people in the latter group, since mailing them is definitely wasted marketing spend.

While these metrics don’t prove that your new process is better, they do provide some evidence that you’re making improvements over what went before.
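The two indirect checks described above can be computed directly from historical records. This is a minimal sketch under stated assumptions: `historical_check`, the customer ids, and the example mailing rule are hypothetical, and the inputs are restricted to people who were actually mailed in the past.

```python
from typing import Callable, Dict, Iterable, Tuple


def historical_check(
    mailed: Iterable[str],
    bought: Dict[str, bool],
    would_mail: Callable[[str], bool],
) -> Tuple[float, float]:
    """Score a new mailing rule against people who were mailed in the past.

    Returns (share of past buyers the new rule would still mail,
             share of past non-buyers the new rule would skip).
    """
    mailed = list(mailed)
    buyers = [c for c in mailed if bought[c]]
    non_buyers = [c for c in mailed if not bought[c]]
    buyer_coverage = sum(would_mail(c) for c in buyers) / len(buyers)
    non_buyer_skip = sum(not would_mail(c) for c in non_buyers) / len(non_buyers)
    return buyer_coverage, non_buyer_skip


# Hypothetical history: four mailed customers, two of whom bought.
history = {"a": True, "b": True, "c": False, "d": False}
new_rule = lambda customer: customer in {"a", "b", "c"}
print(historical_check(history.keys(), history, new_rule))  # (1.0, 0.5)
```

Both numbers should be high for a better process, but, as noted above, they are evidence rather than proof.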

This example is typical of applied data science projects: you often can’t test your model on historical data to the extent that you would like, so you have to use the data you have available as best you can to gather as much evidence as possible about the validity of your hypotheses.

Testing and drawing conclusions

The ultimate test of any data science algorithm is how it performs in the real world. Most data science projects will end by attempting to answer this question, as ultimately this is the only way that data science can truly deliver value to people.

In our example from above, this might look like comparing your algorithm against the company’s current process by running a randomised controlled trial (RCT), and comparing the response rates across the two groups. Of course, one would expect random variation, and being able to explain the significance (or lack thereof) of any deviations between the two groups would be essential to solving the company’s original problem.
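One standard way to judge whether the difference in response rates between the two arms of such a trial is more than random variation is a two-proportion z-test. The sketch below implements it with the standard library; the function name and the response counts are hypothetical, and the pooled-variance normal approximation assumes reasonably large groups.

```python
import math
from typing import Tuple


def two_proportion_z_test(success_a: int, n_a: int, success_b: int, n_b: int) -> Tuple[float, float]:
    """Return (z statistic, two-sided p-value) for H0: both groups share one response rate."""
    p_a = success_a / n_a
    p_b = success_b / n_b
    pooled = (success_a + success_b) / (n_a + n_b)  # response rate under H0
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_a - p_b) / se
    p_value = math.erfc(abs(z) / math.sqrt(2))  # equals 2 * (1 - Phi(|z|))
    return z, p_value


# Hypothetical trial: new algorithm vs current process, 1,000 customers each.
z, p = two_proportion_z_test(120, 1000, 80, 1000)
print(f"z = {z:.2f}, p = {p:.4f}")
```

A small p-value suggests the gap is unlikely to be chance alone; explaining what that does and does not mean for the business is the part that solves the original problem.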

How successfully you test and draw your final conclusions, as well as how well you take into account all the limitations of the evaluation, will ultimately decide how impactful the end result of the project is. When addressing a business problem there can be massive consequences to getting the answer wrong, so formulating this final test in a way that is scientifically robust but also helps address the original problem statement is paramount, and it is a skill that any data scientist needs to possess.

How to develop your problem solving skills

There are certainly ways you can develop your applied data science problem solving skills. The best advice, as so often is true in life, is to practice. Indeed, one of the reasons that so many employers look for data scientists with PhDs is because this demonstrates that the individual in question can solve hard problems.

Websites like Kaggle can be a great starting point for learning how to tackle data science problems, and winners of old competitions often write good posts about how they built their winning models. It’s also important to learn how to translate business problems into a clear data science problem statement. Data science problems found online have often solved this part for you, so try to focus on those that are vague and ill-defined; whilst it might be tempting to stick to those that are more concrete, real life is seldom as accommodating.

As the best way to develop your skills is to practice them, Faculty’s Fellowship programme can be a fantastic way to improve your problem solving skills. As the fellowship gives you an opportunity to tackle a real business problem for a real customer, and take the problem through from start to finish, there are not many better ways to develop, and prove, your skills in this area.



Data Science Process

If you work in a technical domain or are a student with a technical background, you have almost certainly heard of Data Science. It is one of the booming fields in today’s tech market, and this is likely to continue as the world becomes more and more digital. Data holds the capacity to shape the future. In this article, we will learn about Data Science and the process it involves.

What is Data Science?

Data can be extremely valuable if we know how to manipulate it to reveal hidden patterns. The logic behind the data, or the process behind this manipulation, is what is known as Data Science. The Data Science process runs from formulating the problem statement and collecting data to extracting the required results, and the professional who ensures that the whole process runs smoothly is known as a Data Scientist. There are other job roles in this domain as well, such as:

- Data Engineers

- Data Analysts

- Data Architects

- Machine Learning Engineers

- Deep Learning Engineers

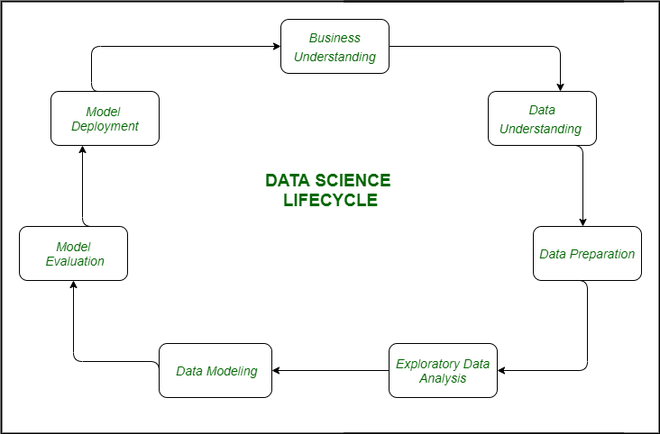

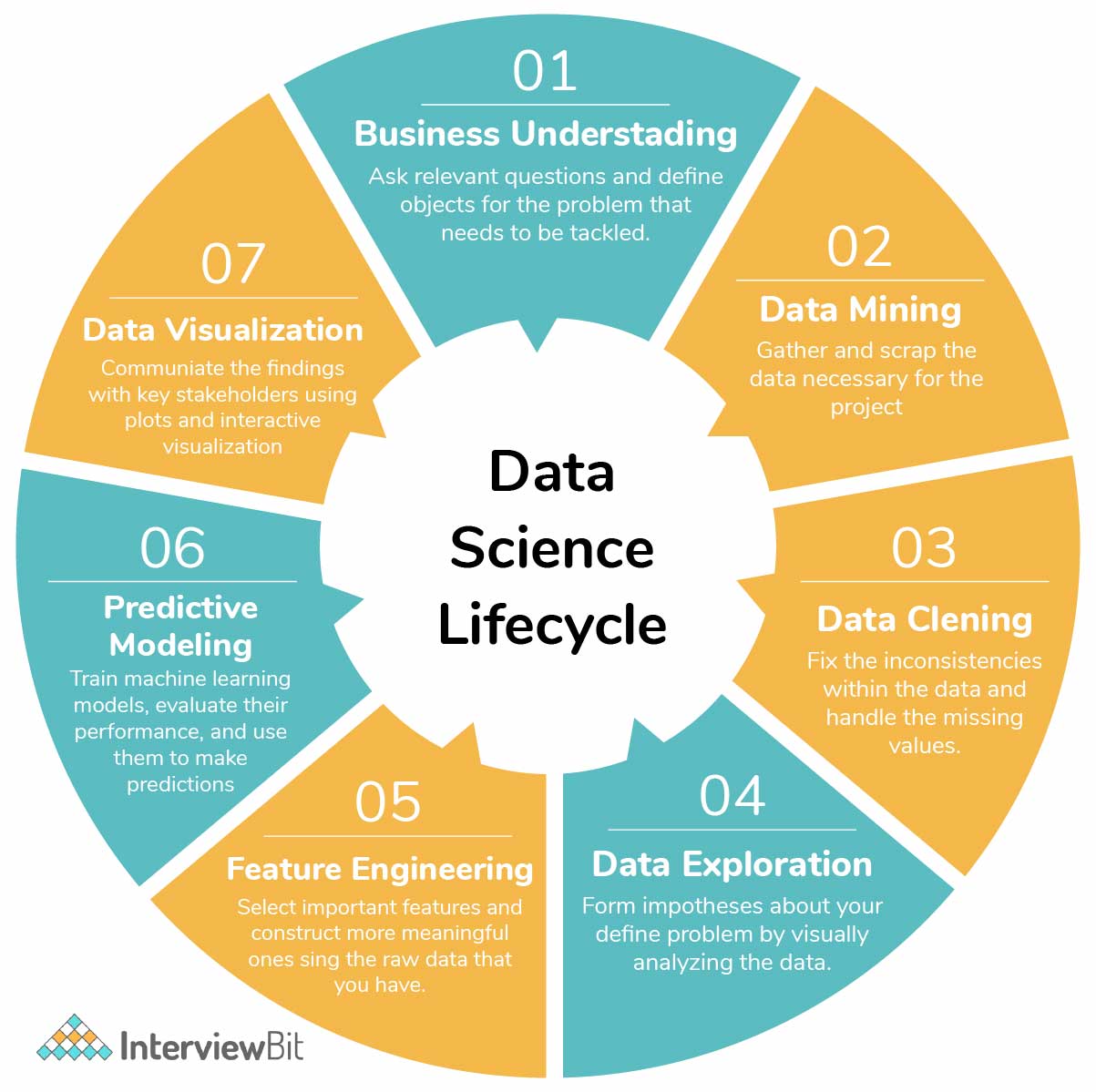

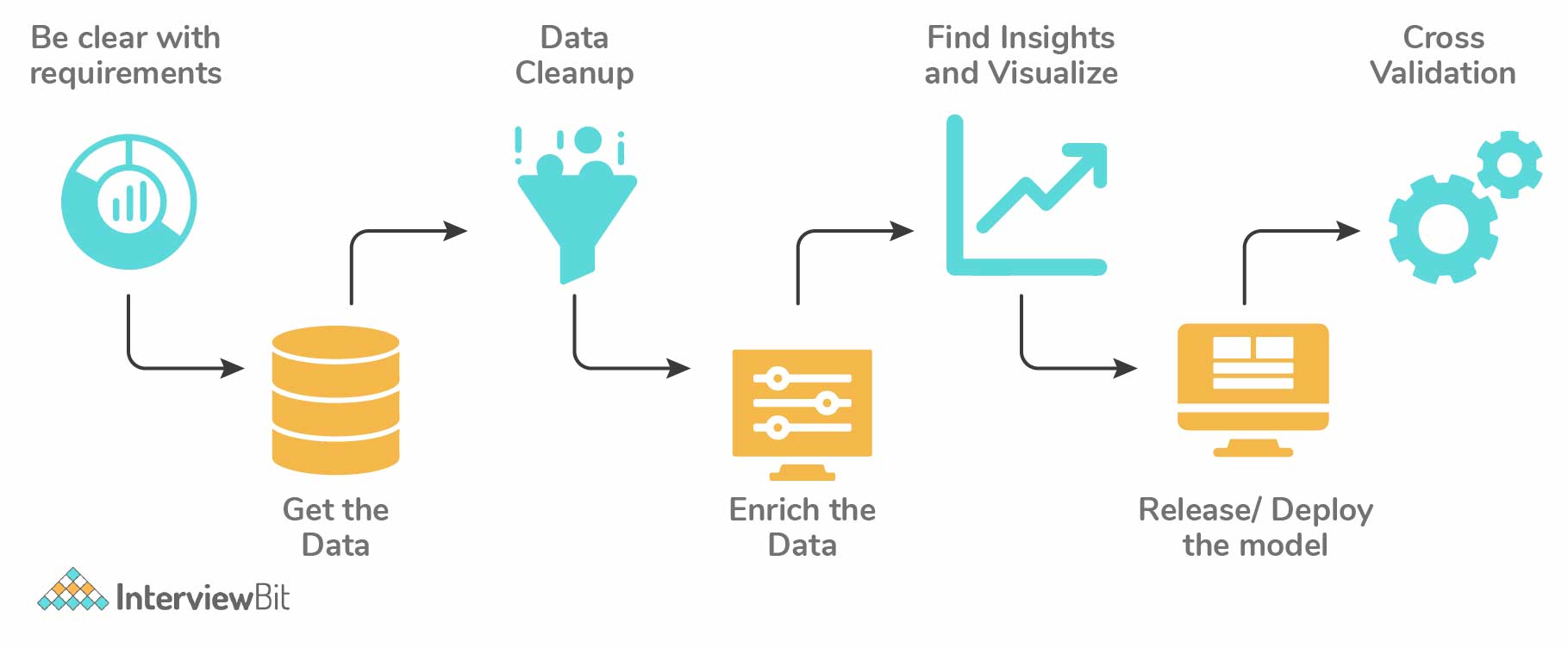

Data Science Process Life Cycle

Certain steps are necessary in any data science task if we want to derive useful results from the data at hand.

- Data Collection – After formulating the problem statement, the main task is to collect data that can help us in our analysis and manipulation. Sometimes data is collected by conducting a survey, and at other times by web scraping.

- Data Cleaning – Most real-world data is unstructured and needs to be cleaned and converted into a structured form before it can be used for any analysis or modeling.

- Exploratory Data Analysis – This is the step in which we try to find hidden patterns in the data at hand. We also analyze which factors affect the target variable and to what extent, how the independent features relate to each other, and what can be done to achieve the desired results. This step also gives us a direction for starting the modeling process.

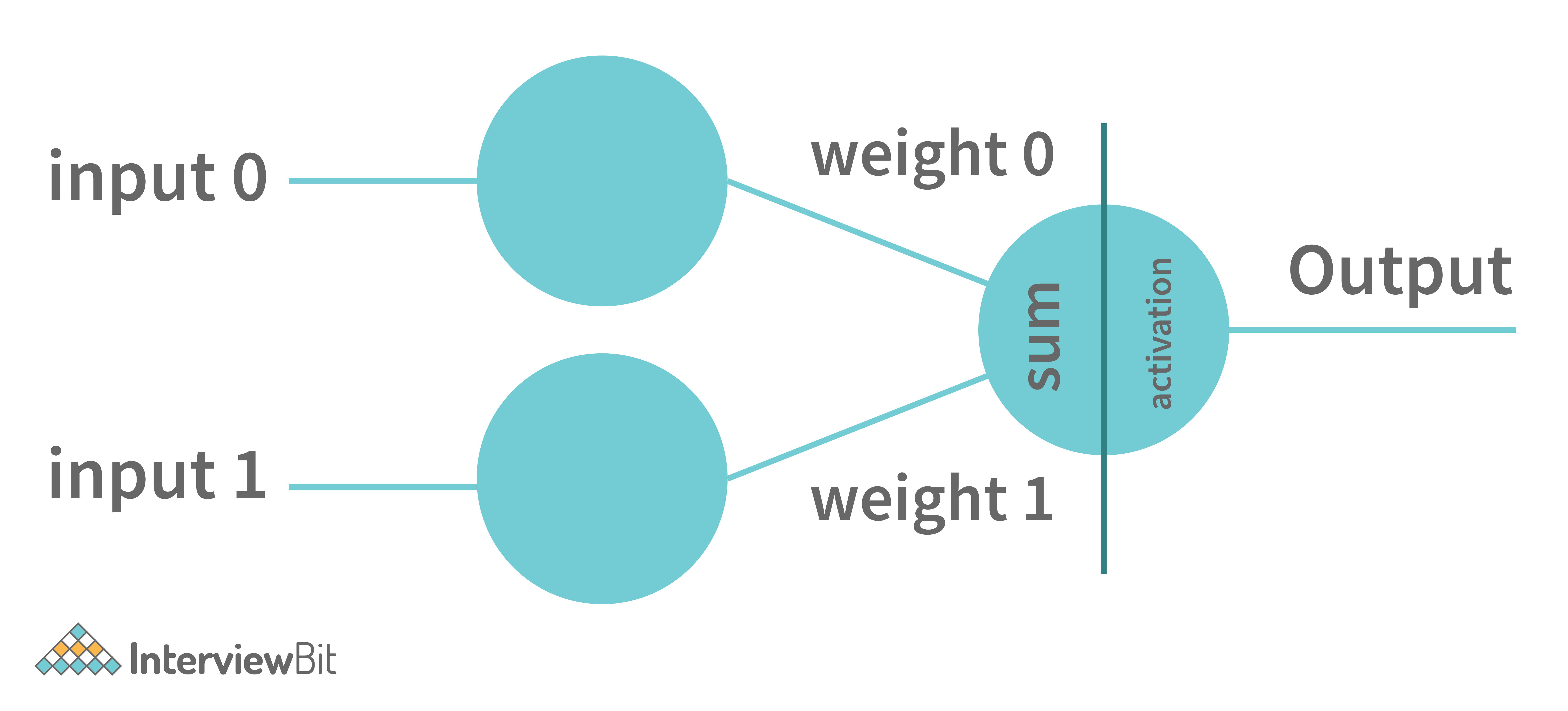

- Model Building – Many machine learning algorithms and techniques have been developed that can identify complex patterns in data, a task that would be very tedious for a human.

- Model Deployment – Once a model has been developed and performs well on a holdout or real-world dataset, we deploy it and monitor its performance. This is where what we have learned from the data is applied to real-world applications and use cases.

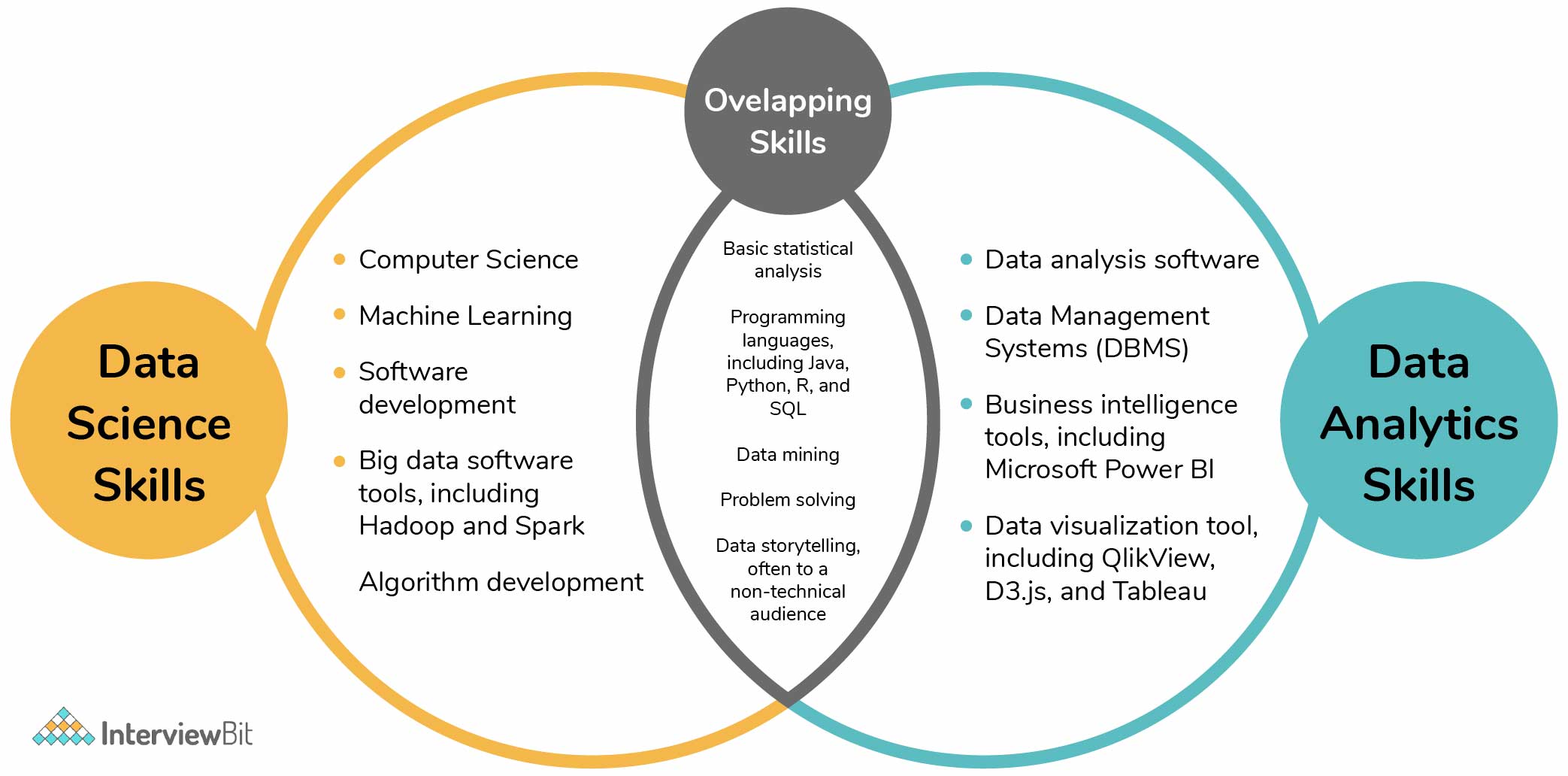

Components of Data Science Process

Data Science is a vast field, and to get the best out of the data at hand one has to apply multiple methodologies and use different tools, ensuring that the integrity of the data remains intact throughout the process while keeping data privacy in mind. Machine learning and data analysis are the parts where we focus on the results that can be extracted from the data. Data engineering, by contrast, is the part whose main task is to ensure that the data is managed properly and that proper data pipelines are built for smooth data flow. The main components of Data Science are:

- Data Analysis – Sometimes there is no need to apply deep learning or other complex methods to derive patterns from the data. So, before moving on to modeling, we first perform exploratory data analysis to get a basic idea of the data and the patterns in it. This gives us a direction to work in if we later want to apply more complex analysis methods.

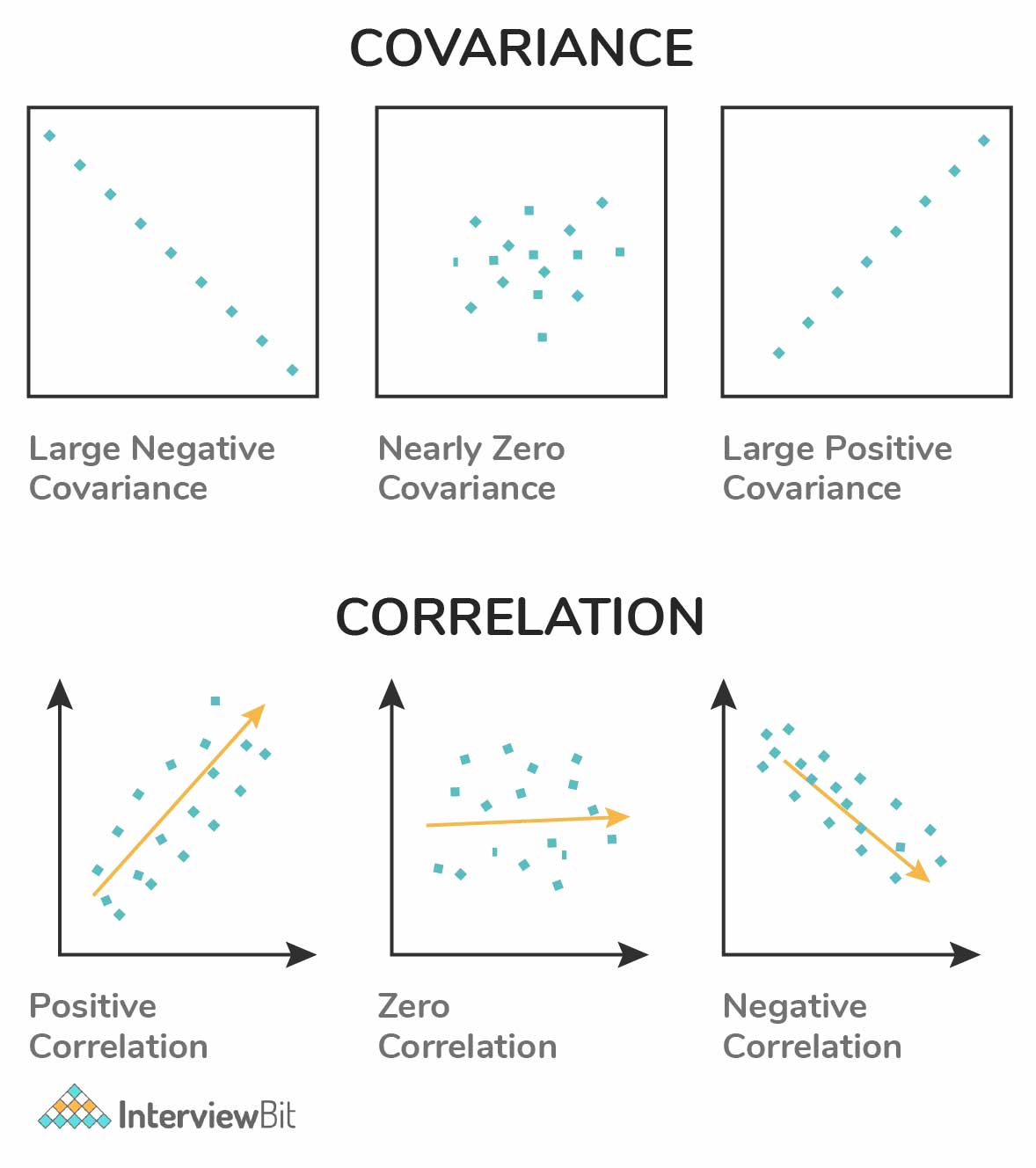

- Statistics – Many real-world quantities are approximately normally distributed, and when we know that a dataset follows a known distribution, many of its properties can be derived directly from that distribution. Descriptive statistics, along with the correlation and covariance between pairs of features, also help us understand how one factor in the dataset relates to another.

- Data Engineering – When we deal with large amounts of data, we have to make sure the data is kept safe from online threats and is also easy to retrieve and update. Data Engineers play a crucial role in ensuring that the data is used efficiently.

- Machine Learning – Machine Learning has opened new horizons, helping us build advanced applications and methodologies that make machines more efficient, provide a personalized experience to each individual, and perform in an instant tasks that earlier required heavy human labor and time.

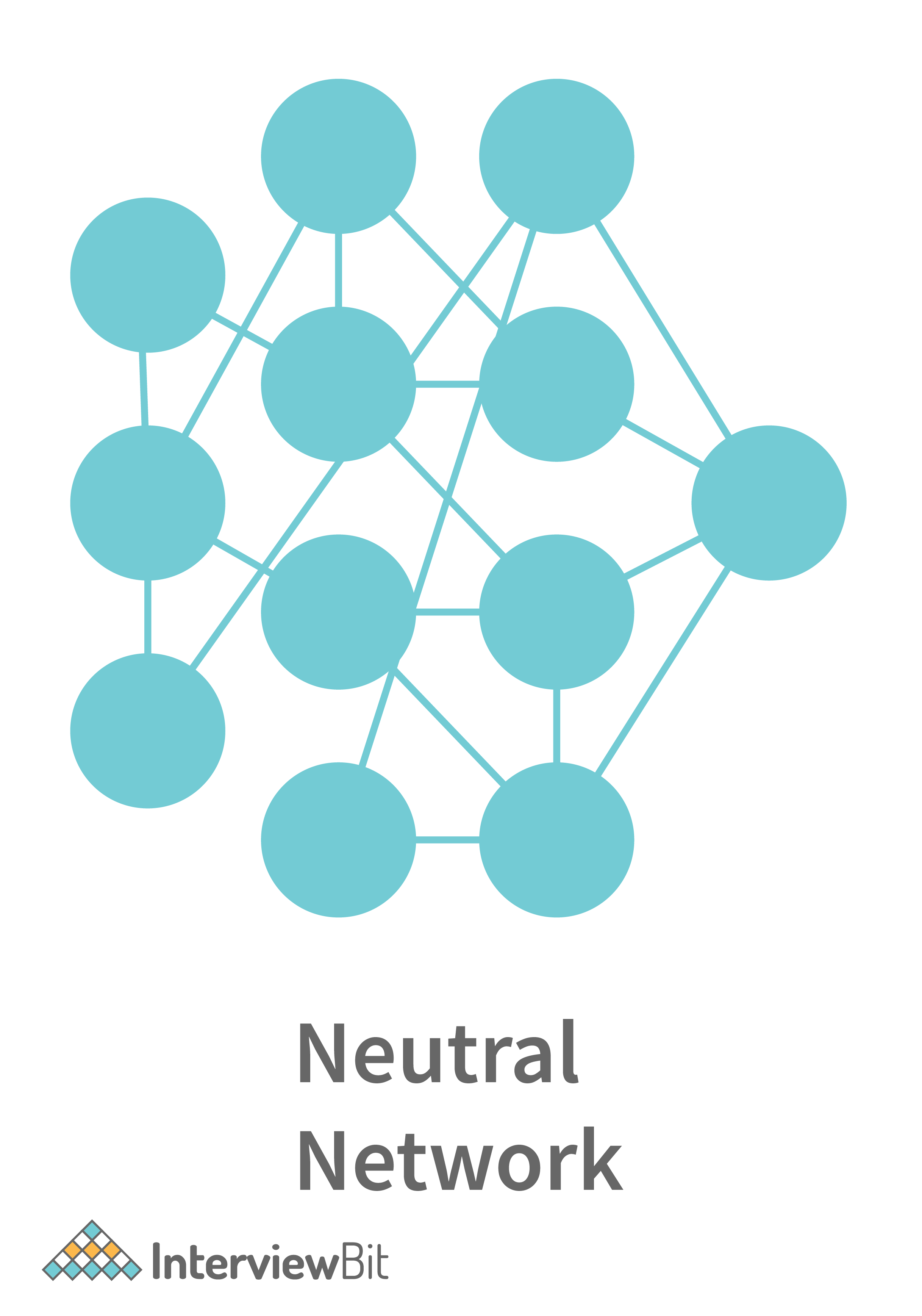

- Deep Learning – This is a subfield of machine learning, and therefore of Artificial Intelligence, that uses multi-layer neural networks and is more advanced than classical machine learning. High computing power and huge corpora of data have led to the emergence of this field in data science.

Knowledge and Skills for Data Science Professionals

As a Data Scientist, your work will span three skill domains:

- Statistical/mathematical reasoning

- Business communication/leadership

- Programming

1. Statistics: Wikipedia defines it as the study of the collection, analysis, interpretation, presentation, and organization of data. Therefore, it shouldn’t be a surprise that data scientists need to know statistics.

2. Programming Language R/Python: Python and R are among the languages most widely used by Data Scientists, mainly because of the number of packages available for numerical and scientific computing.

3. Data Extraction, Transformation, and Loading: Suppose we have multiple data sources such as a MySQL database, MongoDB, and Google Analytics. You have to extract data from such sources and then transform it into a proper format or structure for querying and analysis. Finally, you load the data into the data warehouse, where you will analyze it. So, for people with an ETL (Extract, Transform, and Load) background, Data Science can be a good career option.
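The ETL steps can be sketched in a few lines of Python. This is a toy illustration under stated assumptions: the two in-memory lists stand in for real sources such as MySQL or Google Analytics, the table and column names are hypothetical, and an in-memory SQLite database (via the standard `sqlite3` module) stands in for the data warehouse.

```python
import sqlite3

# Extract: two hypothetical sources with inconsistent formats
crm_rows = [{"customer_id": "1", "country": "uk"}, {"customer_id": "2", "country": "US"}]
web_rows = [("1", "2024-01-03", "19.99"), ("2", "2024-01-04", "5.00"), ("1", "2024-01-05", "7.50")]

# Transform: cast text fields to proper types and standardize country codes
customers = [(int(r["customer_id"]), r["country"].upper()) for r in crm_rows]
orders = [(int(cid), day, float(amount)) for cid, day, amount in web_rows]

# Load: write into warehouse tables (an in-memory SQLite database stands in here)
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE customers (customer_id INTEGER PRIMARY KEY, country TEXT)")
conn.execute("CREATE TABLE orders (customer_id INTEGER, order_date TEXT, amount REAL)")
conn.executemany("INSERT INTO customers VALUES (?, ?)", customers)
conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", orders)
conn.commit()

# The loaded data can now be queried for analysis: total order value per country
query = """
SELECT c.country, ROUND(SUM(o.amount), 2)
FROM orders o JOIN customers c ON o.customer_id = c.customer_id
GROUP BY c.country
ORDER BY c.country
"""
totals = conn.execute(query).fetchall()
print(totals)
```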

Steps for Data Science Processes:

Step 1: Defining research goals and creating a project charter

- Spend time understanding the goals and context of your research. Continue asking questions and devising examples until you grasp the exact business expectations, identify how your project fits into the bigger picture, appreciate how your research is going to change the business, and understand how the business will use your results.

Create a project charter

A project charter requires teamwork, and your input covers at least the following:

- A clear research goal

- The project mission and context

- How you’re going to perform your analysis

- What resources you expect to use

- Proof that it’s an achievable project, or a proof of concept

- Deliverables and a measure of success

Step 2: Retrieving Data

Start with data stored within the company

- Finding data even within your own company can sometimes be a challenge.

- This data can be stored in official data repositories such as databases, data marts, data warehouses, and data lakes maintained by a team of IT professionals.

- Getting access to the data may take time and involve company policies.

Step 3: Cleansing, integrating, and transforming data

- Data cleansing is a subprocess of the data science process that focuses on removing errors in your data so your data becomes a true and consistent representation of the processes it originates from.

- The first type of error is the interpretation error, such as taking a value in your data for granted, like accepting that a person’s age is greater than 300 years.

- The second type of error points to inconsistencies between data sources or against your company’s standardized values. An example of this class of errors is putting “Female” in one table and “F” in another when they represent the same thing: that the person is female.
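A minimal Python sketch of cleansing both error types described above. The records, the mapping table, and the 120-year age threshold are hypothetical choices for illustration.

```python
# Hypothetical raw records showing both error types
records = [
    {"name": "Ana", "age": 34, "sex": "Female"},
    {"name": "Ben", "age": 312, "sex": "M"},
    {"name": "Cleo", "age": 27, "sex": "F"},
]

# Map every spelling used across sources to one standardized value
SEX_CODES = {"female": "F", "f": "F", "male": "M", "m": "M"}
MAX_PLAUSIBLE_AGE = 120  # assumed sanity threshold


def cleanse(record):
    cleaned = dict(record)
    # Interpretation error: mark an implausible age as missing instead of trusting it
    if not 0 <= cleaned["age"] <= MAX_PLAUSIBLE_AGE:
        cleaned["age"] = None
    # Inconsistency: standardize "Female"/"F" (and "Male"/"M") to a single code
    cleaned["sex"] = SEX_CODES[cleaned["sex"].strip().lower()]
    return cleaned


clean = [cleanse(r) for r in records]
print(clean)
```

Marking the implausible age as missing, rather than guessing a corrected value, leaves the decision about how to fill it to a later imputation step.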

Integrating:

- Combining data from different data sources.

- Your data comes from several different places, and in this substep we focus on integrating these sources.

- You can perform two operations to combine information from different data sets. The first operation is joining and the second operation is appending or stacking.

Joining Tables:

- Joining tables allows you to combine the information of one observation found in one table with the information that you find in another table.

Appending Tables:

- Appending or stacking tables is effectively adding observations from one table to another table.
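Joining and appending can be illustrated with plain Python lists of dictionaries. The tables and column names are hypothetical, and the join assumes each `id` appears at most once in the orders table; libraries such as pandas offer the same operations for real tables.

```python
# Hypothetical tables represented as lists of dicts
customers_2023 = [{"id": 1, "name": "Ana"}, {"id": 2, "name": "Ben"}]
customers_2024 = [{"id": 3, "name": "Cleo"}]
orders = [{"id": 1, "total": 40.0}, {"id": 3, "total": 15.5}]

# Appending (stacking): add the observations of one table to another with the same columns
all_customers = customers_2023 + customers_2024

# Joining: combine each customer's row with the matching orders row (inner join on "id")
totals_by_id = {row["id"]: row["total"] for row in orders}
joined = [
    {**cust, "total": totals_by_id[cust["id"]]}
    for cust in all_customers
    if cust["id"] in totals_by_id
]
print(joined)
```

Appending adds rows, while joining adds columns; the inner join drops Ben because he has no matching order.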

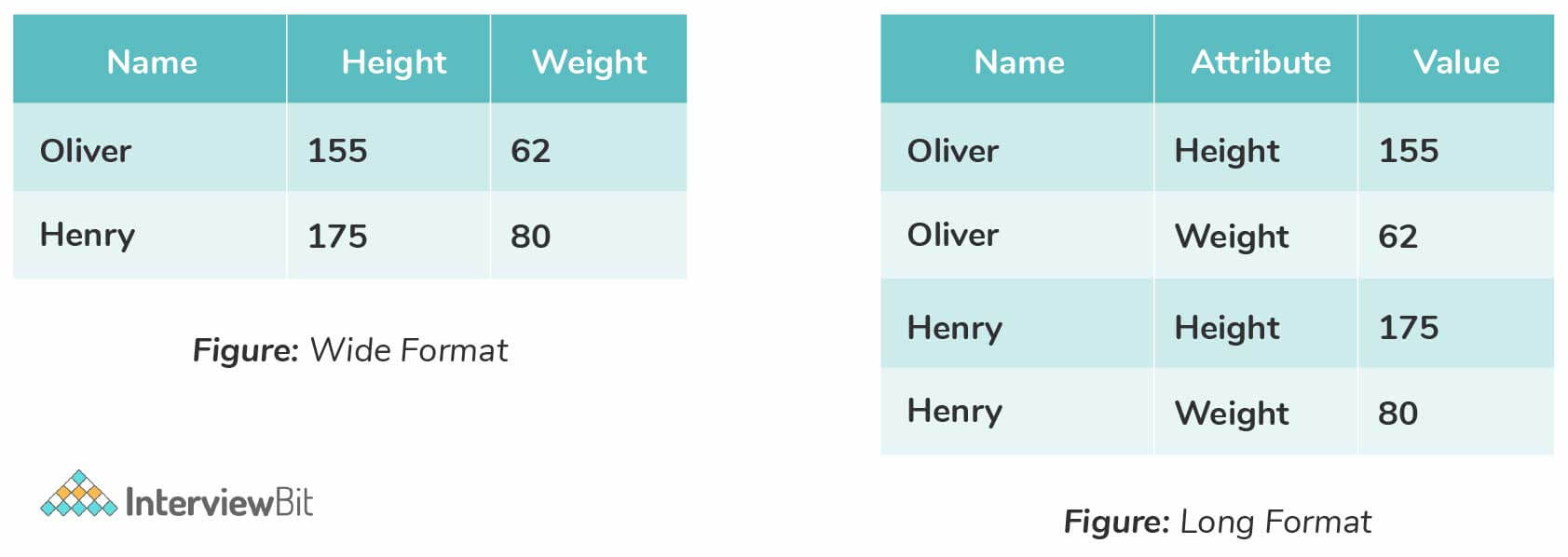

Transforming Data

- Certain models require their data to be in a certain shape.

Reducing the Number of Variables

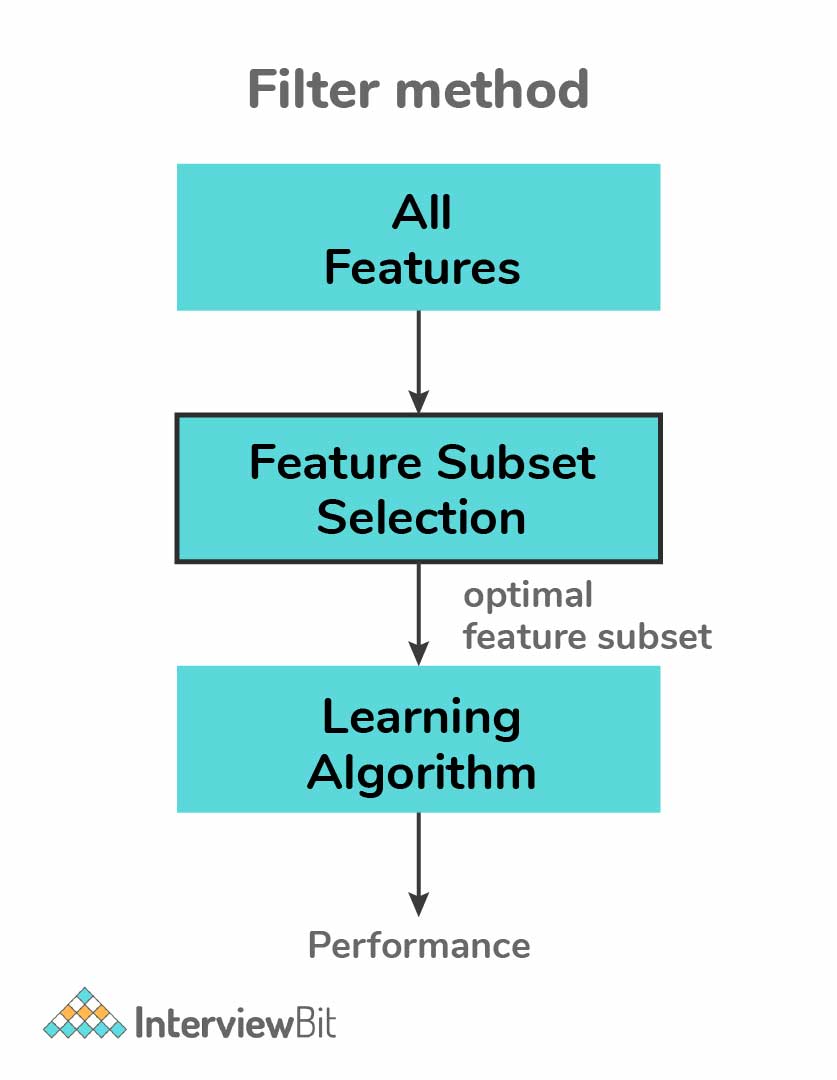

- Sometimes you have too many variables and need to reduce the number because they don’t add new information to the model.

- Having too many variables in your model makes the model difficult to handle, and certain techniques don’t perform well when you overload them with too many input variables.

- Dummy variables can take only two values: true (1) or false (0). They’re used to indicate the presence or absence of a categorical effect that may explain the observation.
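Turning a categorical variable into dummy variables can be sketched as follows; the `colors` column and the `is_` naming scheme are hypothetical.

```python
# Hypothetical categorical column
colors = ["red", "green", "red", "blue"]


def to_dummies(values):
    """Turn one categorical column into one 0/1 dummy column per category."""
    categories = sorted(set(values))
    return [{f"is_{c}": int(v == c) for c in categories} for v in values]


dummies = to_dummies(colors)
print(dummies[0])
```

Each row has exactly one 1. For regression models it is common to drop one of the dummy columns, since the remaining ones already determine it.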

Step 4: Exploratory Data Analysis

- During exploratory data analysis you take a deep dive into the data.

- Information becomes much easier to grasp when shown in a picture, therefore you mainly use graphical techniques to gain an understanding of your data and the interactions between variables.

- Bar Plot, Line Plot, Scatter Plot, Multiple Plots, Pareto Diagram, Link and Brush Diagram, Histogram, Box and Whisker Plot.
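Plots are usually drawn with a plotting library, but the numbers behind two of the listed charts can be computed directly. This hypothetical sketch derives the quartiles a box-and-whisker plot is drawn from and prints a crude text histogram; `statistics.quantiles` uses its default "exclusive" method here, and other tools may compute quartiles slightly differently.

```python
import statistics

# Hypothetical order values
values = [12, 15, 15, 18, 21, 22, 24, 30, 31, 75]

# The five numbers a box-and-whisker plot is drawn from
q1, median, q3 = statistics.quantiles(values, n=4)
print("min", min(values), "Q1", q1, "median", median, "Q3", q3, "max", max(values))

# A crude text histogram with bins of width 10
counts = {}
for v in values:
    bin_start = (v // 10) * 10
    counts[bin_start] = counts.get(bin_start, 0) + 1
for bin_start in sorted(counts):
    print(f"{bin_start:>3}-{bin_start + 9:<3} {'#' * counts[bin_start]}")
```

Even in text form, the isolated value of 75 stands out, which is exactly the kind of pattern these graphical techniques are meant to surface.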

Step 5: Build the Models

- Building models is the next step, with the goal of making better predictions, classifying objects, or gaining an understanding of the system you’re modeling.

Step 6: Presenting findings and building applications on top of them

- The last stage of the data science process is where your soft skills will be most useful, and yes, they’re extremely important.

- This stage involves presenting your results to the stakeholders and industrializing your analysis process for repetitive reuse and integration with other tools.

Benefits and uses of data science and big data

- Governmental organizations are also aware of data’s value. A data scientist in a governmental organization gets to work on diverse projects such as detecting fraud and other criminal activity or optimizing project funding.

- Nongovernmental organizations (NGOs) are also no strangers to using data. They use it to raise money and defend their causes. The World Wildlife Fund (WWF), for instance, employs data scientists to increase the effectiveness of their fundraising efforts.

- Universities use data science in their research but also to enhance the study experience of their students, for example through MOOCs (massive open online courses).

Tools for Data Science Process

As time has passed, tools for performing different tasks in Data Science have evolved a great deal. Software such as MATLAB and Power BI, and programming languages such as Python and R, provide many utility features that help us complete even the most complex tasks efficiently and in a limited time. Some of the tools that are very popular in this domain of Data Science are shown in the image below.

Usage of Data Science Process

The Data Science Process is a systematic approach to solving data-related problems and consists of the following steps:

- Problem Definition: Clearly defining the problem and identifying the goal of the analysis.

- Data Collection: Gathering and acquiring data from various sources, including data cleaning and preparation.

- Data Exploration: Exploring the data to gain insights and identify trends, patterns, and relationships.

- Data Modeling: Building mathematical models and algorithms to solve problems and make predictions.

- Evaluation: Evaluating the model’s performance and accuracy using appropriate metrics.

- Deployment: Deploying the model in a production environment to make predictions or automate decision-making processes.

- Monitoring and Maintenance: Monitoring the model’s performance over time and making updates as needed to improve accuracy.

Issues of Data Science Process

- Data Quality and Availability : Data quality can affect the accuracy of the models developed and therefore, it is important to ensure that the data is accurate, complete, and consistent. Data availability can also be an issue, as the data required for analysis may not be readily available or accessible.

- Bias in Data and Algorithms : Bias can exist in data due to sampling techniques, measurement errors, or imbalanced datasets, which can affect the accuracy of models. Algorithms can also perpetuate existing societal biases, leading to unfair or discriminatory outcomes.

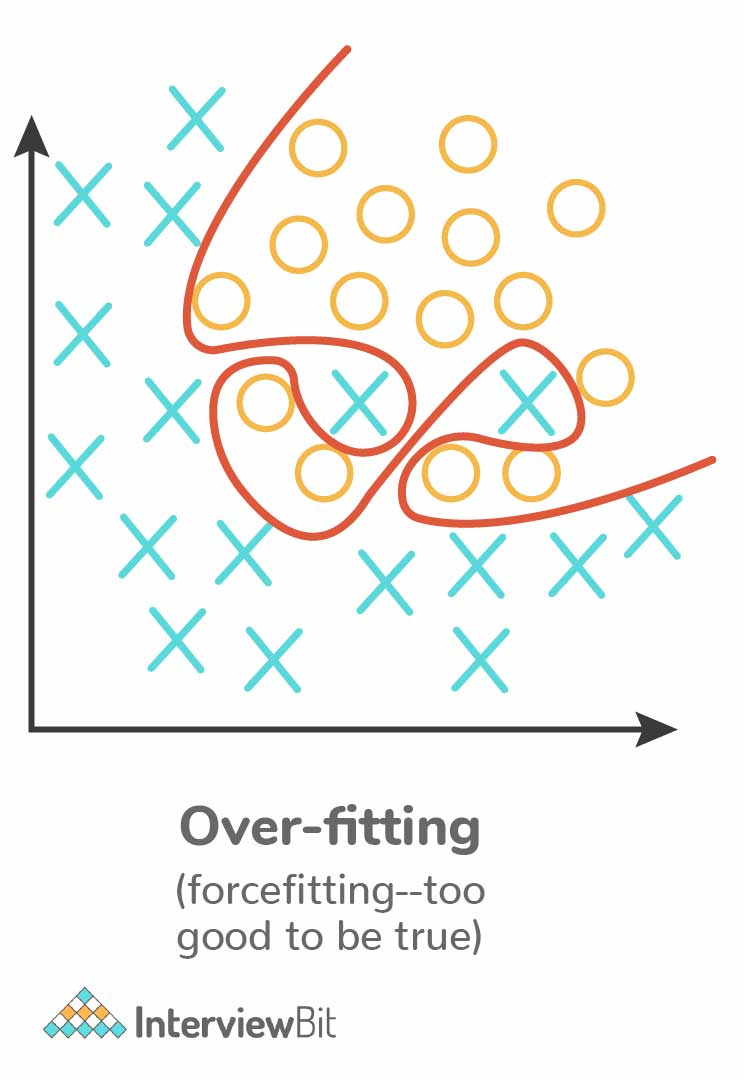

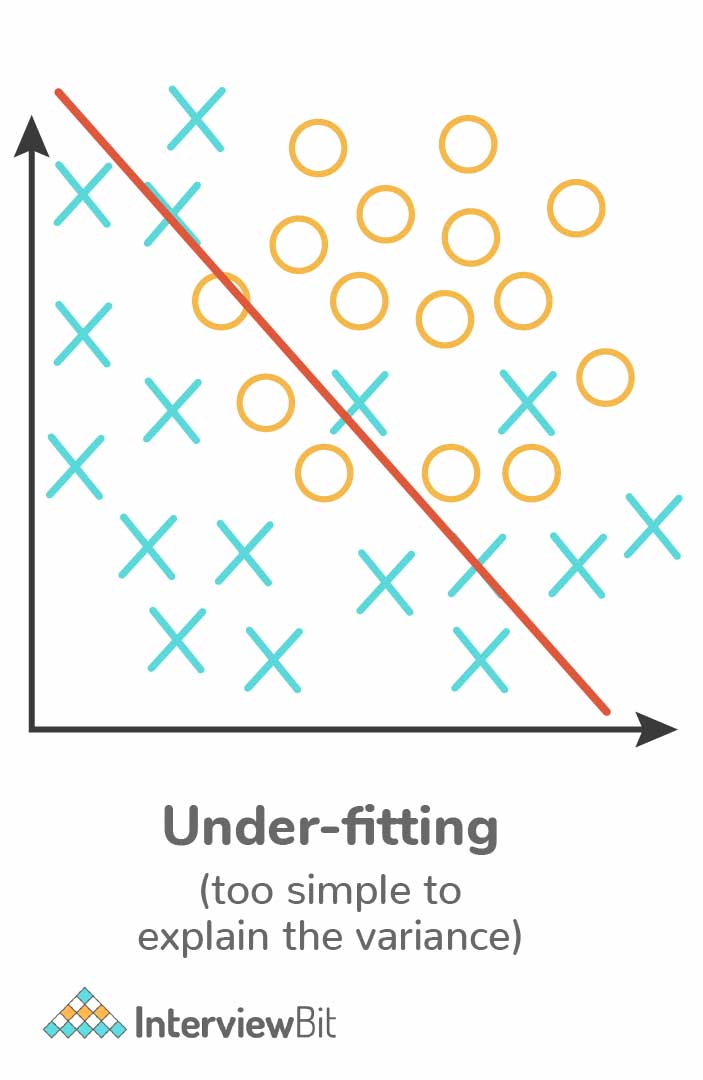

- Model Overfitting and Underfitting : Overfitting occurs when a model is too complex and fits the training data too well, but fails to generalize to new data. On the other hand, underfitting occurs when a model is too simple and is not able to capture the underlying relationships in the data.

- Model Interpretability : Complex models can be difficult to interpret and understand, making it challenging to explain the model’s decisions and decisions. This can be an issue when it comes to making business decisions or gaining stakeholder buy-in.

- Privacy and Ethical Considerations : Data science often involves the collection and analysis of sensitive personal information, leading to privacy and ethical concerns. It is important to consider privacy implications and ensure that data is used in a responsible and ethical manner.

- Technical Challenges : Technical challenges can arise during the data science process such as data storage and processing, algorithm selection, and computational scalability.


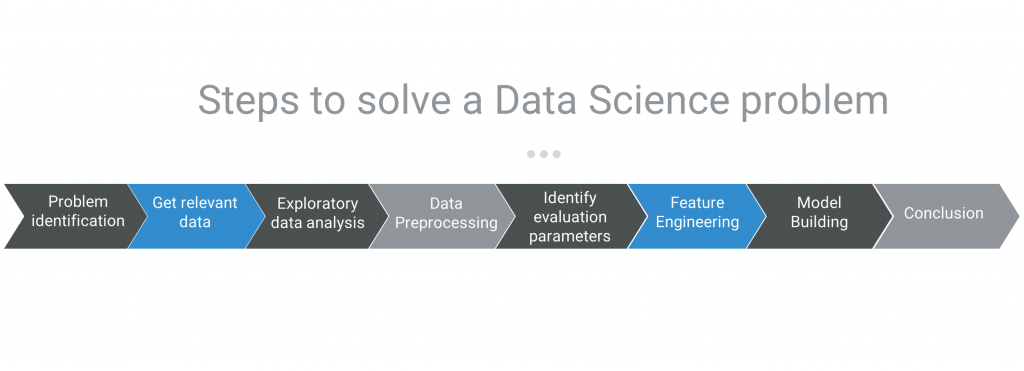

Steps to Solve a Data Science Problem

- September 5, 2023

- Machine Learning

Everyone has their own way of approaching a Data Science problem. If you are a beginner in Data Science, your approach will develop over time. But there are some steps you should follow to take a problem from the start to a solution. So, if you want to know the steps you should follow while solving a Data Science problem, this article is for you. In this article, I’ll take you through all the essential steps.

Below are all the steps you should follow to solve a Data Science problem:

- Define the Problem

- Data Collection

- Data Cleaning

- Explore the Data

- Feature Engineering

- Choose a Model

- Split the Data

- Model Training and Evaluation

Now, let’s go through each step one by one.

Step 1: Define the Problem

When solving a data science problem, the initial and foundational step is to define the nature and scope of the problem. It involves gaining a comprehensive understanding of the objectives, requirements, and limitations associated with it. By going through this step at the beginning, data scientists lay the groundwork for a structured and effective analytical process.

When defining the problem, data scientists need to answer several crucial questions. What is the ultimate goal of this analysis? What specific outcomes are expected? Are there any constraints or limitations that need to be considered? These could involve factors such as available data, resources, and time.

For instance, imagine a Data Science problem where an e-commerce company aims to optimize its recommendation system to boost sales. The problem definition here would encompass aspects like identifying the target metrics (e.g., click-through rate, conversion rate), understanding the available data (user interactions, purchase history), and recognizing any challenges that might arise (data privacy concerns, computational limitations).

So, the first step of defining the problem sets the stage for all the remaining steps to solve a Data Science problem. It establishes a roadmap, aids in effective resource allocation, and ensures that the subsequent analytical efforts are purpose-driven and oriented towards achieving the desired outcomes.

Step 2: Data Collection

The second critical step is the collection of relevant data from various sources. This step involves the procurement of raw information that serves as the foundation for subsequent analysis and insights.

The data collection process encompasses a variety of sources, which could range from databases and APIs to files and web scraping. Each source contributes to the diversity and comprehensiveness of the data pool. However, the key lies not just in collecting data but in ensuring its accuracy, completeness, and representativeness.

For instance, imagine a retail company aiming to optimize its inventory management. To achieve this, the company might collect data on sales transactions, stock levels, and customer purchasing behaviour. This data could be collected from internal databases, external vendors, and customer interaction logs.

So, the data collection phase is about assembling a robust and reliable dataset that will be the foundation for subsequent analysis in the rest of the steps to solve a Data Science problem.

Step 3: Data Cleaning

Once relevant data is collected, the next crucial step in solving a data science problem is data cleaning. Data cleaning involves refining the collected data to ensure its quality, consistency, and suitability for analysis.

The cleaning process entails addressing various issues that may be present in the dataset. One common challenge is handling missing values, where certain data points are absent. It can occur due to various reasons, such as data entry errors or incomplete records. To address this, data scientists apply techniques like imputation, where missing values are estimated and filled in based on patterns within the data.
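A minimal sketch of imputation, assuming the simplest variant: replacing each missing value with the median of the observed values. The column is hypothetical; model-based imputation that exploits relationships between columns is a more sophisticated alternative.

```python
import statistics

# Hypothetical column with missing entries recorded as None
monthly_spend = [120.0, None, 95.0, 130.0, None, 110.0]


def impute_median(column):
    """Fill missing values with the median of the observed values."""
    observed = [x for x in column if x is not None]
    fill = statistics.median(observed)
    return [fill if x is None else x for x in column]


print(impute_median(monthly_spend))
```

The median is often preferred over the mean here because it is less affected by outliers that have not yet been treated.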

Outliers, which are data points that deviate significantly from the rest of the dataset, can also impact the integrity of the analysis. Outliers could be due to errors or represent genuine anomalies. Data cleaning involves identifying and either removing or appropriately treating these outliers, as they can distort the results of analysis.
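One common rule of thumb for flagging outliers, though not the only one, is the interquartile-range (IQR) fence. The sketch below assumes that rule with the conventional multiplier of 1.5 and uses hypothetical data; whether a flagged value is removed or kept still requires judgment about whether it is an error or a genuine anomaly.

```python
import statistics


def iqr_outliers(values, k=1.5):
    """Return values outside [Q1 - k*IQR, Q3 + k*IQR]."""
    q1, _, q3 = statistics.quantiles(values, n=4)
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    return [v for v in values if v < low or v > high]


# Hypothetical delivery times in minutes; 240 might be an error or a genuine anomaly
delivery_minutes = [32, 35, 29, 41, 38, 33, 240, 36]
print(iqr_outliers(delivery_minutes))
```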

Inconsistencies and errors in the data, such as duplicate records or contradictory information, can arise from various sources. These discrepancies need to be detected and rectified to ensure the accuracy of analysis. Data cleaning also involves standardizing units of measurement, ensuring consistent formatting, and addressing other inconsistencies.

Preprocessing is another crucial aspect of data cleaning. It involves transforming and structuring the data into a usable format for analysis. It might include normalization, where data is scaled to a common range, or encoding categorical variables into numerical representations.
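Normalization and encoding can be sketched as two small functions. Both are illustrative: min-max scaling is one of several normalization schemes, and the integer codes here follow alphabetical order, an arbitrary choice. The input values are hypothetical.

```python
def min_max_scale(values):
    """Rescale numbers linearly to the range [0, 1]."""
    lo, hi = min(values), max(values)
    if hi == lo:  # avoid dividing by zero when every value is the same
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]


def ordinal_encode(categories):
    """Map each category to an integer code (alphabetical order, an arbitrary choice)."""
    codes = {c: i for i, c in enumerate(sorted(set(categories)))}
    return [codes[c] for c in categories], codes


print(min_max_scale([10, 20, 15, 30]))
print(ordinal_encode(["paris", "lima", "oslo", "paris"]))
```

Integer codes imply an ordering between categories, so for unordered categories one-hot (dummy) encoding is often the safer choice.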

So, data cleaning is an essential step in preparing the data for analysis. It ensures that the data is accurate, reliable, and ready to be used for the rest of the steps to solve a Data Science problem. By addressing missing values, outliers, and inconsistencies, data scientists create a solid foundation upon which subsequent analysis can be performed effectively.

Step 4: Explore the Data

After the data has been cleaned and prepared, the next crucial step in solving a data science problem is exploring the data. Exploring the data involves delving into its characteristics, patterns, and relationships to extract meaningful insights that can inform subsequent analyses and decision-making.

Data exploration encompasses techniques aimed at uncovering hidden patterns and gaining a deeper understanding of the dataset. Visualizations and summary statistics are commonly used tools during this step. Visualizations, such as graphs and charts, provide a visual representation of the data, making it easier to identify trends, anomalies, and relationships.

For example, consider a retail dataset containing information about customer purchases. Data exploration could involve creating visualizations of customer spending patterns over different months and identifying if there are any particular items that are frequently purchased together. It can provide insights into customer preferences and inform targeted marketing strategies.
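Finding items that are frequently purchased together can start with simple co-occurrence counts. This sketch uses hypothetical baskets and counts every pair of items that appears in the same transaction.

```python
from collections import Counter
from itertools import combinations

# Hypothetical baskets, one set of items per transaction
baskets = [
    {"bread", "butter", "jam"},
    {"bread", "butter"},
    {"milk", "bread"},
    {"butter", "bread", "milk"},
]

# Count how often each unordered pair of items appears in the same basket
pair_counts = Counter()
for basket in baskets:
    for pair in combinations(sorted(basket), 2):  # sorting gives each pair one canonical order
        pair_counts[pair] += 1

print(pair_counts.most_common(3))
```

Full market-basket analysis builds measures such as support, confidence, and lift on top of counts like these.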

So, data exploration is like peering into the data’s story, uncovering its nuances and intricacies. It helps data scientists gain a comprehensive understanding of the dataset, enabling them to make informed decisions about the analytical techniques to be employed in the next steps to solve a Data Science problem. By identifying trends, anomalies, and relationships, data exploration sets the stage for more sophisticated analyses and ultimately contributes to making impactful business decisions.

Step 5: Feature Engineering

The next step is feature engineering, where the magic of transformation takes place. Feature engineering involves crafting new variables from the existing data that can provide deeper insights or improve the performance of machine learning models.

Feature engineering is like refining raw materials to create a more valuable product. Just as a skilled craftsman shapes and polishes raw materials into a finished masterpiece, data scientists carefully craft new features from the available data to enhance its predictive power. Feature engineering encompasses a variety of techniques. It involves performing statistical and mathematical calculations on the existing variables to derive new insights.

Consider a retail scenario where the goal is to predict customer purchase behaviour. Feature engineering might involve creating a new variable that represents the average purchase value per customer, combining information about the number of purchases and total spent. This aggregated metric can provide a more holistic view of customer spending patterns.
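The average-purchase-value feature described above can be computed from raw transactions as follows; the transaction data and customer IDs are hypothetical.

```python
from collections import defaultdict

# Hypothetical transactions: (customer_id, amount)
transactions = [("c1", 20.0), ("c2", 55.0), ("c1", 40.0), ("c1", 30.0), ("c2", 25.0)]

total_spent = defaultdict(float)
num_purchases = defaultdict(int)
for customer, amount in transactions:
    total_spent[customer] += amount
    num_purchases[customer] += 1

# New feature: average purchase value = total spent / number of purchases
avg_purchase_value = {c: total_spent[c] / num_purchases[c] for c in total_spent}
print(avg_purchase_value)
```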

So, feature engineering means transforming data into meaningful features that drive better predictions and insights. It’s the bridge that connects the raw data to the models, enhancing their performance and contributing to the overall success while solving a Data Science problem.

Step 6: Choose a Model

The next step is model selection: choosing the right tool for the job. It’s the stage where you decide which machine learning algorithm best suits the nature of your problem and aligns with your objectives.

Model selection depends on understanding the fundamental nature of your problem. Is it about classifying items into categories, predicting numerical values, identifying patterns in data, or something else? Different machine learning algorithms are designed to tackle specific types of problems, and choosing the right one can significantly impact the quality of your results.

For instance, if your goal is to predict a numerical value, regression algorithms like linear regression, decision trees, or support vector regression might be suitable. On the other hand, if you’re dealing with classification tasks, where you need to assign items to different categories, algorithms like logistic regression, random forests, decision tree classifier, or support vector machines might be more appropriate.

So, selecting a model is about finding the best tool to unlock the insights hidden within your data. It’s a strategic decision that requires careful consideration of the problem’s nature, the data’s characteristics, and the algorithm’s capabilities.

Step 7: Split the Data

Imagine the process of solving a data science problem as building a bridge of understanding between the past and the future. In this step, known as data splitting, we create a pathway that allows us to learn from the past and predict the future with confidence.

The concept is simple: you wouldn’t drive a car without knowing how it handles different road surfaces. Similarly, you wouldn’t build a predictive model without first understanding how it performs on different sets of data. Data splitting is about creating distinct sets of data, each with a specific purpose, to ensure the reliability and accuracy of your model.

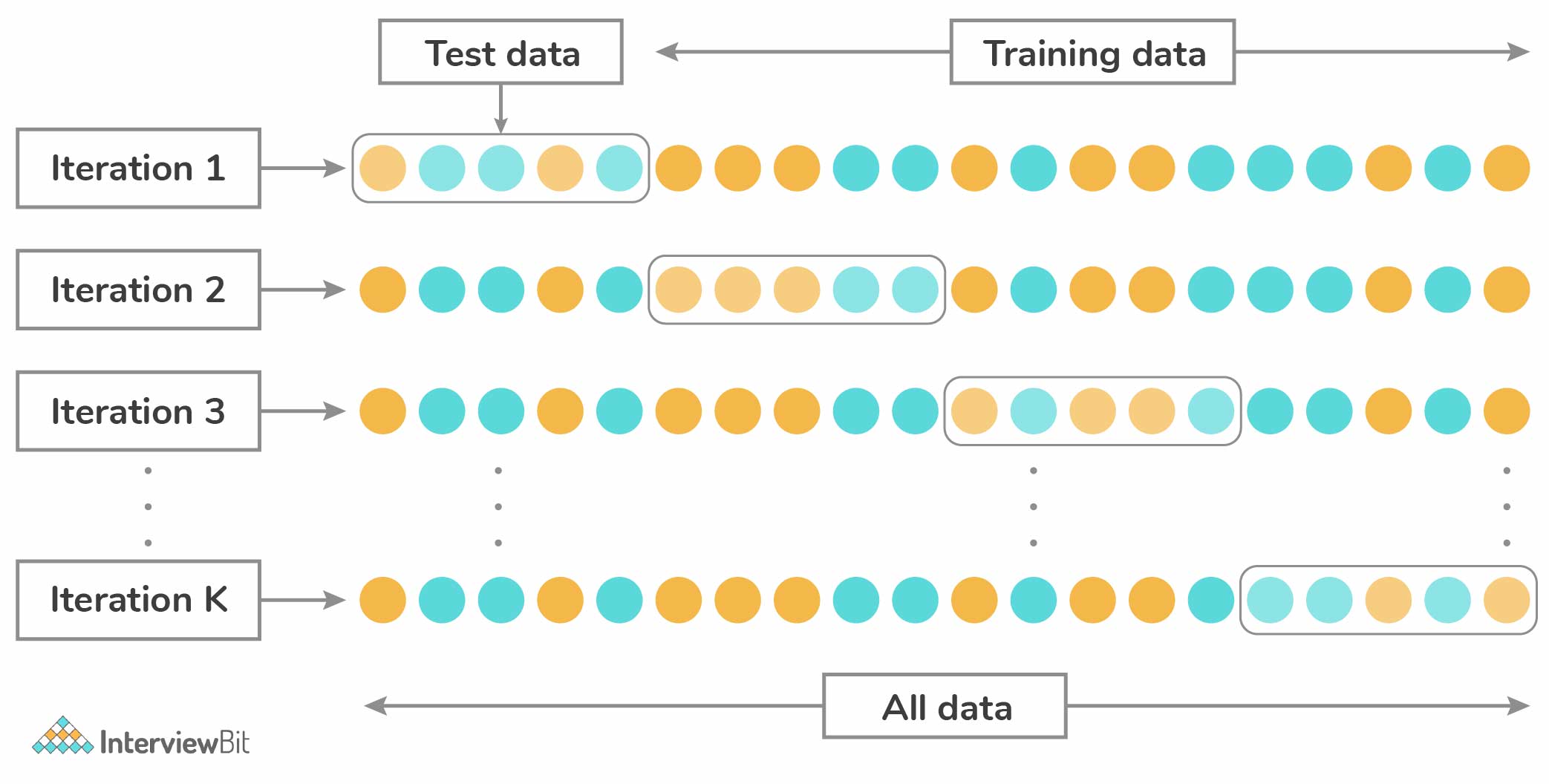

Firstly, we divide our data into three key segments: the training, the validation, and the test set. Think of these as different stages of our journey: the training set serves as the learning ground where our model builds its understanding of patterns and relationships in the data. Next, the validation set helps us fine-tune our model’s settings, known as hyperparameters, to ensure it’s optimized for performance. Lastly, the test set is the true test of our model’s mettle. It’s a simulation of the real-world challenges our model will face.

Why the division? Well, if we used all our data for training, we risk creating a model that’s too familiar with the specifics of our data and unable to generalize to new situations. By having separate validation and test sets, we avoid over-optimization, making our model robust and capable of navigating diverse scenarios.
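A minimal sketch of a three-way split, assuming 70/15/15 proportions and a fixed random seed (both arbitrary choices). The rows are shuffled first so that each set is a random sample of the data.

```python
import random


def train_val_test_split(rows, val_frac=0.15, test_frac=0.15, seed=42):
    """Shuffle rows, then cut them into train, validation and test sets."""
    rows = list(rows)
    random.Random(seed).shuffle(rows)  # fixed seed so the split is reproducible
    n_test = round(len(rows) * test_frac)
    n_val = round(len(rows) * val_frac)
    test = rows[:n_test]
    val = rows[n_test:n_test + n_val]
    train = rows[n_test + n_val:]
    return train, val, test


train, val, test = train_val_test_split(range(100))
print(len(train), len(val), len(test))
```

For time-ordered data, a chronological split (train on the past, test on the future) is usually more appropriate than a random shuffle.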

So, data splitting isn’t just a division of numbers; it’s a strategic move to ensure that our models learn, adapt, and predict effectively. It’s about providing the right environment for learning, tuning, and testing so that our predictive journey leads to reliable and accurate outcomes.

Final Step: Model Training and Evaluation

The final step to solve a Data science problem is Model Training and Evaluation.

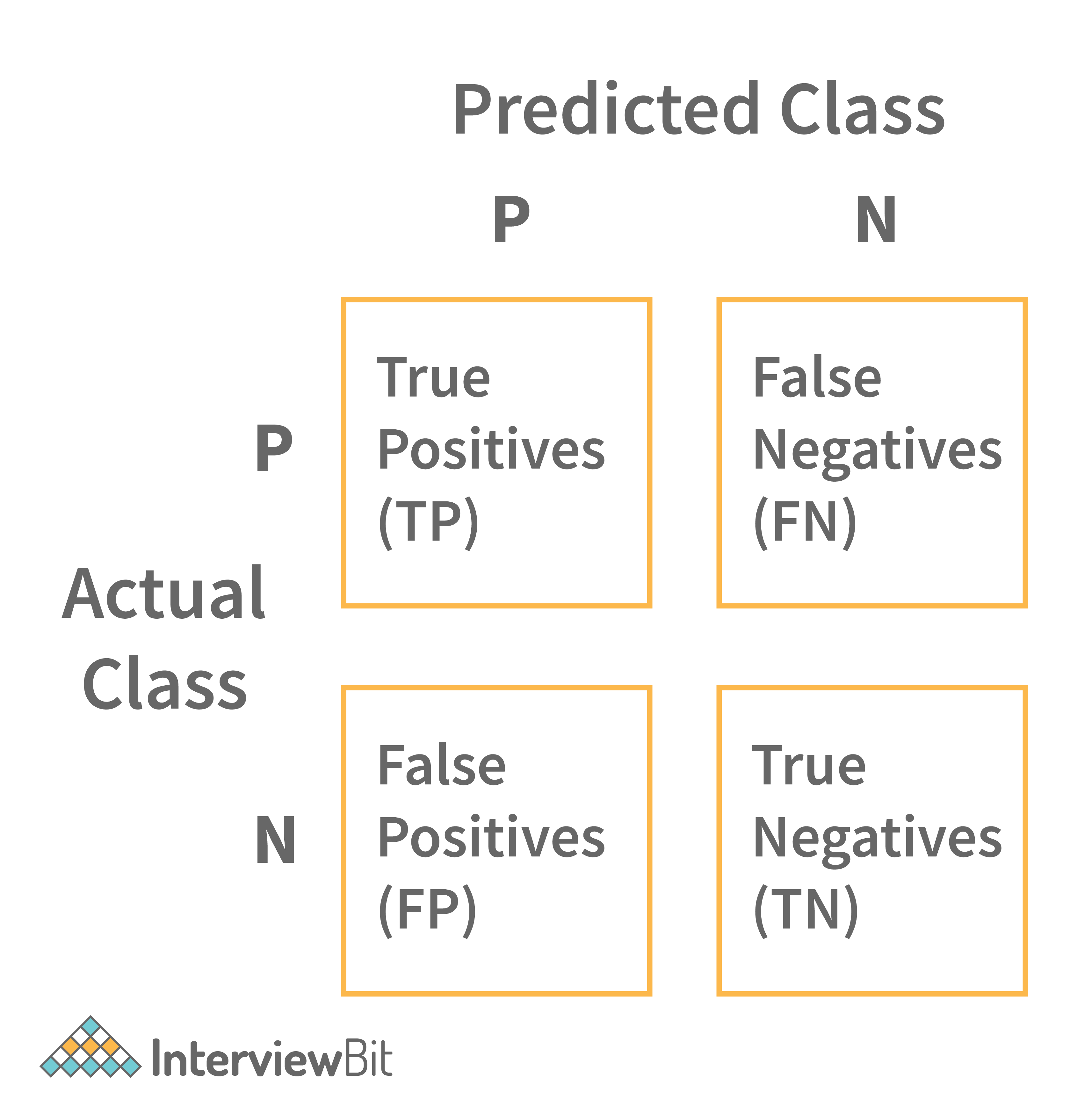

The first aspect of this step is Model Training. With the chosen algorithm, the model is presented with the training data. The model grasps the underlying patterns, relationships, and trends hidden within the data. It adapts its internal parameters to mould itself according to the intricacies of the training examples. Then the model is evaluated on the test set. Metrics like accuracy, precision, recall, and F1-score provide insights into how well the model is performing.
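The evaluation metrics mentioned above can be computed from a model’s predictions on the test set. This sketch assumes a binary classification problem and uses hypothetical labels.

```python
def classification_metrics(y_true, y_pred, positive=1):
    """Accuracy, precision, recall and F1 for a binary classifier."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    correct = sum(1 for t, p in pairs if t == p)
    accuracy = correct / len(pairs)
    # Guard against division by zero when the model never predicts (or never sees) the positive class
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


# Hypothetical test-set labels and model predictions
y_test = [1, 0, 1, 1, 0, 0, 1, 0]
y_hat = [1, 0, 0, 1, 0, 1, 1, 0]
print(classification_metrics(y_test, y_hat))
```

Precision and recall matter most when classes are imbalanced, since accuracy alone can look high for a model that simply predicts the majority class.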

So, in the final step, we train the chosen model on the training data. It involves fitting the model to learn patterns from the data. And evaluate the model’s performance on the test set.

So, these are all the steps you should follow to solve a Data Science problem.

I hope you liked this article on steps to solve a Data Science problem. Feel free to ask valuable questions in the comments section below.

Aman Kharwal

Data Strategist at Statso. My aim is to decode data science for the real world in the simplest words.


5 Key Steps in the Data Science Lifecycle Explained

Data science has emerged as one of the most popular and valuable fields in the modern landscape of business and management. It is, therefore, important to know the data science lifecycle when applying data to extract information and solve problems.

This lifecycle encompasses five key stages: data collection, data pre-processing, data processing, data mining, and data presentation and dissemination. All of these are crucial in converting raw data into valuable knowledge that supports projects and their execution.

Data Collection: The Foundation of the Data Science Lifecycle

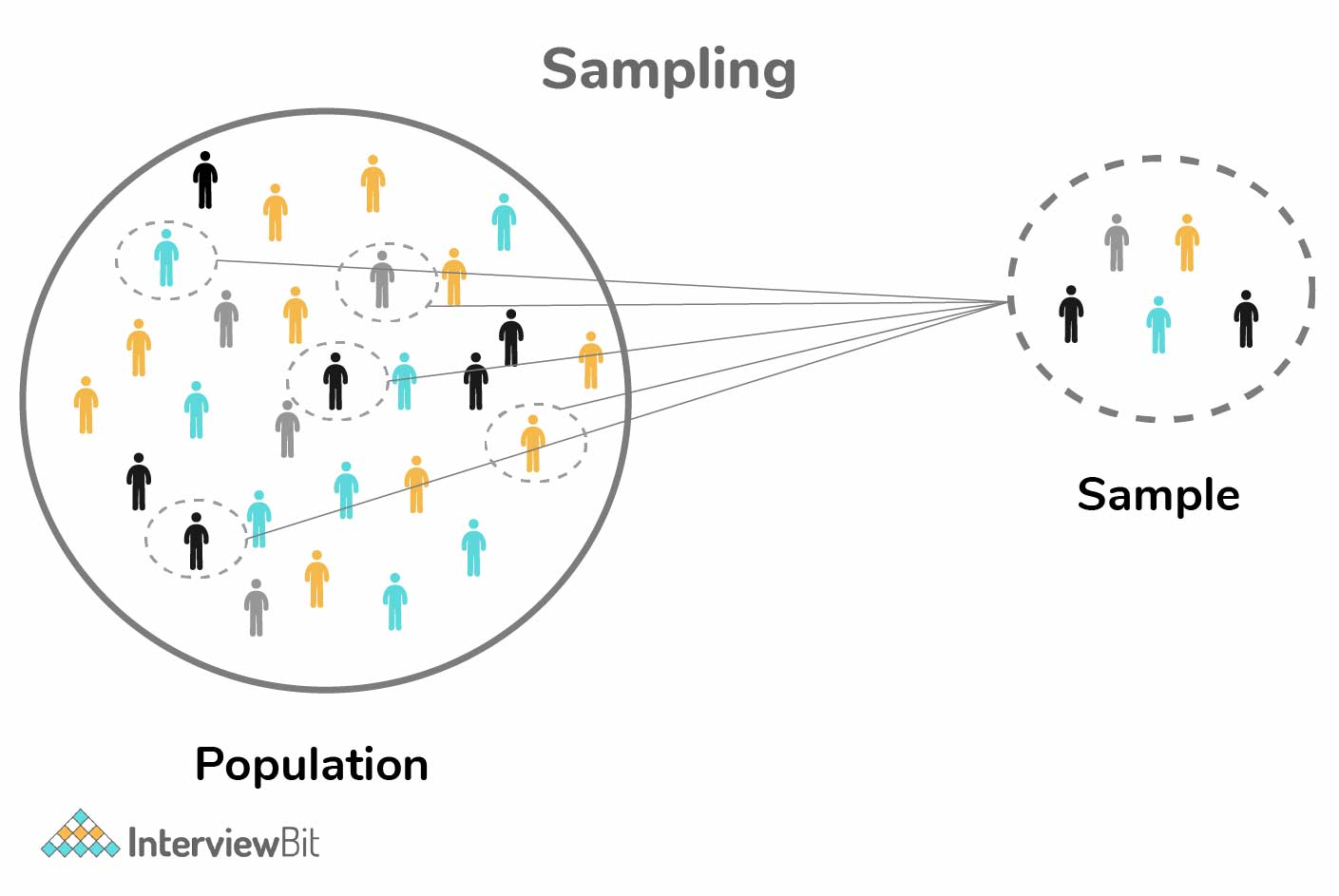

Data collection is the first and perhaps the most important process of the data science lifecycle. This phase involves identifying data from various sources, which forms the basis for analysis and modeling. The quality of the data gathered at this stage directly determines the quality of the insights generated in the subsequent stages.

The first step focuses on the sources of data, which may be internal databases, external APIs, questionnaires, sensors, or web crawling. Each source provides different kinds of information, so sources must be chosen to suit the project. The objective is to get data that is valid, reliable, and useful in solving the problem being considered.

Key considerations in data collection:

- Source Identification and Selection: Select data sources that are relevant to the project’s objectives. For example, internal sources such as a CRM system can reveal more specific information, while external sources, like social networks or market research, can give a broader and more general view.

- Data Quality and Integrity: Evaluate how well each data source addresses the research questions. Check that the data is correct and current, and ensure that there is a mechanism for detecting and correcting any errors or inconsistencies before proceeding to the next steps.

- Ethical and Legal Compliance: It is essential to follow ethical standards and legal requirements while collecting the data. This involves getting legal clearance, observing privacy policies, and implementing measures to prevent data leaks.

Incorporating these considerations into the data collection process helps to build a strong foundation for the other stages of the data science lifecycle and, hence, leads to better results.

Data Preparation: Transforming Raw Data into Actionable Insights

Data preparation is one of the most critical steps in the data science process, as it paves the way for data exploration and modeling. This step involves arranging the data in a form that is easy to analyze. Data preparation is crucial to ensure that the conclusions drawn are correct and can be relied on when making business decisions.

Data preparation encompasses several key activities:

- Data Cleaning: This is the process of checking and correcting any errors or inconsistencies in the data. Some of the most frequent operations include filling in the missing data, eliminating the records’ duplicates, and dealing with outliers. Techniques like Pandas and SQL are used for such purposes most of the time.

- Data Transformation: The data is usually presented in different formats and must be reformatted for analysis purposes. This may include handling numerical data by scaling the numbers, handling categorical data through coding, and combining data from different datasets. Tools like feature scaling and one-hot encoding are very useful in this process.

- Data Integration: It is very important to integrate different sources of information into a single database. The process may include data integration , data synchronization, and data cleaning, where data from different sources is combined, structured, and standardized.

- Data Reduction: Large datasets may need to be reduced to make processing more efficient and manageable. Data reduction methods, such as dimensionality reduction and sampling, shrink the data while preserving the important information.
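The preparation activities above can be sketched with pandas on toy data. The tables and column names, median imputation, the cap of 100 on age, and min-max scaling are illustrative assumptions; the reduction step shows only random sampling, not dimensionality reduction.

```python
import pandas as pd

# Hypothetical source 1: user profiles with a duplicate, a missing age, and an implausible age.
users = pd.DataFrame({
    "user_id": [1, 2, 2, 3, 4],
    "age": [25, None, None, 41, 230],
    "plan": ["free", "pro", "pro", "free", "team"],
})
# Hypothetical source 2: usage per user.
sessions = pd.DataFrame({"user_id": [1, 2, 3, 4], "minutes": [30, 45, 10, 60]})

# Cleaning: drop exact duplicate records, fill missing ages with the median,
# and cap ages at an assumed plausible upper bound of 100.
clean = users.drop_duplicates().copy()
clean["age"] = clean["age"].fillna(clean["age"].median())
clean["age"] = clean["age"].clip(upper=100)

# Integration: join the second source on the shared key.
merged = clean.merge(sessions, on="user_id", how="left")

# Transformation: min-max scale a numeric column and one-hot encode a categorical one.
m = merged["minutes"]
merged["minutes_scaled"] = (m - m.min()) / (m.max() - m.min())
prepared = pd.get_dummies(merged, columns=["plan"], prefix="plan")

# Reduction: keep a reproducible random sample of the rows.
sample = prepared.sample(frac=0.5, random_state=0)
print(prepared)
```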

Data preparation is crucial because it lays the groundwork for efficient, accurate analysis and model development.

In-depth Analysis of the Data Science Lifecycle

Data analysis is the third step in the data science process, where the prepared data is examined to extract useful information. This stage uses several methods to identify patterns in the data that can inform strategy.

Key Aspects of Data Analysis:

- Descriptive Analysis: Summarizes historical data using measures such as the mean, median, and standard deviation. It helps establish past results and patterns.

- Diagnostic Analysis: Focuses on the causes of past results. Correlation analysis and hypothesis testing are used to understand why certain events happened the way they did.

- Predictive Analysis: Uses statistical models and machine learning algorithms to predict future events from past data. Common examples are regression analysis and time series forecasting.

- Prescriptive Analysis: Recommends a course of action based on the insights from descriptive, diagnostic, and predictive analysis. It usually involves an optimization procedure that helps determine the most appropriate decision.

Useful tools and technologies for this stage include Python libraries (Pandas, NumPy), R, and data visualization tools (Tableau, Power BI). Understanding these techniques and tools is vital for extracting value from large and often unstructured datasets.
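A minimal sketch of a descriptive summary and a first diagnostic pass with Pandas, on made-up numbers (the column names are hypothetical). Correlation only shows that two variables move together; establishing why would need further work such as hypothesis testing.

```python
import pandas as pd

# Made-up weekly figures.
df = pd.DataFrame({
    "ad_spend": [10, 12, 15, 18, 20, 25],
    "sales": [100, 110, 130, 150, 160, 200],
})

# Descriptive: central tendency and dispersion of sales.
summary = df["sales"].agg(["mean", "median", "std"])

# Diagnostic (first pass): how strongly do spend and sales move together?
r = df["ad_spend"].corr(df["sales"])  # Pearson correlation by default

print(summary)
print(f"correlation: {r:.3f}")
```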

Data Modeling: Crafting the Predictive Blueprint

Data modeling is an important step in the data science process, where the prepared data is turned into valuable information using analytical and predictive methods. The data is translated into mathematical and statistical models that are then used to discover patterns and relationships.

Key Aspects of Data Modeling:

- Model Selection: Choosing the right model for the nature of the problem; for instance, a regression model for a continuous target and a classification model for a categorical target.

- Model Training: Using historical data to train the model so that it learns the patterns and correlations within the data.

- Model Evaluation: Measuring and comparing metrics, such as accuracy, precision, recall, and F1 score, to check how well the model performs.

- Model Tuning: Adjusting the model's hyperparameters to improve its fit to the data and avoid overfitting or underfitting.

Other approaches, such as ensemble models and deep learning, can further improve data modeling. These can be implemented with Python libraries such as scikit-learn and TensorFlow or with platforms such as Azure ML.
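The selection, training, evaluation, and tuning steps can be sketched with scikit-learn. This assumes a binary classification task; synthetic data from `make_classification` stands in for a real dataset, and logistic regression with a small grid over the regularization strength `C` is just one illustrative choice.

```python
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import f1_score, precision_score, recall_score
from sklearn.model_selection import GridSearchCV, train_test_split

# Synthetic binary data stands in for a real dataset with a categorical target.
X, y = make_classification(n_samples=500, n_features=8, random_state=42)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=42, stratify=y
)

# Model selection: a classifier, because the target is categorical.
# Model tuning: search C with 5-fold cross-validation, scored by F1.
search = GridSearchCV(
    LogisticRegression(max_iter=1000),
    param_grid={"C": [0.01, 0.1, 1.0, 10.0]},
    scoring="f1",
    cv=5,
)
search.fit(X_train, y_train)  # model training happens inside the search

# Model evaluation on held-out data the model has never seen.
pred = search.predict(X_test)
scores = {
    "precision": precision_score(y_test, pred),
    "recall": recall_score(y_test, pred),
    "f1": f1_score(y_test, pred),
}
print(search.best_params_, scores)
```

Tuning is done only on the training split (via cross-validation), so the test scores remain an honest estimate of performance on new data.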

Effective Data Visualization and Communication

Data visualization and communication present the results in a form that is easy to understand and act on. At this stage, data is presented visually to reveal patterns, trends, and relationships that may not be obvious from the raw numbers.

Effective visualization helps stakeholders grasp complex ideas quickly and make the right decisions. Key techniques include:

- Choosing the Right Visuals: Identifying the charts, graphs, and maps best suited to the data at hand, such as bar graphs for comparisons or line graphs for trends.

- Clarity and Simplicity: Keeping visualizations simple and clear, avoiding clutter, and using proper labels and legends.

- Storytelling: Transforming the information into a story to make the findings and recommendations easily understandable to the audience.

Tools and technologies play a significant role in this process, including:

- Tableau: For designing engaging, easily shareable dashboards.

- Power BI: For connecting to different data sources and generating comprehensive reports.

- Matplotlib and Seaborn: For generating plots in Python; Matplotlib supports static, animated, and interactive plots, and Seaborn builds statistical graphics on top of Matplotlib.
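A short Matplotlib sketch of the advice on choosing visuals: a bar chart to compare categories and a line chart to show a trend, each with a title and axis labels, plus a legend on the line chart. The numbers, labels, and output filename are made up for illustration.

```python
import matplotlib

matplotlib.use("Agg")  # render off-screen; no display needed
import matplotlib.pyplot as plt

# Made-up data.
regions = ["North", "South", "East", "West"]
revenue = [120, 95, 140, 80]
months = ["Jan", "Feb", "Mar", "Apr", "May"]
signups = [200, 230, 260, 250, 300]

fig, (ax_bar, ax_line) = plt.subplots(1, 2, figsize=(10, 4))

# Bar chart: compare a quantity across categories.
ax_bar.bar(regions, revenue, color="steelblue")
ax_bar.set_title("Revenue by region")
ax_bar.set_xlabel("Region")
ax_bar.set_ylabel("Revenue (k$)")

# Line chart: show a trend over time.
ax_line.plot(months, signups, marker="o", label="Sign-ups")
ax_line.set_title("Monthly sign-ups")
ax_line.set_xlabel("Month")
ax_line.set_ylabel("Users")
ax_line.legend()

fig.tight_layout()
fig.savefig("report.png", dpi=150)
```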

These techniques help ensure that the findings from the data are well presented and understood by the stakeholders, who can then take the appropriate action.

Understanding the data science lifecycle is critical when tackling data science challenges. The five steps (data collection, data preparation, data analysis, data modeling, and data visualization and communication) are each crucial to deriving insight from data. Following these stages provides a structured approach to problem-solving and value creation, with each stage contributing to good results.

Courtesy: DASCA

Step-by-Step Process to Solve a Data Science Challenge/Problem

December 29, 2019

From forecasting sales to finding the shortest route to a destination, data science has a wide range of applications across industries: engineering, marketing, sales, operations, supply chain, and more. You name it, and there is an application of data science. And its use is growing rapidly! In fact, the demand for people with data science skills is higher than academia can currently supply!

Starting with this article, I will be writing a series of blog posts on how to solve data science problems in real life and in data science competitions.

While there are different approaches to solving a problem, the broad structure for solving a data science problem remains more or less the same. The approach I usually follow is outlined below.

Step 1: Identify the problem and know it well:

In real-life scenarios: Identifying the problem and understanding the problem statement are among the most critical steps in the entire problem-solving process. One needs to do a high-level analysis of the data and talk to the relevant functions in the organization (such as marketing, operations, technology, or product teams) to understand the problems and see how they can be validated and solved with data.

To give a real-life example, I will briefly walk you through a problem I worked on recently: a customer retention analysis for an e-learning platform. This is a classification problem with a binary target variable, i.e., one needs to predict whether a user will be an active learner on the platform in the next ‘x’ days, based on her behavior and interactions on the platform over the last ‘y’ days.
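One way to turn raw activity logs into this kind of binary target is sketched below in plain Python. It assumes, hypothetically, that each log record is a `(user_id, date)` pair and that any logged event counts as activity; the function name `build_dataset` and the single event-count feature are illustrative, not the author's actual pipeline.

```python
from datetime import date, timedelta


def build_dataset(events, cutoff, y_days, x_days):
    """Return {user_id: (events_in_last_y_days, label)}, where label 1 = active in the next x days."""
    obs_start = cutoff - timedelta(days=y_days)   # start of the observation window
    pred_end = cutoff + timedelta(days=x_days)    # end of the prediction window
    rows = {}
    for user, day in events:
        n_events, label = rows.get(user, (0, 0))
        if obs_start <= day < cutoff:
            n_events += 1   # behavior in the last y days becomes a feature
        elif cutoff <= day < pred_end:
            label = 1       # any activity in the next x days marks the user as active
        rows[user] = (n_events, label)
    # Predict only for users who were seen during the observation window.
    return {u: v for u, v in rows.items() if v[0] > 0}


events = [
    ("a", date(2024, 3, 1)), ("a", date(2024, 3, 5)), ("a", date(2024, 3, 20)),
    ("b", date(2024, 3, 2)),
    ("c", date(2024, 3, 18)),
]
result = build_dataset(events, cutoff=date(2024, 3, 15), y_days=14, x_days=7)
print(result)  # {'a': (2, 1), 'b': (1, 0)}
```

User "c" is dropped because it has no activity in the observation window, so there is nothing to base a prediction on.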

As I just mentioned, identifying the problem is one of the most critical steps. In this case, it meant recognizing that the platform had a user retention issue. The immediate actionable step was then to understand the underlying factors causing users to leave the platform (or become non-active learners). The question is how to do this.

In the case of Data Science challenges, the problem statement is generally well defined, and all you need to do is understand it clearly and come up with a suitable solution. If needed, additional primary and secondary research on the problem statement helps in arriving at a better solution, and additional variables and cross features can be created based on subject-matter expertise.
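As a hedged illustration of cross features, the pandas snippet below derives a ratio feature and a combined categorical feature from hypothetical e-learning columns. Which crosses make sense depends on domain knowledge; these are only examples.

```python
import pandas as pd

# Hypothetical per-user activity summary.
users = pd.DataFrame({
    "videos_watched": [12, 3, 0, 8],
    "quizzes_taken": [4, 0, 0, 6],
    "device": ["mobile", "desktop", "mobile", "desktop"],
    "plan": ["free", "free", "paid", "paid"],
})

# Ratio feature: quizzes per video; clip(lower=1) avoids dividing by zero.
users["quizzes_per_video"] = users["quizzes_taken"] / users["videos_watched"].clip(lower=1)

# Cross of two categorical features (e.g. "mobile_free"), then one-hot encoded.
users["device_x_plan"] = users["device"] + "_" + users["plan"]
encoded = pd.get_dummies(users, columns=["device_x_plan"])
print(encoded.columns.tolist())
```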

Step 2: Get the relevant data:

In real-life scenarios: Once the problem is identified, data scientists need to talk to the relevant functions in the organization (such as marketing, operations, technology, or product teams) to understand the possible trigger points of the problem and identify the data relevant to the analysis. Once this is done, all the relevant data should be extracted from the database.
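Extraction usually comes down to queries against the source database. The sketch below uses Python's built-in `sqlite3` module with an in-memory database as a stand-in for a production system; the `users` and `events` tables, their columns, and the event types are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")  # stand-in for the production database
conn.executescript("""
CREATE TABLE users  (user_id INTEGER PRIMARY KEY, signup_date TEXT);
CREATE TABLE events (user_id INTEGER, event_type TEXT, event_date TEXT);
INSERT INTO users VALUES (1, '2024-01-05'), (2, '2024-02-10');
INSERT INTO events VALUES
  (1, 'video_play',  '2024-03-01'),
  (1, 'quiz_submit', '2024-03-02'),
  (2, 'video_play',  '2024-03-03');
""")

# One row per user with the activity counts needed for the analysis.
query = """
SELECT u.user_id,
       u.signup_date,
       COUNT(e.event_type)               AS n_events,
       SUM(e.event_type = 'quiz_submit') AS n_quizzes
FROM users AS u
LEFT JOIN events AS e ON e.user_id = u.user_id
GROUP BY u.user_id, u.signup_date
ORDER BY u.user_id;
"""
rows = conn.execute(query).fetchall()
print(rows)
conn.close()
```

The `LEFT JOIN` keeps users with no logged events at all, which matters for a retention problem, since inactive users are exactly the ones of interest.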

Continuing with the problem I recently worked on, I did a thorough audit, with the help of the product and development teams, of the platform, the user journey, and the actions users performed on the platform. This audit gave me a thorough understanding of the database architecture and of the data logs that were captured and could be used in the analysis. An extensive list of data points (variables or features) was collated with the help of the relevant stakeholders in the organization.

More generally, this step not only helps in understanding the database architecture and the data extraction process but also helps identify potential issues within the database (if any), such as steps in the user journey that were not being logged. This in turn helps the development team add the missing logs and improve the database architecture.

Now that the data has been extracted, we can move on to exploring and pre-processing it for the analysis.

Data Science Challenge: In the case of Data Science Challenges, a dataset is often provided.

Step 3: Perform exploratory data analysis:

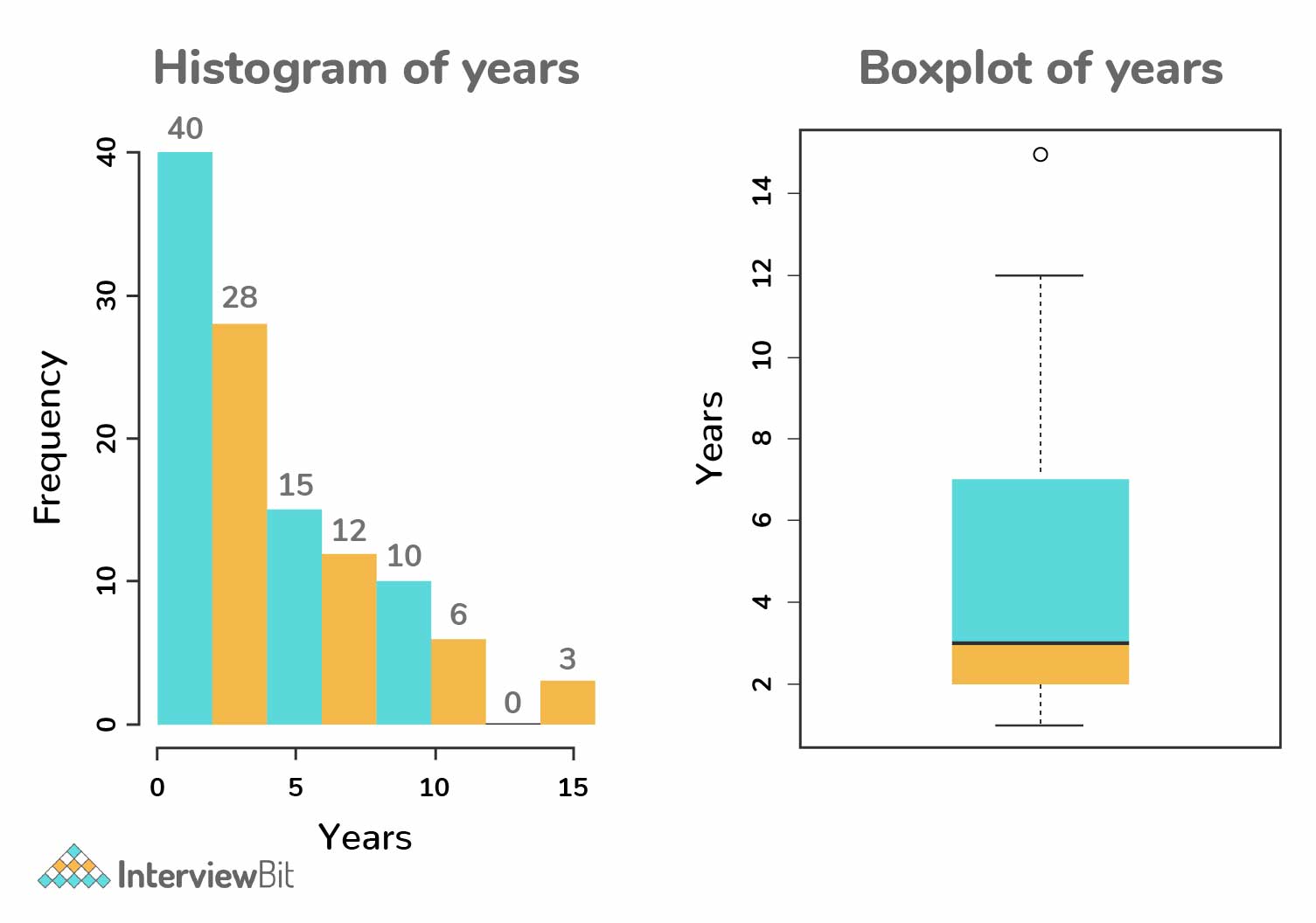

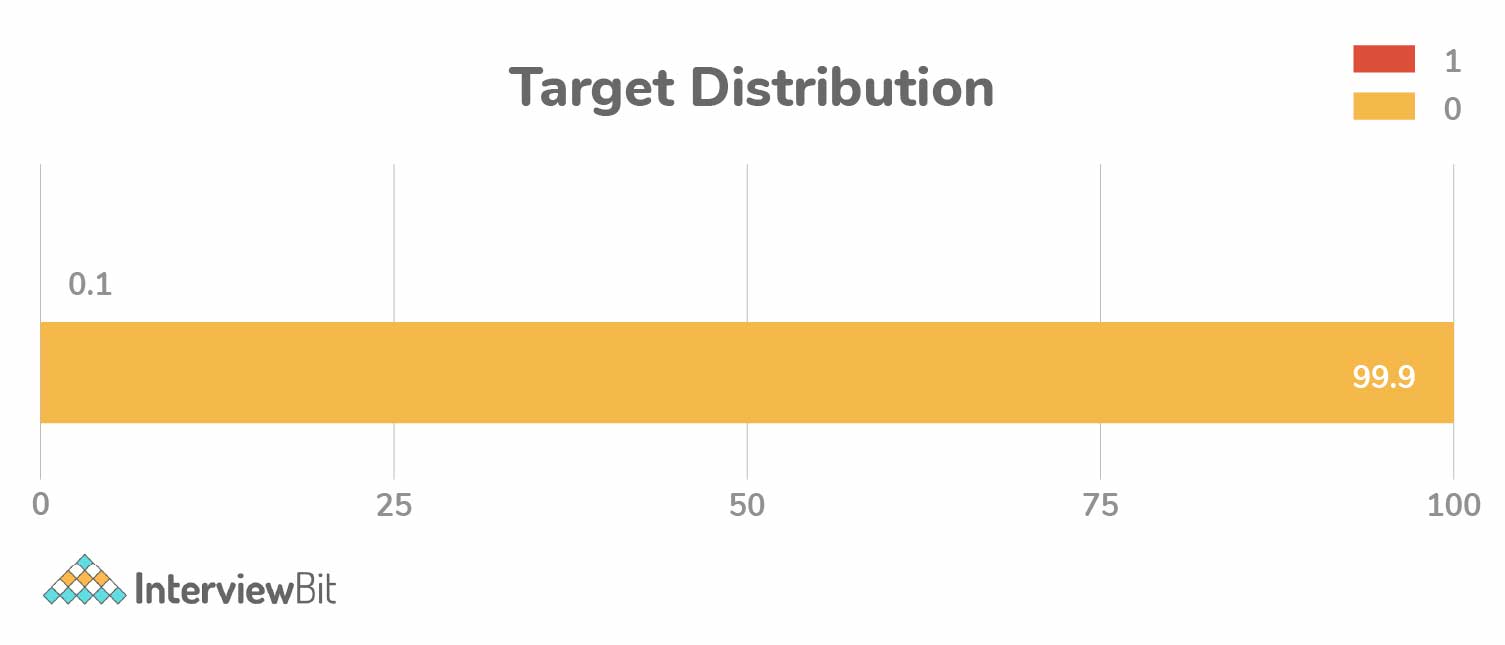

To begin with, the data is explored to understand the patterns in each variable. Basic plots such as histograms and box plots are used to check for outliers, class imbalance, missing values, and other anomalies in the dataset. Data exploration and data pre-processing are closely linked and are often done together.
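The checks that histograms and box plots support visually can also be computed directly. This pandas sketch, on a made-up table, reports the share of missing values, the class balance of a binary target, and outliers by the 1.5 * IQR rule commonly used for box-plot whiskers. The column names are hypothetical.

```python
import pandas as pd

# Made-up usage data with one missing value and one extreme value.
df = pd.DataFrame({
    "minutes_per_week": [30, 45, 50, 40, 35, 600, None, 55],
    "active_next_30d": [1, 1, 1, 0, 1, 1, 1, 1],
})

# Missingness: share of missing values in each column.
missing_share = df.isna().mean()

# Class imbalance: share of each value of the binary target.
class_share = df["active_next_30d"].value_counts(normalize=True)

# Outliers: values more than 1.5 * IQR beyond the quartiles (NaN is ignored).
col = df["minutes_per_week"]
q1, q3 = col.quantile(0.25), col.quantile(0.75)
iqr = q3 - q1
outliers = col[(col < q1 - 1.5 * iqr) | (col > q3 + 1.5 * iqr)]

print(missing_share, class_share, outliers, sep="\n\n")
```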

Step 4: Pre-process the data:

To get a reliable, reproducible, and unbiased analysis, certain pre-processing steps need to be followed. In my recent study, I followed the steps below; these are standard steps in almost any analysis:

- Data cleaning and treating missing values: Data often comes with missing values, and getting quality data is always a struggle, so missing values need to be identified and treated (for example, by removing or imputing them).

- Standardization/normalization (if needed): Variables in a dataset often span very different ranges. Standardizing or normalizing them brings them to a common scale, which is a prerequisite for some machine learning models (for example, distance-based methods such as k-nearest neighbors).

- Outlier detection: It is important to know whether there are any anomalies in the dataset and to treat them if required; otherwise, the results may be skewed.

- Train dataset: Models are trained on the training dataset.

- Test dataset: Once the model is built on the training dataset, it is evaluated on the test dataset to check its performance.
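A NumPy sketch of these pre-processing steps on synthetic data. It assumes one common ordering that differs from the list above: the train/test split comes first, and the imputation medians and standardization statistics are computed on the training data only, so nothing about the test set leaks into training. The |z| > 3 outlier threshold is a rule-of-thumb assumption.

```python
import numpy as np

rng = np.random.default_rng(0)
# Synthetic data: two features on very different scales, with a few missing values.
X = np.column_stack([rng.normal(50, 20, 100), rng.normal(0.5, 0.1, 100)])
X[[3, 17, 42], 0] = np.nan
y = rng.integers(0, 2, 100)

# 1. Split first, so the test set stays untouched by everything below.
idx = rng.permutation(len(X))
train_idx, test_idx = idx[:80], idx[80:]
X_train, X_test = X[train_idx], X[test_idx]
y_train, y_test = y[train_idx], y[test_idx]

# 2. Missing values: impute with each column's training-set median.
medians = np.nanmedian(X_train, axis=0)
X_train = np.where(np.isnan(X_train), medians, X_train)
X_test = np.where(np.isnan(X_test), medians, X_test)

# 3. Standardization: z-scores using the training mean and standard deviation.
mu, sigma = X_train.mean(axis=0), X_train.std(axis=0)
X_train_std = (X_train - mu) / sigma
X_test_std = (X_test - mu) / sigma

# 4. Outlier flag: any feature with |z| > 3 in a training row.
outlier_rows = np.any(np.abs(X_train_std) > 3, axis=1)
print(X_train_std.shape, X_test_std.shape, int(outlier_rows.sum()))
```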

The pre-processing step is the same for real-life data science problems and competitions alike. Now that the data is pre-processed, we can move on to defining the model evaluation parameters and exploring the data further.

Step 5: Define model evaluation parameters:

Settling on the right parameters for assessing a model is critical before performing the analysis.

Depending on the nature of the problem and what matters most to the stakeholders, one needs to define the model evaluation parameters. Some widely used model evaluation metrics are listed below:

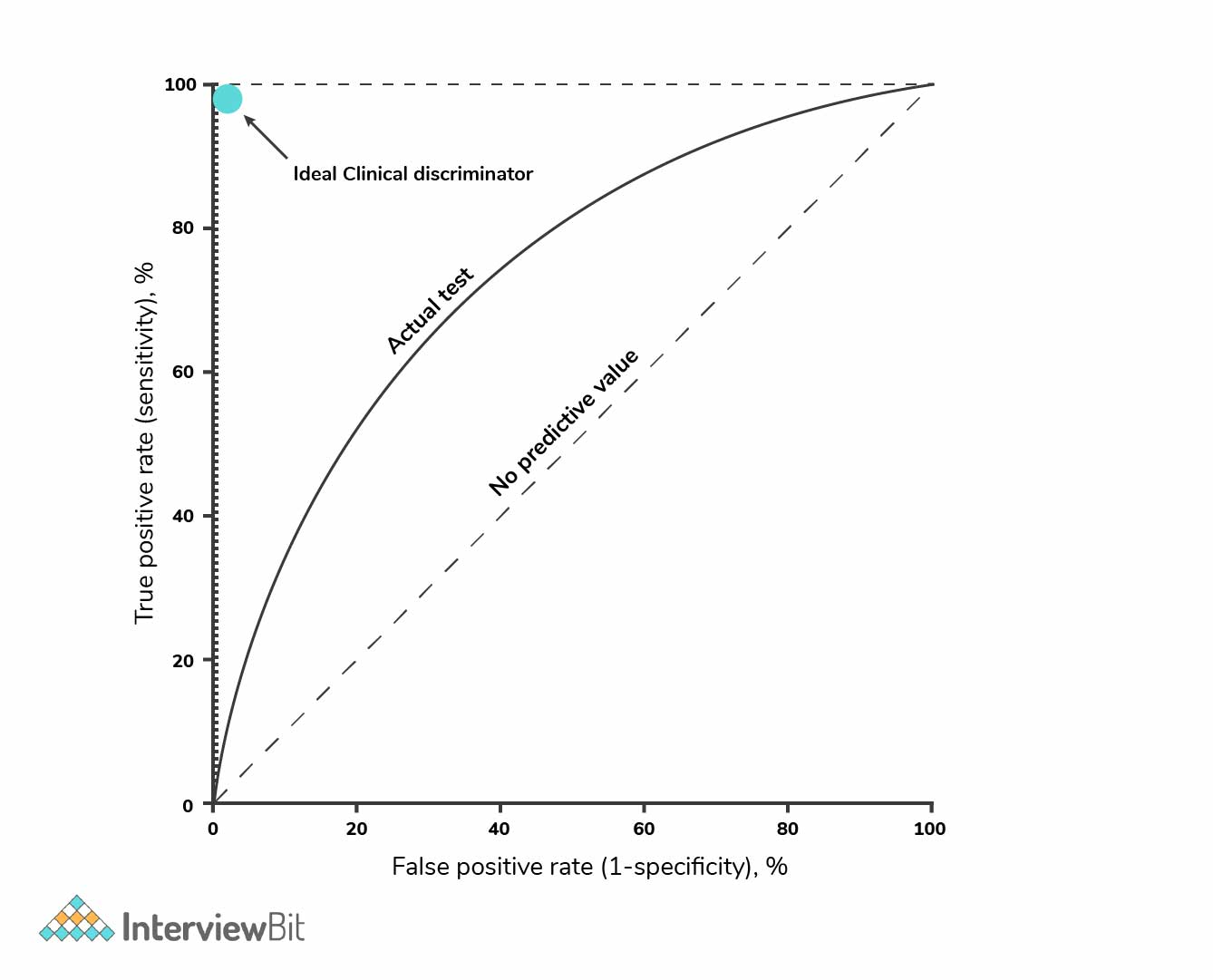

- Receiver Operating Characteristic (ROC): This is a visualization tool that plots the true positive rate of a binary classifier against its false positive rate across classification thresholds. ROC curves can be used to compare models by measuring the area under the curve (AUC), which ranges from 0.0 to 1.0. The greater this area, the better the model separates the positive class from the negative class.

- Classification Accuracy/Accuracy

- Confusion matrix

- Mean Absolute Error

- Mean Squared Error

- Precision, Recall

Model performance should be evaluated on the test dataset created during the pre-processing step; this test dataset should remain untouched during the entire model training process.
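To make the metrics above concrete, here is a plain-Python sketch of the confusion-matrix counts, accuracy, precision, recall (the true positive rate), ROC AUC, MAE, and MSE. The AUC is computed as the probability that a randomly chosen positive is scored above a randomly chosen negative (ties count half), which equals the area under the ROC curve. The toy labels, scores, and the 0.5 threshold are made up.

```python
def confusion_counts(y_true, y_pred):
    """Return (TP, TN, FP, FN) for binary labels 0/1."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == 1 and p == 1 for t, p in pairs)
    tn = sum(t == 0 and p == 0 for t, p in pairs)
    fp = sum(t == 0 and p == 1 for t, p in pairs)
    fn = sum(t == 1 and p == 0 for t, p in pairs)
    return tp, tn, fp, fn


def classification_metrics(y_true, y_pred):
    tp, tn, fp, fn = confusion_counts(y_true, y_pred)
    return {
        "accuracy": (tp + tn) / len(y_true),
        "precision": tp / (tp + fp) if tp + fp else 0.0,
        "recall": tp / (tp + fn) if tp + fn else 0.0,  # also the true positive rate
    }


def roc_auc(y_true, scores):
    """Share of (positive, negative) pairs where the positive gets the higher score."""
    pos = [s for s, t in zip(scores, y_true) if t == 1]
    neg = [s for s, t in zip(scores, y_true) if t == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


def mae(y_true, y_pred):
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


def mse(y_true, y_pred):
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


y_true = [1, 0, 1, 1, 0, 0]
scores = [0.9, 0.3, 0.6, 0.4, 0.2, 0.7]
y_pred = [int(s >= 0.5) for s in scores]  # threshold the scores into class labels
print(confusion_counts(y_true, y_pred))   # (2, 2, 1, 1)
print(classification_metrics(y_true, y_pred))
print(roc_auc(y_true, scores))
print(mae([3.0, 5.0, 2.5], [2.5, 5.0, 4.0]), mse([3.0, 5.0, 2.5], [2.5, 5.0, 4.0]))
```

Accuracy, precision, recall, and the confusion matrix depend on the chosen threshold, whereas ROC AUC summarizes performance across all thresholds; MAE and MSE apply to regression targets instead.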

Coming back to the customer retention analysis I worked on, my goal was to predict which users would leave the platform or become non-active learners. In this case, I picked a model with a good true positive rate in its confusion matrix. Here, true positives are the cases in which the model predicted a positive result (i.e., the user left the platform or became a non-active learner) and the actual outcome was also positive. Let's not worry about how to pick the right model evaluation parameter for now; I will explain it in detail in the next articles in the series.

Data Science challenges: Often, the model evaluation parameters are given in the challenge.

Step 7: Perform feature engineering:

This step is performed to determine:

- The important features to be used in the model (basically, we need to remove redundant features, if any). Metrics such as AIC and BIC are used to identify redundant features, and R provides tools such as stepAIC (from the MASS package, supporting forward and backward feature selection) to help perform these steps. Algorithms such as Boruta are also useful for understanding feature importance.
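The idea behind AIC-based forward selection can be sketched in NumPy. This is a plain forward stepwise search for a linear regression (not a port of R's stepAIC, and not Boruta): starting from an intercept-only model, it repeatedly adds the feature that lowers AIC the most and stops when no remaining feature helps. AIC is computed as n * ln(RSS/n) + 2k, which is adequate for comparing Gaussian linear models on the same data; the synthetic data and feature names are assumptions.

```python
import numpy as np


def gaussian_aic(X, y):
    """AIC of an OLS fit with Gaussian errors, up to an additive constant."""
    n, k = X.shape
    coef, *_ = np.linalg.lstsq(X, y, rcond=None)
    rss = float(np.sum((y - X @ coef) ** 2))
    return n * np.log(rss / n) + 2 * k  # use k * np.log(n) instead of 2 * k for BIC


def forward_select(X, y, names):
    """Greedily add the feature that lowers AIC most; stop when none does."""
    intercept = np.ones((len(y), 1))
    selected, remaining = [], list(range(X.shape[1]))
    best_aic = gaussian_aic(intercept, y)
    while remaining:
        candidates = [
            (gaussian_aic(np.column_stack([intercept, X[:, selected + [j]]]), y), j)
            for j in remaining
        ]
        aic, j = min(candidates)
        if aic >= best_aic:
            break  # no remaining feature improves AIC
        best_aic = aic
        selected.append(j)
        remaining.remove(j)
    return [names[j] for j in selected], best_aic


# Synthetic data: only f0 and f2 actually drive y.
rng = np.random.default_rng(1)
X = rng.normal(size=(200, 4))
y = 3 * X[:, 0] - 2 * X[:, 2] + rng.normal(scale=0.5, size=200)
chosen, aic = forward_select(X, y, ["f0", "f1", "f2", "f3"])
print(chosen, aic)
```

Because AIC trades fit against a fixed penalty per feature, a pure-noise feature can occasionally slip in; BIC's larger penalty makes that less likely.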

In my case, I used Boruta to identify the important features required for applying a machine learning model. In general, feature engineering has the following steps: