Logistic Regression

Mar 31, 2019


STAT E-150 Statistical Methods: Logistic Regression

So far we have considered regression analyses where the response variables are quantitative. What if this is not the case? If a response variable is categorical a different regression model applies, called logistic regression.

A categorical variable that has only two possible values is called a binary variable. We can represent these two outcomes as 1 to represent the presence of some condition (“success”) and 0 to represent the absence of the condition (“failure”). The logistic regression model describes how the probability of “success” is related to the values of the explanatory variables, which can be categorical or quantitative.

Logistic regression models work with odds rather than proportions. The odds are just the ratio of the proportions for the two possible outcomes: if π is the proportion for one outcome, then 1 − π is the proportion for the second outcome, and the odds of the first outcome occurring are $\frac{\pi}{1-\pi}$.

Here's an example: Suppose that a coin is weighted so that heads are more likely than tails, with P(heads) = .6. Then P(tails) = 1 − P(heads) = 1 − .6 = .4. The odds of getting heads in a toss of this coin are .6/.4 = 1.5. The odds of getting tails in a toss of this coin are .4/.6 ≈ .667. The odds ratio is 1.5/.667 = 2.25. This tells us that the odds of getting "heads" are 2.25 times the odds of getting "tails". Note that this is a ratio of odds, not of probabilities: heads itself is .6/.4 = 1.5 times as likely as tails.
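The coin arithmetic can be checked with a few lines of Python. This is a minimal sketch; the helper name `odds` is our own, not something from the course materials. It also prints the probability ratio, to make the difference between "twice as likely" and "twice the odds" concrete.

```python
def odds(p):
    """Odds of an event with probability p (assumes 0 < p < 1)."""
    return p / (1 - p)

p_heads = 0.6
p_tails = 1 - p_heads                  # .4

odds_heads = odds(p_heads)             # .6 / .4 = 1.5
odds_tails = odds(p_tails)             # .4 / .6, about .667
odds_ratio = odds_heads / odds_tails   # 1.5 / .667 = 2.25
prob_ratio = p_heads / p_tails         # 1.5: how much more likely heads is than tails

print(round(odds_heads, 3), round(odds_tails, 3), round(odds_ratio, 3), round(prob_ratio, 3))
```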

You can also convert the odds of an event back to the probability of the event: for an event A, $P(A) = \frac{\text{odds}}{1 + \text{odds}}$. For example, if the odds of a horse winning are 9 to 1, then the probability of the horse winning is 9/(1 + 9) = .9.
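A minimal sketch of the reverse conversion, using Python's `fractions.Fraction` so the horse-race answer comes out exactly; the function name `prob_from_odds` is hypothetical. Odds of "9 to 1" are treated as the number 9/1 = 9.

```python
from fractions import Fraction

def prob_from_odds(odds):
    """P(A) = odds / (1 + odds)."""
    return odds / (1 + odds)

odds_win = Fraction(9, 1)            # "9 to 1" in favor of winning
p_win = prob_from_odds(odds_win)     # 9 / (1 + 9)
print(p_win, float(p_win))

# Round trip back to odds: p / (1 - p)
print(p_win / (1 - p_win))
```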

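The Logistic Regression Model: The relationship between a binary response variable and a single quantitative predictor variable is described by an S-shaped curve. Here is a plot of π vs. x for different logistic regression models:

[Figure: S-shaped curves of π = P(Y = 1) vs. x for several logistic regression models]

The points on each curve represent P(Y = 1) for each value of x. The associated model is the logistic, or logit, model:

$$\pi = \frac{e^{\beta_0 + \beta_1 x}}{1 + e^{\beta_0 + \beta_1 x}}$$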

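The general logistic regression model is $\log\left(\frac{\pi}{1-\pi}\right) = \beta_0 + \beta_1 x_1 + \beta_2 x_2 + \cdots + \beta_k x_k$, where E(Y) = π, the probability of success. The $x_i$ are independent (explanatory) variables, which can be quantitative or qualitative.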

Odds and log(odds)
• Let π = P(Y = 1) be a probability with 0 < π < 1. Then the odds that Y = 1 is the ratio odds = $\frac{\pi}{1-\pi}$, and so log(odds) = $\log\left(\frac{\pi}{1-\pi}\right)$.

This transformation from π to log(odds) is called the logistic or logit transformation.
• The relationship is one-to-one:
• For every value of π (except for 0 and 1) there is one and only one value of $\log\left(\frac{\pi}{1-\pi}\right)$.

The log(odds) can take any value from −∞ to ∞, and so we can use a linear predictor.
• That is, we can model the log odds as a linear function of the explanatory variable:
• y = $\log\left(\frac{\pi}{1-\pi}\right)$ = β0 + β1x
• (To verify this, solve the probability form of the model for π/(1 − π) and then take the log of both sides.)
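For completeness, here is the verification step written out, starting from the probability form of the model $\pi = e^{\beta_0 + \beta_1 x}/(1 + e^{\beta_0 + \beta_1 x})$. The shorthand $\eta = \beta_0 + \beta_1 x$ is our own notation, not the slides'.

```latex
\begin{aligned}
\pi &= \frac{e^{\eta}}{1 + e^{\eta}}, \qquad
1 - \pi = \frac{1 + e^{\eta} - e^{\eta}}{1 + e^{\eta}} = \frac{1}{1 + e^{\eta}} \\
\frac{\pi}{1 - \pi} &= \frac{e^{\eta} / (1 + e^{\eta})}{1 / (1 + e^{\eta})} = e^{\eta} \\
\log\left(\frac{\pi}{1 - \pi}\right) &= \eta = \beta_0 + \beta_1 x
\end{aligned}
```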

For any fixed value of the predictor x, there are four probabilities: If the model is exactly correct, then p = π and the two fitted values estimate the same number.

To go from log(odds) to odds, use the exponential function $e^x$:
1. odds = $e^{\log(\text{odds})}$
2. You can check that if odds = π/(1 − π), then you can solve for π to find that π = odds/(1 + odds).
3. Since log(odds) = β0 + β1x, we have the result $\pi = \frac{e^{\log(\text{odds})}}{1 + e^{\log(\text{odds})}} = \frac{e^{\beta_0 + \beta_1 x}}{1 + e^{\beta_0 + \beta_1 x}}$
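The three steps above amount to a pair of inverse functions. The following sketch (the names `logit` and `inv_logit` are our own) converts a few probabilities to log(odds) and back, showing that the round trip recovers π and that any real log(odds) maps into (0, 1).

```python
import math

def logit(p):
    """log(odds) = log(p / (1 - p)) for 0 < p < 1 (natural log)."""
    return math.log(p / (1 - p))

def inv_logit(z):
    """pi = e^z / (1 + e^z); written this way to match the slides.
    (For very large z, 1 / (1 + e^(-z)) avoids overflow.)"""
    return math.exp(z) / (1 + math.exp(z))

for p in (0.1, 0.5, 0.6, 0.9):
    z = logit(p)
    print(p, round(z, 4), round(inv_logit(z), 4))
```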

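The Logistic Regression Model: The logistic regression model for the probability of success π of a binary response variable based on a single predictor x is:
Logit form: $\log\left(\frac{\pi}{1-\pi}\right) = \beta_0 + \beta_1 x$
Probability form: $\pi = \frac{e^{\beta_0 + \beta_1 x}}{1 + e^{\beta_0 + \beta_1 x}}$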

Example: A study was conducted to analyze behavioral variables and stress in people recently diagnosed with cancer. For our purposes we will look at patients who have been in the study for at least a year, and the dependent variable (Outcome) is coded 1 to indicate that the patient is improved or in complete remission, and 0 if the patient has not improved or has died. The predictor variable is the survival rating assigned by the patient's physician at the time of diagnosis. This is a number between 0 and 100 and represents the estimated probability of survival at five years. Out of 66 cases there are 48 patients who have improved and 18 who have not.

The scatterplot shows us that a linear regression analysis is not appropriate for these data. The scatterplot clearly has no linear trend, but it does show that the proportion of people who improve is much higher when the survival rate is high, as would be expected. However, if we transform from whether the patient has improved to the odds of improvement, and then take the log of the odds, we obtain a quantity that we can model as a linear function of the survival rate.

Let p = the probability of improvement. Then 1 − p is the probability of no improvement. We will look for an equation of the form $\log\left(\frac{p}{1-p}\right) = \beta_0 + \beta_1 \cdot \text{SurvRate}$. Here β1 will be the amount of increase in the log odds for a one-unit increase in SurvRate.

Here are the results of this analysis: We can see that the logistic regression equation is log(odds) = .081xSurvRate - 2.684
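To see what the fitted equation implies, this sketch plugs a few SurvRate values into log(odds) = .081xSurvRate − 2.684 and converts to probabilities. It uses the rounded coefficients shown in the output, so results are approximate; the function name `prob_improve` is hypothetical. It also computes the SurvRate at which the predicted probability of improvement is one half (log odds = 0).

```python
import math

B0, B1 = -2.684, 0.081   # rounded coefficients from the output

def prob_improve(surv_rate):
    """Predicted P(improvement) from log(odds) = B1 * SurvRate + B0."""
    log_odds = B1 * surv_rate + B0
    return 1 / (1 + math.exp(-log_odds))   # same as e^z / (1 + e^z)

for s in (0, 25, 50, 75, 100):
    print(s, round(prob_improve(s), 3))

# SurvRate where log(odds) = 0, i.e. predicted probability = .5
midpoint = -B0 / B1
print(round(midpoint, 1))
```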

Assessing the Model: In linear regression, we used the p-values associated with the test statistic t to assess the contribution of each predictor. In logistic regression, we can use the Wald statistic in the same way. In this example, the Wald statistic for the predictor is 17.755, which is significant at the .05 level. This is evidence that SurvRate is a significant predictor in this model.
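For a single coefficient, the Wald statistic is (B/SE)², which is compared with a chi-square distribution with 1 degree of freedom. A chi-square(1) variable is the square of a standard normal Z, so its upper-tail probability can be computed with `math.erfc` from the standard library, as in this sketch (the helper name is our own):

```python
import math

def chi2_1df_pvalue(stat):
    """Upper-tail p-value for a chi-square statistic with 1 df.
    If W = Z^2, then P(W > w) = P(|Z| > sqrt(w)) = erfc(sqrt(w / 2))."""
    return math.erfc(math.sqrt(stat / 2))

wald = 17.755            # Wald statistic for SurvRate from the output
p_value = chi2_1df_pvalue(wald)
print(p_value)           # far below .05
```

As a sanity check, the familiar 1-df critical value 3.841 gives a p-value of about .05.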

H0: β1 = 0
Ha: β1 ≠ 0
Since the p-value is close to zero, the null hypothesis is rejected. This indicates that the predicted survival rate is a useful indicator of the patient's outcome. The resulting regression equation is log(odds) = .081xSurvRate - 2.684

Here are scatterplots of the data and of the values predicted by the model:

Note how well the results fit the data: the fitted curve is quite close to the points in the lower left, rises rapidly across the points in the center, where the values of SurvRate have roughly equal numbers of patients who improve and who don't, and finally comes close to the cluster of points in the upper right. The predicted values all fall between 0 and 1.

SPSS takes an iterative approach to this solution: it begins with some starting values for β0 and β1, sees how well the estimated log odds fit the data, adjusts the coefficients, and then reexamines the fit. This continues until no further adjustment produces a better fit. What do all of the SPSS results tell us?
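The slides do not say exactly which algorithm SPSS uses, so as a hedged illustration of the "start, adjust, reexamine" idea, here is a minimal Newton-Raphson fit (equivalent to iteratively reweighted least squares) of the one-predictor model in plain Python. The function `fit_logistic` and the data are hypothetical toy values, not the 66-patient study. Like an Iteration History table, it records −2LL at each iteration.

```python
import math

def fit_logistic(xs, ys, max_iter=25, tol=1e-10):
    """Maximum-likelihood fit of log(p/(1-p)) = b0 + b1*x by Newton-Raphson."""
    b0, b1 = 0.0, 0.0                # starting values
    history = []                     # -2LL at the start of each iteration
    for _ in range(max_iter):
        g0 = g1 = 0.0                # score: gradient of the log-likelihood
        i00 = i01 = i11 = 0.0        # information matrix (minus the Hessian)
        ll = 0.0
        for x, y in zip(xs, ys):
            p = 1.0 / (1.0 + math.exp(-(b0 + b1 * x)))
            ll += math.log(p) if y == 1 else math.log(1.0 - p)
            g0 += y - p
            g1 += (y - p) * x
            w = p * (1.0 - p)
            i00 += w
            i01 += w * x
            i11 += w * x * x
        history.append(-2.0 * ll)
        # Newton step: solve [[i00, i01], [i01, i11]] * step = [g0, g1]
        det = i00 * i11 - i01 * i01
        step0 = (i11 * g0 - i01 * g1) / det
        step1 = (i00 * g1 - i01 * g0) / det
        b0 += step0
        b1 += step1
        if abs(step0) + abs(step1) < tol:   # coefficients have stopped changing
            break
    return b0, b1, history

# Hypothetical toy data (predictor value, 0/1 outcome); the groups overlap,
# so the maximum-likelihood estimates exist
xs = [10, 20, 30, 40, 50, 60, 70, 80]
ys = [0, 0, 1, 0, 1, 0, 1, 1]

b0, b1, history = fit_logistic(xs, ys)
print(round(b0, 3), round(b1, 4))
print([round(v, 3) for v in history])
```

With all coefficients starting at 0, every predicted probability is .5, so the first −2LL is 16·log 2 ≈ 11.09 for these 8 cases; later entries decrease as the fit improves.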

Starting with Block 0: Beginning Block. The Case Processing Summary tells us that all 66 cases were included:

The Variables in the Equation table shows that at this first step only the constant was used. The second table lists the variables that were not included in this model; it indicates that if the second variable, SurvRate, were included, it would be a significant predictor:

The Iteration History shows the results for the model with only the constant. Since the second variable, SurvRate, is not included, there is little change from one iteration to the next. The −2 Log likelihood (−2LL) can be used to assess how well a model fits the data. It is computed by summing, over all cases, the log of the probability the model assigns to the outcome that was actually observed, and multiplying that sum by −2. The lower the −2LL value, the better the fit.
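As a check on that definition, this sketch computes −2LL for the constant-only model. With no predictor, the maximum-likelihood estimate gives every case the same predicted probability, the sample proportion 48/66. The result matches the 77.346 reported for the smaller model. The function name `minus_2ll` is our own.

```python
import math

def minus_2ll(probs, ys):
    """-2 * sum of log(predicted probability of the observed outcome)."""
    total = 0.0
    for p, y in zip(probs, ys):
        total += math.log(p) if y == 1 else math.log(1 - p)
    return -2 * total

# Constant-only model: 48 improved (1), 18 not improved (0)
ys = [1] * 48 + [0] * 18
p0 = sum(ys) / len(ys)                  # 48/66
neg2ll_block0 = minus_2ll([p0] * len(ys), ys)
print(round(neg2ll_block0, 3))
```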

You can see from the Classification Table that at this point no predictor is used to classify the cases: with only the constant in the model, every case is predicted to be in the larger category (improved). You can also see that there were 48 patients who improved and 18 who did not.

One way to test the overall model is the Hosmer-Lemeshow goodness-of-fit test, a chi-square test comparing the observed and expected frequencies of subjects falling in the two categories of the response variable, within groups of cases formed by their predicted probabilities. Large values of χ² (and the corresponding small p-values) indicate a lack of fit for the model. This table shows a large p-value, so there is no evidence of lack of fit; the model appears to fit the data well:
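A minimal sketch of the Hosmer-Lemeshow statistic, under simplifying assumptions: cases are sorted by predicted probability and cut into equal-size groups, and the statistic sums (observed − expected)² / (n·p̄·(1 − p̄)) over groups, with groups − 2 degrees of freedom. SPSS's exact handling of tied probabilities may differ. To stay within the standard library, the p-value uses the closed-form chi-square tail that holds only for even df (the usual 10 groups give df = 8). Function names and the toy data are hypothetical.

```python
import math

def chi2_sf_even_df(x, df):
    """Upper-tail chi-square probability P(X > x); closed form valid only for even df."""
    if df <= 0 or df % 2 != 0:
        raise ValueError("this sketch only handles positive, even degrees of freedom")
    half = x / 2.0
    term = total = 1.0
    for i in range(1, df // 2):
        term *= half / i             # (x/2)^i / i!
        total += term
    return math.exp(-half) * total

def hosmer_lemeshow(probs, ys, groups=10):
    """Hosmer-Lemeshow statistic and p-value (df = groups - 2)."""
    pairs = sorted(zip(probs, ys))   # order cases by predicted probability
    n = len(pairs)
    stat = 0.0
    for g in range(groups):
        chunk = pairs[g * n // groups:(g + 1) * n // groups]
        n_g = len(chunk)
        observed = sum(y for _, y in chunk)   # observed successes in the group
        expected = sum(p for p, _ in chunk)   # expected successes = sum of predicted probs
        p_bar = expected / n_g
        stat += (observed - expected) ** 2 / (n_g * p_bar * (1 - p_bar))
    return stat, chi2_sf_even_df(stat, groups - 2)

# Hypothetical toy data in which observed successes equal expected in every group
probs = [0.2] * 5 + [0.4] * 5 + [0.6] * 5 + [0.8] * 5
ys = [1, 0, 0, 0, 0] + [1, 1, 0, 0, 0] + [1, 1, 1, 0, 0] + [1, 1, 1, 1, 0]
stat, p_value = hosmer_lemeshow(probs, ys, groups=4)
print(round(stat, 6), round(p_value, 6))   # statistic near 0, p-value near 1: no lack of fit
```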

Now consider the next block, Block 1: Method = Enter. The Iteration History table shows the progress as the model is reassessed; the coefficient of SurvRate converges to .081. To assess whether this larger model provides a significantly better fit than the smaller model, consider the difference between the −2LL values. The value for the smaller model was 77.346, and the value for the model with SurvRate included is 37.323. The difference, 77.346 − 37.323 = 40.023, is a chi-square statistic with 1 degree of freedom (one added parameter), and its p-value is far below .05, indicating that the larger model is a significantly better fit.
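The comparison of the two −2LL values is a likelihood-ratio test. This sketch computes the chi-square statistic and its 1-df p-value from the two values reported in the output, using the standard-library identity P(χ²₁ > w) = erfc(√(w/2)).

```python
import math

neg2ll_small = 77.346   # constant only (Block 0)
neg2ll_large = 37.323   # constant + SurvRate (Block 1)

chi_sq = neg2ll_small - neg2ll_large        # 40.023
df = 1                                      # one additional coefficient
p_value = math.erfc(math.sqrt(chi_sq / 2))  # upper tail of chi-square with 1 df
print(round(chi_sq, 3), df, p_value)
```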

This table now shows that SurvRate is a significant predictor (p is close to 0), and we can find the coefficients in the resulting regression equation, y = log(odds) = .081xSurvRate - 2.684

Why is the odds ratio Exp(B)? Suppose that we have the logistic regression equation y = log(odds) = β1x + β0. Then β1 represents the change in y associated with a unit change in x. That is, y will increase by β1 when x increases by 1. But y is log(odds). So log(odds) will increase by β1 when x increases by 1.

Exp(B) is an indicator of the change in odds resulting from a unit change in the predictor. Let's see how this happens. Suppose we start with the regression equation y = β1x + β0. Now if x increases by 1, we have y = β1(x + 1) + β0. How much has y changed?
New value − old value = [β1(x + 1) + β0] − [β1x + β0] = [β1x + β1 + β0] − [β1x + β0] = β1
So y has increased by β1. That is, β1 is the change in y associated with a unit change in x. But y = log(odds), so now we know that log(odds) will increase by β1 when x increases by 1.

If log(odds) changes by β1, then the odds are multiplied by $e^{\beta_1}$. In other words, the multiplicative change in the odds associated with a unit change in x is $e^{\beta_1}$, which can be denoted as Exp(β1), or by Exp(B) in SPSS. In our example, then, with each unit increase in SurvRate, y = log(odds) will increase by .081. That is, the odds of improving are multiplied by a factor of 1.085 for each unit increase in SurvRate.
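A quick numerical check of this argument, as a sketch: the ratio odds(x + 1)/odds(x) is the same at every starting value of SurvRate and equals e^.081. Computed from the rounded coefficient .081 this is about 1.084; the 1.085 in the SPSS output presumably reflects the unrounded B. The function name `odds_improve` is hypothetical.

```python
import math

B0, B1 = -2.684, 0.081   # rounded coefficients from the output

def odds_improve(surv_rate):
    """Odds of improvement: exp(log(odds)) = exp(B1 * SurvRate + B0)."""
    return math.exp(B1 * surv_rate + B0)

# The one-unit odds ratio does not depend on where you start
for s in (20, 40, 60):
    print(s, round(odds_improve(s + 1) / odds_improve(s), 4))

print(round(math.exp(B1), 4))        # Exp(B) from the rounded B
print(round(math.exp(10 * B1), 3))   # odds multiplier for a 10-unit increase
```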

Another example:
• The sales director for a chain of appliance stores wants to find out what circumstances encourage customers to purchase extended warranties after a major appliance purchase.
• The response variable is an indicator of whether or not a warranty is purchased.
• The predictor variables are:
  - Customer gender
  - Age of the customer
  - Whether a gift is offered with the warranty
  - Price of the appliance
  - Race of the customer (coded with three indicator variables to represent White, African-American, and Hispanic)

Here are the results with all predictors: The odds ratio is shown in the Exp(B) column. This is the multiplicative change in the odds for each unit change in the predictor. For example, the odds of a customer purchasing a warranty are multiplied by 1.096 for each additional year of the customer's age. Which of these variables might be removed from this model? Use α = .10.

If the analysis is rerun with only three predictors, these are the results: In this model, all three predictors are significant. These results indicate that the odds that a customer who is offered a gift will purchase a warranty are more than ten times the corresponding odds for a customer who has the same other characteristics but is not offered a gift. Also, the odds ratio for Age is greater than 1. This tells us that, with the other predictors held fixed, older buyers are more likely to purchase a warranty.

The resulting regression equation is Log(odds) = 2.339xGift + .064xAge - 6.096

Note: in this example, the coefficient of Price is too small to be expressed in three decimal places. This situation can be remedied by dividing the price by 100, and creating a new model.

The resulting equation is now Log(odds) = 2.339xGift + .064xAge + .040xPrice100 - 6.096
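To illustrate how the final equation is used, this sketch predicts warranty-purchase probabilities for a hypothetical 50-year-old customer buying a $1,000 appliance, with and without a gift. Treating Price as dollars, Age as years, and Gift as a 0/1 indicator are assumptions on our part, as are the function name and the customer. The odds ratio between the two predictions equals e^2.339 ≈ 10.37, the "more than ten times" effect of the gift. The last lines show why rescaling works: .040 per $100 is .0004 per dollar, which rounds to .000 at three decimals, yet the model's predictions are unchanged.

```python
import math

# Coefficients from the model with Price100 = Price / 100
B_GIFT, B_AGE, B_PRICE100, B0 = 2.339, 0.064, 0.040, -6.096

def prob_warranty(gift, age, price):
    """Predicted P(warranty); gift is 0/1, age in years, price in dollars (assumed units)."""
    price100 = price / 100
    log_odds = B_GIFT * gift + B_AGE * age + B_PRICE100 * price100 + B0
    return 1 / (1 + math.exp(-log_odds))

# Hypothetical customer: age 50, $1,000 appliance
p_gift = prob_warranty(1, 50, 1000)
p_no_gift = prob_warranty(0, 50, 1000)
odds_ratio = (p_gift / (1 - p_gift)) / (p_no_gift / (1 - p_no_gift))
print(round(p_gift, 3), round(p_no_gift, 3), round(odds_ratio, 2))

# Dividing Price by 100 multiplies its coefficient by 100; the log odds are the same
b_price_per_dollar = B_PRICE100 / 100
same = math.isclose(B_PRICE100 * (1000 / 100), b_price_per_dollar * 1000)
print(b_price_per_dollar, same)
```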

To produce the output for your analysis:
• Choose Analyze > Regression > Binary Logistic.
• Choose the response and predictor variables.
• Click on Options and check CI for Exp(B) to create the confidence intervals. Click on Continue.
• Click on Save and check the Probabilities box. Click on Continue and then on OK.

To produce the graph of the results, create a simple scatterplot using Predicted Probability as the dependent variable, and the predictor as a covariate.

- More by User

Logistic regression

Logistic regression. A quick intro. Why Logistic Regression?. Big idea: dependent variable is a dichotomy (though can use for more than 2 categories i.e. multinomial logistic regression) Why would we use?

876 views • 20 slides

Logistic Regression. (An Introduction). Objective : Modeling a binary response (success, failure) or probability through a set of predictors X1…Xp

1.16k views • 14 slides

Logistic Regression. Aims. When and Why do we Use Logistic Regression ? Binary Multinomial Theory Behind Logistic Regression Assessing the Model Assessing predictors Interpreting Logistic Regression. When And Why.

710 views • 28 slides

Logistic Regression. Appiled Linear Statistical Models ,由 Neter 等著 Categorical Data Analysis ,由 Agresti 著. Logistic 回归. 当响应变量是定性变量时的非线性模型 两种 可能的结果,成功或失败,患病的或没 有 患病的,出席的或缺席的 实例 : CAD ( 心血管 疾病 ) 是年龄,体重,性别, 吸烟历史 ,血压的函数 吸烟 者或不吸烟者是家庭历史,同年龄组行 为 ,收入,年龄的函数 今年购买一辆汽车是收入,当前汽车的使用

734 views • 30 slides

Logistic Regression. Outline. Basic Concepts of Logistic Regression Finding Logistic Regression Coefficients using Excel’s Solver Significance Testing of the Logistic Regression Coefficients Testing the Fit of the Logistic Regression Model

1.46k views • 65 slides

Logistic regression. David Kauchak CS451 – Fall 2013. Admin. Assignment 7 CS Lunch on Thursday. Priors. Coin1 data: 3 Heads and 1 Tail Coin2 data: 30 Heads and 10 tails Coin3 data: 2 Tails Coin4 data: 497 Heads and 503 tails

769 views • 53 slides

Logistic Regression. Now with multinomial support!. An Introduction. Logistic regression is a method for analyzing relative probabilities between discrete outcomes (binary or categorical dependent variables) Binary outcome: standard logistic regression ie . Dead (1) or NonDead (0)

616 views • 10 slides

Logistic Regression. Introduction. What is it? It is an approach for calculating the odds of event happening vs other possibilities…Odds ratio is an important concept Lets discuss this with examples Why are we studying it? To use it for classification

393 views • 10 slides

Logistic Regression. Example using Peng (2002) data. The overall fit of the final model is shown by the −2 log-likelihood statistic. If the significance of the chi-square statistic is less than .05, then the model is a significant fit of the data. Choose a Grouped Scatter Plot.

304 views • 9 slides

Logistic Regression. Prof. navneet Goyal Department of computer Science Bits- pilani , pilani campus. Logistic Regression. Source of figure unknown. Logistic Regression. Linear Regression – relationship between a continuous response variable and a set of predictor variables

505 views • 12 slides

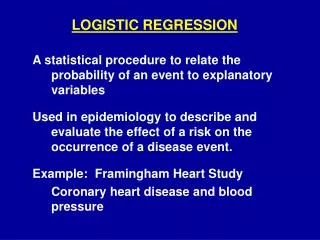

LOGISTIC REGRESSION

LOGISTIC REGRESSION. A statistical procedure to relate the probability of an event to explanatory variables Used in epidemiology to describe and evaluate the effect of a risk on the occurrence of a disease event. Example: Framingham Heart Study Coronary heart disease and blood pressure.

531 views • 22 slides

Logistic Regression. Debapriyo Majumdar Data Mining – Fall 2014 Indian Statistical Institute Kolkata September 1, 2014. Recall: Linear Regression. Assume: the relation is linear Then for a given x (=1800), predict the value of y

375 views • 19 slides

Logistic Regression. Biostatistics 510 March 15, 2007 Vanessa Perez. Logistic regression. Most important model for categorical response (y i ) data Categorical response with 2 levels ( binary : 0 and 1) Categorical response with ≥ 3 levels (nominal or ordinal)

844 views • 20 slides

Logistic Regression. Who intends to vote?. Scholars and politicians would both like to understand who voted. Imagine that you did a survey of voters after an election and you ask people if they voted.

1.03k views • 85 slides

Logistic Regression. 10701 /15781 Recitation February 5, 2008. Parts of the slides are from previous years’ recitation and lecture notes, and from Prof. Andrew Moore’s data mining tutorials. Discriminative Classifier. Learn P(Y|X) directly Logistic regression for binary classification:

326 views • 11 slides

Logistic Regression. Linear regression. Function f : X Y is a linear combination of input components. Binary classification. Two classes Our goal is to learn to classify correctly two types of examples Class 0 – labeled as 0, Class 1 – labeled as 1 We would like to learn

681 views • 30 slides

Logistic Regression. Psy 524 Ainsworth. What is Logistic Regression?. Form of regression that allows the prediction of discrete variables by a mix of continuous and discrete predictors.

515 views • 37 slides

Logistic Regression. What Type of Regression?. Dependent Variable – Y Continuous – e.g. sales, height Dummy Variable or Multiple Regression. What Type of Regression?. Dependent Variable – Y Continuous – e.g. sales, height Dummy Variable or Multiple Regression Dependent Variable – Y

561 views • 37 slides

Logistic Regression. Logistic Regression - Dichotomous Response variable and numeric and/or categorical explanatory variable(s) Goal: Model the probability of a particular as a function of the predictor variable(s) Problem: Probabilities are bounded between 0 and 1

517 views • 37 slides

Logistic regression. Recall the simple linear regression model: y = b 0 + b 1 x + e. where we are trying to predict a continuous dependent variable y from a continuous independent variable x. This model can be extended to Multiple linear regression model:

409 views • 29 slides

Logistic Regression. Logistic Regression - Binary Response variable and numeric and/or categorical explanatory variable(s) Goal: Model the probability of a particular outcome as a function of the predictor variable(s) Problem: Probabilities are bounded between 0 and 1.

377 views • 11 slides

