Understanding Data Presentations (Guide + Examples)

In this age of overwhelming information, the skill to effectively convey data has become extremely valuable. Initiating a discussion on data presentation types involves thoughtful consideration of the nature of your data and the message you aim to convey. Different types of visualizations serve distinct purposes. Whether you’re dealing with how to develop a report or simply trying to communicate complex information, how you present data influences how well your audience understands and engages with it. This extensive guide leads you through the different ways of data presentation.

What is a Data Presentation?

A data presentation is a slide deck that aims to disclose quantitative information to an audience through the use of visual formats and narrative techniques derived from data analysis, making complex data understandable and actionable. This process requires a series of tools, such as charts, graphs, tables, infographics, dashboards, and so on, supported by concise textual explanations to improve understanding and boost retention rate.

Data presentations require us to organize data in a format that allows the presenter to highlight trends, patterns, and insights so that the audience can act upon the shared information. In short, the goal of a data presentation is to enable viewers to grasp complicated concepts or trends quickly, facilitating informed decision-making or deeper analysis.

Data presentations go beyond the mere usage of graphical elements. Seasoned presenters combine visuals with the art of data storytelling, so the speech skillfully connects the points through a narrative that resonates with the audience. The purpose of the presentation (to inspire, persuade, inform, support decision-making processes, etc.) determines which data presentation format is best suited to help us in this journey.

What Should a Data Presentation Include?

To nail your upcoming data presentation, make sure to include the following elements:

- Clear Objectives: Understand the intent of your presentation before selecting the graphical layout and metaphors to make content easier to grasp.

- Engaging introduction: Use a powerful hook from the get-go. For instance, you can ask a big question or present a problem that your data will answer. Take a look at our guide on how to start a presentation for tips & insights.

- Structured Narrative: Your data presentation must tell a coherent story. This means a beginning where you present the context, a middle section in which you present the data, and an ending that uses a call-to-action. Check our guide on presentation structure for further information.

- Visual Elements: These are the charts, graphs, and other elements of visual communication we ought to use to present data. This article will cover one by one the different types of data representation methods we can use, and provide further guidance on choosing between them.

- Insights and Analysis: This is not just showcasing a graph and letting people get an idea about it. A proper data presentation includes the interpretation of that data, the reason why it’s included, and why it matters to your research.

- Conclusion & CTA: Ending your presentation with a call to action is necessary. Whether you intend to wow your audience into acquiring your services, inspire them to change the world, or whatever the purpose of your presentation, there must be a stage in which you convey all that you shared and show the path to staying in touch. Plan ahead whether you want to use a thank-you slide, a video presentation, or which method is apt and tailored to the kind of presentation you deliver.

- Q&A Session: After your speech is concluded, allocate 3-5 minutes for the audience to raise any questions about the information you disclosed. This is an extra chance to establish your authority on the topic. Check our guide on questions and answer sessions in presentations here.

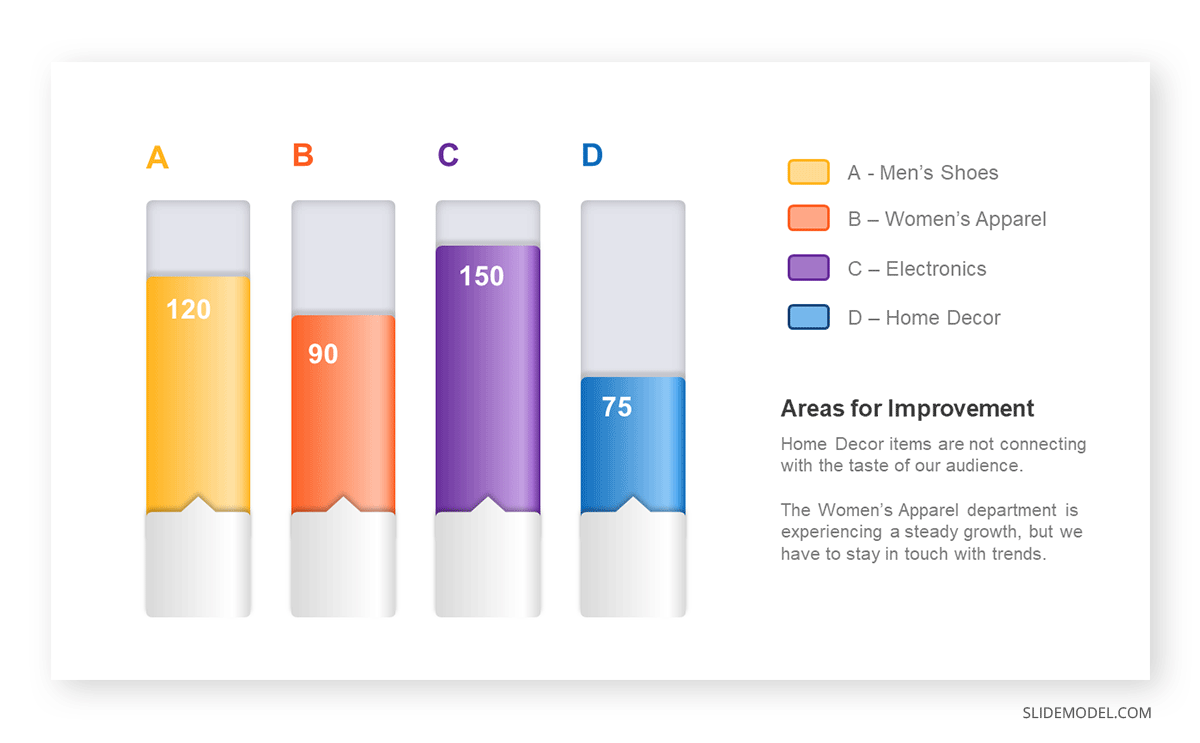

Bar Charts

Bar charts are a graphical representation of data using rectangular bars to show quantities or frequencies in an established category. They make it easy for readers to spot patterns or trends. Bar charts can be horizontal or vertical, although the vertical format is commonly known as a column chart. They display categorical, discrete, or continuous variables grouped in class intervals [1]. They include an axis and a set of labeled bars arranged horizontally or vertically. These bars represent the frequencies of variable values or the values themselves. The numbers on the y-axis of a vertical bar chart, or on the x-axis of a horizontal bar chart, are called the scale.

Real-Life Application of Bar Charts

Let’s say a sales manager is presenting sales figures to an audience. Using a bar chart, the manager follows these steps.

Step 1: Selecting Data

The first step is to identify the specific data you will present to your audience.

The sales manager has highlighted these products for the presentation.

- Product A: Men’s Shoes

- Product B: Women’s Apparel

- Product C: Electronics

- Product D: Home Decor

Step 2: Choosing Orientation

Opt for a vertical layout for simplicity. Vertical bar charts help compare different categories when there are not too many of them [1]. They can also help show trends. Here, a vertical bar chart is used in which each bar represents one of the four chosen products. Once the data is plotted, the height of each bar directly represents the sales performance of the respective product.

The tallest bar (Electronics, Product C) shows the highest sales. The shorter bars (Women’s Apparel, Product B, and Home Decor, Product D) need attention, as they indicate areas that require further analysis or strategies for improvement.

Step 3: Colorful Insights

Different colors are used to differentiate each product. It is essential to show a color-coded chart where the audience can distinguish between products.

- Men’s Shoes (Product A): Yellow

- Women’s Apparel (Product B): Orange

- Electronics (Product C): Violet

- Home Decor (Product D): Blue
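To make the reading of the chart concrete, here is a minimal sketch in Python. The sales figures are hypothetical (the example does not list the actual numbers); they were chosen only so that Electronics is highest and Women's Apparel and Home Decor are lowest. The snippet pairs each product with its color from Step 3 and flags the bars that fall below the average.

```python
# Hypothetical sales figures in USD; the example above does not give real values.
sales = {
    "Men's Shoes": 120_000,
    "Women's Apparel": 80_000,
    "Electronics": 200_000,
    "Home Decor": 60_000,
}

# Color assignments taken from Step 3.
colors = {
    "Men's Shoes": "yellow",
    "Women's Apparel": "orange",
    "Electronics": "violet",
    "Home Decor": "blue",
}

average = sum(sales.values()) / len(sales)
top_product = max(sales, key=sales.get)
needs_attention = [name for name, value in sales.items() if value < average]

# Text version of the bar chart: one '#' per 10,000 USD.
for name, value in sales.items():
    bar = "#" * (value // 10_000)
    print(f"{name:<16} {colors[name]:<7} {bar} {value:,}")

print("Highest sales:", top_product)
print("Below average:", ", ".join(needs_attention))
```

Comparing each bar against the average is only one possible rule for deciding which products "need attention"; a target or last year's figure would work just as well.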

Bar charts are straightforward and easy to understand. They are versatile when comparing products or any other categorical data [2], and they adapt seamlessly to retail scenarios. Despite that, bar charts have a few shortcomings. They are less suited than line graphs to illustrating continuous trends over time. Besides, overloading the chart with numerous products can lead to visual clutter, diminishing its effectiveness.

For more information, check our collection of bar chart templates for PowerPoint.

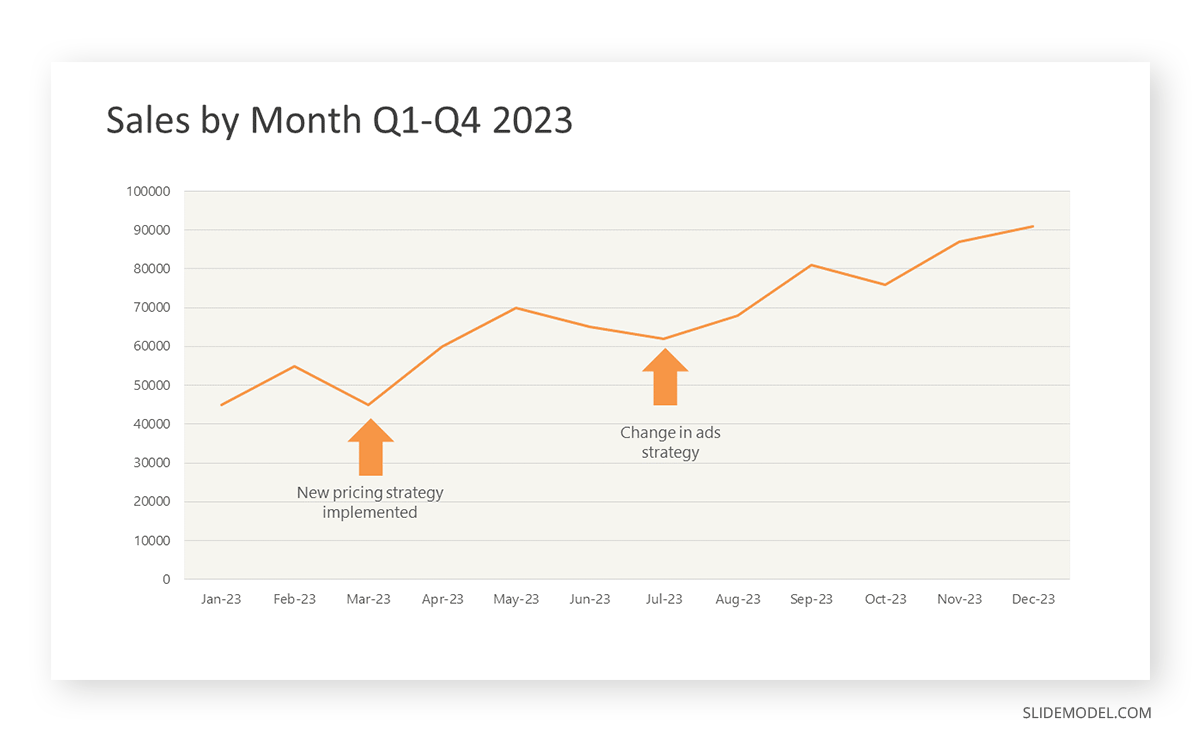

Line Graphs

Line graphs help illustrate data trends, progressions, or fluctuations by connecting a series of data points called ‘markers’ with straight line segments. This provides a straightforward representation of how values change [5]. Their versatility makes them invaluable for scenarios requiring a visual understanding of continuous data. Plotting multiple lines on the same graph also allows us to compare more than one dataset over the same timeline. Line graphs simplify complex information so the audience can quickly grasp the ups and downs of values. From tracking stock prices to analyzing experimental results, you can use them to show how data changes over a continuous timeline with simplicity and clarity.

Real-life Application of Line Graphs

To understand line graphs thoroughly, we will use a real case. Imagine you’re a financial analyst presenting a tech company’s monthly sales for a licensed product over the past year. Investors want insights into sales behavior by month, how market trends may have influenced sales performance and reception to the new pricing strategy. To present data via a line graph, you will complete these steps.

Step 1: Gathering the Data

First, you need to gather the data. In this case, your data will be the monthly sales numbers. For example:

- January: $45,000

- February: $55,000

- March: $45,000

- April: $60,000

- May: $70,000

- June: $65,000

- July: $62,000

- August: $68,000

- September: $81,000

- October: $76,000

- November: $87,000

- December: $91,000

Step 2: Choosing Orientation

After choosing the data, the next step is to select the orientation. Like bar charts, line graphs can be laid out vertically or horizontally. To keep this simple, we will place the timeline on the horizontal x-axis and the sales numbers on the vertical y-axis.

Step 3: Connecting Trends

After adding the data to your preferred software, you will plot a line graph. In the graph, each month’s sales are represented by data points connected by a line.
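Before plotting, it can help to compute the month-over-month change, since that is what the slope of each line segment shows. The sketch below uses the monthly figures listed above; the variable names are ours, and the choice of summary figures (best month, yearly total, overall growth) is just one way an analyst might prepare talking points.

```python
# Monthly sales from the example above, in USD.
monthly_sales = {
    "January": 45_000, "February": 55_000, "March": 45_000,
    "April": 60_000, "May": 70_000, "June": 65_000,
    "July": 62_000, "August": 68_000, "September": 81_000,
    "October": 76_000, "November": 87_000, "December": 91_000,
}

months = list(monthly_sales)
values = list(monthly_sales.values())

# Month-over-month change: the rise or fall of each line segment.
changes = {
    months[i]: values[i] - values[i - 1]
    for i in range(1, len(values))
}

best_month = max(changes, key=changes.get)
total_sales = sum(values)
overall_growth = (values[-1] - values[0]) / values[0]

for month, delta in changes.items():
    print(f"{month:<10} {delta:+,}")

print(f"Largest monthly increase: {best_month}")
print(f"Total for the year: {total_sales:,}")
print(f"January to December growth: {overall_growth:.1%}")
```

The signed changes make the dips (March, June, July, October) easy to call out when walking investors through the graph.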

Step 4: Adding Clarity with Color

If there are multiple lines, you can also add colors to highlight each one, making it easier to follow.

Line graphs excel at visually presenting trends over time. They help identify patterns, such as upward or downward trends. However, too many data points can clutter the graph, making it harder to interpret. Line graphs work best with continuous data and are not suitable for unordered categories.

For more information, check our collection of line chart templates for PowerPoint and our article about how to make a presentation graph.

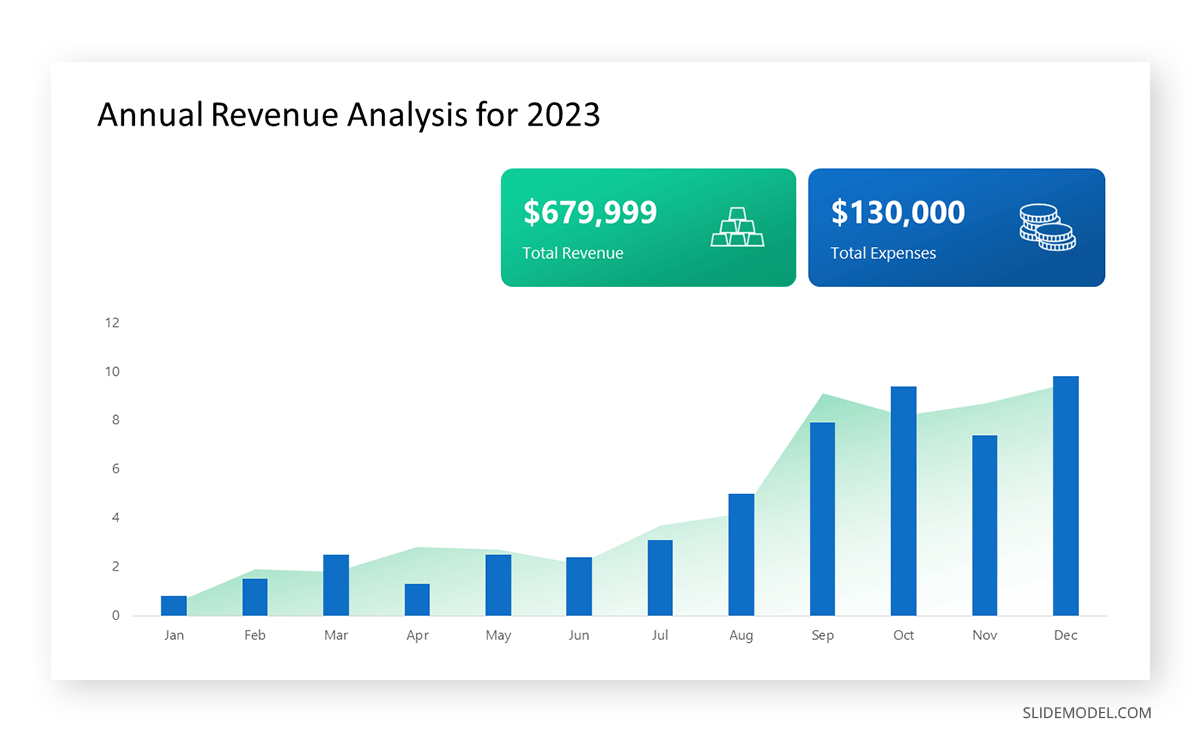

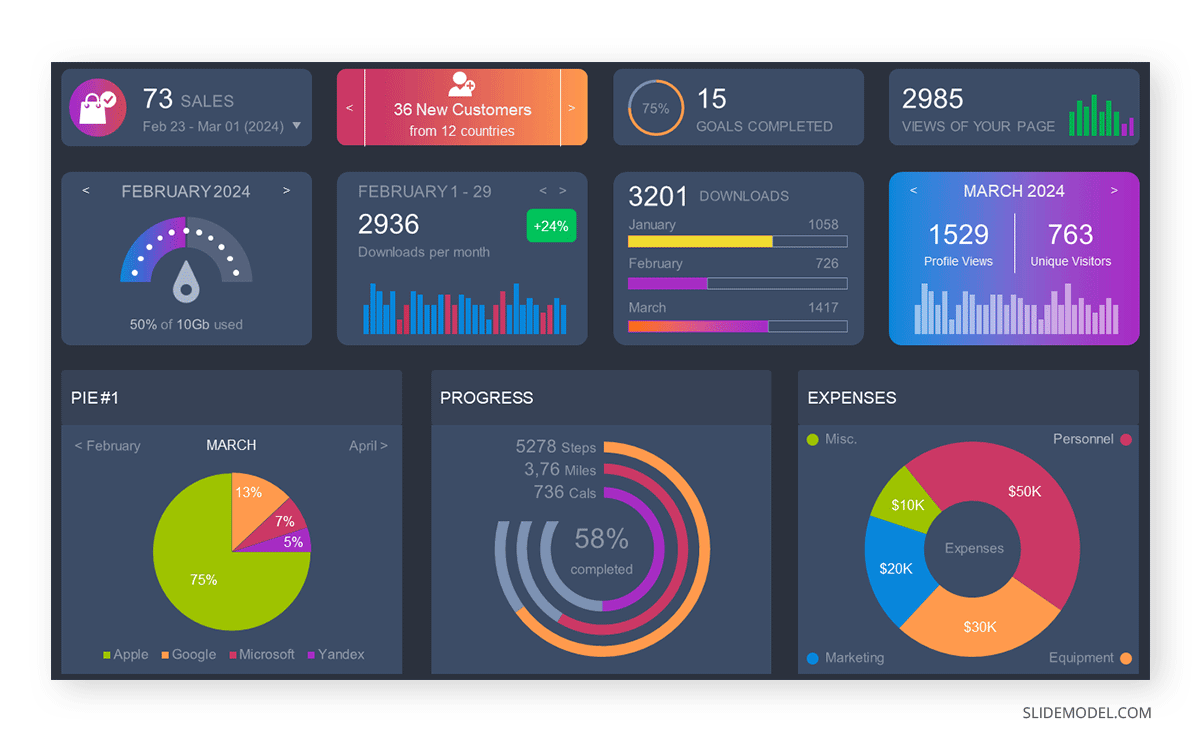

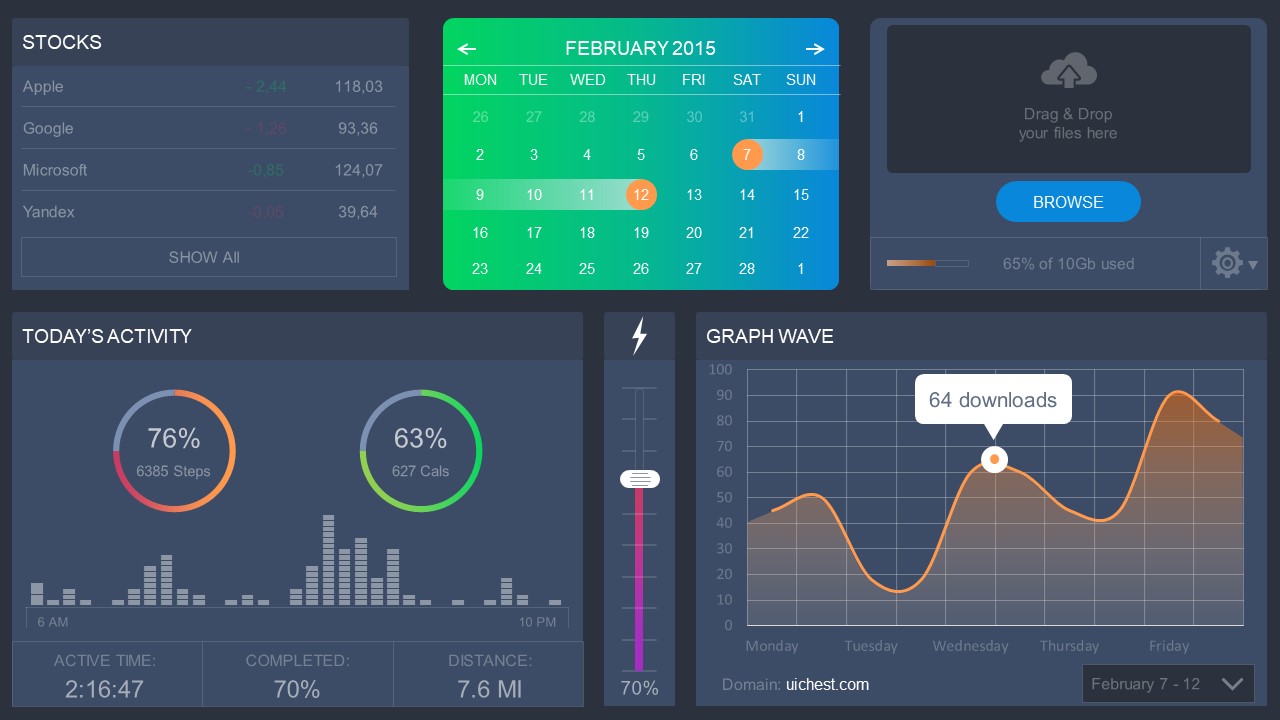

Data Dashboard

A data dashboard is a visual tool for analyzing information. Different graphs, charts, and tables are consolidated in a single layout to showcase the information required to achieve one or more objectives. Dashboards help us see Key Performance Indicators (KPIs) quickly. You don’t make new visuals in the dashboard; instead, you use it to display visuals you’ve already made in worksheets [3].

Keeping the number of visuals on a dashboard to three or four is recommended. Adding too many can make it hard to see the main points [4]. Dashboards can be used for business analytics to analyze sales, revenue, and marketing metrics at the same time. They are also used in the manufacturing industry, as they allow users to grasp the entire production scenario at a glance while tracking the core KPIs for each line.

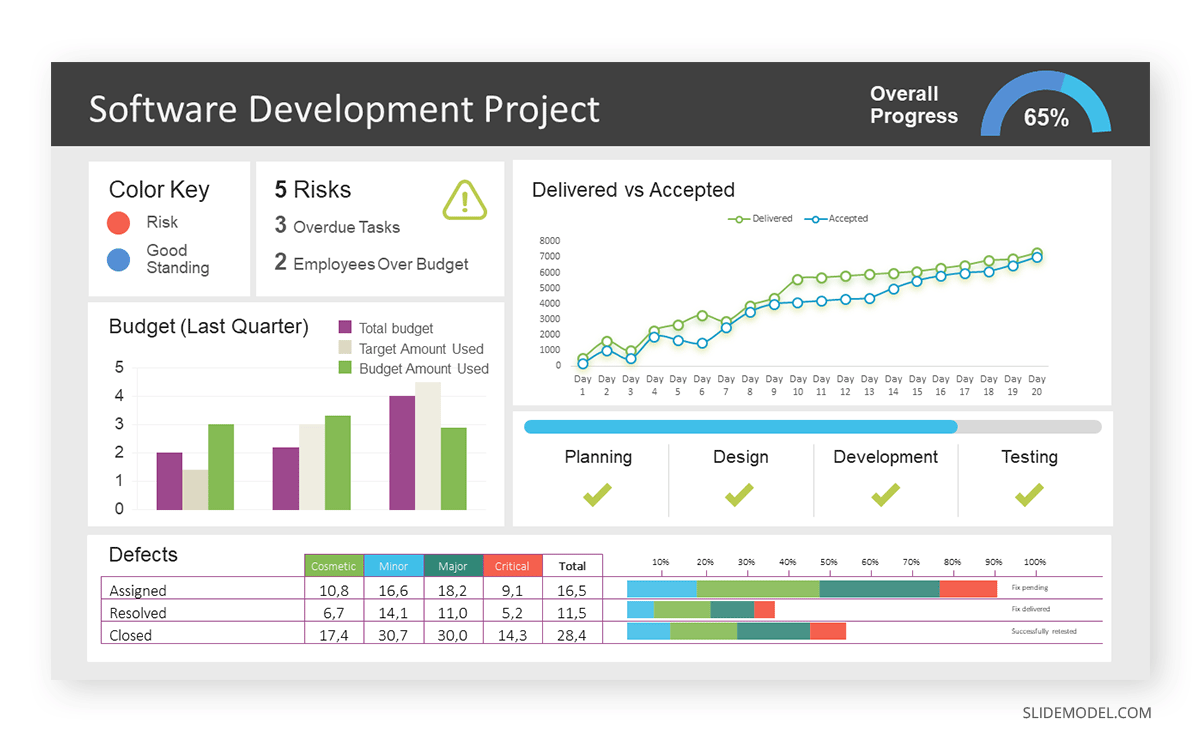

Real-Life Application of a Dashboard

Consider a project manager presenting a software development project’s progress to a tech company’s leadership team. The manager follows these steps.

Step 1: Defining Key Metrics

To effectively communicate the project’s status, identify key metrics such as completion status, budget, and bug resolution rates. Then, choose measurable metrics aligned with project objectives.

Step 2: Choosing Visualization Widgets

After finalizing the data, presentation aids that align with each metric are selected. For this project, the project manager chooses a progress bar for the completion status and uses bar charts for budget allocation. Likewise, he implements line charts for bug resolution rates.

Step 3: Dashboard Layout

Key metrics are prominently placed in the dashboard for easy visibility, and the manager ensures that it appears clean and organized.

Dashboards provide a comprehensive view of key project metrics. Users can interact with data, customize views, and drill down for detailed analysis. However, creating an effective dashboard requires careful planning to avoid clutter. Besides, dashboards rely on the availability and accuracy of underlying data sources.

For more information, check our article on how to design a dashboard presentation, and discover our collection of dashboard PowerPoint templates.

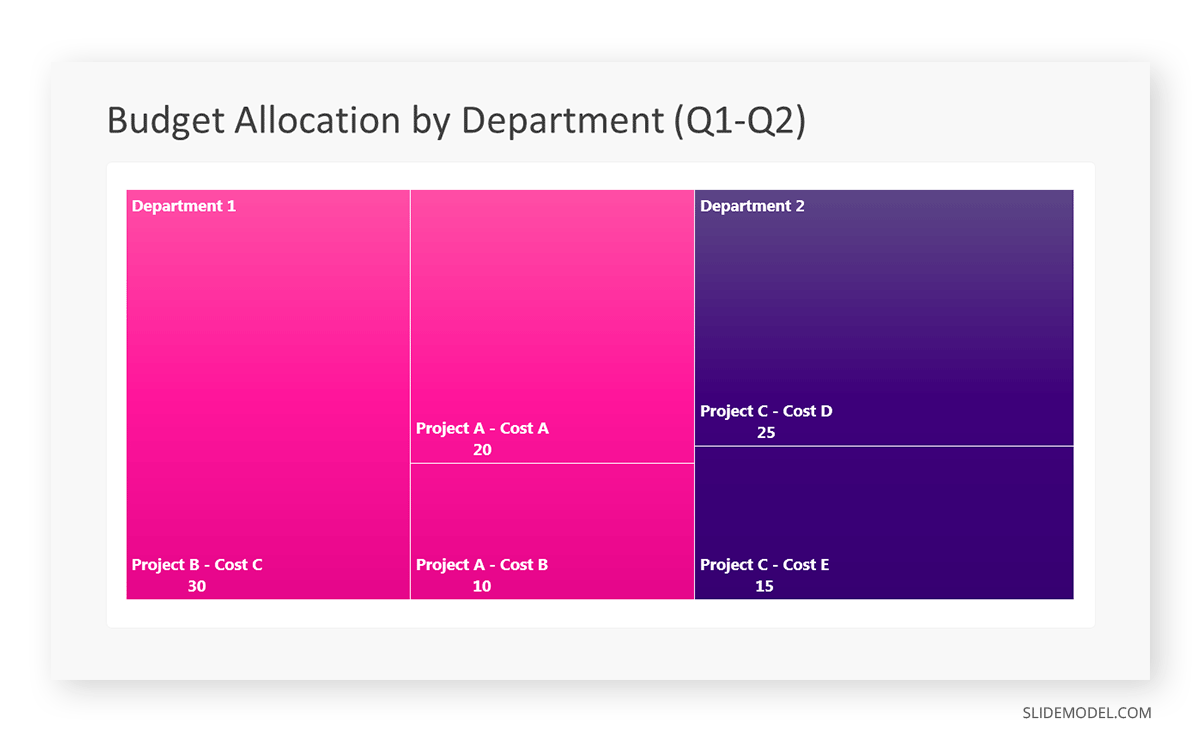

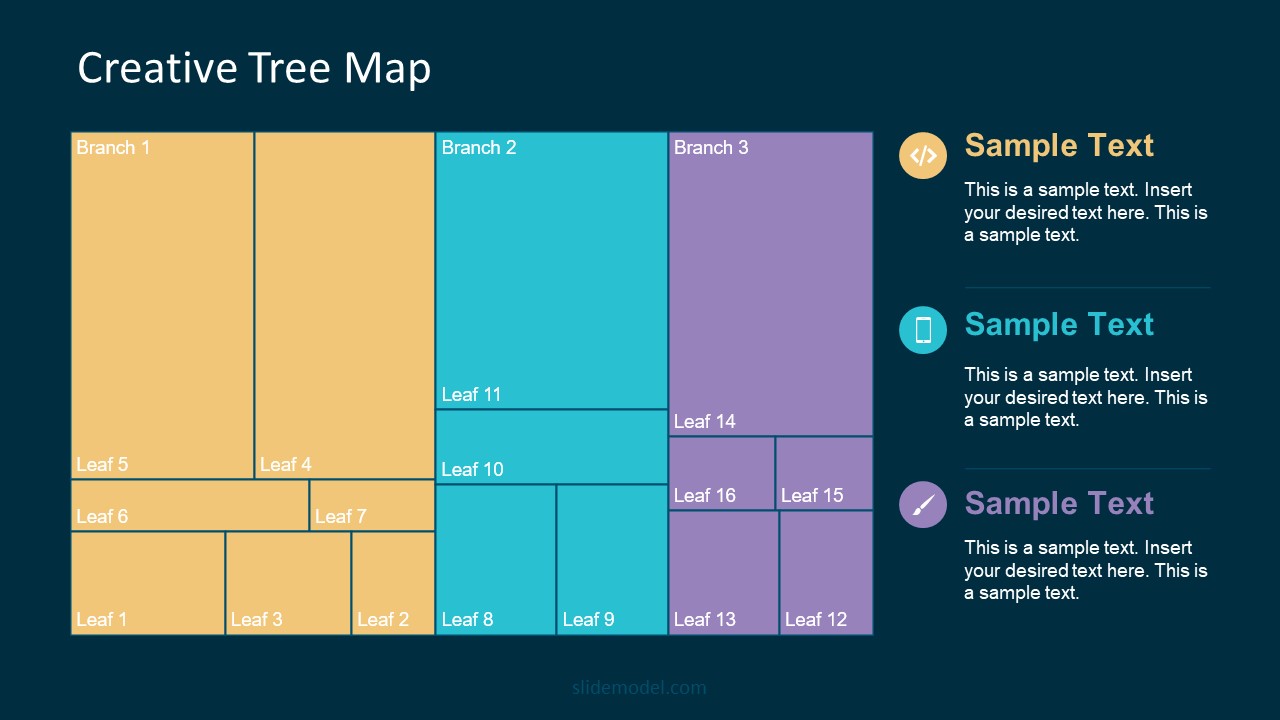

Treemap Chart

Treemap charts represent hierarchical data structured in a series of nested rectangles [6]. Each branch of the ‘tree’ is given a rectangle, and smaller tiles inside it represent sub-branches, meaning elements on a lower hierarchical level than the parent rectangle. Each rectangular node has an area proportional to the specified data dimension.

Treemaps are useful for visualizing large datasets in a compact space. They make it easy to identify patterns, such as which categories are dominant. Common applications of the treemap chart are seen in the IT industry, such as resource allocation, disk space management, and website analytics. They can also be used in other fields, such as healthcare data analysis, market share across different product categories, or finance, to visualize portfolios.

Real-Life Application of a Treemap Chart

Let’s consider a financial scenario where a financial team wants to represent the budget allocation of a company. There is a hierarchy in the process, so it is helpful to use a treemap chart. In the chart, the top-level rectangle could represent the total budget, and it would be subdivided into smaller rectangles, each denoting a specific department. Further subdivisions within these smaller rectangles might represent individual projects or cost categories.

Step 1: Define Your Data Hierarchy

When presenting data on the budget allocation, start by outlining the hierarchical structure. The sequence runs from the overall budget at the top, to departments, then to projects within each department, and finally to individual cost categories for each project.

- Top-level rectangle: Total Budget

- Second-level rectangles: Departments (Engineering, Marketing, Sales)

- Third-level rectangles: Projects within each department

- Fourth-level rectangles: Cost categories for each project (Personnel, Marketing Expenses, Equipment)
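To see how a hierarchy turns into nested rectangles whose areas are proportional to their values, here is a minimal sketch of a "slice-and-dice" layout, one of the simplest treemap algorithms. It is not how any particular tool implements treemaps. The amounts and the project names are hypothetical (the example gives only the hierarchy), and the sketch stops at three levels to stay short.

```python
def value_of(node):
    """A leaf carries its own value; a branch is the sum of its children."""
    children = node.get("children")
    if children:
        return sum(value_of(child) for child in children)
    return node["value"]


def layout(node, x, y, width, height, depth=0):
    """Slice-and-dice layout: return (name, x, y, width, height) for every node."""
    rects = [(node["name"], x, y, width, height)]
    children = node.get("children", [])
    total = sum(value_of(child) for child in children)
    offset = 0.0
    for child in children:
        share = value_of(child) / total
        if depth % 2 == 0:
            # Even levels: divide the parent's width among the children.
            rects += layout(child, x + offset * width, y,
                            share * width, height, depth + 1)
        else:
            # Odd levels: divide the parent's height among the children.
            rects += layout(child, x, y + offset * height,
                            width, share * height, depth + 1)
        offset += share
    return rects


# Hypothetical amounts in thousands of USD; project names are placeholders.
budget = {
    "name": "Total Budget",
    "children": [
        {"name": "Engineering", "children": [
            {"name": "Project X", "value": 300},
            {"name": "Project Y", "value": 200},
        ]},
        {"name": "Marketing", "children": [{"name": "Campaign", "value": 300}]},
        {"name": "Sales", "children": [{"name": "Outreach", "value": 200}]},
    ],
}

# Lay the tree out on a 100 x 100 canvas and report each rectangle's area.
areas = {name: w * h for name, _x, _y, w, h in layout(budget, 0, 0, 100, 100)}
for name, area in areas.items():
    print(f"{name:<13} area = {area:,.0f}")
```

With these numbers, Engineering holds half of the budget and therefore covers half of the canvas, and Project X takes 60% of the Engineering rectangle.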

Step 2: Choose a Suitable Tool

It’s time to select a data visualization tool supporting Treemaps. Popular choices include Tableau, Microsoft Power BI, PowerPoint, or even coding with libraries like D3.js. It is vital to ensure that the chosen tool provides customization options for colors, labels, and hierarchical structures.

Here, the team uses PowerPoint for this guide because of its user-friendly interface and robust Treemap capabilities.

Step 3: Make a Treemap Chart with PowerPoint

After opening the PowerPoint presentation, the team chooses “SmartArt” to form the chart. The SmartArt Graphic window has a “Hierarchy” category on the left, which offers multiple layouts. Any layout that resembles a treemap can be adapted, such as “Table Hierarchy” or “Organization Chart”. The team selects Table Hierarchy, as it looks closest to a treemap. Note that SmartArt shapes are not sized from the data automatically, so the rectangle sizes must be adjusted manually to reflect the values.

Step 4: Input Your Data

After that, a new window opens with a basic structure. The team adds the data one item at a time by clicking on the text boxes, starting with the top-level rectangle, which represents the total budget.

Step 5: Customize the Treemap

By clicking on each shape, they customize its color, size, and label. They can also adjust the font size, style, and color of labels by using the options in the “Format” tab in PowerPoint. Using a different color for each level makes the levels easier to tell apart.

Treemaps excel at illustrating hierarchical structures. These charts make it easy to understand relationships and dependencies. They efficiently use space, compactly displaying a large amount of data, reducing the need for excessive scrolling or navigation. Additionally, using colors enhances the understanding of data by representing different variables or categories.

In some cases, treemaps might become complex, especially with deep hierarchies. It becomes challenging for some users to interpret the chart. At the same time, displaying detailed information within each rectangle might be constrained by space. It potentially limits the amount of data that can be shown clearly. Without proper labeling and color coding, there’s a risk of misinterpretation.

Heatmap

A heatmap is a data visualization tool that uses color coding to represent values across a two-dimensional surface. In a heatmap, colors replace numbers to indicate the magnitude of each cell. This color-shaded matrix display is valuable for summarizing and understanding data sets at a glance [7]. The intensity of the color corresponds to the value it represents, making it easy to identify patterns, trends, and variations in the data.

As a tool, heatmaps help businesses analyze website interactions, revealing user behavior patterns and preferences to enhance overall user experience. In addition, companies use heatmaps to assess content engagement, identifying popular sections and areas of improvement for more effective communication. They excel at highlighting patterns and trends in large datasets, making it easy to identify areas of interest.

We can implement heatmaps to express multiple data types, such as numerical values, percentages, or even categorical data. Heatmaps help us easily spot areas with lots of activity, making them helpful in figuring out clusters [8] . When making these maps, it is important to pick colors carefully. The colors need to show the differences between groups or levels of something. And it is good to use colors that people with colorblindness can easily see.
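The core of a heatmap is the mapping from a value to a color intensity. The sketch below shows one way to do it, assuming a single-hue ramp from white to dark blue; the click counts are hypothetical and the function name `to_hex` is ours. A ramp that varies mainly in lightness tends to stay readable for viewers with color vision deficiencies, although that should be checked against your actual audience and palette.

```python
def to_hex(value, low, high):
    """Map a value to a white-to-dark-blue ramp; higher values get darker cells."""
    t = (value - low) / (high - low) if high > low else 0.0
    # Interpolate each RGB channel between white and a dark blue.
    start, end = (255, 255, 255), (8, 48, 107)
    r, g, b = (round(s + t * (e - s)) for s, e in zip(start, end))
    return f"#{r:02x}{g:02x}{b:02x}"


# Hypothetical click counts: rows are page sections, columns are weekdays.
clicks = [
    [12, 30, 45],
    [5, 18, 60],
    [0, 9, 27],
]

low = min(min(row) for row in clicks)
high = max(max(row) for row in clicks)

# One color per cell, in the same row and column order as the data.
cell_colors = [[to_hex(value, low, high) for value in row] for row in clicks]

for row, color_row in zip(clicks, cell_colors):
    print("  ".join(f"{value:>2} {color}" for value, color in zip(row, color_row)))
```

The lowest cell comes out white and the highest comes out in the darkest blue, which is the "intensity corresponds to value" rule described above.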

Check our detailed guide on how to create a heatmap here. Also, discover our collection of heatmap PowerPoint templates.

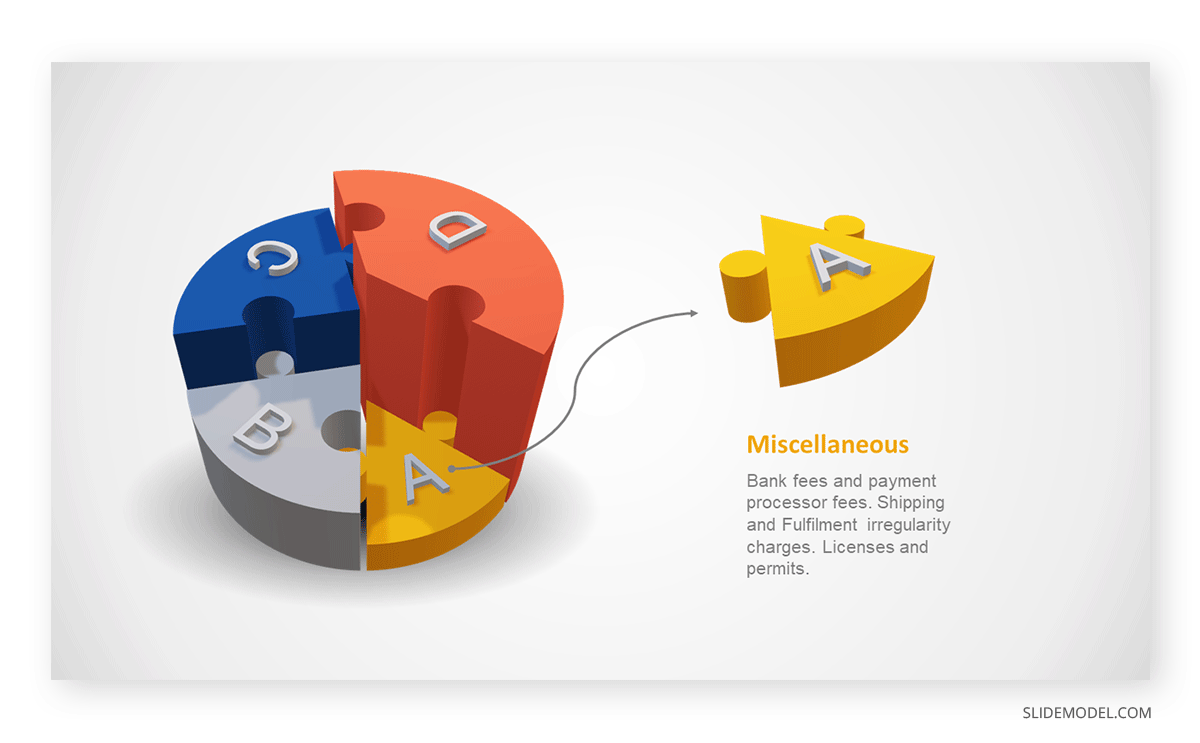

Pie Charts

Pie charts are circular statistical graphics divided into slices to illustrate numerical proportions. Each slice represents a proportionate part of the whole, making it easy to visualize the contribution of each component to the total.

When several pie charts are displayed together, the size of each pie can reflect the total of its data points: the pie with the highest total appears largest, and the others are proportionally smaller. However, you can present all pies at the same size if proportional representation is not required [9]. When a pie chart is difficult to read or additional information is required, a variation known as the donut chart can be used instead. It has the same structure but a blank center, creating a ring shape. Presenters can place extra information in the center, and the ring shape helps declutter the graph.

Pie charts are used in business to show percentage distribution, compare relative sizes of categories, or present straightforward data sets where visualizing ratios is essential.

Real-Life Application of Pie Charts

Consider a scenario where you want to represent how a total is distributed across categories. Each slice of the pie chart represents a different category, and the size of each slice indicates the percentage of the total allocated to that category.

Step 1: Define Your Data Structure

Imagine you are presenting the distribution of a project budget among different expense categories.

- Column A: Expense Categories (Personnel, Equipment, Marketing, Miscellaneous)

- Column B: Budget Amounts ($40,000, $30,000, $20,000, $10,000)

Column B represents the values of your categories in Column A.

Step 2: Insert a Pie Chart

You can create a pie chart with any of the available tools. The most convenient options for a presentation are presentation tools such as PowerPoint or Google Slides. You will notice that the pie chart assigns each expense category a percentage of the total budget by dividing the category’s amount by the total budget.

For instance:

- Personnel: $40,000 / ($40,000 + $30,000 + $20,000 + $10,000) = 40%

- Equipment: $30,000 / ($40,000 + $30,000 + $20,000 + $10,000) = 30%

- Marketing: $20,000 / ($40,000 + $30,000 + $20,000 + $10,000) = 20%

- Miscellaneous: $10,000 / ($40,000 + $30,000 + $20,000 + $10,000) = 10%
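The same arithmetic can be sketched in a few lines of Python. The amounts come from the example above; the slice angle (the share of 360 degrees) is an addition of ours to show what the charting tool draws for each category.

```python
# Budget amounts from the example above, in USD.
budget = {
    "Personnel": 40_000,
    "Equipment": 30_000,
    "Marketing": 20_000,
    "Miscellaneous": 10_000,
}

total = sum(budget.values())

# Multiply before dividing so these round numbers stay exact.
shares = {name: amount * 100 / total for name, amount in budget.items()}
angles = {name: amount * 360 / total for name, amount in budget.items()}

for name in budget:
    print(f"{name:<14} {shares[name]:5.1f}%  {angles[name]:6.1f} degrees")
```

A quick sanity check before presenting is that the shares add up to 100% and the angles to 360 degrees.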

You can build the chart from these percentages or let the tool generate the pie chart directly from the raw amounts.

3D pie charts and 3D donut charts are quite popular with audiences. They stand out as visual elements in any presentation slide, so let’s take a look at how our pie chart example would look in 3D pie chart format.

Step 3: Results Interpretation

The pie chart visually illustrates the distribution of the project budget among different expense categories. Personnel constitutes the largest portion at 40%, followed by equipment at 30%, marketing at 20%, and miscellaneous at 10%. This breakdown provides a clear overview of where the project funds are allocated, which helps in informed decision-making and resource management. It is evident that personnel is the most significant investment in the overall project budget.

Pie charts provide a straightforward way to represent proportions and percentages. They are easy to understand, even for individuals with limited data analysis experience. These charts work well for small datasets with a limited number of categories.

However, a pie chart can become cluttered and less effective in situations with many categories. Accurate interpretation may be challenging, especially when dealing with slight differences in slice sizes. In addition, these charts are static and do not effectively convey trends over time.

For more information, check our collection of pie chart templates for PowerPoint.

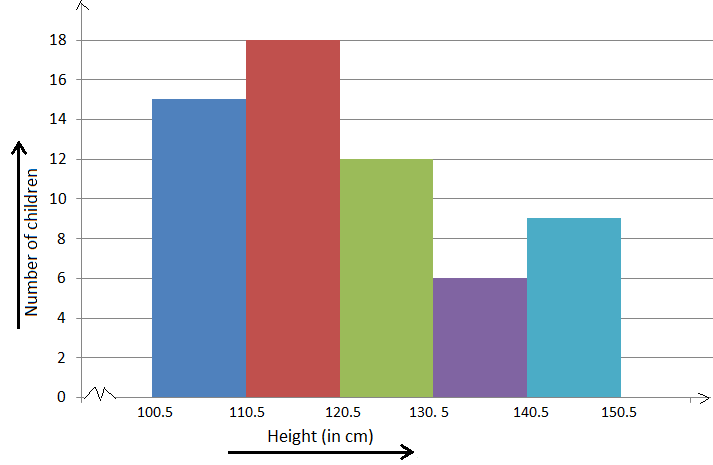

Histograms

Histograms present the distribution of numerical variables. Unlike a bar chart that records each unique response separately, histograms organize numeric responses into bins and show the frequency of responses within each bin [10]. The x-axis of a histogram shows the range of values for a numeric variable, while the y-axis indicates the frequency for each range, either as a count or as a relative frequency (percentage of the total count).

Whenever you want to understand the distribution of your data, check which values are more common, or identify outliers, histograms are your go-to. Think of them as a spotlight on the story your data is telling. A histogram can provide a quick and insightful overview if you’re curious about exam scores, sales figures, or any numerical data distribution.

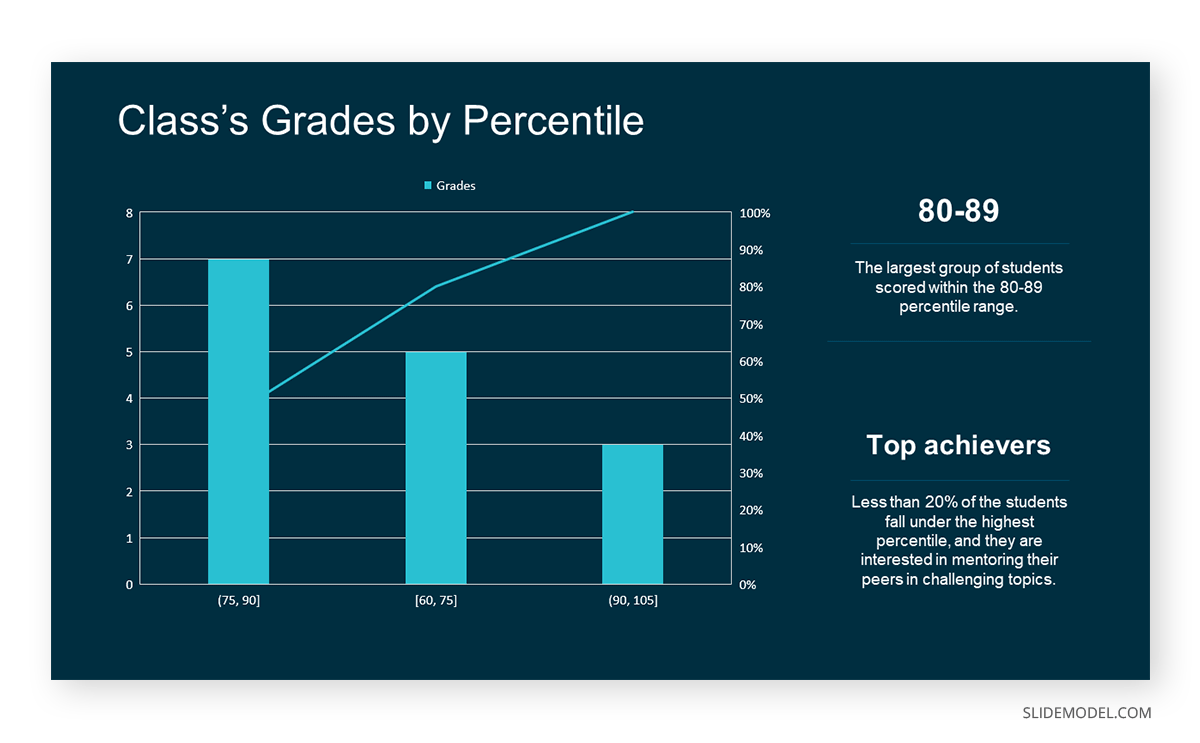

Real-Life Application of a Histogram

As an example, imagine an instructor analyzing a class’s grades to identify the most common score range. A histogram can display the distribution effectively. It will show whether most students scored in the average range or if there are significant outliers.

Step 1: Gather Data

The instructor begins by gathering the exam scores of each student in the class.

After the scores are arranged in ascending order, the bin ranges are set.

Step 2: Define Bins

Bins are like categories that group similar values. Think of them as buckets that organize your data. The presenter decides how wide each bin should be based on the range of the values. For instance, the instructor sets the bin ranges based on score intervals: 60-69, 70-79, 80-89, and 90-100.

Step 3: Count Frequency

Now, he counts how many data points fall into each bin. This step is crucial because it tells you how often specific ranges of values occur. The result is the frequency distribution, showing the occurrences of each group.

Here, the instructor counts the number of students in each category.

- 60-69: 1 student (Kate)

- 70-79: 4 students (David, Emma, Grace, Jack)

- 80-89: 7 students (Alice, Bob, Frank, Isabel, Liam, Mia, Noah)

- 90-100: 3 students (Clara, Henry, Olivia)
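The counting step can be sketched as follows. The individual scores are hypothetical: the example only states which bin each student falls into, so each score here was picked to land in that student's bin. The bin boundaries are the ones the instructor chose in Step 2.

```python
# Hypothetical scores; only each student's bin is given in the example above.
scores = {
    "Kate": 65,
    "David": 72, "Emma": 75, "Grace": 78, "Jack": 71,
    "Alice": 85, "Bob": 82, "Frank": 88, "Isabel": 81,
    "Liam": 84, "Mia": 86, "Noah": 89,
    "Clara": 93, "Henry": 96, "Olivia": 100,
}

# Bin ranges from Step 2; both ends are inclusive.
bins = [(60, 69), (70, 79), (80, 89), (90, 100)]

# Count how many scores fall inside each bin.
counts = {
    f"{low}-{high}": sum(low <= score <= high for score in scores.values())
    for low, high in bins
}

# Text version of the histogram: one '#' per student.
for label, count in counts.items():
    print(f"{label:<7} {'#' * count} ({count})")
```

Because both ends of each bin are inclusive and the bins do not overlap, every score is counted exactly once, which is worth verifying whenever you define bins by hand.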

Step 4: Create the Histogram

It’s time to turn the data into a visual representation. Draw a bar for each bin on a graph. The width of the bar should correspond to the range of the bin, and the height should correspond to the frequency. To make your histogram understandable, label the X and Y axes.

In this case, the X-axis should represent the bins (e.g., test score ranges), and the Y-axis represents the frequency.

The histogram of the class grades reveals insightful patterns in the distribution. The largest group, seven students, falls within the 80-89 score range. The histogram provides a clear visualization of the class’s performance. It showcases a concentration of grades in the upper-middle range, with fewer students at both ends. This analysis helps in understanding the overall academic standing of the class. It also identifies areas for potential improvement or recognition.

Thus, histograms provide a clear visual representation of data distribution. They are easy to interpret, even for those without a statistical background. They apply to various types of data, including continuous and discrete variables. One weak point is that, because values are grouped into bins, histograms do not capture detailed patterns in the data as well as some other visualization methods.

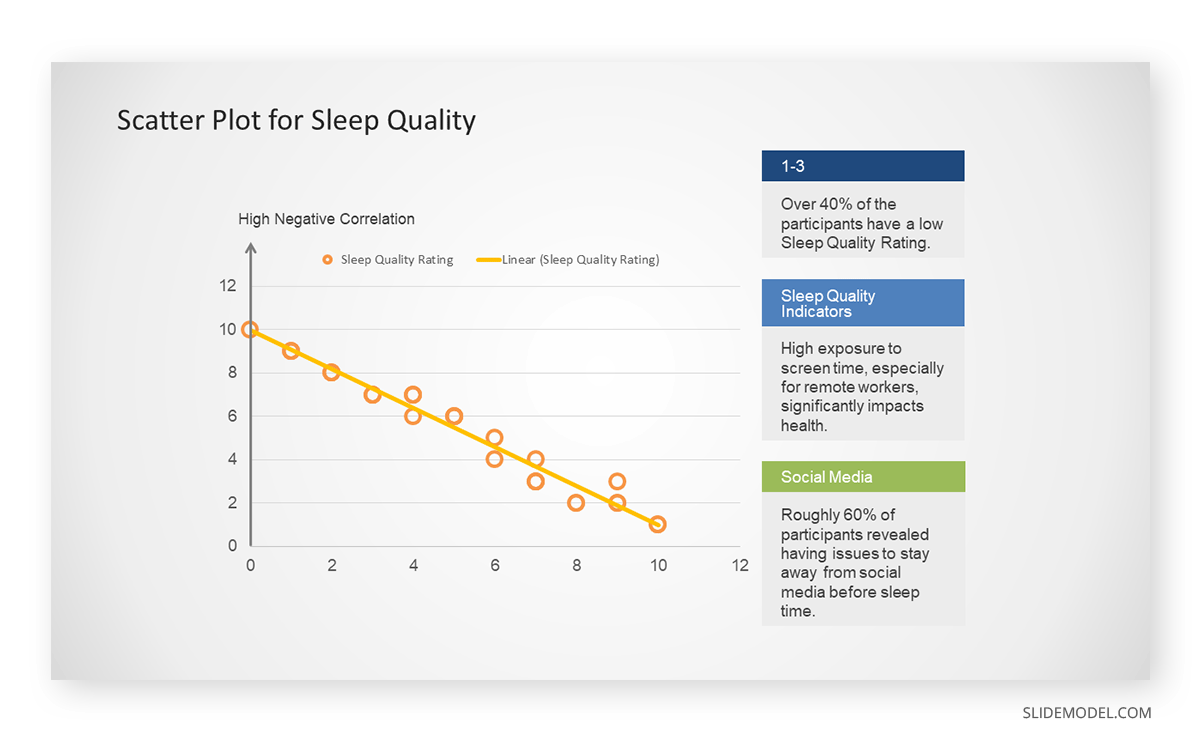

Scatter Plot

A scatter plot is a graphical representation of the relationship between two variables. It consists of individual data points on a two-dimensional plane, with one variable plotted on the x-axis and the other on the y-axis. Each point represents a unique observation. Together, the points reveal patterns, trends, or correlations between the two variables.

Scatter plots are also effective in revealing the strength and direction of relationships. They help identify outliers and assess the overall distribution of data points. The points’ dispersion and clustering reflect the relationship’s nature, whether it is positive, negative, or lacks a discernible pattern. In business, scatter plots assess relationships between variables such as marketing cost and sales revenue. They help present data correlations and support decision-making.

Real-Life Application of Scatter Plot

A group of scientists is conducting a study on the relationship between daily hours of screen time and sleep quality. After reviewing the data, they managed to create this table to help them build a scatter plot graph:

In the provided example, the x-axis represents Daily Hours of Screen Time, and the y-axis represents the Sleep Quality Rating.

The scientists observe a negative correlation between the amount of screen time and the quality of sleep. This is consistent with their hypothesis that blue light, especially before bedtime, has a significant impact on sleep quality and metabolic processes.

There are a few things to remember when using a scatter plot. Even when a scatter diagram indicates a relationship, it doesn’t mean one variable causes the other; a third factor can influence both variables. The more the plot resembles a straight line, the stronger the relationship is perceived to be [11]. If the plot suggests no relationship, any apparent pattern might be due to random fluctuations in the data. When the scatter diagram depicts no correlation, it is worth considering whether the data might be stratified.
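How closely the points follow a straight line can be quantified with the Pearson correlation coefficient, which ranges from -1 (perfect negative relationship) to +1 (perfect positive relationship). The sketch below computes it by hand. The data points are hypothetical, since the scientists' table is not reproduced here, and the 1-10 rating scale is an assumption.

```python
from math import sqrt


def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equally long sequences."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    spread_x = sum((x - mean_x) ** 2 for x in xs)
    spread_y = sum((y - mean_y) ** 2 for y in ys)
    return covariance / sqrt(spread_x * spread_y)


# Hypothetical observations: daily hours of screen time (x-axis)
# and sleep quality rating on an assumed 1-10 scale (y-axis).
screen_hours = [1, 2, 3, 4, 5, 6, 7, 8]
sleep_quality = [9, 8, 8, 6, 6, 5, 3, 3]

r = pearson_r(screen_hours, sleep_quality)
print(f"Pearson r = {r:.2f}")
```

A value close to -1, as in this made-up data, describes a strong negative relationship, but, as noted above, it says nothing about whether screen time is the cause.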

How to Choose a Data Presentation Type

Choosing the appropriate data presentation type is crucial when making a presentation. Understanding the nature of your data and the message you intend to convey will guide this selection process. For instance, when showcasing quantitative relationships, scatter plots become instrumental in revealing correlations between variables. If the focus is on emphasizing parts of a whole, pie charts offer a concise display of proportions. Histograms, on the other hand, prove valuable for illustrating distributions and frequency patterns.

Bar charts provide a clear visual comparison of different categories. Likewise, line charts excel in showcasing trends over time, while tables are ideal for detailed data examination. Starting a presentation on data presentation types involves evaluating the specific information you want to communicate and selecting the format that aligns with your message. This ensures clarity and resonance with your audience from the beginning of your presentation.
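The guidance in this article can be condensed into a simple lookup, sketched below. The goal labels and the function name `suggest_chart` are our own shorthand rather than an established taxonomy, and real decisions will also depend on the audience and the amount of data.

```python
# Goal-to-chart pairs summarizing the recommendations in this article.
CHART_FOR_GOAL = {
    "compare categories": "bar chart",
    "trend over time": "line graph",
    "parts of a whole": "pie chart",
    "distribution": "histogram",
    "relationship between two variables": "scatter plot",
    "hierarchy": "treemap chart",
    "magnitude across a grid": "heatmap",
    "detailed lookup": "table",
    "several kpis at once": "dashboard",
}


def suggest_chart(goal):
    """Return the suggested presentation type for a goal, or None if unknown."""
    return CHART_FOR_GOAL.get(goal.strip().lower())


for goal in ("Trend over time", "Parts of a whole", "Hierarchy"):
    print(f"{goal}: {suggest_chart(goal)}")
```

Starting from the goal rather than from the chart keeps the focus on the message you want the audience to take away.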

Recommended Data Presentation Templates

1. Fact Sheet Dashboard for Data Presentation

Convey all the data you need to present in this one-pager format, an ideal solution for users looking for presentation aids. It features global maps, donut charts, column graphs, and text neatly arranged in a clean layout, presented in light and dark themes.

2. 3D Column Chart Infographic PPT Template

Represent column charts in a highly visual 3D format with this PPT template. A creative way to present data, this template is entirely editable, and we can craft either a one-page infographic or a series of slides explaining what we intend to disclose point by point.

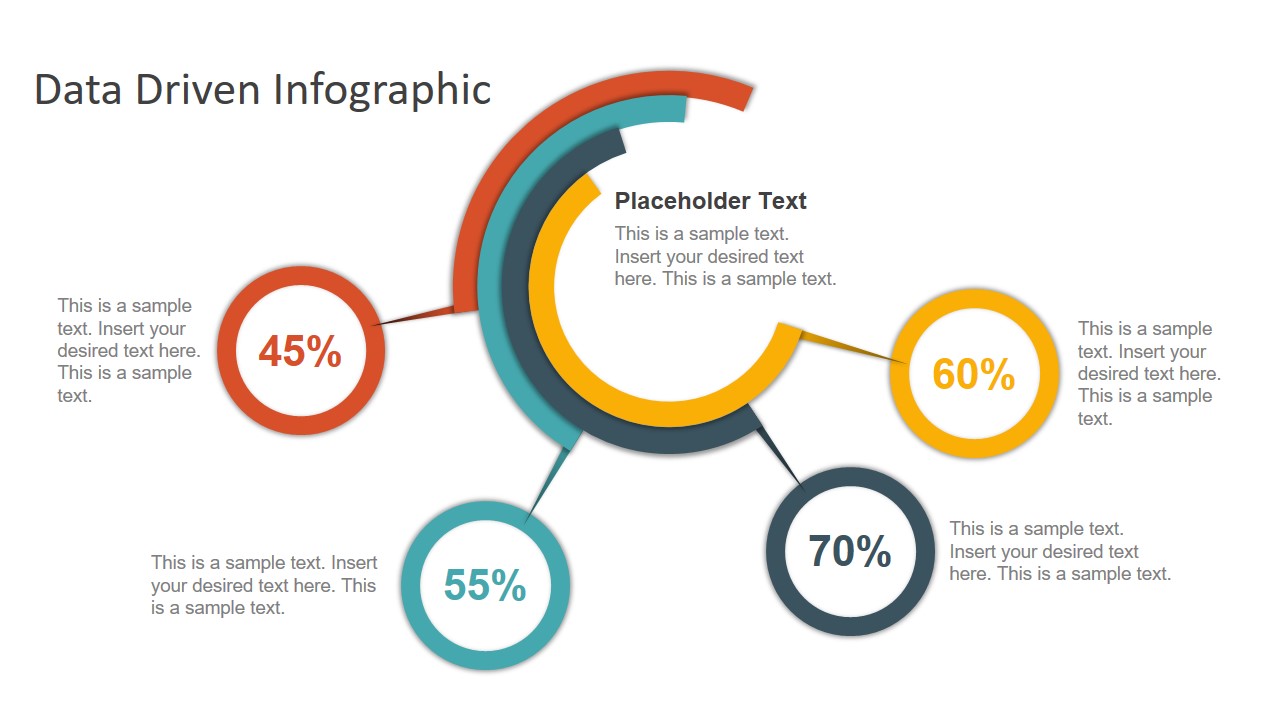

3. Data Circles Infographic PowerPoint Template

An alternative to the pie chart and donut chart diagrams, this template features a series of curved shapes with bubble callouts as ways of presenting data. Expand the information for each arch in the text placeholder areas.

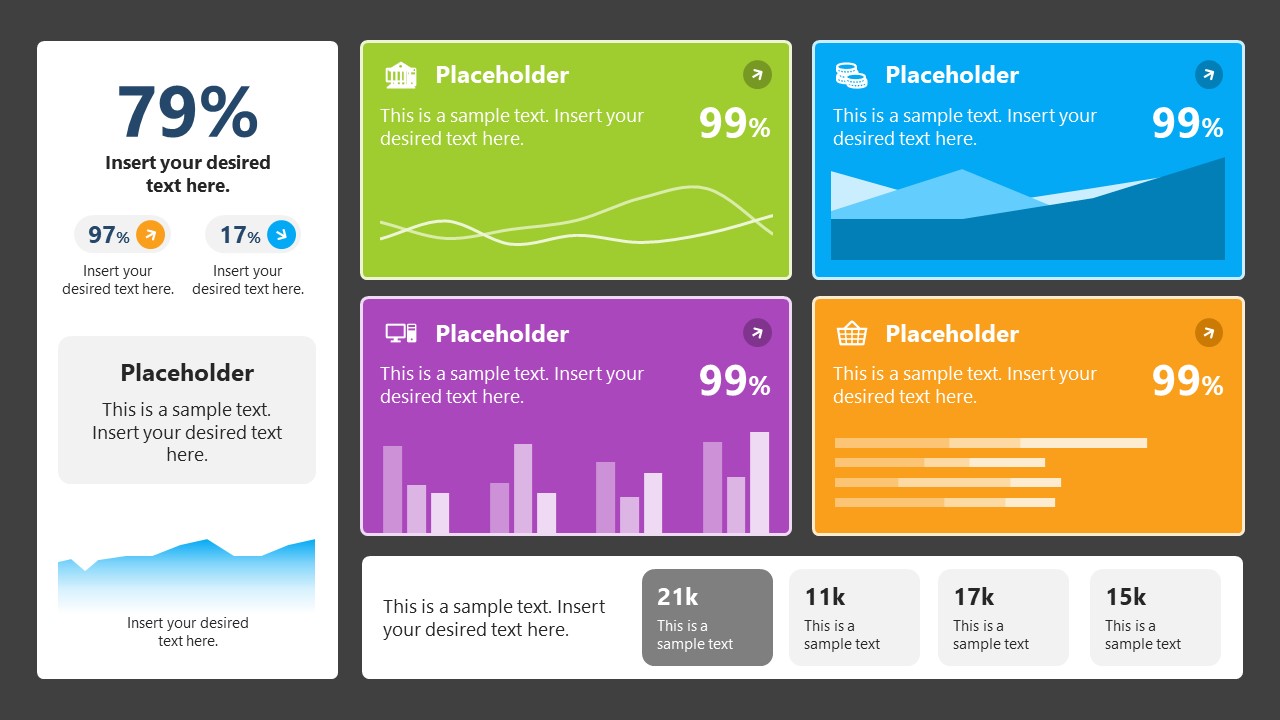

4. Colorful Metrics Dashboard for Data Presentation

This versatile dashboard template helps us in the presentation of the data by offering several graphs and methods to convert numbers into graphics. Implement it for e-commerce projects, financial projections, project development, and more.

5. Animated Data Presentation Tools for PowerPoint & Google Slides

A slide deck filled with most of the tools mentioned in this article, including bar charts, column charts, treemap graphs, pie charts, and histograms. Animated effects make each slide look dynamic when sharing data with stakeholders.

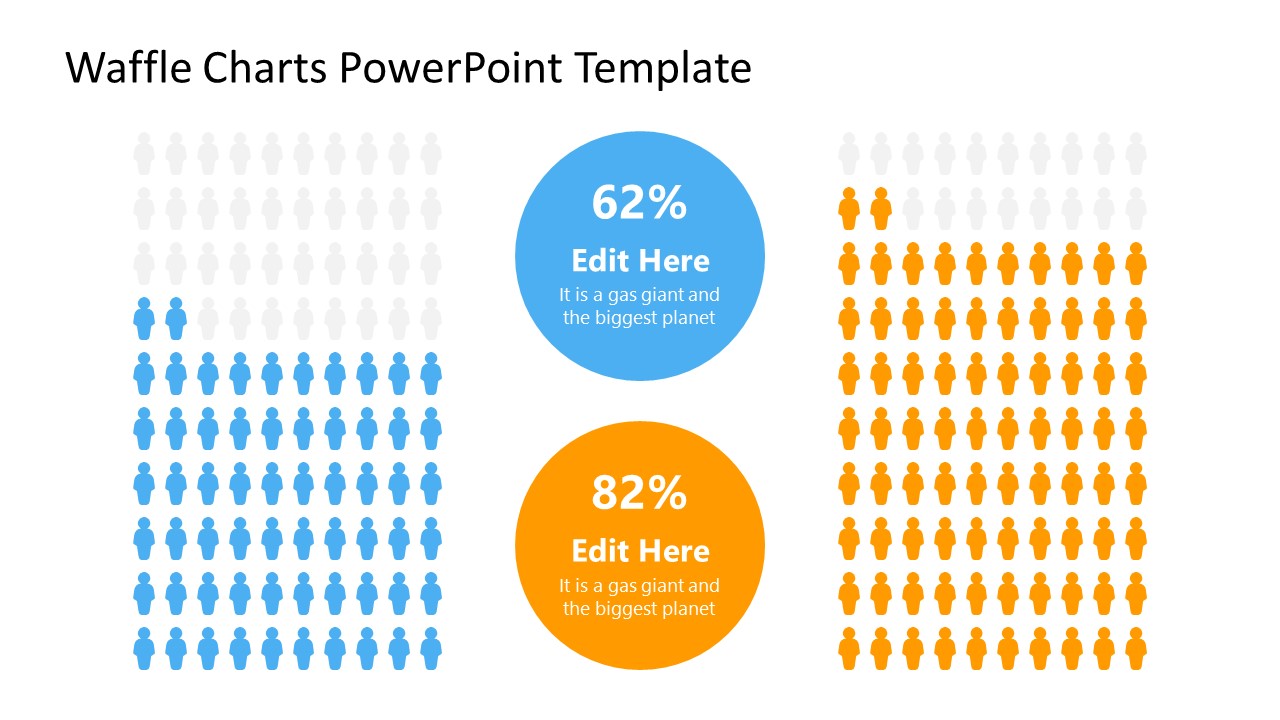

6. Statistics Waffle Charts PPT Template for Data Presentations

This PPT template shows how to present data beyond the typical pie chart representation. It is widely used for demographics, so it’s a great fit for marketing teams, data science professionals, HR personnel, and more.

7. Data Presentation Dashboard Template for Google Slides

A compendium of tools in dashboard format featuring line graphs, bar charts, column charts, and neatly arranged placeholder text areas.

8. Weather Dashboard for Data Presentation

Share weather data for agricultural presentation topics, environmental studies, or any kind of presentation that requires a highly visual layout for weather forecasting on a single day. Two color themes are available.

9. Social Media Marketing Dashboard Data Presentation Template

Intended for marketing professionals, this dashboard template for data presentation is a tool for presenting data analytics from social media channels. Two slide layouts featuring line graphs and column charts.

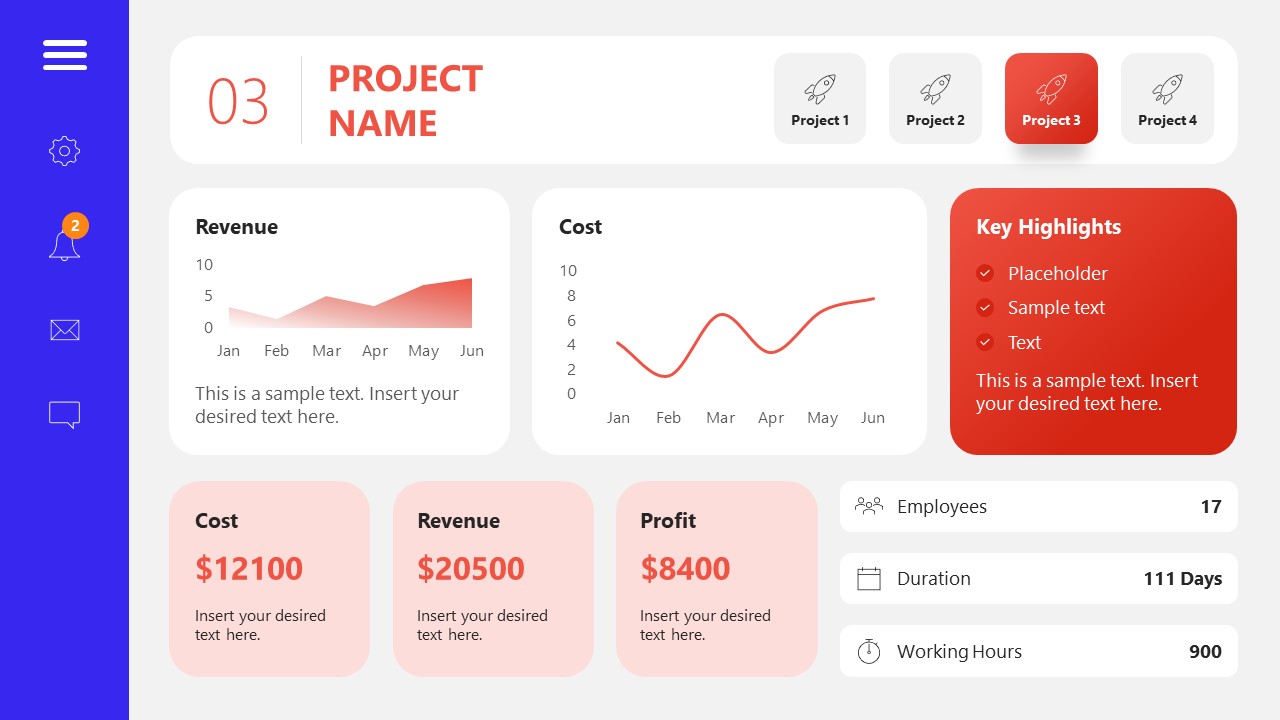

10. Project Management Summary Dashboard Template

A tool crafted for project managers to deliver highly visual reports on a project’s completion, the profits it delivered for the company, and the expenses and time required to execute it. Four different color layouts are available.

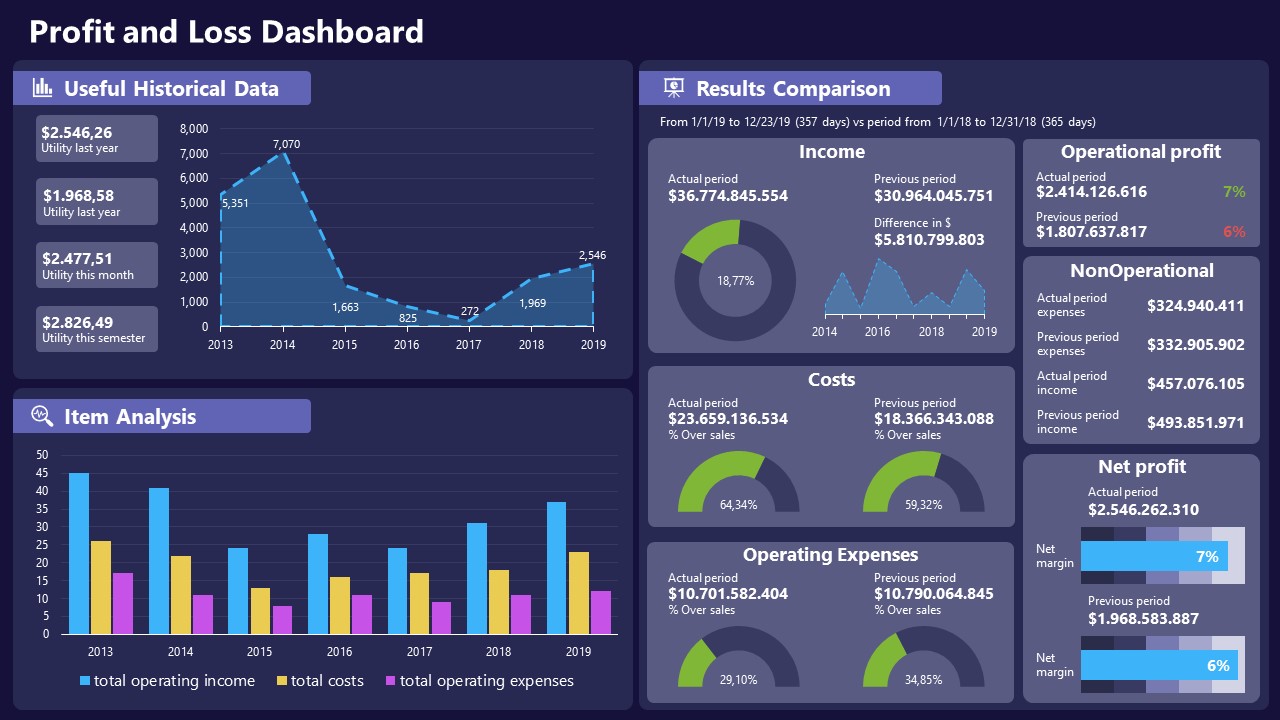

11. Profit & Loss Dashboard for PowerPoint and Google Slides

A must-have for finance professionals. This typical profit & loss dashboard includes progress bars, donut charts, column charts, line graphs, and everything that’s required to deliver a comprehensive report about a company’s financial situation.

Common Mistakes Done in Data Presentation

Overwhelming visuals

One of the mistakes related to using data-presenting methods is including too much data or using overly complex visualizations. They can confuse the audience and dilute the key message.

Inappropriate chart types

Choosing the wrong type of chart for the data at hand can lead to misinterpretation. For example, using a pie chart for data that doesn’t represent parts of a whole is not right.

Lack of context

Failing to provide context or sufficient labeling can make it challenging for the audience to understand the significance of the presented data.

Inconsistency in design

Using inconsistent design elements and color schemes across different visualizations can create confusion and visual disarray.

Failure to provide details

Simply presenting raw data without offering clear insights or takeaways can leave the audience without a meaningful conclusion.

Lack of focus

Not having a clear focus on the key message or main takeaway can result in a presentation that lacks a central theme.

Visual accessibility issues

Overlooking the visual accessibility of charts and graphs can exclude certain audience members who may have difficulty interpreting visual information.

To avoid these mistakes in data presentation, presenters can benefit from using presentation templates. These templates provide a structured framework that ensures consistency, clarity, and an aesthetically pleasing design, enhancing the overall impact of the data communication.

Understanding and choosing data presentation types are pivotal in effective communication. Each method serves a unique purpose, so selecting the appropriate one depends on the nature of the data and the message to be conveyed. The diverse array of presentation types offers versatility in visually representing information, from bar charts showing values to pie charts illustrating proportions.

Using the proper method enhances clarity, engages the audience, and ensures that data sets are not just presented but comprehensively understood. By appreciating the strengths and limitations of different presentation types, communicators can tailor their approach to convey information accurately, developing a deeper connection between data and audience understanding.

If you need a quick method to create a data presentation, check out our AI presentation maker, a tool in which you add the topic, curate the outline, select a design, and let AI do the work for you.

[1] Government of Canada, S.C. (2021). 5 Data Visualization: 5.2 Bar chart. https://www150.statcan.gc.ca/n1/edu/power-pouvoir/ch9/bargraph-diagrammeabarres/5214818-eng.htm

[2] Kosslyn, S.M., 1989. Understanding charts and graphs. Applied cognitive psychology, 3(3), pp.185-225. https://apps.dtic.mil/sti/pdfs/ADA183409.pdf

[3] Creating a Dashboard. https://it.tufts.edu/book/export/html/1870

[4] https://www.goldenwestcollege.edu/research/data-and-more/data-dashboards/index.html

[5] https://www.mit.edu/course/21/21.guide/grf-line.htm

[6] Jadeja, M. and Shah, K., 2015, January. Tree-Map: A Visualization Tool for Large Data. In GSB@ SIGIR (pp. 9-13). https://ceur-ws.org/Vol-1393/gsb15proceedings.pdf#page=15

[7] Heat Maps and Quilt Plots. https://www.publichealth.columbia.edu/research/population-health-methods/heat-maps-and-quilt-plots

[8] EIU QGIS WORKSHOP. https://www.eiu.edu/qgisworkshop/heatmaps.php

[9] About Pie Charts. https://www.mit.edu/~mbarker/formula1/f1help/11-ch-c8.htm

[10] Histograms. https://sites.utexas.edu/sos/guided/descriptive/numericaldd/descriptiven2/histogram/

[11] https://asq.org/quality-resources/scatter-diagram



NCBI Bookshelf. A service of the National Library of Medicine, National Institutes of Health.

National Research Council; Division of Behavioral and Social Sciences and Education; Commission on Behavioral and Social Sciences and Education; Committee on Basic Research in the Behavioral and Social Sciences; Gerstein DR, Luce RD, Smelser NJ, et al., editors. The Behavioral and Social Sciences: Achievements and Opportunities. Washington (DC): National Academies Press (US); 1988.

The Behavioral and Social Sciences: Achievements and Opportunities.


5 Methods of Data Collection, Representation, and Analysis

This chapter concerns research on collecting, representing, and analyzing the data that underlie behavioral and social sciences knowledge. Such research, methodological in character, includes ethnographic and historical approaches, scaling, axiomatic measurement, and statistics, with its important relatives, econometrics and psychometrics. The field can be described as including the self-conscious study of how scientists draw inferences and reach conclusions from observations. Since statistics is the largest and most prominent of methodological approaches and is used by researchers in virtually every discipline, statistical work draws the lion’s share of this chapter’s attention.

Problems of interpreting data arise whenever inherent variation or measurement fluctuations create challenges to understand data or to judge whether observed relationships are significant, durable, or general. Some examples: Is a sharp monthly (or yearly) increase in the rate of juvenile delinquency (or unemployment) in a particular area a matter for alarm, an ordinary periodic or random fluctuation, or the result of a change or quirk in reporting method? Do the temporal patterns seen in such repeated observations reflect a direct causal mechanism, a complex of indirect ones, or just imperfections in the data? Is a decrease in auto injuries an effect of a new seat-belt law? Are the disagreements among people describing some aspect of a subculture too great to draw valid inferences about that aspect of the culture?

Such issues of inference are often closely connected to substantive theory and specific data, and to some extent it is difficult and perhaps misleading to treat methods of data collection, representation, and analysis separately. This report does so, as do all sciences to some extent, because the methods developed often are far more general than the specific problems that originally gave rise to them. There is much transfer of new ideas from one substantive field to another—and to and from fields outside the behavioral and social sciences. Some of the classical methods of statistics arose in studies of astronomical observations, biological variability, and human diversity. The major growth of the classical methods occurred in the twentieth century, greatly stimulated by problems in agriculture and genetics. Some methods for uncovering geometric structures in data, such as multidimensional scaling and factor analysis, originated in research on psychological problems, but have been applied in many other sciences. Some time-series methods were developed originally to deal with economic data, but they are equally applicable to many other kinds of data.

- In economics: large-scale models of the U.S. economy; effects of taxation, money supply, and other government fiscal and monetary policies; theories of duopoly, oligopoly, and rational expectations; economic effects of slavery.

- In psychology: test calibration; the formation of subjective probabilities, their revision in the light of new information, and their use in decision making; psychiatric epidemiology and mental health program evaluation.

- In sociology and other fields: victimization and crime rates; effects of incarceration and sentencing policies; deployment of police and fire-fighting forces; discrimination, antitrust, and regulatory court cases; social networks; population growth and forecasting; and voting behavior.

Even such an abridged listing makes clear that improvements in methodology are valuable across the spectrum of empirical research in the behavioral and social sciences as well as in application to policy questions. Clearly, methodological research serves many different purposes, and there is a need to develop different approaches to serve those different purposes, including exploratory data analysis, scientific inference about hypotheses and population parameters, individual decision making, forecasting what will happen in the event or absence of intervention, and assessing causality from both randomized experiments and observational data.

This discussion of methodological research is divided into three areas: design, representation, and analysis. The efficient design of investigations must take place before data are collected because it involves how much, what kind of, and how data are to be collected. What type of study is feasible: experimental, sample survey, field observation, or other? What variables should be measured, controlled, and randomized? How extensive a subject pool or observational period is appropriate? How can study resources be allocated most effectively among various sites, instruments, and subsamples?

The construction of useful representations of the data involves deciding what kind of formal structure best expresses the underlying qualitative and quantitative concepts that are being used in a given study. For example, cost of living is a simple concept to quantify if it applies to a single individual with unchanging tastes in stable markets (that is, markets offering the same array of goods from year to year at varying prices), but as a national aggregate for millions of households and constantly changing consumer product markets, the cost of living is not easy to specify clearly or measure reliably. Statisticians, economists, sociologists, and other experts have long struggled to make the cost of living a precise yet practicable concept that is also efficient to measure, and they must continually modify it to reflect changing circumstances.
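The simple case described above, a single consumer buying the same goods from year to year at varying prices, can be sketched as a fixed-basket price index. The Laspeyres form used here is a standard textbook choice and is an assumption on our part, not a method named in this chapter; the goods, prices, and quantities are invented for illustration.

```python
def fixed_basket_index(base_prices, current_prices, quantities):
    """Cost of a fixed basket at current prices, relative to base prices.

    Returns an index where 100 means the basket costs the same as in the
    base period (Laspeyres form: base-period quantities are held constant).
    """
    base_cost = sum(base_prices[item] * qty for item, qty in quantities.items())
    current_cost = sum(current_prices[item] * qty for item, qty in quantities.items())
    return 100 * current_cost / base_cost


# Hypothetical basket for one household with unchanging tastes.
quantities = {"bread": 10, "milk": 5}
base_prices = {"bread": 2.0, "milk": 1.0}
current_prices = {"bread": 2.2, "milk": 1.5}

print(fixed_basket_index(base_prices, current_prices, quantities))
```

The difficulty the text points to arises precisely where this sketch stops: once households differ, tastes shift, or goods enter and leave the market, there is no single fixed basket to price.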

Data analysis covers the final step of characterizing and interpreting research findings: Can estimates of the relations between variables be made? Can some conclusion be drawn about correlation, cause and effect, or trends over time? How uncertain are the estimates and conclusions and can that uncertainty be reduced by analyzing the data in a different way? Can computers be used to display complex results graphically for quicker or better understanding or to suggest different ways of proceeding?

Advances in analysis, data representation, and research design feed into and reinforce one another in the course of actual scientific work. The intersections between methodological improvements and empirical advances are an important aspect of the multidisciplinary thrust of progress in the behavioral and social sciences.

Designs for Data Collection

Four broad kinds of research designs are used in the behavioral and social sciences: experimental, survey, comparative, and ethnographic.

Experimental designs, in either the laboratory or field settings, systematically manipulate a few variables while others that may affect the outcome are held constant, randomized, or otherwise controlled. The purpose of randomized experiments is to ensure that only one or a few variables can systematically affect the results, so that causes can be attributed. Survey designs include the collection and analysis of data from censuses, sample surveys, and longitudinal studies and the examination of various relationships among the observed phenomena. Randomization plays a different role here than in experimental designs: it is used to select members of a sample so that the sample is as representative of the whole population as possible. Comparative designs involve the retrieval of evidence that is recorded in the flow of current or past events in different times or places and the interpretation and analysis of this evidence. Ethnographic designs, also known as participant-observation designs, involve a researcher in intensive and direct contact with a group, community, or population being studied, through participation, observation, and extended interviewing.
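The two roles of randomization described above can be illustrated with a brief sketch. It assumes simple random sampling and an even two-group split, which are the most basic variants; the function names are hypothetical, and real studies often use stratified or blocked schemes instead.

```python
import random


def draw_sample(population, n, seed=None):
    """Survey use: select n members so each has the same chance of inclusion."""
    rng = random.Random(seed)
    return rng.sample(list(population), n)


def assign_groups(subjects, seed=None):
    """Experimental use: split subjects at random into treatment and control."""
    rng = random.Random(seed)
    shuffled = list(subjects)
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    return {"treatment": shuffled[:half], "control": shuffled[half:]}


# Hypothetical population of 1,000 numbered households.
households = range(1000)
sample = draw_sample(households, 50, seed=1)
groups = assign_groups(sample, seed=2)
print(len(sample), len(groups["treatment"]), len(groups["control"]))
```

In the first function chance governs who is observed, which supports generalizing to the population; in the second it governs who receives the treatment, which supports attributing differences in outcome to the treatment.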

Experimental Designs

Laboratory Experiments

Laboratory experiments underlie most of the work reported in Chapter 1, significant parts of Chapter 2, and some of the newest lines of research in Chapter 3. Laboratory experiments extend and adapt classical methods of design first developed, for the most part, in the physical and life sciences and agricultural research. Their main feature is the systematic and independent manipulation of a few variables and the strict control or randomization of all other variables that might affect the phenomenon under study. For example, some studies of animal motivation involve the systematic manipulation of amounts of food and feeding schedules while other factors that may also affect motivation, such as body weight, deprivation, and so on, are held constant. New designs are currently coming into play largely because of new analytic and computational methods (discussed below, in “Advances in Statistical Inference and Analysis”).

Two examples of empirically important issues that demonstrate the need for broadening classical experimental approaches are open-ended responses and lack of independence of successive experimental trials. The first concerns the design of research protocols that do not require the strict segregation of the events of an experiment into well-defined trials, but permit a subject to respond at will. These methods are needed when what is of interest is how the respondent chooses to allocate behavior in real time and across continuously available alternatives. Such empirical methods have long been used, but they can generate very subtle and difficult problems in experimental design and subsequent analysis. As theories of allocative behavior of all sorts become more sophisticated and precise, the experimental requirements become more demanding, so the need to better understand and solve this range of design issues is an outstanding challenge to methodological ingenuity.

The second issue arises in repeated-trial designs when the behavior on successive trials, even if it does not exhibit a secular trend (such as a learning curve), is markedly influenced by what has happened in the preceding trial or trials. The more naturalistic the experiment and the more sensitive the measurements taken, the more likely it is that such effects will occur. But such sequential dependencies in observations cause a number of important conceptual and technical problems in summarizing the data and in testing analytical models, which are not yet completely understood. In the absence of clear solutions, such effects are sometimes ignored by investigators, simplifying the data analysis but leaving residues of skepticism about the reliability and significance of the experimental results. With continuing development of sensitive measures in repeated-trial designs, there is a growing need for more advanced concepts and methods for dealing with experimental results that may be influenced by sequential dependencies.
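A first diagnostic for such sequential dependencies is the lag-1 autocorrelation of the trial-by-trial responses. The sketch below is one simple screening statistic chosen for illustration, not a method prescribed in this chapter, and it does not resolve the deeper modeling problems described above. The function name is hypothetical.

```python
from statistics import fmean


def lag1_autocorrelation(series):
    """Correlation between each trial's response and the next trial's.

    Values near 0 are consistent with independent trials; values far from 0
    suggest that a response depends on the preceding trial.
    """
    if len(series) < 2:
        raise ValueError("need at least two trials")
    mean = fmean(series)
    deviations = [x - mean for x in series]
    denominator = sum(d * d for d in deviations)
    if denominator == 0:
        raise ValueError("series is constant")
    numerator = sum(a * b for a, b in zip(deviations, deviations[1:]))
    return numerator / denominator


# A strictly alternating response pattern: each trial reverses the previous one.
print(lag1_autocorrelation([1, -1, 1, -1, 1, -1]))
```

A strongly negative value, as in the alternating pattern, or a strongly positive one indicates that analyses assuming independent trials would misstate the uncertainty in the results.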

Randomized Field Experiments

The state of the art in randomized field experiments, in which different policies or procedures are tested in controlled trials under real conditions, has advanced dramatically over the past two decades. Problems that were once considered major methodological obstacles—such as implementing randomized field assignment to treatment and control groups and protecting the randomization procedure from corruption—have been largely overcome. While state-of-the-art standards are not achieved in every field experiment, the commitment to reaching them is rising steadily, not only among researchers but also among customer agencies and sponsors.

The health insurance experiment described in Chapter 2 is an example of a major randomized field experiment that has had and will continue to have important policy reverberations in the design of health care financing. Field experiments with the negative income tax (guaranteed minimum income) conducted in the 1970s were significant in policy debates, even before their completion, and provided the most solid evidence available on how tax-based income support programs and marginal tax rates can affect the work incentives and family structures of the poor. Important field experiments have also been carried out on alternative strategies for the prevention of delinquency and other criminal behavior, reform of court procedures, rehabilitative programs in mental health, family planning, and special educational programs, among other areas.

In planning field experiments, much hinges on the definition and design of the experimental cells, the particular combinations needed of treatment and control conditions for each set of demographic or other client sample characteristics, including specification of the minimum number of cases needed in each cell to test for the presence of effects. Considerations of statistical power, client availability, and the theoretical structure of the inquiry enter into such specifications. Current important methodological thresholds are to find better ways of predicting recruitment and attrition patterns in the sample, of designing experiments that will be statistically robust in the face of problematic sample recruitment or excessive attrition, and of ensuring appropriate acquisition and analysis of data on the attrition component of the sample.
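The minimum number of cases per cell can be approximated with a standard power calculation. The sketch below assumes the simplest situation, a two-sided comparison of two group means with equal cell sizes and a normal approximation, and then inflates the result for expected attrition. These simplifications and the function names are ours; an actual field experiment would usually require a design-specific calculation.

```python
import math
from statistics import NormalDist


def cases_per_cell(effect_size, alpha=0.05, power=0.80):
    """Approximate cases needed in each of two cells to detect an effect.

    effect_size is the difference in means divided by the common standard
    deviation. Uses the normal approximation n = 2 * (z_a + z_b)^2 / d^2.
    """
    if effect_size <= 0:
        raise ValueError("effect_size must be positive")
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_power = NormalDist().inv_cdf(power)
    return math.ceil(2 * (z_alpha + z_power) ** 2 / effect_size ** 2)


def inflate_for_attrition(n, attrition_rate):
    """Cases to recruit so that about n remain after the expected attrition."""
    if not 0 <= attrition_rate < 1:
        raise ValueError("attrition_rate must be in [0, 1)")
    return math.ceil(n / (1 - attrition_rate))


n = cases_per_cell(0.5)
print(n, inflate_for_attrition(n, 0.2))
```

The calculation makes the trade-off visible: halving the detectable effect size roughly quadruples the required cell size, and every additional treatment-by-characteristic cell multiplies the total recruitment burden.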

Also of major significance are improvements in integrating detailed process and outcome measurements in field experiments. Conducting research on program effects under field conditions requires continual monitoring to determine exactly what is being done (the process) and how it corresponds to what was projected at the outset. Relatively unintrusive, inexpensive, and effective implementation measures are of great interest. There is, in parallel, a growing emphasis on designing experiments to evaluate distinct program components in contrast to summary measures of net program effects.

Finally, there is an important opportunity now for further theoretical work to model organizational processes in social settings and to design and select outcome variables that, in the relatively short time of most field experiments, can predict longer-term effects: For example, in job-training programs, what are the effects on the community (role models, morale, referral networks) or on individual skills, motives, or knowledge levels that are likely to translate into sustained changes in career paths and income levels?

Survey Designs

Many people have opinions about how societal mores, economic conditions, and social programs shape lives and encourage or discourage various kinds of behavior. People generalize from their own cases, and from the groups to which they belong, about such matters as how much it costs to raise a child, the extent to which unemployment contributes to divorce, and so on. In fact, however, effects vary so much from one group to another that homespun generalizations are of little use. Fortunately, behavioral and social scientists have been able to bridge the gaps between personal perspectives and collective realities by means of survey research. In particular, governmental information systems include volumes of extremely valuable survey data, and the facility of modern computers to store, disseminate, and analyze such data has significantly improved empirical tests and led to new understandings of social processes.

Within this category of research designs, two major types are distinguished: repeated cross-sectional surveys and longitudinal panel surveys. In addition, and cross-cutting these types, there is a major effort under way to improve and refine the quality of survey data by investigating features of human memory and of question formation that affect survey response.

Repeated cross-sectional designs can either attempt to measure an entire population (as does the oldest U.S. example, the national decennial census) or rest on samples drawn from a population. The general principle is to take independent samples at two or more times, measuring the variables of interest, such as income levels, housing plans, or opinions about public affairs, in the same way. The General Social Survey, collected by the National Opinion Research Center with National Science Foundation support, is a repeated cross-sectional database that was begun in 1972. One methodological question of particular salience in such data is how to adjust for nonresponses and “don’t know” responses. Another is how to deal with self-selection bias. For example, to compare the earnings of women and men in the labor force, it would be mistaken to first assume that the two samples of labor-force participants are randomly selected from the larger populations of men and women; instead, one has to consider and incorporate in the analysis the factors that determine who is in the labor force.

In longitudinal panels, a sample is drawn at one point in time and the relevant variables are measured at this and subsequent times for the same people. In more complex versions, some fraction of each panel may be replaced or added to periodically, such as expanding the sample to include households formed by the children of the original sample. An example of panel data developed in this way is the Panel Study of Income Dynamics (PSID), conducted by the University of Michigan since 1968 (discussed in Chapter 3).

Comparing the fertility or income of different people in different circumstances at the same time to find correlations always leaves a large proportion of the variability unexplained, but common sense suggests that much of the unexplained variability is actually explicable. There are systematic reasons for individual outcomes in each person’s past achievements, in parental models, upbringing, and earlier sequences of experiences. Unfortunately, asking people about the past is not particularly helpful: people remake their views of the past to rationalize the present and so retrospective data are often of uncertain validity. In contrast, generation-long longitudinal data allow readings on the sequence of past circumstances uncolored by later outcomes. Such data are uniquely useful for studying the causes and consequences of naturally occurring decisions and transitions. Thus, as longitudinal studies continue, quantitative analysis is becoming feasible about such questions as: How are the decisions of individuals affected by parental experience? Which aspects of early decisions constrain later opportunities? And how does detailed background experience leave its imprint? Studies like the two-decade-long PSID are bringing within grasp a complete generational cycle of detailed data on fertility, work life, household structure, and income.

Advances in Longitudinal Designs

Large-scale longitudinal data collection projects are uniquely valuable as vehicles for testing and improving survey research methodology. In ways that lie beyond the scope of a cross-sectional survey, longitudinal studies can sometimes be designed—without significant detriment to their substantive interests—to facilitate the evaluation and upgrading of data quality; the analysis of relative costs and effectiveness of alternative techniques of inquiry; and the standardization or coordination of solutions to problems of method, concept, and measurement across different research domains.

Some areas of methodological improvement include discoveries about the impact of interview mode on response (mail, telephone, face-to-face); the effects of nonresponse on the representativeness of a sample (due to respondents’ refusal or interviewers’ failure to contact); the effects on behavior of continued participation over time in a sample survey; the value of alternative methods of adjusting for nonresponse and incomplete observations (such as imputation of missing data, variable case weighting); the impact on response of specifying different recall periods, varying the intervals between interviews, or changing the length of interviews; and the comparison and calibration of results obtained by longitudinal surveys, randomized field experiments, laboratory studies, one-time surveys, and administrative records.
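Case weighting as an adjustment for nonresponse can be illustrated with a small sketch. It assumes a post-stratification scheme in which each respondent group is weighted by the ratio of its known population share to its share among respondents. This is one common variant among several, and the group labels and figures are hypothetical.

```python
def poststratification_weights(sample_counts, population_shares):
    """Weight per group: population share divided by share among respondents."""
    total = sum(sample_counts.values())
    return {
        group: population_shares[group] / (count / total)
        for group, count in sample_counts.items()
    }


def weighted_mean(group_means, sample_counts, weights):
    """Mean across respondents after applying each group's case weight."""
    numerator = sum(
        weights[g] * sample_counts[g] * group_means[g] for g in sample_counts
    )
    denominator = sum(weights[g] * sample_counts[g] for g in sample_counts)
    return numerator / denominator


# Hypothetical survey: group A is half the population but only 30 percent of
# respondents, so its members are weighted up and group B's are weighted down.
counts = {"A": 30, "B": 70}
shares = {"A": 0.5, "B": 0.5}
means = {"A": 10.0, "B": 20.0}

weights = poststratification_weights(counts, shares)
print(weighted_mean(means, counts, weights))
```

In this example the unweighted respondent mean would be 17, while the weighted estimate moves to the midpoint of the two group means. The adjustment corrects only for imbalance across the chosen groups; it cannot repair differences between respondents and nonrespondents within a group.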

It should be especially noted that incorporating improvements in methodology and data quality has been and will no doubt continue to be crucial to the growing success of longitudinal studies. Panel designs are intrinsically more vulnerable than other designs to statistical biases due to cumulative item non-response, sample attrition, time-in-sample effects, and error margins in repeated measures, all of which may produce exaggerated estimates of change. Over time, a panel that was initially representative may become much less representative of a population, not only because of attrition in the sample, but also because of changes in immigration patterns, age structure, and the like. Longitudinal studies are also subject to changes in scientific and societal contexts that may create uncontrolled drifts over time in the meaning of nominally stable questions or concepts as well as in the underlying behavior. Also, a natural tendency to expand over time the range of topics and thus the interview lengths, which increases the burdens on respondents, may lead to deterioration of data quality or relevance. Careful methodological research to understand and overcome these problems has been done, and continued work as a component of new longitudinal studies is certain to advance the overall state of the art.

Longitudinal studies are sometimes pressed for evidence they are not designed to produce: for example, in important public policy questions concerning the impact of government programs in such areas as health promotion, disease prevention, or criminal justice. By using research designs that combine field experiments (with randomized assignment to program and control conditions) and longitudinal surveys, one can capitalize on the strongest merits of each: the experimental component provides stronger evidence for causal statements that are critical for evaluating programs and for illuminating some fundamental theories; the longitudinal component helps in the estimation of long-term program effects and their attenuation. Coupling experiments to ongoing longitudinal studies is not often feasible, given the multiple constraints of not disrupting the survey, developing all the complicated arrangements that go into a large-scale field experiment, and having the populations of interest overlap in useful ways. Yet opportunities to join field experiments to surveys are of great importance. Coupled studies can produce vital knowledge about the empirical conditions under which the results of longitudinal surveys turn out to be similar to, or divergent from, those produced by randomized field experiments. A pattern of divergence and similarity has begun to emerge in coupled studies; additional cases are needed to understand why some naturally occurring social processes and longitudinal design features seem to approximate formal random allocation and others do not. The methodological implications of such new knowledge go well beyond program evaluation and survey research. These findings bear directly on the confidence scientists and others can have in conclusions from observational studies of complex behavioral and social processes, particularly ones that cannot be controlled or simulated within the confines of a laboratory environment.

Memory and the Framing of Questions

A very important opportunity to improve survey methods lies in the reduction of nonsampling error due to questionnaire context, phrasing of questions, and, generally, the semantic and social-psychological aspects of surveys. Survey data are particularly affected by the fallibility of human memory and the sensitivity of respondents to the framework in which a question is asked. This sensitivity is especially strong for certain types of attitudinal and opinion questions. Efforts are now being made to bring survey specialists into closer contact with researchers working on memory function, knowledge representation, and language in order to uncover and reduce this kind of error.

Memory for events is often inaccurate, biased toward what respondents believe to be true—or should be true—about the world. In many cases in which data are based on recollection, improvements can be achieved by shifting to techniques of structured interviewing and calibrated forms of memory elicitation, such as specifying recent, brief time periods (for example, in the last seven days) within which respondents recall certain types of events with acceptable accuracy.

- “Taking things altogether, how would you describe your marriage? Would you say that your marriage is very happy, pretty happy, or not too happy?”

- “Taken altogether how would you say things are these days—would you say you are very happy, pretty happy, or not too happy?”

Presenting this sequence in both directions on different forms showed that the order affected answers to the general happiness question but did not change the marital happiness question: responses to the specific issue swayed subsequent responses to the general one, but not vice versa. The explanations for and implications of such order effects on the many kinds of questions and sequences that can be used are not simple matters. Further experimentation on the design of survey instruments promises not only to improve the accuracy and reliability of survey research, but also to advance understanding of how people think about and evaluate their behavior from day to day.

Comparative Designs

Both experiments and surveys involve interventions or questions by the scientist, who then records and analyzes the responses. In contrast, many bodies of social and behavioral data of considerable value are originally derived from records or collections that have accumulated for various nonscientific reasons, quite often administrative in nature, in firms, churches, military organizations, and governments at all levels. Data of this kind can sometimes be subjected to careful scrutiny, summary, and inquiry by historians and social scientists, and statistical methods have increasingly been used to develop and evaluate inferences drawn from such data. Some of the main comparative approaches are cross-national aggregate comparisons, selective comparison of a limited number of cases, and historical case studies.

Among the more striking problems facing the scientist using such data are the vast differences in what has been recorded by different agencies whose behavior is being compared (this is especially true for parallel agencies in different nations), the highly unrepresentative or idiosyncratic sampling that can occur in the collection of such data, and the selective preservation and destruction of records. Means to overcome these problems form a substantial methodological research agenda in comparative research. An example of the method of cross-national aggregative comparisons is found in investigations by political scientists and sociologists of the factors that underlie differences in the vitality of institutions of political democracy in different societies. Some investigators have stressed the existence of a large middle class, others the level of education of a population, and still others the development of systems of mass communication. In cross-national aggregate comparisons, a large number of nations are arrayed according to some measures of political democracy and then attempts are made to ascertain the strength of correlations between these and the other variables. In this line of analysis it is possible to use a variety of statistical cluster and regression techniques to isolate and assess the possible impact of certain variables on the institutions under study. While this kind of research is cross-sectional in character, statements about historical processes are often invoked to explain the correlations.

More limited selective comparisons, applied by many of the classic theorists, involve asking similar kinds of questions but over a smaller range of societies. Why did democracy develop in such different ways in America, France, and England? Why did northeastern Europe develop rational bourgeois capitalism, in contrast to the Mediterranean and Asian nations? Modern scholars have turned their attention to explaining, for example, differences among types of fascism between the two World Wars, and similarities and differences among modern state welfare systems, using these comparisons to unravel the salient causes. The questions asked in these instances are inevitably historical ones.

Historical case studies involve only one nation or region, and so they may not be geographically comparative. However, insofar as they involve tracing the transformation of a society’s major institutions and the role of its main shaping events, they involve a comparison of different periods of a nation’s or a region’s history. The goal of such comparisons is to give a systematic account of the relevant differences. Sometimes, particularly with respect to the ancient societies, the historical record is very sparse, and the methods of history and archaeology mesh in the reconstruction of complex social arrangements and patterns of change on the basis of few fragments.

Like all research designs, comparative ones have distinctive vulnerabilities and advantages. One of the main advantages of using comparative designs is that they greatly expand the range of data, as well as the amount of variation in those data, for study. Consequently, they allow for more encompassing explanations and theories that can relate highly divergent outcomes to one another in the same framework. They also contribute to reducing any cultural biases or tendencies toward parochialism among scientists studying common human phenomena.

One main vulnerability in such designs arises from the problem of achieving comparability. Because comparative study involves studying societies and other units that are dissimilar from one another, the phenomena under study usually occur in very different contexts—so different that in some cases what is called an event in one society cannot really be regarded as the same type of event in another. For example, a vote in a Western democracy is different from a vote in an Eastern bloc country, and a voluntary vote in the United States means something different from a compulsory vote in Australia. These circumstances make for interpretive difficulties in comparing aggregate rates of voter turnout in different countries.

The problem of achieving comparability appears in historical analysis as well. For example, changes in laws and enforcement and recording procedures over time change the definition of what is and what is not a crime, and for that reason it is difficult to compare the crime rates over time. Comparative researchers struggle with this problem continually, working to fashion equivalent measures; some have suggested the use of different measures (voting, letters to the editor, street demonstration) in different societies for common variables (political participation), to try to take contextual factors into account and to achieve truer comparability.

A second vulnerability is controlling variation. Traditional experiments make conscious and elaborate efforts to control the variation of some factors and thereby assess the causal significance of others. In surveys as well as experiments, statistical methods are used to control sources of variation and assess suspected causal significance. In comparative and historical designs, this kind of control is often difficult to attain because the sources of variation are many and the number of cases few. Scientists have made efforts to approximate such control in these cases of “many variables, small N.” One is the method of paired comparisons. If an investigator isolates 15 American cities in which racial violence has been recurrent in the past 30 years, for example, it is helpful to match them with 15 cities of similar population size, geographical region, and size of minorities—such characteristics are controls—and then search for systematic differences between the two sets of cities. Another method is to select, for comparative purposes, a sample of societies that resemble one another in certain critical ways, such as size, common language, and common level of development, thus attempting to hold these factors roughly constant, and then seeking explanations among other factors in which the sampled societies differ from one another.
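The method of paired comparisons described above can be sketched in a few lines. This is only one possible reading of it, under stated assumptions: the `City` record, the `distance` scoring rule, and the greedy nearest-match strategy are all hypothetical choices made for illustration, and the cities and figures are invented placeholders. Each case city is matched to the most similar unused control city in the same region, using population size and minority share as the controls.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class City:
    name: str
    region: str
    population: int
    minority_share: float  # fraction of the population, 0.0 to 1.0


def distance(a, b):
    """Hypothetical dissimilarity score: relative population gap plus share gap."""
    pop_gap = abs(a.population - b.population) / max(a.population, b.population)
    share_gap = abs(a.minority_share - b.minority_share)
    return pop_gap + share_gap


def match_pairs(cases, pool):
    """Greedily pair each case city with its closest unused same-region control."""
    available = list(pool)
    pairs = []
    for case in cases:
        candidates = [c for c in available if c.region == case.region]
        if not candidates:
            continue  # no comparable control exists; leave the case unmatched
        best = min(candidates, key=lambda c: distance(case, c))
        available.remove(best)  # match without replacement
        pairs.append((case, best))
    return pairs


# Invented placeholder cities (NOT real data).
cases = [
    City("Case-1", "North", 500_000, 0.30),
    City("Case-2", "South", 200_000, 0.15),
]
pool = [
    City("Ctrl-1", "North", 480_000, 0.28),
    City("Ctrl-2", "North", 900_000, 0.10),
    City("Ctrl-3", "South", 210_000, 0.14),
    City("Ctrl-4", "West", 500_000, 0.30),
]

pairs = match_pairs(cases, pool)
for case, control in pairs:
    print(f"{case.name} matched with {control.name}")
```

Once the pairs are formed, the investigator searches for systematic differences between the two sets on factors that were not used for matching. Greedy matching is simple but order-dependent, so an actual study might prefer a matching procedure that optimizes over all pairs at once.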

Ethnographic Designs

Traditionally identified with anthropology, ethnographic research designs are playing increasingly significant roles in most of the behavioral and social sciences. The core of this methodology is participant-observation, in which a researcher spends an extended period of time with the group under study, ideally mastering the local language, dialect, or special vocabulary, and participating in as many activities of the group as possible. This kind of participant-observation is normally coupled with extensive open-ended interviewing, in which people are asked to explain in depth the rules, norms, practices, and beliefs through which (from their point of view) they conduct their lives. A principal aim of ethnographic study is to discover the premises on which those rules, norms, practices, and beliefs are built.

The use of ethnographic designs by anthropologists has contributed significantly to the building of knowledge about social and cultural variation. And while these designs continue to center on certain long-standing features—extensive face-to-face experience in the community, linguistic competence, participation, and open-ended interviewing—there are newer trends in ethnographic work. One major trend concerns its scale. Ethnographic methods were originally developed largely for studying small-scale groupings known variously as village, folk, primitive, preliterate, or simple societies. Over the decades, these methods have increasingly been applied to the study of small groups and networks within modern (urban, industrial, complex) society, including the contemporary United States. The typical subjects of ethnographic study in modern society are small groups or relatively small social networks, such as outpatient clinics, medical schools, religious cults and churches, ethnically distinctive urban neighborhoods, corporate offices and factories, and government bureaus and legislatures.

As anthropologists moved into the study of modern societies, researchers in other disciplines (particularly sociology, psychology, and political science) began using ethnographic methods to enrich and focus their own insights and findings. At the same time, studies of large-scale structures and processes have been aided by the use of ethnographic methods, since most large-scale changes work their way into the fabric of community, neighborhood, and family, affecting the daily lives of people. Ethnographers have studied, for example, the impact of new industry and new forms of labor in “backward” regions; the impact of state-level birth control policies on ethnic groups; and the impact on residents in a region of building a dam or establishing a nuclear waste dump. Ethnographic methods have also been used to study a number of social processes that lend themselves to their particular techniques of observation and interview, such as the formation of class and racial identities, bureaucratic behavior, legislative coalitions and outcomes, and the formation and shifting of consumer tastes.

Advances in structured interviewing (see above) have proven especially powerful in the study of culture. Techniques for understanding kinship systems, concepts of disease, color terminologies, ethnobotany, and ethnozoology have been radically transformed and strengthened by coupling new interviewing methods with modern measurement and scaling techniques (see below). These techniques have made possible more precise comparisons among cultures and identification of the most competent and expert persons within a culture. The next step is to extend these methods to study the ways in which networks of propositions (such as boys like sports, girls like babies) are organized to form belief systems. Much evidence suggests that people typically represent the world around them by means of relatively complex cognitive models that involve interlocking propositions. The techniques of scaling have been used to develop models of how people categorize objects, and they have great potential for further development, to analyze data pertaining to cultural propositions.

Ideological Systems

Perhaps the most fruitful area for the application of ethnographic methods in recent years has been the systematic study of ideologies in modern society. Earlier studies of ideology were in small-scale societies that were rather homogeneous. In these studies researchers could report on a single culture, a uniform system of beliefs and values for the society as a whole. Modern societies are much more diverse both in origins and number of subcultures, related to different regions, communities, occupations, or ethnic groups. Yet these subcultures and ideologies share certain underlying assumptions or at least must find some accommodation with the dominant value and belief systems in the society.

The challenge is to incorporate this greater complexity of structure and process into systematic descriptions and interpretations. One line of work carried out by researchers has tried to track the ways in which ideologies are created, transmitted, and shared among large populations that have traditionally lacked the social mobility and communications technologies of the West. This work has concentrated on large-scale civilizations such as China, India, and Central America. Gradually, the focus has generalized into a concern with the relationship between the great traditions—the central lines of cosmopolitan Confucian, Hindu, or Mayan culture, including aesthetic standards, irrigation technologies, medical systems, cosmologies and calendars, legal codes, poetic genres, and religious doctrines and rites—and the little traditions, those identified with rural, peasant communities. How are the ideological doctrines and cultural values of the urban elites, the great traditions, transmitted to local communities? How are the little traditions, the ideas from the more isolated, less literate, and politically weaker groups in society, transmitted to the elites?

India and southern Asia have been fruitful areas for ethnographic research on these questions. The great Hindu tradition was present in virtually all local contexts through the presence of high-caste individuals in every community. It operated as a pervasive standard of value for all members of society, even in the face of strong little traditions. The situation is surprisingly akin to that of modern, industrialized societies. The central research questions are the degree and the nature of penetration of dominant ideology, even in groups that appear marginal and subordinate and have no strong interest in sharing the dominant value system. In this connection the lowest and poorest occupational caste—the untouchables—serves as an ultimate test of the power of ideology and cultural beliefs to unify complex hierarchical social systems.

Historical Reconstruction

Another current trend in ethnographic methods is their convergence with archival methods. One joining point is the application of descriptive and interpretative procedures used by ethnographers to reconstruct the cultures that created historical documents, diaries, and other records, to interview history, so to speak. For example, a revealing study showed how the Inquisition in the Italian countryside between the 1570s and 1640s gradually worked subtle changes in an ancient fertility cult in peasant communities; the peasant beliefs and rituals assimilated many elements of witchcraft after learning them from their persecutors. A good deal of social history, particularly that of the family, has drawn on discoveries made in the ethnographic study of primitive societies. As described in Chapter 4, this particular line of inquiry rests on a marriage of ethnographic, archival, and demographic approaches.

Other lines of ethnographic work have focused on the historical dimensions of nonliterate societies. A strikingly successful example of this kind of effort is a study of head-hunting. By combining an interpretation of local oral tradition with the fragmentary observations that were made by outside observers (such as missionaries, traders, colonial officials), historical fluctuations in the rate and significance of head-hunting were shown to be partly in response to such international forces as the Great Depression and World War II. Researchers are also investigating the ways in which various groups in contemporary societies invent versions of traditions that may or may not reflect the actual history of the group. This process has been observed among elites seeking political and cultural legitimation and among hard-pressed minorities (for example, the Basques in Spain, the Welsh in Great Britain) seeking roots and political mobilization in a larger society.

Ethnography is a powerful method to record, describe, and interpret the system of meanings held by groups and to discover how those meanings affect the lives of group members. It is a method well adapted to the study of situations in which people interact with one another and the researcher can interact with them as well, so that information about meanings can be evoked and observed. Ethnography is especially suited to exploration and elucidation of unsuspected connections; ideally, it is used in combination with other methods—experimental, survey, or comparative—to establish with precision the relative strengths and weaknesses of such connections. By the same token, experimental, survey, and comparative methods frequently yield connections, the meaning of which is unknown; ethnographic methods are a valuable way to determine them.

- Models for Representing Phenomena