
Data management in clinical research: An overview

Binny Krishnankutty, Shantala Bellary, Naveen B. R. Kumar, Latha S. Moodahadu


Correspondence to: Dr. Binny Krishnankutty, E-mail: [email protected]

Received 2011 Mar 7; Revised 2011 Nov 8; Accepted 2012 Jan 1.

This is an open-access article distributed under the terms of the Creative Commons Attribution-Noncommercial-Share Alike 3.0 Unported, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

Clinical Data Management (CDM) is a critical phase in clinical research, which leads to generation of high-quality, reliable, and statistically sound data from clinical trials. This helps to produce a drastic reduction in time from drug development to marketing. Team members of CDM are actively involved in all stages of clinical trial right from inception to completion. They should have adequate process knowledge that helps maintain the quality standards of CDM processes. Various procedures in CDM including Case Report Form (CRF) designing, CRF annotation, database designing, data-entry, data validation, discrepancy management, medical coding, data extraction, and database locking are assessed for quality at regular intervals during a trial. In the present scenario, there is an increased demand to improve the CDM standards to meet the regulatory requirements and stay ahead of the competition by means of faster commercialization of product. With the implementation of regulatory compliant data management tools, CDM team can meet these demands. Additionally, it is becoming mandatory for companies to submit the data electronically. CDM professionals should meet appropriate expectations and set standards for data quality and also have a drive to adapt to the rapidly changing technology. This article highlights the processes involved and provides the reader an overview of the tools and standards adopted as well as the roles and responsibilities in CDM.

KEY WORDS: Clinical data interchange standards consortium, clinical data management systems, data management, e-CRF, good clinical data management practices, validation

Introduction

A clinical trial is intended to answer a research question by generating data that prove or disprove a hypothesis. The quality of the data generated plays an important role in the outcome of the study. Research students often ask, “What is Clinical Data Management (CDM) and what is its significance?” Clinical data management is a relevant and important part of a clinical trial. All researchers try their hand at CDM activities during their research work, knowingly or unknowingly. Without identifying the technical phases, we undertake some of the processes involved in CDM during our research work. This article highlights the processes involved in CDM and gives the reader an overview of how data are managed in clinical trials.

CDM is the process of collection, cleaning, and management of subject data in compliance with regulatory standards. The primary objective of CDM processes is to provide high-quality data by keeping the number of errors and missing data as low as possible and gather maximum data for analysis.[ 1 ] To meet this objective, best practices are adopted to ensure that data are complete, reliable, and processed correctly. This has been facilitated by the use of software applications that maintain an audit trail and provide easy identification and resolution of data discrepancies. Sophisticated innovations[ 2 ] have enabled CDM to handle large trials and ensure the data quality even in complex trials.

How do we define ‘high-quality’ data? High-quality data should be absolutely accurate and suitable for statistical analysis. These should meet the protocol-specified parameters and comply with the protocol requirements. This implies that in case of a deviation, not meeting the protocol-specifications, we may think of excluding the patient from the final database. It should be borne in mind that in some situations, regulatory authorities may be interested in looking at such data. Similarly, missing data is also a matter of concern for clinical researchers. High-quality data should have minimal or no misses. But most importantly, high-quality data should possess only an arbitrarily ‘acceptable level of variation’ that would not affect the conclusion of the study on statistical analysis. The data should also meet the applicable regulatory requirements specified for data quality.

Tools for CDM

Many software tools are available for data management, and these are called Clinical Data Management Systems (CDMS). In multicentric trials, a CDMS has become essential to handle the huge amount of data. Most of the CDMS used in pharmaceutical companies are commercial, but a few open source tools are available as well. Commonly used CDM tools are ORACLE CLINICAL, CLINTRIAL, MACRO, RAVE, and eClinical Suite. In terms of functionality, these software tools are more or less similar and there is no significant advantage of one system over the other. These software tools are expensive and need sophisticated Information Technology infrastructure to function. Additionally, some multinational pharmaceutical giants use custom-made CDMS tools to suit their operational needs and procedures. Among the open source tools, the most prominent ones are OpenClinica, openCDMS, TrialDB, and PhOSCo. These CDM software are available free of cost and are as good as their commercial counterparts in terms of functionality. These open source software can be downloaded from their respective websites.

In regulatory submission studies, maintaining an audit trail of data management activities is of paramount importance. These CDM tools ensure the audit trail and help in the management of discrepancies. According to the roles and responsibilities (explained later), multiple user IDs can be created with access limitation to data entry, medical coding, database designing, or quality check. This ensures that each user can access only the respective functionalities allotted to that user ID and cannot make any other change in the database. For responsibilities where changes are permitted to be made in the data, the software will record the change made, the user ID that made the change and the time and date of change, for audit purposes (audit trail). During a regulatory audit, the auditors can verify the discrepancy management process; the changes made and can confirm that no unauthorized or false changes were made.
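
The access control and audit trail described above can be illustrated with a minimal sketch. It is not how any particular CDMS is implemented: the role names, the `ROLE_PERMISSIONS` mapping, and the `AuditedDatabase` class are all hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone

# Hypothetical role-to-permission mapping; real CDMS products define their own.
ROLE_PERMISSIONS = {
    "data_entry": {"enter_data"},
    "medical_coder": {"code_terms"},
    "data_manager": {"enter_data", "code_terms"},
}


@dataclass
class AuditedDatabase:
    """Toy data store that records who changed what, and when."""
    values: dict = field(default_factory=dict)
    audit_trail: list = field(default_factory=list)

    def set_value(self, user_id, role, field_name, new_value, reason=""):
        # Refuse the change if the role lacks the data-entry permission.
        if "enter_data" not in ROLE_PERMISSIONS.get(role, set()):
            raise PermissionError(f"{user_id} ({role}) may not change data")
        # Record the change before applying it (the audit trail entry).
        self.audit_trail.append({
            "user_id": user_id,
            "field": field_name,
            "old_value": self.values.get(field_name),
            "new_value": new_value,
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "reason": reason,
        })
        self.values[field_name] = new_value


db = AuditedDatabase()
db.set_value("user01", "data_entry", "WEIGHT", 70.25)
db.set_value("user01", "data_entry", "WEIGHT", 72.25, reason="transcription error")
```

Each entry in `db.audit_trail` keeps the old value, the new value, the user ID, and a timestamp, which is the kind of information an auditor would need to confirm that no unauthorized change was made.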

Regulations, Guidelines, and Standards in CDM

Akin to other areas in clinical research, CDM has guidelines and standards that must be followed. Since the pharmaceutical industry relies on electronically captured data for the evaluation of medicines, there is a need to follow good practices in CDM and maintain standards in electronic data capture. These electronic records have to comply with Title 21 of the US Code of Federal Regulations (CFR), Part 11 (21 CFR Part 11). This regulation is applicable to records in electronic format that are created, modified, maintained, archived, retrieved, or transmitted. It demands the use of validated systems to ensure accuracy, reliability, and consistency of data, with secure, computer-generated, time-stamped audit trails that independently record the date and time of operator entries and actions that create, modify, or delete electronic records.[ 3 ] Adequate procedures and controls should be put in place to ensure the integrity, authenticity, and confidentiality of data. If data have to be submitted to regulatory authorities, they should be entered and processed in 21 CFR Part 11-compliant systems. Most of the available CDM systems are compliant, and pharmaceutical companies as well as contract research organizations ensure this compliance.

Society for Clinical Data Management (SCDM) publishes the Good Clinical Data Management Practices (GCDMP) guidelines, a document providing the standards of good practice within CDM. GCDMP was initially published in September 2000 and has undergone several revisions thereafter. The July 2009 version is the currently followed GCDMP document. GCDMP provides guidance on the accepted practices in CDM that are consistent with regulatory practices. Addressed in 20 chapters, it covers the CDM process by highlighting the minimum standards and best practices.

Clinical Data Interchange Standards Consortium (CDISC), a multidisciplinary non-profit organization, has developed standards to support acquisition, exchange, submission, and archival of clinical research data and metadata. Metadata is data about the data entered. This includes data about the individual who made the entry or a change in the clinical data, the date and time of entry/change, and details of the changes that have been made. Among the standards, two important ones are the Study Data Tabulation Model Implementation Guide for Human Clinical Trials (SDTMIG) and the Clinical Data Acquisition Standards Harmonization (CDASH) standards, available free of cost from the CDISC website ( www.cdisc.org ). The SDTMIG standard[ 4 ] describes the details of the model and standard terminologies for the data and serves as a guide to the organization. CDASH v 1.1[ 5 ] defines the basic standards for the collection of data in a clinical trial and lists the basic data information needed from a clinical, regulatory, and scientific perspective.

The CDM Process

The CDM process, like a clinical trial, begins with the end in mind. This means that the whole process is designed keeping the deliverable in view. As a clinical trial is designed to answer the research question, the CDM process is designed to deliver an error-free, valid, and statistically sound database. To meet this objective, the CDM process starts early, even before the finalization of the study protocol.

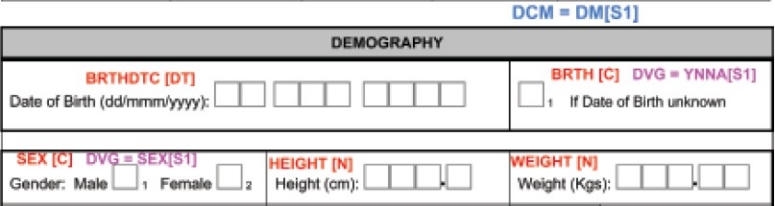

Review and finalization of study documents

The protocol is reviewed from a database designing perspective, for clarity and consistency. During this review, the CDM personnel will identify the data items to be collected and the frequency of collection with respect to the visit schedule. A Case Report Form (CRF) is designed by the CDM team, as this is the first step in translating the protocol-specific activities into data being generated. The data fields should be clearly defined and be consistent throughout. The type of data to be entered should be evident from the CRF. For example, if weight has to be captured in two decimal places, the data entry field should have two data boxes placed after the decimal as shown in Figure 1 . Similarly, the units in which measurements have to be made should also be mentioned next to the data field. The CRF should be concise, self-explanatory, and user-friendly (unless you are the one entering data into the CRF). Along with the CRF, the filling instructions (called CRF Completion Guidelines) should also be provided to study investigators for error-free data acquisition. CRF annotation is done wherein the variable is named according to the SDTMIG or the conventions followed internally. Annotations are coded terms used in CDM tools to indicate the variables in the study. An example of an annotated CRF is provided in Figure 1 . In questions with discrete value options (like the variable gender having values male and female as responses), all possible options will be coded appropriately.
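
As a rough sketch of what CRF annotation amounts to, each question can be paired with a variable name, a data type, and, where applicable, a discrete value group. The `CrfField` structure, the `DVGS` table, and the variable names other than BRTHDTC are hypothetical; actual naming should follow the SDTMIG or the conventions used internally.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical discrete value groups (DVGs): coded responses and their labels.
DVGS = {
    "SEX": {"M": "Male", "F": "Female"},
    "YNNA": {"Y": "Yes", "N": "No", "NA": "Not applicable"},
}


@dataclass(frozen=True)
class CrfField:
    question: str                   # text shown on the CRF
    variable: str                   # annotation (variable name)
    data_type: str                  # "C" character, "N" numerical, "DT" date
    dvg: Optional[str] = None       # discrete value group, if any
    unit: Optional[str] = None      # unit printed next to the field
    decimals: Optional[int] = None  # number of boxes after the decimal point


# Illustrative demography module.
DEMOGRAPHY = [
    CrfField("Date of birth", "BRTHDTC", "DT"),
    CrfField("Gender", "SEX", "C", dvg="SEX"),
    CrfField("Weight", "WEIGHT", "N", unit="kg", decimals=2),
]


def allowed_codes(crf_field):
    """Return the permitted coded responses, or None for free-entry fields."""
    return sorted(DVGS[crf_field.dvg]) if crf_field.dvg else None
```

Keeping the unit and the number of decimal places in the field definition mirrors the point made above: the type of data expected should be evident from the CRF itself.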

Figure 1. Annotated sample of a Case Report Form (CRF). Annotations are entered in coloured text in this figure to differentiate them from the CRF questions. DCM = Data collection module, DVG = Discrete value group, YNNA [S1] = Yes, No, Not applicable [subset 1], C = Character, N = Numerical, DT = Date format. For example, BRTHDTC [DT] indicates date of birth in the date format.

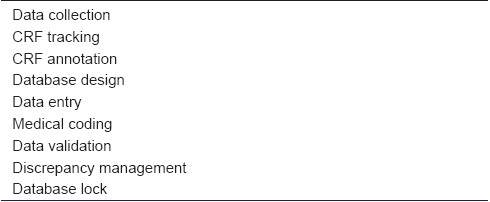

Based on these, a Data Management Plan (DMP) is developed. DMP document is a road map to handle the data under foreseeable circumstances and describes the CDM activities to be followed in the trial. A list of CDM activities is provided in Table 1 . The DMP describes the database design, data entry and data tracking guidelines, quality control measures, SAE reconciliation guidelines, discrepancy management, data transfer/extraction, and database locking guidelines. Along with the DMP, a Data Validation Plan (DVP) containing all edit-checks to be performed and the calculations for derived variables are also prepared. The edit check programs in the DVP help in cleaning up the data by identifying the discrepancies.

Table 1. List of clinical data management activities

Database designing

Databases are the clinical software applications, which are built to facilitate the CDM tasks to carry out multiple studies.[ 6 ] Generally, these tools have built-in compliance with regulatory requirements and are easy to use. “System validation” is conducted to ensure data security, during which system specifications,[ 7 ] user requirements, and regulatory compliance are evaluated before implementation. Study details like objectives, intervals, visits, investigators, sites, and patients are defined in the database and CRF layouts are designed for data entry. These entry screens are tested with dummy data before moving them to the real data capture.

Data collection

Data collection is done using the CRF that may exist in the form of a paper or an electronic version. The traditional method is to employ paper CRFs to collect the data responses, which are translated to the database by means of data entry done in-house. These paper CRFs are filled up by the investigator according to the completion guidelines. In the e-CRF-based CDM, the investigator or a designee will be logging into the CDM system and entering the data directly at the site. In e-CRF method, chances of errors are less, and the resolution of discrepancies happens faster. Since pharmaceutical companies try to reduce the time taken for drug development processes by enhancing the speed of processes involved, many pharmaceutical companies are opting for e-CRF options (also called remote data entry).

CRF tracking

The entries made in the CRF will be monitored by the Clinical Research Associate (CRA) for completeness and filled up CRFs are retrieved and handed over to the CDM team. The CDM team will track the retrieved CRFs and maintain their record. CRFs are tracked for missing pages and illegible data manually to assure that the data are not lost. In case of missing or illegible data, a clarification is obtained from the investigator and the issue is resolved.

Data entry

Data entry takes place according to the guidelines prepared along with the DMP. This is applicable only in the case of paper CRFs retrieved from the sites. Usually, double data entry is performed, wherein the data are entered by two operators separately.[ 8 ] The second pass entry (entry made by the second person) helps in verification and reconciliation by identifying transcription errors and discrepancies caused by illegible data. Moreover, double data entry helps in getting a cleaner database compared to single data entry. Earlier studies have shown that double data entry ensures better consistency with the paper CRF, as denoted by a lower error rate.[ 9 ]
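
The reconciliation step in double data entry can be sketched as a field-by-field comparison of the two passes. This is an illustrative sketch only; the function name `compare_passes` and the field names are assumptions, not part of any specific CDMS.

```python
def compare_passes(first_pass, second_pass):
    """Return (field, first value, second value) for every disagreement."""
    mismatches = []
    for field_name in sorted(set(first_pass) | set(second_pass)):
        a = first_pass.get(field_name)
        b = second_pass.get(field_name)
        if a != b:
            mismatches.append((field_name, a, b))
    return mismatches


# Two operators enter the same paper CRF page independently.
first = {"SUBJID": "001", "AGE": 34, "WEIGHT": 70.25}
second = {"SUBJID": "001", "AGE": 43, "WEIGHT": 70.25}

mismatches = compare_passes(first, second)
```

Here the transposed age (34 versus 43) is the only mismatch, and it would be checked against the paper CRF before the value is accepted.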

Data validation

Data validation is the process of testing the validity of data in accordance with the protocol specifications. Edit check programs, which are embedded in the database, are written to identify discrepancies in the entered data and thereby ensure data validity. These programs are written according to the logic conditions specified in the DVP and are initially tested with dummy data containing discrepancies. A discrepancy is defined as a data point that fails to pass a validation check. A discrepancy may arise from inconsistent data, missing data, values that fail range checks, or deviations from the protocol. In e-CRF based studies, the data validation process is run frequently to identify discrepancies, which are resolved by investigators after logging into the system. Ongoing quality control of data processing is undertaken at regular intervals during the course of CDM. For example, if the inclusion criteria specify that the age of the patient should be between 18 and 65 years (both inclusive), an edit program will be written for two conditions, viz. age <18 and age >65. If either condition becomes TRUE for any patient, a discrepancy will be generated. These discrepancies will be highlighted in the system and Data Clarification Forms (DCFs) can be generated. DCFs are documents containing queries pertaining to the discrepancies identified.
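
The age example above can be written as a small edit check. This is a minimal sketch under assumed names (`age_edit_check`, the `SUBJID` and `AGE` fields); the missing-value branch is an addition for illustration, reflecting that missing data is also listed as a source of discrepancies.

```python
def age_edit_check(records, low=18, high=65):
    """Flag records whose age is missing or outside the inclusive range."""
    discrepancies = []
    for rec in records:
        age = rec.get("AGE")
        if age is None:
            issue = "missing value"
        elif age < low or age > high:
            # The two conditions from the text: age < 18 or age > 65.
            issue = f"age {age} outside {low}-{high}"
        else:
            continue
        discrepancies.append({"subject": rec["SUBJID"], "field": "AGE", "issue": issue})
    return discrepancies


# Dummy data containing deliberate discrepancies, as used when testing edit checks.
dummy = [
    {"SUBJID": "001", "AGE": 17},
    {"SUBJID": "002", "AGE": 18},
    {"SUBJID": "003", "AGE": 65},
    {"SUBJID": "004", "AGE": 66},
    {"SUBJID": "005", "AGE": None},
]

flagged = [d["subject"] for d in age_edit_check(dummy)]
```

The boundary values 18 and 65 pass because the criterion is inclusive, which is exactly the kind of behaviour that testing with dummy data is meant to confirm.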

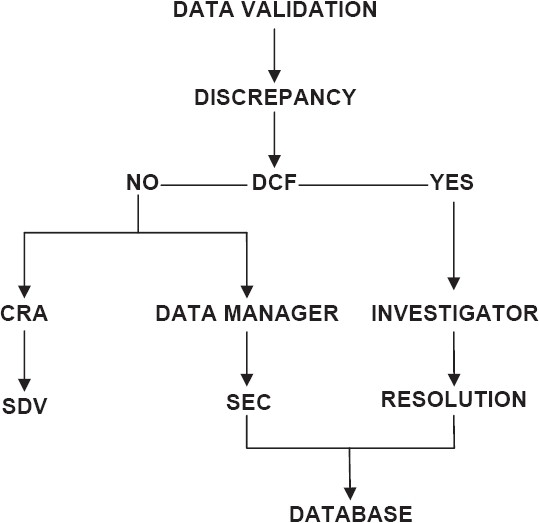

Discrepancy management

This is also called query resolution. Discrepancy management includes reviewing discrepancies, investigating the reason, and resolving them with documentary proof or declaring them as irresolvable. Discrepancy management helps in cleaning the data and gathers enough evidence for the deviations observed in data. Almost all CDMS have a discrepancy database where all discrepancies will be recorded and stored with audit trail.

Based on the types identified, discrepancies are either flagged to the investigator for clarification or closed in-house by Self-Evident Corrections (SEC) without sending DCF to the site. The most common SECs are obvious spelling errors. For discrepancies that require clarifications from the investigator, DCFs will be sent to the site. The CDM tools help in the creation and printing of DCFs. Investigators will write the resolution or explain the circumstances that led to the discrepancy in data. When a resolution is provided by the investigator, the same will be updated in the database. In case of e-CRFs, the investigator can access the discrepancies flagged to him and will be able to provide the resolutions online. Figure 2 illustrates the flow of discrepancy management.

Figure 2. Discrepancy management (DCF = Data clarification form, CRA = Clinical Research Associate, SDV = Source document verification, SEC = Self-evident correction)

The CDM team reviews all discrepancies at regular intervals to ensure that they have been resolved. The resolved data discrepancies are recorded as ‘closed’. This means that those validation failures are no longer considered to be active, and future data validation attempts on the same data will not create a discrepancy for same data point. But closure of discrepancies is not always possible. In some cases, the investigator will not be able to provide a resolution for the discrepancy. Such discrepancies will be considered as ‘irresolvable’ and will be updated in the discrepancy database.

Discrepancy management is the most critical activity in the CDM process. Because it is the vital activity in cleaning up the data, utmost care must be taken while handling discrepancies.

Medical coding

Medical coding helps in identifying and properly classifying the medical terminologies associated with the clinical trial. For classification of events, medical dictionaries available online are used. Technically, this activity needs the knowledge of medical terminology, understanding of disease entities, drugs used, and a basic knowledge of the pathological processes involved. Functionally, it also requires knowledge about the structure of electronic medical dictionaries and the hierarchy of classifications available in them. Adverse events occurring during the study, prior to and concomitantly administered medications and pre-or co-existing illnesses are coded using the available medical dictionaries. Commonly, Medical Dictionary for Regulatory Activities (MedDRA) is used for the coding of adverse events as well as other illnesses and World Health Organization–Drug Dictionary Enhanced (WHO-DDE) is used for coding the medications. These dictionaries contain the respective classifications of adverse events and drugs in proper classes. Other dictionaries are also available for use in data management (eg, WHO-ART is a dictionary that deals with adverse reactions terminology). Some pharmaceutical companies utilize customized dictionaries to suit their needs and meet their standard operating procedures.

Medical coding helps in classifying reported medical terms on the CRF to standard dictionary terms in order to achieve data consistency and avoid unnecessary duplication. For example, the investigators may use different terms for the same adverse event, but it is important to code all of them to a single standard code and maintain uniformity in the process. The right coding and classification of adverse events and medication is crucial as an incorrect coding may lead to masking of safety issues or highlight the wrong safety concerns related to the drug.
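
The idea of coding different reported terms to one standard term can be sketched with a small lookup. The `SYNONYMS` table below is entirely hypothetical and is not MedDRA or WHO-DDE content; real dictionaries are licensed, hierarchical, and far larger.

```python
# Hypothetical synonym table for illustration only; not actual dictionary content.
SYNONYMS = {
    "headache": "Headache",
    "head ache": "Headache",
    "pain in head": "Headache",
    "nausea": "Nausea",
    "feeling sick": "Nausea",
}


def code_term(verbatim):
    """Map a reported (verbatim) term to a standard term, or None if unknown."""
    # Normalize case and collapse repeated whitespace before the lookup.
    key = " ".join(verbatim.lower().split())
    return SYNONYMS.get(key)


reported = ["Head ache", "HEADACHE", "feeling  sick", "dizzy spells"]
coded = {term: code_term(term) for term in reported}
```

Terms that return `None` (here, "dizzy spells") would be left for a medical coder to review manually rather than being guessed by the program.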

Database locking

After a proper quality check and assurance, the final data validation is run. If there are no discrepancies, the SAS datasets are finalized in consultation with the statistician. All data management activities should have been completed prior to database lock. To ensure this, a pre-lock checklist is used and completion of all activities is confirmed. This is done because the database is not ordinarily changed after locking. Once the approval for locking is obtained from all stakeholders, the database is locked and clean data are extracted for statistical analysis. Generally, no modification of the database is possible after locking. However, in case of a critical issue or for other important operational reasons, privileged users can modify the data even after the database is locked. This requires proper documentation, and an audit trail has to be maintained with sufficient justification for updating the locked database. Data extraction is done from the final database after locking. This is followed by its archival.
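
The locking rules described above can be sketched as follows. The `StudyDatabase` class, the checklist items, and the `privileged` flag are hypothetical and only illustrate the logic: lock only when the checklist is complete, and allow post-lock changes only to privileged users who document a justification.

```python
class LockedDatabaseError(Exception):
    """Raised when an unauthorized change is attempted after database lock."""


class StudyDatabase:
    def __init__(self):
        self.data = {}
        self.locked = False
        self.post_lock_log = []  # documented changes made after locking

    def lock(self, checklist):
        # Lock only when every pre-lock checklist item is confirmed complete.
        pending = [item for item, done in checklist.items() if not done]
        if pending:
            raise ValueError(f"Cannot lock; pending activities: {pending}")
        self.locked = True

    def update(self, key, value, user_id="", privileged=False, justification=""):
        if self.locked:
            if not (privileged and justification):
                raise LockedDatabaseError("database is locked")
            # Keep a documented record of the post-lock change.
            self.post_lock_log.append(
                (user_id, key, self.data.get(key), value, justification)
            )
        self.data[key] = value


study = StudyDatabase()
study.update("001.AGE", 34)
study.lock({"all discrepancies closed": True, "medical coding complete": True})
study.update("001.AGE", 43, user_id="dm01", privileged=True,
             justification="critical correction confirmed against source")
```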

Roles and Responsibilities in CDM

In a CDM team, different roles and responsibilities are attributed to the team members. The minimum educational requirement for a team member in CDM should be graduation in life science and knowledge of computer applications. Ideally, medical coders should be medical graduates. However, in the industry, paramedical graduates are also recruited as medical coders. Some key roles are essential to all CDM teams. The list of roles given below can be considered as minimum requirements for a CDM team:

Data Manager

Database Programmer/Designer

Medical Coder

Clinical Data Coordinator

Quality Control Associate

Data Entry Associate

The data manager is responsible for supervising the entire CDM process. The data manager prepares the DMP and approves the CDM procedures and all internal documents related to CDM activities. Controlling and allocating database access to team members is also the responsibility of the data manager. The database programmer/designer performs the CRF annotation, creates the study database, and programs the edit checks for data validation. He/she is also responsible for designing the data entry screens in the database and validating the edit checks with dummy data. The medical coder codes the adverse events, medical history, co-illnesses, and concomitant medications administered during the study. The clinical data coordinator designs the CRF, prepares the CRF filling instructions, and is responsible for developing the DVP and for discrepancy management. All other CDM-related documents, checklists, and guideline documents are prepared by the clinical data coordinator. The quality control associate checks the accuracy of data entry and conducts data audits.[ 10 ] Sometimes, there is a separate quality assurance person to conduct the audit on the data entered. Additionally, the quality control associate verifies the documentation pertaining to the procedures being followed. The data entry associate tracks the receipt of CRF pages and performs the data entry into the database.

CDM has evolved in response to the ever-increasing demand from pharmaceutical companies to fast-track the drug development process and from the regulatory authorities to put the quality systems in place to ensure generation of high-quality data for accurate drug evaluation. To meet the expectations, there is a gradual shift from the paper-based to the electronic systems of data management. Developments on the technological front have positively impacted the CDM process and systems, thereby leading to encouraging results on speed and quality of data being generated. At the same time, CDM professionals should ensure the standards for improving data quality.[ 11 ] CDM, being a speciality in itself, should be evaluated by means of the systems and processes being implemented and the standards being followed. The biggest challenge from the regulatory perspective would be the standardization of data management process across organizations, and development of regulations to define the procedures to be followed and the data standards. From the industry perspective, the biggest hurdle would be the planning and implementation of data management systems in a changing operational environment where the rapid pace of technology development outdates the existing infrastructure. In spite of these, CDM is evolving to become a standard-based clinical research entity, by striking a balance between the expectations from and constraints in the existing systems, driven by technological developments and business demands.

Source of Support: Nil.

Conflict of Interest: None declared.

- 1. Gerritsen MG, Sartorius OE, vd Veen FM, Meester GT. Data management in multi-center clinical trials and the role of a nation-wide computer network. A 5 year evaluation. Proc Annu Symp Comput Appl Med Care. 1993:659–62.

- 2. Lu Z, Su J. Clinical data management: Current status, challenges, and future directions from industry perspectives. Open Access J Clin Trials. 2010;2:93–105.

- 3. CFR - Code of Federal Regulations Title 21 [Internet] Maryland: Food and Drug Administration. [Updated 2010 Apr 4; Cited 2011 Mar 1]. Available from: http://www.accessdata.fda.gov/scripts/cdrh/cfdocs/cfcfr/CFRSearch.cfm?fr=11.10

- 4. Study Data Tabulation Model [Internet] Texas: Clinical Data Interchange Standards Consortium. c2011. [Updated 2007 Jul; Cited 2011 Mar 1]. Available from: http://www.cdisc.org/sdtm

- 5. CDASH [Internet] Texas: Clinical Data Interchange Standards Consortium. c2011. [Updated 2011 Jan; Cited 2011 Mar 1]. Available from: http://www.cdisc.org/cdash

- 6. Fegan GW, Lang TA. Could an open-source clinical trial data-management system be what we have all been looking for? PLoS Med. 2008;5:e6. doi: 10.1371/journal.pmed.0050006.

- 7. Kuchinke W, Ohmann C, Yang Q, Salas N, Lauritsen J, Gueyffier F, et al. Heterogeneity prevails: The state of clinical trial data management in Europe - results of a survey of ECRIN centres. Trials. 2010;11:79. doi: 10.1186/1745-6215-11-79.

- 8. Cummings J, Masten J. Customized dual data entry for computerized data analysis. Qual Assur. 1994;3:300–3.

- 9. Reynolds-Haertle RA, McBride R. Single vs. double data entry in CAST. Control Clin Trials. 1992;13:487–94. doi: 10.1016/0197-2456(92)90205-e.

- 10. Ottevanger PB, Therasse P, van de Velde C, Bernier J, van Krieken H, Grol R, et al. Quality assurance in clinical trials. Crit Rev Oncol Hematol. 2003;47:213–35. doi: 10.1016/s1040-8428(03)00028-3.

- 11. Haux R, Knaup P, Leiner F. On educating about medical data management - the other side of the electronic health record. Methods Inf Med. 2007;46:74–9.


- Open access

- Published: 10 November 2022

Rethinking clinical study data: why we should respect analysis results as data

Joana M. Barros (ORCID: orcid.org/0000-0002-2952-5420), Lukas A. Widmer (ORCID: orcid.org/0000-0003-1471-3493), Mark Baillie (ORCID: orcid.org/0000-0002-5618-0667) & Simon Wandel (ORCID: orcid.org/0000-0002-1442-597X)

Scientific Data volume 9, Article number: 686 (2022)


- Medical research

- Research data

- Research management

The development and approval of new treatments generates large volumes of results, such as summaries of efficacy and safety. However, it is commonly overlooked that analyzing clinical study data also produces data in the form of results. For example, descriptive statistics and model predictions are data. Although integrating and putting findings into context is a cornerstone of scientific work, analysis results are often neglected as a data source. Results end up stored as “data products” such as PDF documents that are not machine readable or amenable to future analyses. We propose a solution to “calculate once, use many times” by combining analysis results standards with a common data model. This analysis results data model re-frames the target of analyses from static representations of the results (e.g., tables and figures) to a data model with applications in various contexts, including knowledge discovery. Further, we provide a working proof of concept detailing how to approach standardization and construct a schema to store and query analysis results.


Introduction

The process of analyzing data also produces data in the form of results. In other words, project outcomes themselves are a data source for future research: aggregated summaries, descriptive statistics, model estimates, predictions, and evaluation measurements may be reused for secondary purposes. For example, the development and approval of new treatments generates large volumes of results, such as summaries of efficacy and safety from supporting clinical trials through the development phases. Integrating these findings forms the evidence base for efficacy and safety review for new treatments under consideration.

Although integrating and putting scientific findings into context is a cornerstone of scientific work, project results are often neglected or indeed not handled as data (i.e., the machine-readable numerical outcome from an analysis). Analysis results are typically shared as part of presentations, reports, or publications addressing a greater objective. The results of data analysis end up stored as data products, namely, presentation-suitable formats such as PDF, PowerPoint, or HTML documents populated with text, tables, and figures showcasing the results of a single analysis or an assembly of analyses. Contrary to data, which can be stored in data frames or databases, data products are not designed to be machine-readable or amenable to future data analyses. An example comparing a data product with data is given in Fig. 3 . In this example, we illustrate how a descriptive analysis of individual patient data (in this case, the survival probability by treatment over time) becomes a new machine-readable data source for subsequent analyses. In other words, the results from one analysis become a data source for new analyses. This is the case for clinical trial reporting, where the data analysis summaries from a study are rendered to rich text format (RTF) files that are then compiled into appendices following the International Council for Harmonisation of Technical Requirements for Pharmaceuticals for Human Use (ICH) E3 guideline 1 , where each appendix is a table, listing, or figure summary of a drug efficacy and safety evaluation. The analysis results stored in these appendices, which can span thousands of pages, are not readily reusable: extracting information from PDF files is notoriously difficult, and even if machine-readable formats (RTFs) are available, some manual work is often required, since important (meta-)information is contained in footnotes for which no standard formats exist.
There have been recent attempts to modernise the reporting of clinical trials, including the use of electronic notebooks and web-based frameworks. However, while literate programming documents such as R Markdown allow documenting code and results together and R Shiny enables dynamic data exploration, the rendered data products suffer the same fate as other presentation-suitable formats. In other words, modern data products also do not handle data analysis results as data. Although there is agreement on which information should be shared as part of a data package and that sharing data can accelerate new discoveries, there is no proposed solution to facilitate the sharing and reuse of analysis results 2 .

A focus on results presentation over storage considerations sets up a barrier impeding the assimilation of scientific knowledge, understanding what was intended and what was implemented. As a repercussion, the scientific process cycle is broken, leaving researchers who want to reuse prior results with three options:

1. Re-run the analysis if the code and original source data are accessible.

2. Re-do the analysis if only the original source data is accessible.

3. Manually or (pseudo-)automatically extract information from the data products (e.g., tables, figures, published notebooks).

The first option would appear to be the best one and is, for instance, being implemented in eLife executable research articles 3 . However, being able to rerun the analysis does not guarantee reproducibility and can be computationally expensive when covering many studies, large data, or sophisticated models. Analyses can depend on technical factors such as the products used, their versions, and (hardware and software) dependencies, all of which affect the outcome. Even tailored statistical environments such as R 4 have a wide range of output discrepancies and must rely on extensions, such as broom 5 , for reformatting and standardizing the outputs of data analysis.

For the second option, there are additional complications to account for: even if we assume that the entire analysis is fully documented, common analyses are not straightforward to implement. This option assumes that the complete details required to implement the analysis are documented, for example, in a statistical analysis plan (SAP). However, data-driven and expertise-driven undocumented choices are a hidden source of deviations that make reproducing or replicating the results an elusive task 6 . On top of this, the selective reporting of results limits replication of the complete set of performed data analyses (both pre-specified and ad-hoc) within a research project 7 , 8 , 9 .

The last scenario is commonplace for secondary research that combines and integrates findings of single, independent studies, such as meta-analyses or systematic reviews. To perform a meta-analysis and assess the findings following the Cochrane Handbook for Systematic Reviews of Interventions, it is necessary to first digitize the studies’ documents, either through a laborious manual effort or by using extraction tools known to be error-prone and requiring verification 10 . Furthermore, the unavailability of complete results, potentially through selective reporting, requires researchers to extrapolate the missing results, which can lead to questionable reliability and risk of bias 11 .

Data management is an important, but often undervalued, pillar of scientific work. Good data management supports key activities from planning and execution to analysis and reporting. The importance of data stewardship is now also recognized as an additional pillar. Good data stewardship supports activities beyond the single project into areas such as knowledge discovery, as well as the reuse of data for secondary purposes, to other downstream tasks such as the contextualization, appraisal, and integration of knowledge. Initiatives like FAIR set up the minimal guiding principles and practices for data stewardship based on making the data Findable, Accessible, Interoperable, and Reusable 12 . Likewise, the software and data mining community (e.g., IBM, ONNX, and PFA) have introduced initiatives bringing standardization to analytic applications, thus facilitating data exchange and releasing the researcher from the burden of translating the output of statistical analysis into a suitable format for the data product.

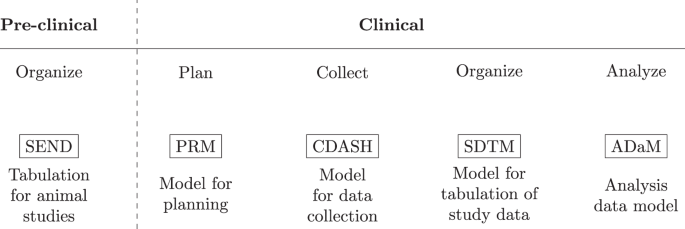

An important component of data management is the data model, which specifies the information to capture and how to store it, and standardizes how the elements relate to one another. In the clinical domain, data management is a critical element in preparing regulatory submissions and obtaining market approval. In 1999, the Clinical Data Interchange Standards Consortium (CDISC) introduced the Operational Data Model (ODM), facilitating the collection, organization, and sharing of clinical research data and metadata 13 . In addition, the ODM enabled the creation of standards (Fig. 1) such as the Study Data Tabulation Model (SDTM) and the Analysis Data Model (ADaM) to easily derive analysis datasets for regulatory submissions. Owing to the needs at the different stages of the clinical research lifecycle, CDISC data standards reflect the key steps of the clinical data lifecycle. Although regulatory procedures were traditionally focused on document submission, there has since been a gradual desire to also assess the data used to create the documents 14 . CDISC data standards address this need; however, these standards only consider data from planning and collection up to analysis data (i.e., data prepared and ready for data analysis). Therefore, the outcome of this paper can be viewed as a potential extension to the CDISC data standards, describing how not only individual patient data but also descriptive and inferential results should be stored and made available for future reuse.

CDISC defines a collection of standards adapted to the different stages in the clinical research process. For example, ADaM defines data sets that support efficient generation, replication, and review of analyses 36 .

In this paper, we explore the concept of viewing the output of data analysis as data. By doing so, we address the problems associated with the limited reproducibility and reusability of analysis results. We demonstrate why we should respect analysis results as data and put forward a solution using an analysis result data model (ARDM), re-framing the target of an analysis from the applications of the results (e.g., tables and figures) to a data model. By integrating the analysis results into a common schema with specific constraints, we would ensure analysis data quality, improve reusability, and facilitate the development of tools leveraging the re-use of analysis results. Taking meta-analyses again as an example, applying an ARDM would now only require one database query instead of a long process of information extraction and verification. Tables, listings, and figures could be generated directly from the results instead of repeating the analysis. Furthermore, storing the results as independent datasets would also allow sharing information without the need for the underlying individual patient data, a useful property given data protection regulations in both academic and industry publications. Viewing analysis results as a data source moves us from repeating or redundantly recording results to a “calculate once, use many times” mindset. While we use the latter term focusing on results of statistical analyses for clinical studies, it can be seen as a special case of the more general concept of open science and open data, which aims at reducing redundancy in scientific research on a larger scale.

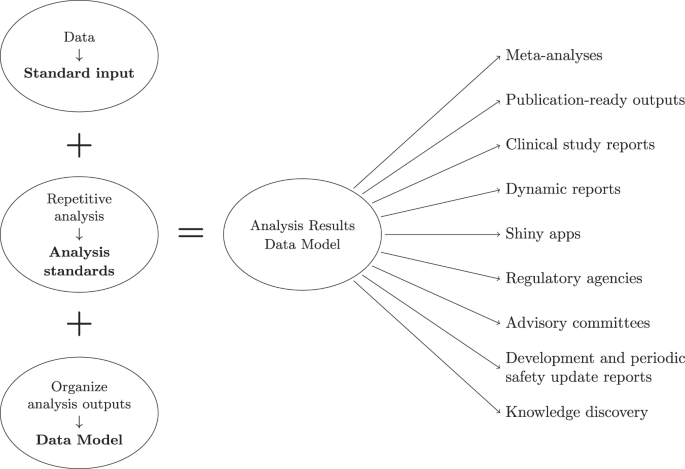

Implementing the ARDM in clinical research

The ARDM is adaptive and expandable. For example, with each analysis standard, we can adapt existing tables or add new tables to the schema. With respect to the inspection and visualization of the results, there is also the flexibility to create a variety of outputs, independent of the analysis standard. The proof of concept for the ARDM is implemented using the R programming language and a relational SQLite database; however, these choices can be revisited as the ARDM can be implemented using a variety of languages and databases. This implementation should be viewed as a starting point rather than a complete solution. Here, we highlight the considerations we took to construct the ARDM utilizing three analysis standards (descriptive statistics, safety, and survival analysis) and leveraging the CDISC Pilot Project ADaM dataset. Further documentation is available in the code repository. An overview of the requirements to create the ARDM is shown in Fig. 2.

In clinical development, the analysis results data model enables a source of truth for results applied in various applications. Currently, the examples on the right require running analyses independently, even when using the same results.

Prior to ingesting clinical data, the algorithm first creates empty tables with specifications on the column names and data types. These tables are grouped into metadata, intermediate data, and results. The metadata tables are created to record additional information such as variable types (e.g., categorical and continuous) and measurement units (e.g., age is given in years). As part of the metadata tables, the algorithm also creates an analysis standards table requiring information on the analysis standard name, function calls, and its parameters. The intermediate data tables aggregate information at the subject level and are useful to avoid repeated data transformations (e.g., repeated aggregations), thus reducing potential errors and computational execution time during the analysis. The results tables specify the analysis results information that will be stored. Note that the creation of the metadata, intermediate data, and results tables requires upfront planning to identify which information should be recorded. Although it is possible to create tables ad hoc, a fundamental part of the ARDM is to generalize and remove redundancies rather than creating a multitude of fit-for-purpose solutions. Hence, creating a successful ARDM requires understanding the clinical development pipeline to effectively plan the analysis by taking into account the downstream applications of the results (e.g., the analysis standard or the data products). As the information stored in the results tables is dictated by the data model, it is possible to inspect the results by querying the database and creating visualizations. In the public repository 15 , we showcase how to query the database and create different products from the results. Furthermore, the modular nature of the ARDM separates the stored results from the downstream outputs rendered from them; hence, updates to the data products do not affect the results.
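The table-creation step described above can be sketched in a few lines. The example below is a minimal illustration in Python with the standard-library `sqlite3` module, not the R proof of concept; every table and column name (`metadata_variable`, `metadata_analysis_standard`, `intermediate_subject`, `result_descriptive`) is a hypothetical choice made for this sketch.

```python
import sqlite3

# Hypothetical schema: all table and column names are illustrative assumptions,
# not those of the published proof of concept.
SCHEMA = """
CREATE TABLE IF NOT EXISTS metadata_variable (
    variable TEXT PRIMARY KEY,
    type     TEXT NOT NULL CHECK (type IN ('categorical', 'continuous')),
    unit     TEXT                      -- e.g. 'years' for age
);
CREATE TABLE IF NOT EXISTS metadata_analysis_standard (
    standard_id   INTEGER PRIMARY KEY,
    name          TEXT NOT NULL UNIQUE,
    function_call TEXT NOT NULL,
    parameters    TEXT
);
CREATE TABLE IF NOT EXISTS intermediate_subject (
    study_id   TEXT NOT NULL,
    subject_id TEXT NOT NULL,
    treatment  TEXT NOT NULL,
    age        REAL,
    PRIMARY KEY (study_id, subject_id)
);
CREATE TABLE IF NOT EXISTS result_descriptive (
    study_id    TEXT NOT NULL,
    standard_id INTEGER NOT NULL
                REFERENCES metadata_analysis_standard (standard_id),
    variable    TEXT NOT NULL REFERENCES metadata_variable (variable),
    treatment   TEXT NOT NULL,
    n           INTEGER NOT NULL,
    mean        REAL,
    sd          REAL,
    PRIMARY KEY (study_id, standard_id, variable, treatment)
);
"""


def create_empty_ardm(path=":memory:"):
    """Create the empty metadata, intermediate, and results tables."""
    con = sqlite3.connect(path)
    con.executescript(SCHEMA)
    return con


con = create_empty_ardm()
tables = [row[0] for row in con.execute(
    "SELECT name FROM sqlite_master WHERE type = 'table' ORDER BY name")]
print(tables)
```

Declaring the tables before any data is ingested is what forces the upfront planning: a result that has no place in the schema cannot be stored until the schema is deliberately extended.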

Applications

Analysis standards are a fundamental part of the ARDM to guarantee coherent and suitable outputs. They ensure that the results are comparable, which is not always the case. Similarly, where conventions exist (e.g., safety analysis), we can use an ARDM to provide structure to the results storage, thus facilitating access and reusability. In short, it provides a knowledge source of validated analysis results, i.e., a single source of truth. This enables the separation between the analysis and the data products, streamlining the creation of tables or figures for publications, or other products as outlined in Fig. 2.

Tracking, searching, and retrieving outputs is facilitated by having an ARDM as it enables query-based searches. For example, we can search for the “p-value” and “point estimates” of primary endpoints, or for the incidence of adverse events, for any given trial present in the database. With automation, we can also select cohorts through query-based searches and apply the analysis standards to automate the creation of results using the selected data. This also facilitates decision-making and enhancements. For example, one can have access to complete trial results beyond the primary endpoint, and extrapolate to cohorts that require special considerations such as pediatric patients. In addition, a single source of truth for results encourages the adoption of more sophisticated approaches to gather new inferences, for example, using knowledge graphs and network analysis.
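As a rough illustration of such a query-based search, the sketch below stores a few inferential results in an in-memory SQLite table and retrieves the primary-endpoint estimates and p-values. The table `result_inferential`, its columns, and all rows are made-up placeholders for illustration; they are not results from any study.

```python
import sqlite3

con = sqlite3.connect(":memory:")
# Hypothetical results table; names are assumptions made for this sketch.
con.execute("""
    CREATE TABLE result_inferential (
        study_id      TEXT,
        endpoint      TEXT,
        endpoint_type TEXT,
        comparison    TEXT,
        estimate      REAL,
        p_value       REAL
    )""")

# Made-up placeholder rows, not real trial results.
rows = [
    ("STUDY-A", "ENDPOINT-1", "primary", "high dose vs placebo", 0.80, 0.03),
    ("STUDY-A", "ENDPOINT-2", "secondary", "high dose vs placebo", 1.10, 0.40),
    ("STUDY-B", "ENDPOINT-1", "primary", "high dose vs placebo", 0.95, 0.60),
]
con.executemany("INSERT INTO result_inferential VALUES (?, ?, ?, ?, ?, ?)", rows)


def primary_endpoint_results(con, max_p_value=1.0):
    """Return (study, endpoint, estimate, p-value) for primary endpoints."""
    return con.execute(
        """SELECT study_id, endpoint, estimate, p_value
           FROM result_inferential
           WHERE endpoint_type = 'primary' AND p_value <= ?
           ORDER BY study_id""",
        (max_p_value,),
    ).fetchall()


all_primary = primary_endpoint_results(con)
below_threshold = primary_endpoint_results(con, max_p_value=0.05)
print(all_primary)
print(below_threshold)
```

Because the search is a parameterized query rather than a document lookup, the same function works unchanged as more studies are added to the table.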

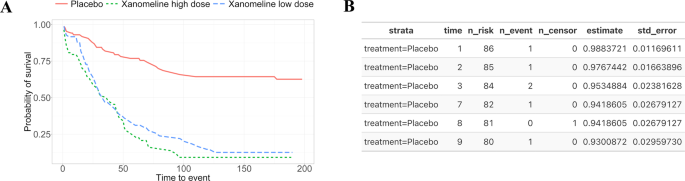

Case study: updating a Kaplan–Meier plot

The Kaplan-Meier plot is a common way to visualize the results from a survival or time-to-event analysis. The purpose of the Kaplan-Meier non-parametric method is to estimate the survival probability from observed survival times 16 . Note that some patients might not experience the event (e.g., death, relapse); hence, censoring is used to differentiate between the cases and to allow for valid inferences. As a result of the analysis, survival curves are created for the given strata. For the CDISC pilot study which was conducted in patients with mild to moderate Alzheimer’s disease, a time-to-event safety endpoint, the time to dermatologic events, is available. Such time-to-event safety endpoints are not uncommon in practice since they allow understanding potential differences between the treatment groups in the time to onset of the first event. Since the pilot study involved three treatment groups – placebo, low dose, and high dose – it may be a good starting point to plot all groups first. Figure 3A shows a Kaplan-Meier plot with three strata corresponding to the treatments in the CDISC pilot study.
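To make the estimator concrete, the sketch below computes the Kaplan-Meier (product-limit) estimate in plain Python and returns it as rows rather than as a plot, which is the form in which it could later be stored. The function name `kaplan_meier`, the row layout, and the toy data are assumptions made for this illustration; confidence intervals are omitted.

```python
from collections import Counter


def kaplan_meier(times, events):
    """Product-limit estimate of the survival function.

    times  -- observed time for each subject (event or censoring time)
    events -- 1 if the event was observed at that time, 0 if censored
    Returns one row (time, n_at_risk, n_events, survival) per distinct
    event time, in increasing order of time.
    """
    event_counts = Counter(t for t, e in zip(times, events) if e == 1)
    rows = []
    survival = 1.0
    for t in sorted(event_counts):
        # Subjects censored at time t are still counted as at risk at t.
        n_at_risk = sum(1 for u in times if u >= t)
        d = event_counts[t]
        survival *= 1.0 - d / n_at_risk
        rows.append((t, n_at_risk, d, survival))
    return rows


# Toy data for illustration only (not from the CDISC pilot study).
times = [2, 3, 3, 5, 8]
events = [1, 1, 0, 1, 0]
km_rows = kaplan_meier(times, events)
for row in km_rows:
    print(row)
```

Each returned row is a self-contained record, so one stratum (for example, one treatment group) corresponds to one call of the function and one block of rows.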

The Kaplan-Meier plot corresponds to a data product from a survival analysis ( A ). On the contrary, the data from the analysis is stored in a machine-readable format ( B ) allowing for updates to the Kaplan-Meier plot and for use in downstream analyses.

Even in the showcased scenario, we assume that we have access to the clinical data; however, this might not be the case. Data protection is an important aspect of any research area. While data protection regulations have provided a way to share data and in turn improve the reproducibility of experiments, in clinical research, sharing sensitive subject-specific data is impractical or simply not possible for legal reasons. Another option is to only share aggregated data or the analysis results. While this option can still bring privacy issues, for example due to the presence of outliers, results are already widely shared in publications through visualizations like the ones shown in Figs. 3 and 4. For Kaplan-Meier plots, this has led to numerous approaches 17 , 18 , 19 , 20 on extracting/retrieving the underlying results data, since these are often required, e.g., in health technology assessments or when incorporating historical information into actual studies (e.g., Roychoudhury and Neuenschwander (2020) 21 ). In contrast to current practice, having an ARDM in place gives many options on what data to share to support results reusability in a variety of contexts. For example, even regulatory agencies can benefit from the ARDM since outputs such as tables, graphics, and listings can be easily generated from the results without the need to repeat or reproduce analyses. From our experience, it is common to initially share results with a limited group of people (e.g., within a team), where we do not give much importance to details like aesthetics. However, at a later stage, researchers need the results to update the visualization to suit a wider audience, or to use this data for future research. In the Kaplan-Meier plot example, this requires reverse-engineering by using tools to digitize the plot and create machine-readable results.

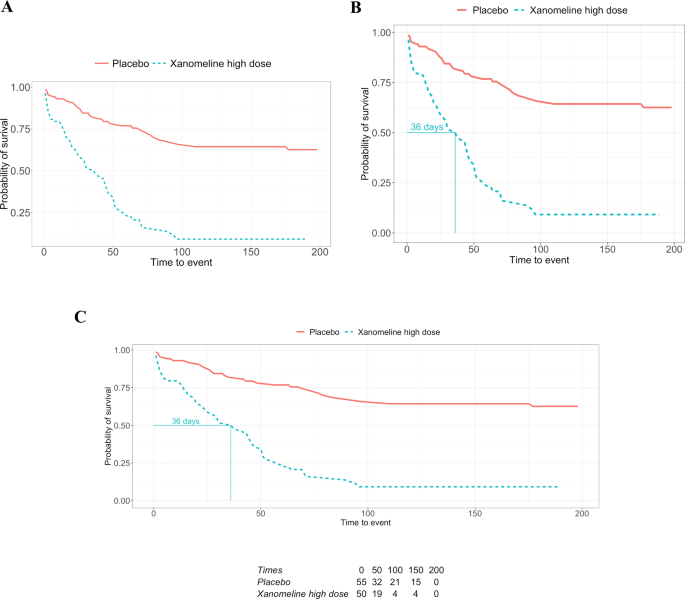

Employing an Analysis Results Data Model enables re-use at the results level rather than requiring source data. In this example, treatment arms can be removed ( A ), or additional summary statistics, such as the median survival time ( B ) or a risk table ( C ), can provide more context without repeating the underlying analysis.

A results visualization can appear in a variety of documents, from presentation slides to an initial report or a final publication; however, it is most likely not accompanied by the results used to create it. This hinders the reuse of the information (i.e., results) in the plot. A frequently encountered situation is illustrated in Fig. 4A, where one stratum is removed and the plot only shows two survival curves, for placebo and the high dose. This is not atypical in drug development, since after a general study overview, the focus is often on one dose only. While this update may seem trivial, from our experience, this task can require considerable time and effort due to the unavailability of the results. Without an analysis results data model or a known location where to find the results from the survival analysis, one must first locate the clinical data. Second, one must search for and find the analysis code and the instructions to create the Kaplan-Meier plot, and then repeat the analysis entirely. Third, it is advisable to confirm whether the new plot matches the one we want to update; this is especially important if the analysis had to be redone, as data transformations might have happened (e.g., different censoring than originally planned). Finally, one can filter the strata and create the plot in Fig. 4A.
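By contrast, when the survival estimates are already stored as data, the same update reduces to a filter on the stored rows. The sketch below illustrates this under stated assumptions: the table `result_survival`, its columns, and every value in it are made up for illustration, and the median survival is read off the stored curve as the first time at which the survival estimate is 0.5 or lower.

```python
import sqlite3

con = sqlite3.connect(":memory:")
# Hypothetical table holding stored Kaplan-Meier results, one row per
# study, treatment, and event time. Names are assumptions for this sketch.
con.execute("""
    CREATE TABLE result_survival (
        study_id  TEXT,
        treatment TEXT,
        time      REAL,
        n_at_risk INTEGER,
        n_events  INTEGER,
        survival  REAL,
        PRIMARY KEY (study_id, treatment, time)
    )""")

# Made-up values for illustration only.
rows = [
    ("STUDY-A", "placebo", 10, 20, 2, 0.90),
    ("STUDY-A", "placebo", 30, 15, 3, 0.72),
    ("STUDY-A", "low dose", 10, 20, 6, 0.70),
    ("STUDY-A", "low dose", 30, 10, 3, 0.49),
    ("STUDY-A", "high dose", 10, 20, 8, 0.60),
    ("STUDY-A", "high dose", 20, 12, 3, 0.45),
]
con.executemany("INSERT INTO result_survival VALUES (?, ?, ?, ?, ?, ?)", rows)


def curves(con, study_id, treatments):
    """Fetch stored survival curves for the selected treatment arms only."""
    placeholders = ", ".join("?" for _ in treatments)
    query = f"""SELECT treatment, time, survival
                FROM result_survival
                WHERE study_id = ? AND treatment IN ({placeholders})
                ORDER BY treatment, time"""
    result = {}
    for treatment, time, survival in con.execute(query, (study_id, *treatments)):
        result.setdefault(treatment, []).append((time, survival))
    return result


def median_survival(curve):
    """First stored time with survival <= 0.5, or None if never reached."""
    return next((t for t, s in curve if s <= 0.5), None)


# Drop the low-dose arm and derive an extra summary without re-running
# the survival analysis or touching patient-level data.
two_arm = curves(con, "STUDY-A", ["placebo", "high dose"])
medians = {arm: median_survival(curve) for arm, curve in two_arm.items()}
print(two_arm)
print(medians)
```

A plotting step would then draw step functions from `two_arm`; it is omitted here to keep the sketch free of third-party dependencies.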

The analysis results data model

To create an analysis results data model, the first step requires thinking of the results of the analysis as data itself. Through this abstraction, we can begin organizing the data in a common model linking (e.g., clinical) datasets with the analysis results. Before we further introduce the ARDM, it is necessary to clarify what an analysis and analysis results entail. An analysis is formally defined as a “detailed examination of the elements or structure of something” 22 . In practice, it is a collection of steps to inspect and understand data, explore a hypothesis, and generate results, inferences, and possibly predictions. Analyses are fluid and can change depending on the conclusions drawn after each one of the steps. Nonetheless, routine analyses promote conventions that we can use as a foundation to create analysis standards. For example, looking at the table of contents of a Clinical Study Report (CSR), we can see a collection of routine results summaries. Diving deeper into these sections, we can see the same or similar analysis results between CSRs of independent clinical studies, namely due to conventions 1 . For example, it is standard for a clinical trial to report the demographics and baseline characteristics of the study population, and a summary of adverse events. These data summaries may also be a collection of separate data analyses grouped together in tables or figures (e.g., descriptive statistics of various baseline measurements, or the incidence rates of common adverse drug reactions, by assigned treatment). Also, the same statistics, such as the number of patients assigned to a treatment arm, may be repeated throughout the CSR. Complex inferential statistics may also be repeated in various tables and figures. For example, key outcomes may be grouped together in a standalone summary of a drug’s benefit-risk profile. Therefore, without upfront planning, the same statistics may be implemented many times in separate code.

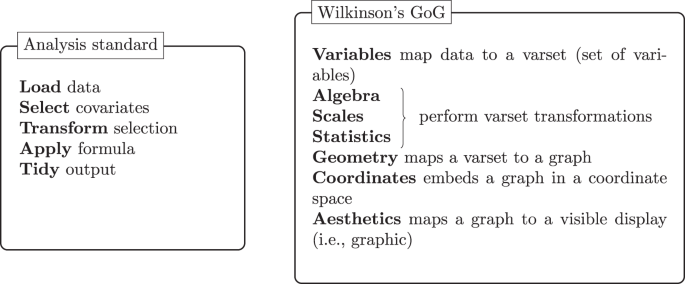

The analysis standard follows a grammar to define the steps in the analysis. Similarly, Wilkinson’s 23 grammar of graphics (GoG) concisely defines the components required to produce a graphic.

The analysis results are the outcome of the analysis and are typically rendered into tables, figures, and listings to facilitate the presentation to stakeholders. Some examples of applications that can reuse the same results are presented in Fig. 2 (right). Before the rendering, the results are stored in intermediate formats such as data frames or datasets. We can use this to our advantage and capture the results for later use in research by defining which elements to store and the respective constraints. This supports planning the analyses and the potential applications for the results, minimizing imprudent applications. An analysis results data model can be used to formalize the result elements to store and the constraints, with the additional benefit of making the relationships between the results explicit. For example, we can store intermediate results, generated after the initial analysis steps, and use them to achieve the final analysis results. Besides improving the reusability of results and the reproducibility of the analysis, establishing relationships enables retracing the analysis steps and promotes transparency.

Data standards are useful to integrate and represent data correctly by specifying formats, units, and fields, among others. Due to the many requirements in clinical development, guidelines detailing how to implement a data standard are also frequent and essential to ensure the standard is correctly implemented and to describe the fundamental principles that apply to all data. An analysis standard would thus define the inputs and outputs of the analysis as well as the steps necessary to achieve those outputs. While an analysis convention follows a general set of context-dependent analysis steps, a standard ensures the analysis steps are inclusive (i.e., independent of context), consistent, and uniform, where each step is specified through a grammar 23 , 24 , 25 or the querying syntax used in database systems. In Fig. 5, we compare the concepts behind an analysis standard with Wilkinson’s grammar of graphics (GoG) data flow. Both follow an immutable order, ensuring that previous steps must be fulfilled to achieve the end result. For example, any data transformation needs to occur before we apply a formula (e.g., compute the descriptive statistics); otherwise, the result of the analysis becomes dubious. The collection of steps forms a grammar; however, each step also offers choices. For example, “apply formula” can refer to a linear model or a Cox model. Wilkinson refers to this characteristic as the system’s richness by the means of “paths” constructed by choosing different designs, scales, statistical methods, geometries, coordinate systems, and aesthetics. In the context of the ARDM, analysis standards support pre-planning, compelling the researcher to iterate over the potential analysis routes and the underlying question the analysis should address. In general, it is good practice to write down the details of an analysis, for example using a SAP, with sufficient granularity that the analysis could be reproduced independently if only the source data were available. Thus, the analysis standards would translate the intent expressed in the SAP into clear and well-defined steps.
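The idea of an analysis standard as a fixed order of steps with interchangeable choices at each step can be sketched as follows. This is a simplified illustration, not the grammar shown in Fig. 5: the class `AnalysisStandard`, the three steps (transform, stratify, apply formula), the helper functions, and the toy records are all assumptions made for the example.

```python
from dataclasses import dataclass
from statistics import mean, stdev
from typing import Callable


@dataclass(frozen=True)
class AnalysisStandard:
    """A fixed sequence of steps; the callables are the per-step choices."""
    name: str
    transform: Callable   # choice for the data transformation step
    formula: Callable     # choice for the "apply formula" step

    def run(self, records, variable, by):
        # Step 1: the data transformation always happens first.
        prepared = self.transform(records, variable)
        # Step 2: stratify the prepared values by the grouping variable.
        groups = {}
        for record in prepared:
            groups.setdefault(record[by], []).append(record[variable])
        # Step 3: apply the formula per stratum and emit results as rows.
        return [
            {"standard": self.name, "variable": variable, by: level,
             **self.formula(values)}
            for level, values in sorted(groups.items())
        ]


def drop_missing(records, variable):
    """Transformation choice: remove records with a missing value."""
    return [r for r in records if r.get(variable) is not None]


def describe(values):
    """Formula choice: descriptive statistics for a continuous variable."""
    sd = stdev(values) if len(values) > 1 else None
    return {"n": len(values), "mean": mean(values), "sd": sd}


descriptive = AnalysisStandard("descriptive statistics", drop_missing, describe)

# Toy records for illustration only.
records = [
    {"treatment": "placebo", "age": 70},
    {"treatment": "placebo", "age": 74},
    {"treatment": "placebo", "age": None},
    {"treatment": "high dose", "age": 68},
    {"treatment": "high dose", "age": 72},
]
results = descriptive.run(records, variable="age", by="treatment")
for row in results:
    print(row)
```

Swapping `describe` for a different formula changes the path through the grammar, while the order of the steps stays fixed inside `run`.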

Analysis standards bring immediate benefits to the analysis data quality 26 , 27 as they enable the validation of software and methods. With software validity, we refer to whether a piece of software does what is expected of it and whether it clearly states how the output was reached. The validation of methods addresses whether an adequate statistical methodology was chosen. Due to its nature, this quality aspect is tightly related to other components of the clinical development process such as the SAP. In clinical development, standard operating procedures already cover many of these steps. However, they critically do not handle analysis results as a data source. Combining a data model with analysis standards would benefit clinical practice in four aspects:

Guaranteeing data quality and consistency across a clinical program, essentially creating a single source of truth designed to handle different levels of project abstraction. For example, from a single data analysis to a complete study, or a collection of studies.

Reusability by providing standardization across therapeutic areas and instigating the development of tools using the results instead of requiring individual patient data (e.g., interactive apps).

Simplicity as the analysis standard would encourage upfront planning and identify the necessary inputs, steps, and outputs to keep (e.g., reducing the complexity of forest plots and benefit-risk graphs summaries).

Efficiency by avoiding the manual and recurrent repetition of the analysis, and leveraging modularization and standardization of inferential statistics.

Analysis results datasets have been previously put forward as a solution to improve the uptake of graphics within Novartis, under the banner of graph-ready datasets 28 . Experienced study team leads have often implemented them for efficiency gains, especially around analysis outputs that would reuse existing summary statistics, for example, to support benefit-risk graphs where outcomes may come from different domains. Our experience has also revealed an element of institutional inertia. Standardizing analysis and results requires upfront planning, which is often seen as added effort. However, teams that have gone through the steps of setting up a data model and a lightweight analysis process have found efficiency and quality gains in reusing and maintaining code, as well as verifying and validating results. Regarding inferential results, instead of using results documents or repeating an analysis, we can simply access a common database where these are stored. An ARDM also simplifies modifications to the analysis (and consequently the results). With current practice, these changes might impact one function, program, or script in the best case, or multiple programs or scripts in the worst case. Using an ARDM only requires changes to one program, as these can automatically propagate to any downstream analyses. The validation is also simplified as we transition from comparing data products (e.g., RTF files and plots) to comparing datasets directly. Additionally, this brings clarity and transparency, and is suitable for automation.

Six guiding principles

To create the ARDM we follow a collection of principles addressing the obstacles commonly faced during the clinical research process but also present in other areas. These principles are highlighted in Table 1 and broadly put forward improvements to quality, accessibility, efficiency, and reproducibility. On top of providing a data management solution, the ARDM compels us to take a holistic view of the clinical research process, from the initial data capture to the potential end applications. With this view, we have a clearer picture of where deficiencies occur and of their impact on the process.

The “searchable” principle refers to the easy retrieval of information by guaranteeing storage in a known, consistent, and technically sound way. As we previously highlighted, it is common to have vast collections of results with very limited searchability, for example, figures in a collection of PDF documents. A practical solution is to have a data model to store the information consistently. In turn, this supports using a database that is by default more searchable than the PDF documents. With “searchable” in place, one can apply the “interoperable”, “nonredundant”, and “reusable and extensible” principles. In practice, this includes the use of consistent field names to store data in the database (e.g., the column “mean” has the mean value stored as a numeric value). The resulting coherent database is system-agnostic and can be queried through a variety of tools such as APIs. Thus, the data storing process supports straightforward querying, which in turn can be used to avoid storing redundant results. Overall, this facilitates the use of the stored (results) data for primary analysis (i.e., submission to regulators) and secondary purposes (e.g., meta-analysis) but also allows for extensions of the data model, provided the current model constraints are respected. The “separation of concerns” principle refers to having the analysis (i.e., analysis code) separated from the source data, the results (e.g., from a survival analysis as shown in Fig. 3B), and the data products (e.g., the Kaplan-Meier plot in Fig. 3A). Finally, the “community-driven” principle ensures that the ARDM can be used pervasively, for instance, such that locations for tracking and finding results are not just multiplied across organizations but are community-developed and ideally lead to a single, widely accepted resource that can be searched, as pioneered by the EMBL GWAS Catalog.
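Two of these principles lend themselves to enforcement by the database itself. The sketch below, again in Python with `sqlite3` and hypothetical table and column names, uses a `CHECK` constraint so that the column `mean` only accepts numeric values and a primary key so that the same result cannot be stored twice. The inserted values are placeholders.

```python
import sqlite3

con = sqlite3.connect(":memory:")
# Hypothetical results table; names and values are assumptions for this sketch.
con.execute("""
    CREATE TABLE result_descriptive (
        study_id  TEXT NOT NULL,
        variable  TEXT NOT NULL,
        treatment TEXT NOT NULL,
        -- Consistent field name with an enforced numeric type.
        mean      REAL CHECK (typeof(mean) IN ('real', 'integer')),
        -- One row per study, variable, and treatment: no redundant results.
        PRIMARY KEY (study_id, variable, treatment)
    )""")


def try_insert(con, row):
    """Insert a result row; return False if a model constraint rejects it."""
    try:
        with con:  # commits on success, rolls back on error
            con.execute("INSERT INTO result_descriptive VALUES (?, ?, ?, ?)", row)
        return True
    except sqlite3.IntegrityError:
        return False


accepted = try_insert(con, ("STUDY-A", "age", "placebo", 72.5))
duplicate = try_insert(con, ("STUDY-A", "age", "placebo", 72.5))
non_numeric = try_insert(con, ("STUDY-A", "age", "high dose", "n/a"))
stored = con.execute("SELECT COUNT(*) FROM result_descriptive").fetchone()[0]
print(accepted, duplicate, non_numeric, stored)
```

Pushing these rules into the schema means that any tool writing to the database, regardless of programming language, is held to the same constraints.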

In many industries where sub-optimal but quick solutions are preferred, technical debt is a growing problem. While some amount of technical debt is inevitable, understanding our processes can point us to where to make progressive updates and improvements. For example, upfront planning using analysis standards would reduce this debt by default, as our starting point is a set of previously verified and validated analyses (i.e., analysis standards). In an effort to continue reducing the debt, the ARDM separation of concerns principle streamlines changes and updates to processes since the analysis, results, and products are separate entities. Standardizing how to store results enables the use of different programming languages to perform analysis with traditionally non-comparable output formats (e.g., SAS and R). Furthermore, we believe the ARDM should grow organically and be community-driven, supporting consensus building and cross-organization access.

The ARDM provides a solution to handle analysis results as data by creating a single source of truth. To guarantee the accuracy of the source, it leverages analysis standards (i.e., validated analyses) with known outputs which are then organized in a database following the proposed data model. The use of analysis standards supports the pre-planning of analyses, compelling the researcher to iterate on the best approach for analyzing the data, and potentially deciding to use pre-existing and appropriate analysis standards. Considering the ARDM from the biomedical data lifecycle view (e.g., through the lens of the Harvard Medical School’s Biomedical Data Lifecycle), the ARDM touches the documentation & metadata, analysis ready datasets, data repositories, data sharing, and reproducibility stages. However, we take the point of view of a clinical researcher (both data consumer and producer) who sees the recurring problem of having to extract results data from published work. Therefore, in the context of the clinical trial lifecycle 2 , extending CDISC with the ARDM would touch on all of the biomedical data lifecycle phases as the ARDM relies on details present in supporting documents like the statistical analysis plan and data specifications.

The concept of creating standards through a common data model is recognised as good data management and stewardship practice. A few examples include the Observational Medical Outcomes Partnership data model, designed to standardize the structure and content of observational data 29 , and the Large-scale Evidence Generation and Evaluation across a Network of Databases research initiative to generate and store evidence from observational data 30 . The data model created by the Sentinel initiative, led by the Food and Drug Administration (FDA), is tailored to organize medical billing information and electronic health records from a network of health care organizations. Similarly, the National Patient-Centered Clinical Research Network also established a standard to organize the data collected from their network of partners. Finally, expanding the search to translational medicine, the Informatics for Integrating Biology and the Bedside introduced a standard to organize electronic medical records and clinical research data 31 .

Alongside data models, standard processes have been established to generate analysis results, such as the requirement to document analyses in SAPs 32 , including all data transformations from the source data to analysis-ready datasets. However, analyses can be complex and dependent on technical factors, such as the statistical software used, as well as undocumented analysis choices throughout the pipeline, from source data to result. Even less complex routine analyses are error-prone and might not be clearly reproducible. Altogether, this process is time- and resource-consuming. A proposed solution is to perform the analysis automatically. With this in mind and targeting clinical development, Brix et al. 33 introduced ODM Data Analysis, a tool to automatically validate, monitor, and generate descriptive statistics from clinical data stored in the CDISC Operational Data Model format. The FDA’s Sentinel Initiative is also capable of generating descriptive summaries and performing specific analyses leveraging the proprietary Sentinel Routine Querying System.

Following this direction, the natural progression would be to create a standard suited for storing analysis results. Such an idea is implemented in the genome-wide association studies (GWAS) catalogue, where curators assess GWAS literature, extract data, and store it following a standard that includes the summary statistics. Taking a step in this direction, CDISC began the 360 initiative to support the implementation of standards as linked metadata in an attempt to improve efficiency, consistency, and reusability across clinical research. Nonetheless, the irreproducibility of research results remains an obstacle in clinical research and has brought up calls for global data standardization to enable semantic interoperability and adherence to the FAIR principles 34. In our view, analysis standards and the ARDM are an important contribution to this initiative.

An important aspect that we did not explicitly discuss is the quality of the raw (source) data, which ultimately serve as the source of any analysis whose results datasets are created through the ARDM. While the ARDM can be seen as a concept naturally tied to the CDISC philosophy, which is most prominently used in drug development studies conducted in a highly regulated environment with rigorous data quality standards, its applicability goes far beyond this setting. For example, analyses conducted on open health data could also benefit from the ARDM, which would help to simplify traceability, exchangeability, and reproducibility of analysis results. However, when working with this kind of data, understanding the quality of the underlying raw data is of paramount importance, particularly because the ARDM will make analysis results more easily accessible and reusable, including to an audience that may have only a limited understanding of how to assess the quality of the underlying raw data. In this wider context, it may be beneficial to use data quality evaluation approaches that were developed for a non-technical audience or for an audience without subject-matter (domain) expertise 35. This will allow the audience to interpret the results while taking the quality of the underlying raw data into account.

Using the proposed ARDM comes with a set of requirements and limitations. Firstly, the provided clinical data must follow a consistent standard (i.e., CDISC ADaM). Our solution involves automatically populating a database; hence, there are expectations regarding the structure of the data. Similarly, data standards are necessary to enable analysis standards. If the analysis input expectations are not met, the analysis is unsuccessful and no results are produced or stored. Further, when a data standard is updated, it is necessary to also update the analysis standards and the ARDM accordingly. A second limitation is the necessity of analysis standards. Without quality analysis standards, the quality of the source of truth is not guaranteed. Creating analysis standards requires a good understanding of the analysis to correctly define the underlying grammar and identify relevant decision options for the user (e.g., filtering data before modeling). The third limitation concerns the applications. At the moment, the ARDM stores and organizes results in a way that suits reuse in known applications (e.g., creating plots, tables, and requesting individual result values). As future applications are unknown, the data model might not store all the information needed. However, given the ARDM's modular approach, it is only necessary to update the result information to be kept rather than the entire workflow. A final limitation concerns the supported data modalities. The proposed ARDM is implemented on tabular clinical trial data. However, it is possible to adapt the ARDM and its design choices (e.g., type of database) to support diverse data. For example, the summary statistics present in the genome-wide association studies (GWAS) catalog could be stored following an ARDM.
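
The requirement that no results are produced or stored when the input expectations are not met can be illustrated with a minimal Python sketch. It is not the implementation referenced in this article: the `results` table layout, the function names, and the choice of required columns are assumptions made for illustration, and the sketch assumes that `CNSR == 0` marks an observed event.

```python
import sqlite3

# Hypothetical minimum set of columns a time-to-event analysis standard expects.
REQUIRED_COLUMNS = {"USUBJID", "TRT01P", "AVAL", "CNSR"}


def run_analysis(rows):
    """Return per-arm counts, or None when the input expectations are not met."""
    if not rows or any(not REQUIRED_COLUMNS.issubset(row) for row in rows):
        return None
    summary = {}
    for row in rows:
        arm = summary.setdefault(row["TRT01P"], {"n": 0, "events": 0})
        arm["n"] += 1
        # Assumption: CNSR == 0 marks an observed event, any other value is censored.
        arm["events"] += 1 if row["CNSR"] == 0 else 0
    return summary


def store_results(conn, analysis_id, summary):
    """Write results in long format; write nothing when the analysis failed."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS results "
        "(analysis_id TEXT, arm TEXT, statistic TEXT, value REAL)"
    )
    if summary is None:
        return 0
    records = [
        (analysis_id, arm, statistic, value)
        for arm, stats in summary.items()
        for statistic, value in stats.items()
    ]
    conn.executemany("INSERT INTO results VALUES (?, ?, ?, ?)", records)
    conn.commit()
    return len(records)


conn = sqlite3.connect(":memory:")
# Toy records for illustration only; they are not taken from any real dataset.
valid = [
    {"USUBJID": "01", "TRT01P": "Placebo", "AVAL": 12.0, "CNSR": 0},
    {"USUBJID": "02", "TRT01P": "Placebo", "AVAL": 30.0, "CNSR": 1},
    {"USUBJID": "03", "TRT01P": "Active", "AVAL": 25.0, "CNSR": 0},
]
invalid = [{"USUBJID": "04", "AVAL": 9.0}]  # missing TRT01P and CNSR

stored_valid = store_results(conn, "tte_events", run_analysis(valid))
stored_invalid = store_results(conn, "tte_events_bad", run_analysis(invalid))
```

Keeping the input check separate from the storage step means that a change to the data standard only requires updating the expected columns and the analysis function, while the long-format results table stays unchanged.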

The current option to share and access clinical trial results is ClinicalTrials.gov. Nonetheless, this is a repository and does not permit querying results, as these are not stored as data (i.e., a machine-readable dataframe). The ARDM is an attempt to bring forward the problem of reproducibility and the lack of a single source of truth for analysis results. With it, we call for a paradigm shift where the target for the data analysis becomes the data model. Nonetheless, we understand the ARDM's limitations and view it as one solution to a complex problem. We believe the best way to understand how the ARDM should evolve, or to shape it into a better solution, is to hear the opinions of the community. Hence, our underlying objective is to get the community’s attention, discover similar initiatives, and converge on how to move forward in establishing analysis results as a data source to support future reusability and knowledge discovery.
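
To make the contrast with a document repository concrete, the following Python sketch shows what querying results stored as data could look like. The table layout, the helper names, the study identifiers, and all values are made up for illustration and do not come from any real trial or from the implementation referenced in this article.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE results "
    "(study TEXT, analysis TEXT, arm TEXT, statistic TEXT, value REAL)"
)
# Made-up values for illustration only.
conn.executemany(
    "INSERT INTO results VALUES (?, ?, ?, ?, ?)",
    [
        ("STUDY-A", "time_to_event", "Placebo", "n_events", 40.0),
        ("STUDY-A", "time_to_event", "Active", "n_events", 28.0),
        ("STUDY-B", "time_to_event", "Placebo", "n_events", 35.0),
        ("STUDY-B", "time_to_event", "Active", "n_events", 30.0),
    ],
)


def get_statistic(conn, study, arm, statistic):
    """Request a single result value; return None when it is not stored."""
    row = conn.execute(
        "SELECT value FROM results WHERE study = ? AND arm = ? AND statistic = ?",
        (study, arm, statistic),
    ).fetchone()
    return None if row is None else row[0]


def across_studies(conn, statistic):
    """Collect one statistic for every study and arm, ready for a table or plot."""
    return conn.execute(
        "SELECT study, arm, value FROM results "
        "WHERE statistic = ? ORDER BY study, arm",
        (statistic,),
    ).fetchall()


single_value = get_statistic(conn, "STUDY-A", "Active", "n_events")
comparison = across_studies(conn, "n_events")
```

Because each result is a row rather than a cell in a rendered table, the same store can serve an individual value request and a cross-study comparison without re-running any analysis.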

Data availability

The CDISC Pilot Project ADaM ADSL, ADTTE, and ADAE datasets were used to support the implementation of the analysis results data model. These data can be found at the PHUSE scripts repository (https://github.com/phuse-org/phuse-scripts/blob/fa55614d7d178a193cc9b6e74256ea2d8dcf5d80/data/adam/TDF_ADaM_v1.0.zip) and at the repository supporting this manuscript 15.

Code availability

The implementation of the analysis results data model is available on GitHub 15. This repository exemplifies how to construct the data model and the respective schema, and shows how to query the underlying database. Furthermore, we provide three output examples to visualize the results.

References

European Medicines Agency. ICH Topic E 3 - Structure and Content of Clinical Study Reports. https://www.ema.europa.eu/en/documents/scientific-guideline/ich-e-3-structure-content-clinical-study-reports-step-5_en.pdf (1996).

Committee on Strategies for Responsible Sharing of Clinical Trial Data, Board on Health Sciences Policy & Institute of Medicine. Sharing clinical trial data (National Academies Press, Washington, D.C. 2015).

Maciocci, G., Aufreiter, M. & Bentley, N. Introducing eLife’s first computationally reproducible article. https://elifesciences.org/labs/ad58f08d/introducing-elife-s-first-computationally-reproducible-article (2019).

R Core Team. R: A Language and Environment for Statistical Computing. R Foundation for Statistical Computing, Vienna, Austria. https://www.R-project.org/ (2021).

Robinson, D., Hayes, A. & Couch, S. broom: Convert Statistical Objects into Tidy Tibbles. https://CRAN.R-project.org/package=broom . R package version 0.7.6 (2021).

Siebert, M. et al. Data-sharing and re-analysis for main studies assessed by the European Medicines Agency: a cross-sectional study on European public assessment reports. BMC medicine 20, 1–14 (2022).

Gelman, A. & Loken, E. The garden of forking paths: Why multiple comparisons can be a problem, even when there is no “fishing expedition” or “p-hacking” and the research hypothesis was posited ahead of time. Dep. Stat. Columbia Univ. 348 (2013).

Wicherts, J. M. et al. Degrees of freedom in planning, running, analyzing, and reporting psychological studies: A checklist to avoid p-hacking. Front. psychology 1832 (2016).

Devezer, B., Navarro, D. J., Vandekerckhove, J. & Ozge Buzbas, E. The case for formal methodology in scientific reform. Royal Soc. open science 8, 200805 (2020).

Higgins, J. P. et al. Cochrane handbook for systematic reviews of interventions (John Wiley & Sons, 2019).

Tendal, B. et al. Disagreements in meta-analyses using outcomes measured on continuous or rating scales: observer agreement study. BMJ 339 (2009).

Wilkinson, M. D. et al. The FAIR guiding principles for scientific data management and stewardship. Sci. data 3, 1–9 (2016).

Huser, V., Sastry, C., Breymaier, M., Idriss, A. & Cimino, J. J. Standardizing data exchange for clinical research protocols and case report forms: An assessment of the suitability of the Clinical Data Interchange Standards Consortium (CDISC) Operational Data Model (ODM). J. biomedical informatics 57, 88–99 (2015).

European Medicines Agency. European Medicines Regulatory Network Data Standardisation Strategy. https://www.ema.europa.eu/en/documents/other/european-medicines-regulatory-network-data-standardisation-strategy_en.pdf (2021).

Barros, J. M., Widmer, L. A. & Baillie, M. Analysis Results Data Model. Zenodo, https://doi.org/10.5281/zenodo.7163032 (2022).

Kaplan, E. L. & Meier, P. Nonparametric estimation from incomplete observations. J. Am. Stat. Assoc. 53, 457–481 (1958).

Guyot, P., Ades, A., Ouwens, M. J. & Welton, N. J. Enhanced secondary analysis of survival data: reconstructing the data from published Kaplan-Meier survival curves. BMC medical research methodology 12, 1–13 (2012).

Liu, Z., Rich, B. & Hanley, J. A. Recovering the raw data behind a non-parametric survival curve. Syst. reviews 3, 1–10 (2014).

Liu, N., Zhou, Y. & Lee, J. J. IPDfromKM: reconstruct individual patient data from published Kaplan-Meier survival curves. BMC Med. Res. Methodol. 21, 1–22 (2021).

Rogula, B., Lozano-Ortega, G. & Johnston, K. M. A method for reconstructing individual patient data from Kaplan-Meier survival curves that incorporate marked censoring times. MDM Policy & Pract. 7 (2022).

Roychoudhury, S. & Neuenschwander, B. Bayesian leveraging of historical control data for a clinical trial with time-to-event endpoint. Stat. medicine 39, 984–995 (2020).

Cambridge University Press. Analysis. In Cambridge Academic Content Dictionary, https://dictionary.cambridge.org/dictionary/english/analysis (Cambridge University Press, 2021).

Wilkinson, L. The grammar of graphics. In Handbook of computational statistics, 375–414 (Springer, 2012).

Wickham, H. Tidy data. J. Stat. Softw. 59, 1–23 (2014).

Lee, S., Cook, D. & Lawrence, M. Plyranges: A grammar of genomic data transformation. Genome biology 20, 1–10 (2019).

PhUSE Standard Analysis and Code Sharing Working Group. Best Practices for Quality Control and Validation. https://phuse.s3.eu-central-1.amazonaws.com/Deliverables/Standard+Analyses+and+Code+Sharing/Best+Practices+for+Quality+Control+%26+Validation.pdf (2020).

European Medicines Agency. ICH Topic E 6 - Guideline for good clinical practice (R2). https://www.ema.europa.eu/en/documents/scientific-guideline/ich-e-6-r2-guideline-good-clinical-practice-step-5_en.pdf (2015).

Vandemeulebroecke, M. et al. How can we make better graphs? An initiative to increase the graphical expertise and productivity of quantitative scientists. Pharm. Stat. 18, 106–114 (2019).

Observational Medical Outcomes Partnership. OMOP Common Data Model. https://ohdsi.github.io/CommonDataModel/ (2021).

Schuemie, M. J. et al. Principles of large-scale evidence generation and evaluation across a network of databases (LEGEND). J. Am. Med. Informatics Assoc. 27, 1331–1337 (2020).

Murphy, S. N. et al. Serving the enterprise and beyond with informatics for integrating biology and the bedside (i2b2). J. Am. Med. Informatics Assoc. 17, 124–130 (2010).

Gamble, C. et al. Guidelines for the content of statistical analysis plans in clinical trials. JAMA 318, 2337–2343 (2017).

Brix, T. J. et al. ODM data analysis: a tool for the automatic validation, monitoring and generation of generic descriptive statistics of patient data. PloS one 13, e0199242 (2018).

Jauregui, B. et al. The turning point for clinical research: Global data standardization. J. Appl. Clin. Trials (2019).

Nikiforova, A. Analysis of open health data quality using data object-driven approach to data quality evaluation: insights from a Latvian context. In IADIS International Conference e-Health, 119–126 (2019).

Van Reusel, P. CDISC 360: What’s in It for Me? www.cdisc.org/sites/default/files/2021-10/CDISC_360_2021_EU_Interchange.pdf (2021).

Acknowledgements

We thank Carlotta Caroli, Nicholas Kelley, and Shahram Ebadollahi for their role in establishing and stewarding the AI4Life residency program. We also want to acknowledge Janice Branson for her valuable comments and support in this journey. Finally, J.M.B. would like to thank Idorsia Pharmaceuticals for the support during the final submission.

Author information

This work took place and was submitted when the author was at Novartis: Joana M. Barros.

Authors and Affiliations

Analytics, Novartis Pharma AG, Basel, Switzerland

Joana M. Barros, Lukas A. Widmer, Mark Baillie & Simon Wandel

Department of Biometry, Idorsia Pharmaceuticals, Allschwil, Switzerland