
Gurupradeep/deeplearning.ai-Assignments


Deep learning specialisation.

Instructor: Andrew Ng

This repository contains solutions to all the programming assignments, along with a few output images. It also includes some of the important papers referred to during the course.

NOTE: Use the solutions for reference only :)

This specialisation has five courses.

Course 1: Neural Networks and Deep Learning

Learning Objectives :

- Understand the major technology trends driving Deep Learning

- Be able to build, train and apply fully connected deep neural networks

- Know how to implement efficient (vectorized) neural networks

- Understand the key parameters in a neural network's architecture

Programming Assignments

- Week 2 - Programming Assignment 1 - Logistic Regression with a Neural Network mindset

- Week 3 - Programming Assignment 2 - Planar data classification with one hidden layer

- Week 4 - Programming Assignment 3 - Building your Deep Neural Network: Step by Step

- Week 4 - Programming Assignment 4 - Deep Neural Network for Image Classification: Application

Course 2: Improving Deep Neural Networks: Hyperparameter tuning, Regularization and Optimization

- Understand industry best-practices for building deep learning applications.

- Be able to effectively use the common neural network "tricks", including initialization, L2 and dropout regularization, batch normalization, and gradient checking.

- Be able to implement and apply a variety of optimization algorithms, such as mini-batch gradient descent, Momentum, RMSprop and Adam, and check for their convergence.

- Understand new best practices for the deep learning era on how to set up train/dev/test sets and analyze bias/variance.

- Be able to implement a neural network in TensorFlow.

- Week 1 - Programming Assignment 1 - Initialization

- Week 1 - Programming Assignment 2 - Regularization

- Week 1 - Programming Assignment 3 - Gradient Checking

- Week 2 - Programming Assignment 4 - Optimization Methods

- Week 3 - Programming Assignment 5 - TensorFlow Tutorial

Course 3: Structuring Machine Learning Projects

- Understand how to diagnose errors in a machine learning system

- Be able to prioritize the most promising directions for reducing error

- Understand complex ML settings, such as mismatched training/test sets, and comparing to and/or surpassing human-level performance

- Know how to apply end-to-end learning, transfer learning, and multi-task learning

This course doesn't have any programming assignments

Course 4: Convolutional Neural Networks

- Understand how to build a convolutional neural network, including recent variations such as residual networks.

- Know how to apply convolutional networks to visual detection and recognition tasks.

- Know how to use neural style transfer to generate art.

- Be able to apply these algorithms to a variety of image, video, and other 2D or 3D data.

- Week 1 - Programming Assignment 1 - Convolution model Step by Step

- Week 1 - Programming Assignment 2 - Convolution model Application

- Week 2 - Programming Assignment 3 - Keras Tutorial Happy House

- Week 2 - Programming Assignment 4 - Residual Networks

- Week 3 - Programming Assignment 5 - Autonomous driving application - Car Detection

- Week 4 - Programming Assignment 6 - Face Recognition for Happy House

- Week 4 - Programming Assignment 7 - Art Generation with Neural Style transfer

Course 5: Sequence Models

- Understand how to build and train Recurrent Neural Networks (RNNs), and commonly-used variants such as GRUs and LSTMs.

- Be able to apply sequence models to natural language problems, including text synthesis.

- Be able to apply sequence models to audio applications, including speech recognition and music synthesis.

- Week 1 - Programming Assignment 1 - Building a Recurrent Neural Network

- Week 1 - Programming Assignment 2 - Character level Dinosaur Name generation

- Week 1 - Programming Assignment 3 - Music Generation

- Week 2 - Programming Assignment 1 - Operations on Word vectors

- Week 2 - Programming Assignment 2 - Emojify

- Week 3 - Programming Assignment 1 - Neural Machine translation with attention

- Week 3 - Programming Assignment 2 - Trigger word detection

IMPORTANT PAPERS

- Neural Style Transfer


Coursera: Neural Networks and Deep Learning (Week 4A) [Assignment Solution] - deeplearning.ai

I have recently completed the Neural Networks and Deep Learning course from Coursera by deeplearning.ai. While doing the course we have to go through various quizzes and assignments in Python. Here, I am sharing my solutions for the weekly assignments throughout the course. These solutions are for reference only.

> It is recommended that you solve the assignments yourself, honestly; only then does it make sense to complete the course.

> But in case you get stuck in between, feel free to refer to the solutions provided by me.

Don't just copy and paste the code for the sake of completion. Even if you copy the code, make sure you understand it first.

Building your Deep Neural Network: Step by Step

In this notebook, you will implement all the functions required to build a deep neural network. In the next assignment, you will use these functions to build a deep neural network for image classification.

After this assignment you will be able to:

- Use non-linear units like ReLU to improve your model

- Build a deeper neural network (with more than 1 hidden layer)

- Implement an easy-to-use neural network class

Notation:

- Superscript $[l]$ denotes a quantity associated with the $l^{th}$ layer. Example: $a^{[l]}$ is the $l^{th}$ layer activation. $W^{[l]}$ and $b^{[l]}$ are the $l^{th}$ layer parameters.

- Superscript $(i)$ denotes a quantity associated with the $i^{th}$ example. Example: $x^{(i)}$ is the $i^{th}$ training example.

- Lowerscript $i$ denotes the $i^{th}$ entry of a vector. Example: $a^{[l]}_i$ denotes the $i^{th}$ entry of the $l^{th}$ layer's activations.

Let's get started!

1 - Packages

Let's first import all the packages that you will need during this assignment.

- numpy is the main package for scientific computing with Python.

- matplotlib is a library to plot graphs in Python.

- dnn_utils provides some necessary functions for this notebook.

- testcases provides some test cases to assess the correctness of your functions.

- np.random.seed(1) is used to keep all the random function calls consistent. It will help us grade your work. Please don't change the seed.

2 - Outline of the Assignment

- Initialize the parameters for a two-layer network and for an $L$-layer neural network.

- Complete the LINEAR part of a layer's forward propagation step (resulting in $Z^{[l]}$).

- We give you the ACTIVATION function (relu/sigmoid).

- Combine the previous two steps into a new [LINEAR->ACTIVATION] forward function.

- Stack the [LINEAR->RELU] forward function L-1 times (for layers 1 through L-1) and add a [LINEAR->SIGMOID] at the end (for the final layer $L$). This gives you a new L_model_forward function.

- Compute the loss.

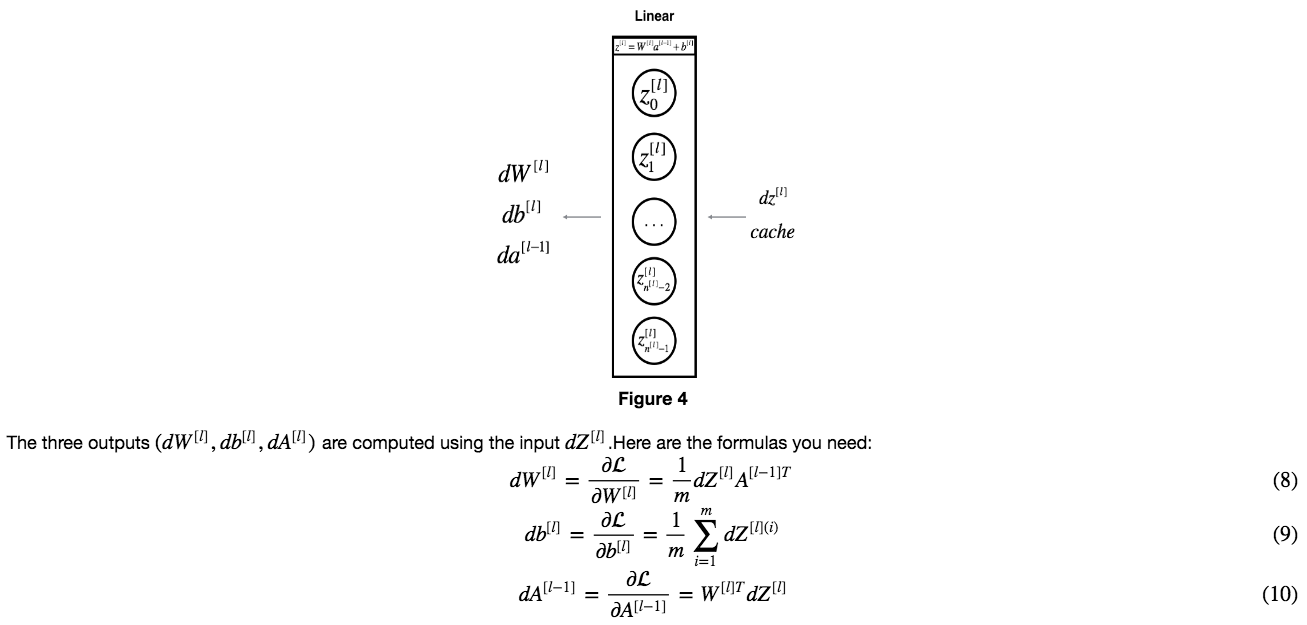

- Complete the LINEAR part of a layer's backward propagation step.

- We give you the gradient of the ACTIVATION function (relu_backward/sigmoid_backward).

- Combine the previous two steps into a new [LINEAR->ACTIVATION] backward function.

- Stack [LINEAR->RELU] backward L-1 times and add [LINEAR->SIGMOID] backward in a new L_model_backward function

- Finally update the parameters.


3 - Initialization

3.1 - 2-layer Neural Network

- The model's structure is: LINEAR -> RELU -> LINEAR -> SIGMOID.

- Use random initialization for the weight matrices. Use np.random.randn(shape) * 0.01 with the correct shape.

- Use zero initialization for the biases. Use np.zeros(shape).
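The three points above can be sketched as follows. This is a minimal illustration, not the graded notebook code: the function name `initialize_parameters`, the argument names `n_x`, `n_h`, `n_y`, and the `seed` argument are assumptions made for this example.

```python
import numpy as np

def initialize_parameters(n_x, n_h, n_y, seed=1):
    """Initialize a LINEAR -> RELU -> LINEAR -> SIGMOID network.

    n_x, n_h, n_y are the input, hidden and output layer sizes.
    The `seed` argument is an assumption added here for reproducibility.
    """
    np.random.seed(seed)
    W1 = np.random.randn(n_h, n_x) * 0.01  # small random weights break symmetry
    b1 = np.zeros((n_h, 1))                # biases start at zero
    W2 = np.random.randn(n_y, n_h) * 0.01
    b2 = np.zeros((n_y, 1))
    return {"W1": W1, "b1": b1, "W2": W2, "b2": b2}

params = initialize_parameters(3, 2, 1)
print({name: value.shape for name, value in params.items()})
```

Each weight matrix has shape (units in this layer, units in the previous layer), which is what lets W multiply the previous layer's activations directly.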

3.2 - L-layer Neural Network

- The model's structure is [LINEAR -> RELU] × (L-1) -> LINEAR -> SIGMOID. That is, it has $L-1$ layers using a ReLU activation function followed by an output layer with a sigmoid activation function.

- Use random initialization for the weight matrices. Use np.random.randn(shape) * 0.01.

- Use zero initialization for the biases. Use np.zeros(shape).

- We will store $n^{[l]}$, the number of units in each layer, in a variable layer_dims. For example, the layer_dims for the "Planar Data classification model" from last week would have been [2,4,1]: there were two inputs, one hidden layer with 4 hidden units, and an output layer with 1 output unit. This means W1's shape was (4,2), b1's was (4,1), W2's was (1,4) and b2's was (1,1). Now you will generalize this to $L$ layers!

- Here is the implementation for $L=1$ (one-layer neural network). It should inspire you to implement the general case (L-layer neural network).
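One way the general case might look is sketched below. The function name `initialize_parameters_deep` and the `seed` argument are assumptions for this example; the key idea is only that layer l gets a weight matrix of shape (layer_dims[l], layer_dims[l-1]) and a bias of shape (layer_dims[l], 1).

```python
import numpy as np

def initialize_parameters_deep(layer_dims, seed=3):
    """Initialize W1..W(L) and b1..b(L) from a list of layer sizes.

    layer_dims[0] is the input size; the `seed` argument is an assumption.
    """
    np.random.seed(seed)
    parameters = {}
    num_layers = len(layer_dims)  # counts the input layer as well
    for l in range(1, num_layers):
        parameters["W" + str(l)] = np.random.randn(layer_dims[l], layer_dims[l - 1]) * 0.01
        parameters["b" + str(l)] = np.zeros((layer_dims[l], 1))
    return parameters

# The [2, 4, 1] example from the text: shapes should be (4,2), (4,1), (1,4), (1,1).
parameters = initialize_parameters_deep([2, 4, 1])
for name, value in parameters.items():
    print(name, value.shape)
```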

4 - Forward propagation module

4.1 - Linear Forward

- LINEAR -> ACTIVATION where ACTIVATION will be either ReLU or Sigmoid.

- [LINEAR -> RELU] × (L-1) -> LINEAR -> SIGMOID (whole model)

Expected output:

| Variable | Value |
|---|---|
| Z | [[ 3.26295337 -1.23429987]] |
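The linear step computes $Z^{[l]} = W^{[l]} A^{[l-1]} + b^{[l]}$. A minimal sketch of it is given below; the function name `linear_forward` and the cache layout `(A, W, b)` are assumptions for this example, and the toy inputs are made up rather than taken from the notebook's test cases.

```python
import numpy as np

def linear_forward(A, W, b):
    """Compute Z = W·A + b and keep the inputs for the backward pass."""
    Z = W @ A + b  # b has shape (n_l, 1) and broadcasts across the m examples
    assert Z.shape == (W.shape[0], A.shape[1])
    cache = (A, W, b)
    return Z, cache

# Toy inputs (illustrative only): 2 input units, 1 output unit, 1 example.
A = np.array([[1.0], [1.0]])
W = np.array([[1.0, 2.0]])
b = np.array([[0.5]])
Z, linear_cache = linear_forward(A, W, b)
print(Z)
```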

4.2 - Linear-Activation Forward

- Sigmoid: $\sigma(Z) = \sigma(WA + b) = \frac{1}{1 + e^{-(WA + b)}}$. We have provided you with the sigmoid function. This function returns two items: the activation value "A" and a "cache" that contains "Z" (it's what we will feed in to the corresponding backward function). To use it you could just call: A, activation_cache = sigmoid(Z)

- ReLU: The mathematical formula for ReLU is $A = RELU(Z) = \max(0, Z)$. We have provided you with the relu function. This function returns two items: the activation value "A" and a "cache" that contains "Z" (it's what we will feed in to the corresponding backward function). To use it you could just call: A, activation_cache = relu(Z)

Expected output:

| Activation | A |
|---|---|
| With sigmoid | [[ 0.96890023 0.11013289]] |
| With ReLU | [[ 3.43896131 0. ]] |
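A possible shape for the combined [LINEAR->ACTIVATION] forward step is sketched below. The `sigmoid` and `relu` functions here are stand-ins written for this example (the real ones come from dnn_utils and may differ in detail), and the function name `linear_activation_forward` and the cache layout are assumptions.

```python
import numpy as np

def sigmoid(Z):
    """Stand-in for the provided helper: returns (A, cache) with cache = Z."""
    return 1.0 / (1.0 + np.exp(-Z)), Z

def relu(Z):
    """Stand-in for the provided helper: returns (A, cache) with cache = Z."""
    return np.maximum(0, Z), Z

def linear_activation_forward(A_prev, W, b, activation):
    """LINEAR -> ACTIVATION forward step for one layer."""
    Z = W @ A_prev + b
    linear_cache = (A_prev, W, b)
    if activation == "sigmoid":
        A, activation_cache = sigmoid(Z)
    elif activation == "relu":
        A, activation_cache = relu(Z)
    else:
        raise ValueError("activation must be 'sigmoid' or 'relu'")
    return A, (linear_cache, activation_cache)

# Toy inputs (illustrative only); here Z = 1*1 + (-2)*1 + 0 = -1.
A_prev = np.array([[1.0], [1.0]])
W = np.array([[1.0, -2.0]])
b = np.array([[0.0]])
A_relu, _ = linear_activation_forward(A_prev, W, b, "relu")
A_sig, cache = linear_activation_forward(A_prev, W, b, "sigmoid")
print(A_relu, A_sig)
```

Keeping both caches together in one tuple means the backward step for a layer needs only a single object from the forward pass.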

4.3 - L-Layer Model

- Use the functions you had previously written

- Use a for loop to replicate [LINEAR->RELU] (L-1) times

- Don't forget to keep track of the caches in the "caches" list. To add a new value c to a list, you can use list.append(c).

Expected output:

| Variable | Value |
|---|---|
| AL | [[ 0.03921668 0.70498921 0.19734387 0.04728177]] |
| Length of caches list | 3 |
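The loop described above could be sketched like this. The compact `linear_activation_forward` here is a stand-in written for this example, and the parameter dictionary keys (`W1`, `b1`, ...) and the toy layer sizes are assumptions, not the notebook's test case.

```python
import numpy as np

def linear_activation_forward(A_prev, W, b, activation):
    """Compact stand-in: returns A and ((A_prev, W, b), Z)."""
    Z = W @ A_prev + b
    A = np.maximum(0, Z) if activation == "relu" else 1.0 / (1.0 + np.exp(-Z))
    return A, ((A_prev, W, b), Z)

def L_model_forward(X, parameters):
    """[LINEAR->RELU] x (L-1) followed by LINEAR->SIGMOID."""
    caches = []
    A = X
    L = len(parameters) // 2  # each layer contributes one W and one b
    for l in range(1, L):
        A, cache = linear_activation_forward(A, parameters[f"W{l}"], parameters[f"b{l}"], "relu")
        caches.append(cache)
    AL, cache = linear_activation_forward(A, parameters[f"W{L}"], parameters[f"b{L}"], "sigmoid")
    caches.append(cache)
    return AL, caches

# Toy network (illustrative only): 5 inputs, hidden layers of 4 and 3 units, 1 output.
rng = np.random.RandomState(0)
layer_dims = [5, 4, 3, 1]
parameters = {}
for l in range(1, len(layer_dims)):
    parameters[f"W{l}"] = rng.randn(layer_dims[l], layer_dims[l - 1]) * 0.01
    parameters[f"b{l}"] = np.zeros((layer_dims[l], 1))
X = rng.randn(5, 4)
AL, caches = L_model_forward(X, parameters)
print(AL.shape, len(caches))
```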

5 - Cost function

Expected output:

| Variable | Value |
|---|---|
| cost | 0.41493159961539694 |
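The page does not show the cost formula itself; a common choice for a sigmoid output, and the one assumed in this sketch, is the cross-entropy cost $J = -\frac{1}{m}\sum_{i=1}^{m}\left(y^{(i)}\log a^{[L](i)} + (1-y^{(i)})\log(1-a^{[L](i)})\right)$. The function name `compute_cost` matches the traceback quoted in the comments further down, but the body and the inputs below are illustrative assumptions.

```python
import numpy as np

def compute_cost(AL, Y):
    """Cross-entropy cost, averaged over the m examples (columns)."""
    m = Y.shape[1]
    cost = -np.sum(Y * np.log(AL) + (1 - Y) * np.log(1 - AL)) / m
    return float(np.squeeze(cost))  # always return a plain scalar

# Illustrative inputs chosen for this example (three examples, all labelled 1).
Y = np.array([[1, 1, 1]])
AL = np.array([[0.8, 0.9, 0.4]])
cost = compute_cost(AL, Y)
print(cost)
```

With these inputs the formula gives $-\frac{1}{3}\ln(0.8 \cdot 0.9 \cdot 0.4) \approx 0.4149$, which agrees with the expected cost listed in this section.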

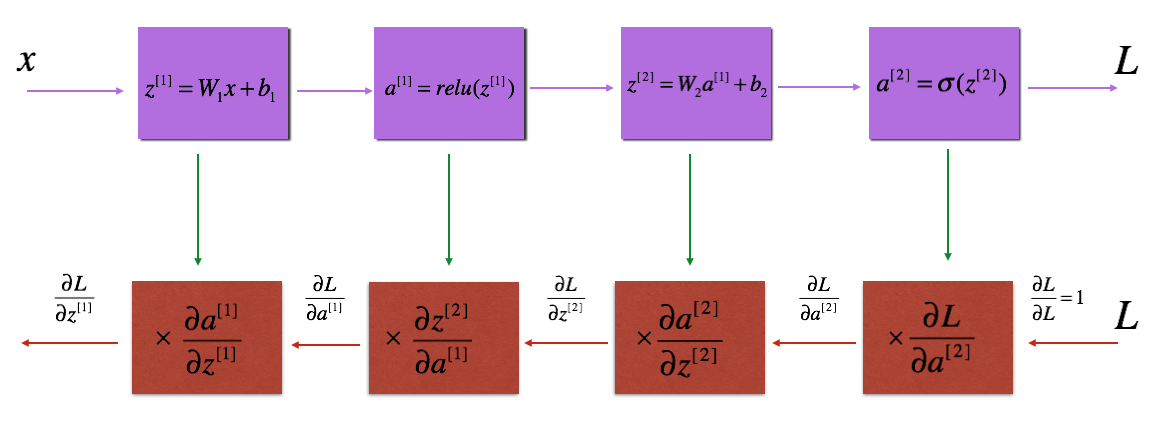

6 - Backward propagation module

- LINEAR backward

- LINEAR -> ACTIVATION backward where ACTIVATION computes the derivative of either the ReLU or sigmoid activation

- [LINEAR -> RELU] × (L-1) -> LINEAR -> SIGMOID backward (whole model)

6.1 - Linear backward
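For a layer with $Z^{[l]} = W^{[l]} A^{[l-1]} + b^{[l]}$, the standard gradients are $dW^{[l]} = \frac{1}{m} dZ^{[l]} A^{[l-1]T}$, $db^{[l]} = \frac{1}{m}\sum_i dZ^{[l](i)}$ and $dA^{[l-1]} = W^{[l]T} dZ^{[l]}$. The sketch below implements these under the assumption that the forward pass cached `(A_prev, W, b)`; the function name `linear_backward` and the toy values are illustrative.

```python
import numpy as np

def linear_backward(dZ, cache):
    """Backward pass for the LINEAR part of one layer."""
    A_prev, W, b = cache
    m = A_prev.shape[1]                          # number of examples
    dW = (dZ @ A_prev.T) / m
    db = np.sum(dZ, axis=1, keepdims=True) / m   # keepdims keeps shape (n_l, 1)
    dA_prev = W.T @ dZ
    assert dW.shape == W.shape and db.shape == b.shape
    return dA_prev, dW, db

# Toy values (illustrative only): 2 units in the previous layer, 1 unit here, 2 examples.
A_prev = np.array([[1.0, 0.0], [0.0, 1.0]])
W = np.array([[3.0, 4.0]])
b = np.array([[0.0]])
dZ = np.array([[1.0, 2.0]])
dA_prev, dW, db = linear_backward(dZ, (A_prev, W, b))
print(dW, db, dA_prev, sep="\n")
```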

6.2 - Linear-Activation backward

- sigmoid_backward : Implements the backward propagation for SIGMOID unit. You can call it as follows:

- relu_backward : Implements the backward propagation for RELU unit. You can call it as follows:
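As a rough illustration of how these pieces fit together, the sketch below defines stand-in versions of `relu_backward` and `sigmoid_backward` and combines them with the linear backward step. The signatures `(dA, activation_cache)`, the cache layout, and the function name `linear_activation_backward` are assumptions for this example; the helpers shipped with the notebook may differ.

```python
import numpy as np

def relu_backward(dA, activation_cache):
    """Stand-in: the gradient passes through only where Z > 0."""
    Z = activation_cache
    return dA * (Z > 0)

def sigmoid_backward(dA, activation_cache):
    """Stand-in: dZ = dA * s * (1 - s), where s = sigmoid(Z)."""
    Z = activation_cache
    s = 1.0 / (1.0 + np.exp(-Z))
    return dA * s * (1 - s)

def linear_backward(dZ, linear_cache):
    A_prev, W, b = linear_cache
    m = A_prev.shape[1]
    return W.T @ dZ, (dZ @ A_prev.T) / m, np.sum(dZ, axis=1, keepdims=True) / m

def linear_activation_backward(dA, cache, activation):
    """Backward pass for one LINEAR -> ACTIVATION layer."""
    linear_cache, activation_cache = cache
    if activation == "relu":
        dZ = relu_backward(dA, activation_cache)
    elif activation == "sigmoid":
        dZ = sigmoid_backward(dA, activation_cache)
    else:
        raise ValueError("activation must be 'sigmoid' or 'relu'")
    return linear_backward(dZ, linear_cache)

# Toy cache (illustrative only): 1 unit, 2 examples, Z = W·A_prev + b = [[-1, 2]].
A_prev = np.array([[-1.0, 2.0]])
W = np.array([[1.0]])
b = np.array([[0.0]])
Z = W @ A_prev + b
dA = np.array([[5.0, 5.0]])
dA_prev, dW, db = linear_activation_backward(dA, ((A_prev, W, b), Z), "relu")
print(dA_prev, dW, db)
```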

6.3 - L-Model Backward

| Variable | Expected value |
|---|---|
| dW1 | [[ 0.41010002 0.07807203 0.13798444 0.10502167] [ 0. 0. 0. 0. ] [ 0.05283652 0.01005865 0.01777766 0.0135308 ]] |
| db1 | [[-0.22007063] [ 0. ] [-0.02835349]] |
| dA1 | [[ 0.12913162 -0.44014127] [-0.14175655 0.48317296] [ 0.01663708 -0.05670698]] |
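A compact sketch of the whole backward pass follows. It assumes a cross-entropy cost, so the gradient at the output is $dA^{[L]} = -\left(\frac{Y}{A^{[L]}} - \frac{1-Y}{1-A^{[L]}}\right)$, and it assumes each cache has the layout `((A_prev, W, b), Z)`. The helper names `forward` and `backward`, the gradient key names, and the toy network are hypothetical, so the numbers it prints are unrelated to the expected values above.

```python
import numpy as np

def forward(A_prev, W, b, activation):
    """Hypothetical compact LINEAR -> ACTIVATION forward step."""
    Z = W @ A_prev + b
    A = np.maximum(0, Z) if activation == "relu" else 1.0 / (1.0 + np.exp(-Z))
    return A, ((A_prev, W, b), Z)

def backward(dA, cache, activation):
    """Hypothetical compact LINEAR -> ACTIVATION backward step."""
    (A_prev, W, b), Z = cache
    if activation == "relu":
        dZ = dA * (Z > 0)
    else:
        s = 1.0 / (1.0 + np.exp(-Z))
        dZ = dA * s * (1 - s)
    m = A_prev.shape[1]
    return W.T @ dZ, (dZ @ A_prev.T) / m, np.sum(dZ, axis=1, keepdims=True) / m

def L_model_forward(X, parameters):
    caches, A, L = [], X, len(parameters) // 2
    for l in range(1, L):
        A, cache = forward(A, parameters[f"W{l}"], parameters[f"b{l}"], "relu")
        caches.append(cache)
    AL, cache = forward(A, parameters[f"W{L}"], parameters[f"b{L}"], "sigmoid")
    caches.append(cache)
    return AL, caches

def L_model_backward(AL, Y, caches):
    """Walk the caches from layer L down to layer 1, collecting dW and db."""
    grads = {}
    L = len(caches)
    Y = Y.reshape(AL.shape)
    dA = -(np.divide(Y, AL) - np.divide(1 - Y, 1 - AL))  # gradient of cross-entropy
    dA, grads[f"dW{L}"], grads[f"db{L}"] = backward(dA, caches[L - 1], "sigmoid")
    for l in range(L - 1, 0, -1):
        dA, grads[f"dW{l}"], grads[f"db{l}"] = backward(dA, caches[l - 1], "relu")
    return grads

# Toy two-layer network (illustrative only): 4 inputs, 3 hidden units, 1 output, 2 examples.
rng = np.random.RandomState(1)
parameters = {"W1": rng.randn(3, 4), "b1": np.zeros((3, 1)),
              "W2": rng.randn(1, 3), "b2": np.zeros((1, 1))}
X = rng.randn(4, 2)
Y = np.array([[1, 0]])
AL, caches = L_model_forward(X, parameters)
grads = L_model_backward(AL, Y, caches)
print({name: value.shape for name, value in grads.items()})
```

A useful sanity check for this setup: with a sigmoid output and cross-entropy cost, the output-layer dZ simplifies to AL - Y, so db for the last layer should equal the mean of AL - Y.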

6.4 - Update Parameters
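A gradient descent update moves each parameter against its gradient: $W^{[l]} = W^{[l]} - \alpha\, dW^{[l]}$ and $b^{[l]} = b^{[l]} - \alpha\, db^{[l]}$, where $\alpha$ is the learning rate. The sketch below assumes the dictionary key names `W1`, `b1`, `dW1`, `db1`, ... and returns a new dictionary instead of modifying the input; both choices are assumptions made for this example.

```python
import numpy as np

def update_parameters(parameters, grads, learning_rate):
    """Apply one gradient descent step to every W and b."""
    L = len(parameters) // 2      # number of layers
    updated = dict(parameters)    # shallow copy so the input dict is left untouched
    for l in range(1, L + 1):
        updated[f"W{l}"] = parameters[f"W{l}"] - learning_rate * grads[f"dW{l}"]
        updated[f"b{l}"] = parameters[f"b{l}"] - learning_rate * grads[f"db{l}"]
    return updated

# Toy values (illustrative only).
parameters = {"W1": np.array([[1.0, 2.0]]), "b1": np.array([[0.5]])}
grads = {"dW1": np.array([[10.0, 20.0]]), "db1": np.array([[1.0]])}
new_parameters = update_parameters(parameters, grads, learning_rate=0.1)
print(new_parameters)
```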

7 - Conclusion

- A two-layer neural network

- An L-layer neural network

Hi bro, I was working on the week 4 assignment and I am getting an assertion error in the compute_cost function. Please help me with this. The same function is working for the L-layer model.

AssertionError Traceback (most recent call last)
in ()
----> 1 parameters = two_layer_model(train_x, train_y, layers_dims = (n_x, n_h, n_y), num_iterations = 2500, print_cost= True)
in two_layer_model(X, Y, layers_dims, learning_rate, num_iterations, print_cost)
46 # Compute cost
47 ### START CODE HERE ### (≈ 1 line of code)
---> 48 cost = compute_cost(A2, Y)
49 ### END CODE HERE ###
50
/home/jovyan/work/Week 4/Deep Neural Network Application: Image Classification/dnn_app_utils_v3.py in compute_cost(AL, Y)
265
266 cost = np.squeeze(cost) # To make sure your cost's shape is what we expect (e.g. this turns [[17]] into 17).
--> 267 assert(cost.shape == ())
268
269 return cost
AssertionError:

Hey, I am facing a problem in the linear activation forward function of the week 4 assignment, Building your Deep Neural Network. I think I have implemented it correctly and the output matches the expected one. I also cross-checked it with your solution and both were the same. But the grader marks it, and all the functions in which this function is called, as incorrect. I am unable to find any error in the code, as it was straightforward: I used the built-in SIGMOID and RELU functions. Please guide.

Hi bro, I am always getting the grading error although I am getting the correct output for all.
