A Review of Machine Learning Algorithms for Cloud Computing Security

1. Introduction

2. Related Work

3. Background Study

3.1.1. Cloud Service Models

- IaaS has many benefits but also some issues. IaaS provides infrastructure through virtual machines (VMs), but VMs are gradually becoming obsolete because of a mismatch between the security the cloud provides and the security of the VMs themselves. Data deletion issues can be solved when the client and the cloud provider agree on a time frame for data deletion. Compatibility issues occur in IaaS when clients run only legacy software, which may increase cost [ 10 ]. The security of the hypervisor is important because it splits physical resources between the VMs.

- PaaS is a web-based software creation and delivery platform offered as a service to programmers, enabling applications to be developed and deployed [ 10 ]. The security issues of PaaS are interoperability, host vulnerability, privacy-aware authentication, continuity of service, and fault tolerance.

- SaaS has no practical need for indirect deployment because it is not geographically dispersed and is delivered nearly immediately. Security issues in SaaS are authentication, authorization, data privacy, availability, and network security [ 28 ].

3.1.2. Design of the Cloud

- Cloud Consumer: An individual or organization that maintains a business relationship with, and uses services from, cloud providers [ 29 ].

- Cloud Provider: An individual or organization responsible for making a service available to interested parties.

- Cloud Auditor: A party that can conduct independent assessments of cloud services, information system operations, performance, and the security of the cloud implementation.

- Cloud Broker: An entity that manages the use, performance, and delivery of cloud services and negotiates relationships between cloud consumers and cloud providers [ 29 ].

- Cloud Carrier: An intermediary that provides connectivity and transport of cloud services from cloud providers to cloud consumers.

3.1.3. Cloud Deployment Models

3.2. Cloud Threats

3.2.1. Cloud Security Threats

- Confidentiality threats involve insider threats to client data, the risk of external attack, and data leakage [ 39 ]. First, the insider threat is unauthorized or illegal access to customer data by an insider at the cloud service provider, which is a significant security challenge [ 31 ]. Second, the risk of external attack is increasingly relevant for cloud applications in unsecured areas. This risk includes remote software or hardware attacks on cloud clients and applications [ 40 ]. Third, data leakage is an open-ended risk that can compromise cloud data; it arises from human error, a lack of suitable tools, or failures of secure access.

- Integrity threats involve the threats of data segregation, poor client access control, and risks to data quality. First is the risk of data segregation, which arises from incorrectly defined security perimeters, improper configuration of VMs, and incorrectly configured client-side hypervisors. This is a complicated issue inside the cloud, which shares resources among clients; if those resources change, data integrity could be affected [ 41 , 42 ]. Next is poor client access control: inefficient access and identity control creates various issues and threats that enable attackers to harm data resources [ 43 , 44 ].

- Availability threats include the impact of change management, service non-availability, physical disruption of resources, and inefficient recovery procedures. First is the impact of change management, which includes the effect of one client's penetration testing on other clients and the effect of infrastructure changes [ 31 ]. Both hardware and application changes inside the cloud environment negatively affect the availability of cloud services [ 45 ]. Next is the non-availability of services, which includes the non-availability of network bandwidth, domain name system (DNS) services, and computing software and resources. It is an external risk that affects all cloud models [ 46 ]. Third is physical disruption of the IT services of the service provider, the cloud customers, and the wide area network (WAN) service provider. Fourth are weak recovery procedures, such as inadequate disaster recovery, which affect recovery time and effectiveness in the event of an incident.

3.2.2. Attacks on the Cloud

- Network-based attacks: The three types of network attacks discussed here are port scanning, botnets, and spoofing attacks. A port scan is of considerable interest to attackers because it lets them collect the information needed to launch a successful attack [ 46 ]. Defenders who routinely scan the ports of their own network usually do not hide their identity, whereas attackers do so during port scanning [ 47 ]. A botnet is a collection of malware-infected, internet-connected devices that hackers can control [ 48 , 49 ]. A spoofing attack occurs when a hacker or malicious software successfully acts on behalf of another user (or system) by falsifying data [ 46 ]. The intruder pretends to be someone else (or another machine, such as a phone) on a network in order to manipulate other machines, devices, or people into performing actions or giving up sensitive data.

- VM-based attacks: Multiple VMs hosted on the same system cause several security issues. A side-channel attack is any intrusion based on information gained from the implementation of a computer process rather than on flaws in the code itself [ 25 ]. Malicious code placed inside a VM image will be replicated whenever a VM is created from it [ 46 ]. A VM image management system offers filtering and scanning for detecting and recovering from such security threats.

- Storage-based attacks: If a strict monitoring mechanism is not in place, attackers can steal important data stored on storage devices. Data scavenging exploits the inability to completely remove data from storage devices, which allows an attacker to access or recover that data. Data de-duplication is a technique for eliminating duplicate copies of repeating data [ 50 ]; attacks that exploit it are mitigated by ensuring that de-duplication occurs only when the precise number of file copies is specified.

- Application-based attacks: An application running on the cloud may face many attacks that affect its performance and cause information leakage for malicious purposes. The three primary application-based attacks are malware injection and steganography attacks, shared architectures, and web services and protocol-based attacks [ 46 ].
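As an illustration of the defender's side of port scanning described above, the following is a minimal sketch of a detection heuristic, not a method taken from the reviewed works. It assumes connection records are available as hypothetical `(source, destination, port)` tuples and flags any source that touches many distinct ports on one host; the function name, the record layout, and the threshold of 10 are all assumptions chosen for the example.

```python
from collections import defaultdict


def find_port_scanners(connections, port_threshold=10):
    """Return sources that contacted at least `port_threshold` distinct
    ports on any single destination host (a simple scan heuristic)."""
    ports_seen = defaultdict(set)  # (source, destination) -> ports contacted
    for source, destination, port in connections:
        ports_seen[(source, destination)].add(port)
    suspects = {
        source
        for (source, _destination), ports in ports_seen.items()
        if len(ports) >= port_threshold
    }
    return sorted(suspects)


# Hypothetical log: one host sweeps ports 20-39, another makes two web requests.
log = [("10.0.0.5", "10.0.0.9", port) for port in range(20, 40)]
log += [("10.0.0.7", "10.0.0.9", 443), ("10.0.0.7", "10.0.0.9", 80)]

print(find_port_scanners(log))  # ['10.0.0.5']
```

A real detector would also consider time windows and slow scans; the fixed threshold here only keeps the idea visible.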

3.3. ML and Cloud Security

Types of ML Algorithms

- Supervised learning is the ML task of learning a function that maps an input to an output based on example input-output pairs. It infers a function from labeled training data consisting of a set of training examples. Supervised learning is a significant part of data science [ 56 ].

  - (a) Supervised Neural Network: In a supervised neural network, the desired output for each input is known. The predicted output of the network is compared with the actual output; based on the error, the parameters are adjusted and the input is fed into the network again. Supervised learning is used with feed-forward neural networks [ 57 ].

  - (b) K-Nearest Neighbor (K-NN): A simple, easy-to-implement supervised ML algorithm that can be used to solve both classification and regression problems. A regression problem has a real number (a number with a decimal point) as its output; for instance, estimating someone's weight given their height.

  - (c) Support Vector Machine (SVM): A supervised ML algorithm that can be used for both classification and regression problems, although it is mostly used for classification. The SVM classifier is a frontier (hyperplane) that separates the two classes.

  - (d) Naïve Bayes: A supervised ML algorithm based on Bayes' theorem that assumes the features are statistically independent. Despite this assumption, it has proven to be an effective classifier.

- Unsupervised learning is a type of ML used to draw inferences from datasets consisting of input data without labeled responses. The most common unsupervised learning method is cluster analysis, which is used for exploratory data analysis to find hidden patterns or groupings in the data [ 58 ].

  - (a) Unsupervised Neural Network: The neural network has no prior knowledge of the output for a given input. Its main job is to group the data according to similarities; it checks the correlation between diverse inputs and groups them accordingly.

  - (b) K-Means: One of the simplest and best-known unsupervised ML algorithms. K-means identifies k centroids and then assigns each data point to the nearest cluster, while keeping the clusters as small as possible.

  - (c) Singular Value Decomposition (SVD): One of the most widely used unsupervised learning algorithms, at the center of many recommendation and dimensionality reduction systems that are essential to global companies such as Google, Netflix, and others.

- Semi-Supervised Learning is an ML method that combines a small quantity of labeled data with abundant unlabeled data during training. Semi-supervised learning falls between unsupervised and supervised learning. Its objective is to understand how combining labeled and unlabeled data may change the learning behavior and to design algorithms that exploit such a combination.

- Reinforcement Learning (RL) is an area of ML concerned with how software agents should take actions in an environment to maximize some notion of cumulative reward. RL is one of the three basic ML paradigms, alongside supervised learning and unsupervised learning. One of the challenges that arises in RL, and not in other types of learning, is the trade-off between exploration and exploitation. Of all the approaches to ML, RL is the closest to how humans and animals learn.
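The K-NN regression and K-means descriptions above can be made concrete with a short plain-Python sketch. It is illustrative only: the height and weight pairs, the 2-D points, the starting centroids, and the function names `knn_regress` and `kmeans` are assumptions invented for this example, and the K-means routine is the standard Lloyd iteration rather than any specific implementation from the reviewed works.

```python
import math


def knn_regress(train, query, k=3):
    """K-NN regression: average the targets of the k training points
    whose (scalar) feature is closest to the query."""
    nearest = sorted(train, key=lambda pair: abs(pair[0] - query))[:k]
    return sum(target for _, target in nearest) / len(nearest)


def kmeans(points, centroids, iterations=10):
    """K-means (Lloyd's algorithm): assign each point to its nearest
    centroid, then move each centroid to the mean of its cluster."""
    clusters = [[] for _ in centroids]
    for _ in range(iterations):
        clusters = [[] for _ in centroids]
        for point in points:
            nearest_index = min(
                range(len(centroids)),
                key=lambda i: math.dist(point, centroids[i]),
            )
            clusters[nearest_index].append(point)
        # An empty cluster keeps its previous centroid.
        centroids = [
            tuple(sum(axis) / len(members) for axis in zip(*members))
            if members else centroids[i]
            for i, members in enumerate(clusters)
        ]
    return centroids, clusters


# Made-up (height in cm, weight in kg) pairs for the regression example.
heights_weights = [(150, 50), (160, 58), (170, 66), (180, 75), (190, 85)]
print(round(knn_regress(heights_weights, 172), 1))  # 66.3

# Two visually separate groups of made-up 2-D points.
points = [(1, 1), (1, 2), (2, 1), (8, 8), (9, 8), (8, 9)]
final_centroids, final_clusters = kmeans(points, [(0, 0), (10, 10)])
print(final_clusters)
```

In the regression call, the three nearest heights to 172 are 170, 180, and 160, so the prediction is the mean of 66, 75, and 58. K-means needs no labels at all, which is the practical difference between the supervised and unsupervised settings described above.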

4. ML Algorithms for Cloud Security

4.1. Supervised Learning

4.1.1. Supervised ANNs

4.1.2. K-NN

4.1.3. Naive Bayes

4.1.5. Discussion and Lessons Learned

4.2. Unsupervised Learning

4.2.1. Unsupervised ANNs

4.2.2. K-Means

4.2.3. Singular Value Decomposition (SVD)

4.2.4. Discussion and Lessons Learned

5. Future Research Directions

- An appropriate analysis of overhead should be performed before introducing new technologies; for example, virtualization could be used to gain an advantage with respect to essential capabilities.

- ML datasets: a collection of ML datasets across numerous fields, including security-relevant datasets on topics such as spam and phishing [ 91 ].

- HTTP dataset CSIC: The HTTP dataset CSIC contains a substantial number of automatically generated web requests and could be used to test web attack protection systems.

- eXpose deep neural network: This is an open-source deep neural network project that attempts to detect malicious URLs, file paths, and registry keys with proper training. Datasets can be found in the data or models directory in the sample scores.json files.

- Although research on ML with crowdsourcing has advanced significantly in recent years, some basic issues remain to be studied [ 92 ].

- Potential directions exist for positioning technology, such as integrating heterogeneous location-based service (LBS) systems and providing seamless positioning across indoor and outdoor environments [ 93 ]. Numerous challenges remain that can be explored in the future.

6. Conclusions

Author Contributions

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
| --- | --- |
| ANN | Artificial Neural Network |
| CaSF | Cloud-Assisted Smart Factory |
| CC | Cloud Computing |
| CCE | Contact Center Enterprise |
| CDN | Content Delivery Network |
| CIA | Confidentiality, Integrity, Availability |
| CNN | Convolutional Neural Network |
| DDoS | Distributed Denial of Service |
| DeepRM | Deep Reinforcement Learning |
| DRLCS | Deep Reinforcement Learning for Cloud Scheduling |
| ECS | Elastic Compute Service |
| GA | Genetic Algorithm |
| GAN | Generative Adversarial Network |
| IaaS | Infrastructure as a Service |
| IDPS | Intrusion Detection and Prevention Service |
| IDS | Intrusion Detection System |
| IoT | Internet of Things |
| K-NN | K-Nearest Neighbors |
| LAMB | Levenberg-Marquardt Back Propagation |
| MCC | Mobile Cloud Computing |
| MEC | Mobile Edge Computing |
| ML | Machine Learning |
| PaaS | Platform as a Service |
| PART | Partial Tree |
| RBF | Radial Basis Function |
| RL | Reinforcement Learning |
| RNN | Recurrent Neural Network |
| SaaS | Software as a Service |
| SMOTE | Synthetic Minority Oversampling Technique |
| SMP | Secure Multi-party Computation |
| SVD | Singular Value Decomposition |
| SVM | Support Vector Machine |
| UNSW | University of New South Wales |
| VM | Virtual Machine |

- Lim, S.Y.; Kiah, M.M.; Ang, T.F. Security Issues and Future Challenges of Cloud Service Authentication. Polytech. Hung. 2017 , 14 , 69–89. [ Google Scholar ]

- Borylo, P.; Tornatore, M.; Jaglarz, P.; Shahriar, N.; Cholda, P.; Boutaba, R. Latency and energy-aware provisioning of network slices in cloud networks. Comput. Commun. 2020 , 157 , 1–19. [ Google Scholar ] [ CrossRef ]

- Carmo, M.; Dantas Silva, F.S.; Neto, A.V.; Corujo, D.; Aguiar, R. Network-Cloud Slicing Definitions for Wi-Fi Sharing Systems to Enhance 5G Ultra-Dense Network Capabilities. Wirel. Commun. Mob. Comput. 2019 , 2019 , 8015274. [ Google Scholar ] [ CrossRef ]

- Dang, L.M.; Piran, M.; Han, D.; Min, K.; Moon, H. A Survey on Internet of Things and Cloud Computing for healthcare. Electronics 2019 , 8 , 768. [ Google Scholar ] [ CrossRef ] [ Green Version ]

- Srinivasamurthy, S.; Liu, D. Survey on Cloud Computing Security. 2020. Available online: https://www.semanticscholar.org/ (accessed on 19 July 2020).

- Mathkunti, N. Cloud Computing: Security Issues. Int. J. Comput. Commun. Eng. 2014 , 3 , 259–263. [ Google Scholar ] [ CrossRef ] [ Green Version ]

- Stefan, H.; Liakat, M. Cloud Computing Security Threats And Solutions. J. Cloud Comput. 2015 , 4 , 1. [ Google Scholar ] [ CrossRef ]

- Fauzi, C.; Azila, A.; Noraziah, A.; Tutut, H.; Noriyani, Z. On Cloud Computing Security Issues. Intell. Inf. Database Syst. Lect. Notes Comput. Sci. 2012 , 7197 , 560–569. [ Google Scholar ]

- Palumbo, F.; Aceto, G.; Botta, A.; Ciuonzo, D.; Persico, V.; Pescapé, A. Characterizing Cloud-to-user Latency as perceived by AWS and Azure Users spread over the Globe. In Proceedings of the 2019 IEEE Global Communications Conference (GLOBECOM), Taipei, Taiwan, 7–11 December 2019; pp. 1–6. [ Google Scholar ]

- Hussein, N.H.; Khalid, A. A survey of Cloud Computing Security challenges and solutions. Int. J. Comput. Sci. Inf. Secur. 2017 , 1 , 52–56. [ Google Scholar ]

- Le Duc, T.; Leiva, R.G.; Casari, P.; Östberg, P.O. Machine Learning Methods for Reliable Resource Provisioning in Edge-Cloud Computing: A Survey. ACM Comput. Surv. 2019 , 52 , 1–39. [ Google Scholar ] [ CrossRef ] [ Green Version ]

- Li, K.; Gibson, C.; Ho, D.; Zhou, Q.; Kim, J.; Buhisi, O.; Gerber, M. Assessment of machine learning algorithms in cloud computing frameworks. In Proceedings of the IEEE Systems and Information Engineering Design Symposium, Charlottesville, VA, USA, 26 April 2013; pp. 98–103. [ Google Scholar ]

- Callara, M.; Wira, P. User Behavior Analysis with Machine Learning Techniques in Cloud Computing Architectures. In Proceedings of the 2018 International Conference on Applied Smart Systems, Médéa, Algeria, 24–25 November 2018; pp. 1–6. [ Google Scholar ]

- Singh, S.; Jeong, Y.-S.; Park, J. A Survey on Cloud Computing Security: Issues, Threats, and Solutions. J. Netw. Comput. Appl. 2016 , 75 , 200–222. [ Google Scholar ] [ CrossRef ]

- Khan, A.N.; Fan, M.Y.; Malik, A.; Memon, R.A. Learning from Privacy Preserved Encrypted Data on Cloud Through Supervised and Unsupervised Machine Learning. In Proceedings of the International Conference on Computing, Mathematics and Engineering Technologies, Sindh, Pakistan, 29–30 January 2019; pp. 1–5. [ Google Scholar ]

- Khilar, P.; Vijay, C.; Rakesh, S. Trust-Based Access Control in Cloud Computing Using Machine Learning. In Cloud Computing for Geospatial Big Data Analytics ; Das, H., Barik, R., Dubey, H., Roy, D., Eds.; Springer: Cham, Switzerland, 2019; Volume 49, pp. 55–79. [ Google Scholar ]

- Subashini, S.; Kavitha, V. A Survey on Security Issues in Service Delivery Models of Cloud Computing. J. Netw. Comput. Appl. 2011 , 35 , 1–11. [ Google Scholar ] [ CrossRef ]

- Bhamare, D.; Salman, T.; Samaka, M.; Erbad, A.; Jain, R. Feasibility of Supervised Machine Learning for Cloud Security. In Proceedings of the International Conference on Information Science and Security, Jaipur, India, 16–20 December 2016; pp. 1–5. [ Google Scholar ]

- Li, C.; Song, M.; Zhang, M.; Luo, Y. Effective replica management for improving reliability and availability in edge-cloud computing environment. J. Parallel Distrib. Comput. 2020 , 143 , 107–128. [ Google Scholar ] [ CrossRef ]

- Purniemaa, P.; Kannan, R.; Jaisankar, N. Security Threat and Attack in Cloud Infrastructure: A Survey. Int. J. Comput. Sci. Appl. 2013 , 2 , 1–12. [ Google Scholar ]

- Yuhong, L.; Yan, S.; Jungwoo, R.; Syed, R.; Athanasios, V. A Survey of Security and Privacy Challenges in Cloud Computing: Solutions and Future Directions. J. Comput. Sci. Eng. 2015 , 9 , 119–133. [ Google Scholar ]

- Chirag, M.; Dhiren, P.; Bhavesh, B.; Avi, P.; Muttukrishnan, R. A survey on security issues and solutions at different layers of Cloud computing. J. Supercomput. 2013 , 63 , 561–592. [ Google Scholar ]

- Behl, A.; Behl, K. An analysis of cloud computing security issues. In Proceedings of the World Congress on Information and Communication Technologies, Trivandrum, India, 30 October–2 November 2012; pp. 109–114. [ Google Scholar ]

- Selamat, N.; Ali, F. Comparison of malware detection techniques using machine learning algorithm. Indones. J. Electr. Eng. Comput. Sci. 2019 , 16 , 435. [ Google Scholar ] [ CrossRef ] [ Green Version ]

- Shamshirband, S.; Fathi, M.; Chronopoulos, A.T.; Montieri, A.; Palumbo, F.; Pescapè, A. Computational Intelligence Intrusion Detection Techniques in Mobile Cloud Computing Environments: Review, Taxonomy, and Open Research Issues. J. Inf. Secur. Appl. 2019 , 1–52. [ Google Scholar ]

- Farhan, S.; Haider, S. Security threats in cloud computing. In Proceedings of the Internet Technology and Secured Transactions (ICITST), Abu Dhabi, UAE, 11–14 December 2011; pp. 214–219. [ Google Scholar ]

- Shaikh, F.B.; Haider, S. Security issues in cloud computing. In Proceedings of the International Conference on Computing, Communication and Automation (ICCCA), Greater Noida, India, 15–16 May 2015; pp. 691–694. [ Google Scholar ]

- Hourani, H.; Abdallah, M. Cloud Computing: Legal and Security Issues. In Proceedings of the International Conference on Computer Science and Information Technology (CSIT), Helsinki, Finland, 13–14 June 2018; pp. 13–16. [ Google Scholar ]

- Alam, M.S.B. Cloud Computing-Architecture, Platform and Security Issues: A Survey. World Sci. News 2017 , 86 , 253–264. [ Google Scholar ]

- Shukla, S.; Maheshwari, H. Discerning the Threats in Cloud Computing Security. J. Comput. Theor. Nanosci. 2019 , 16 , 4255–4261. [ Google Scholar ] [ CrossRef ]

- Alsolami, E. Security threats and legal issues related to Cloud based solutions. Int. J. Comput. Sci. Netw. Secur. 2018 , 18 , 156–163. [ Google Scholar ]

- Badshah, A.; Ghani, A.; Shamshirband, S.; Aceto, G.; Pescapè, A. Performance-based service-level agreement in cloud computing to optimise penalties and revenue. IET Commun. 2020 , 14 , 1102–1112. [ Google Scholar ] [ CrossRef ]

- Tsuruoka, Y. Cloud Computing—Current Status and Future Directions. J. Inf. Process. 2016 , 24 , 183–194. [ Google Scholar ] [ CrossRef ] [ Green Version ]

- Nagaraju, K.; Sridaran, R. A Survey on Security Threats for Cloud Computing. Int. J. Eng. Res. Technol. 2012 , 1 , 1–10. [ Google Scholar ]

- Mozumder, D.P.; Mahi, J.N.; Whaiduzzaman, M.; Mahi, M.J.N. Cloud Computing Security Breaches and Threats Analysis. Int. J. Sci. Eng. Res. 2017 , 8 , 1287–1297. [ Google Scholar ]

- Gessert, F.; Wingerath, W.; Ritter, N. Latency in Cloud-Based Applications. In Fast and Scalable Cloud Data Management ; Springer: Cham, Switzerland, 2020. [ Google Scholar ]

- De Donno, M.; Giaretta, A.; Dragoni, N.; Bucchiarone, A.; Mazzara, M. Cyber-Storms Come from Clouds: Security of Cloud Computing in the IoT Era. Future Internet 2019 , 11 , 127. [ Google Scholar ] [ CrossRef ] [ Green Version ]

- Xue, M.; Yuan, C.; Wu, H.; Zhang, Y.; Liu, W. Machine Learning Security: Threats, Countermeasures, and Evaluations. IEEE Access 2020 , 8 , 74720–74742. [ Google Scholar ] [ CrossRef ]

- Deshpande, P.; Sharma, S.C.; Peddoju, S.K. Security threats in cloud computing. In Proceedings of the International Conference on Computing, Communication and Automation, Greater Noida, India, 11–14 December 2011; pp. 632–636. [ Google Scholar ]

- Varun, K.A.; Rajkumar, N.; Kumar, N.K. Survey on security threats in cloud computing. Int. J. Appl. Eng. Res. 2014 , 9 , 10495–10500. [ Google Scholar ]

- Kazim, M.; Zhu, S.Y. A survey on top security threats in cloud computing. Int. J. Adv. Comput. Sci. Appl. 2015 , 6 . [ Google Scholar ] [ CrossRef ] [ Green Version ]

- Barona, R.; Anita, M. A survey on data breach challenges in cloud computing security: Issues and threats. In Proceedings of the International Conference on Circuit, Power and Computing Technologies (ICCPCT), Paris, France, 17–18 September 2017; pp. 1–8. [ Google Scholar ]

- Aawadallah, N. Security Threats of Cloud Computing. Int. J. Recent Innov. Trends Comput. Commun. 2015 , 3 , 2393–2397. [ Google Scholar ] [ CrossRef ]

- Nadeem, M. Cloud Computing: Security Issues and Challenges. J. Wirel. Commun. 2016 , 1 , 10–15. [ Google Scholar ] [ CrossRef ]

- Nicho, M.; Hendy, M. Dimensions Of Security Threats in Cloud Computing: A Case Study. Rev. Bus. Inf. Syst. 2013 , 17 , 159. [ Google Scholar ] [ CrossRef ]

- Khan, M. A survey of security issues for cloud computing. J. Netw. Comput. Appl. 2016 , 71 , 11–29. [ Google Scholar ] [ CrossRef ]

- Lin, C.; Lu, H. Response to Co-resident Threats in Cloud Computing Using Machine Learning. In Proceedings of the International Conference on Advanced Information Networking and Applications, Caserta, Italy, 15–17 April 2020; Volume 926, pp. 904–913. [ Google Scholar ]

- Venkatraman, S.; Mamoun, A. Use of data visualisation for zero-day malware detection. Secur. Commun. Netw. 2018 , 1–13. [ Google Scholar ] [ CrossRef ]

- Venkatraman, S.; Mamoun, A.; Vinayakumar, R. A hybrid deep learning image-based analysis for effective malware detection. J. Inf. Secur. Appl. 2019 , 47 , 377–389. [ Google Scholar ] [ CrossRef ]

- Lee, K. Security threats in cloud computing environments. Int. J. Secur. Its Appl. 2012 , 6 , 25–32. [ Google Scholar ]

- Liu, Q.; Li, P.; Zhao, W.; Cai, W.; Yu, S.; Leung, V.C. A Survey on Security Threats and Defensive Techniques of Machine Learning: A Data Driven View. IEEE Access 2018 , 6 , 12103–12117. [ Google Scholar ] [ CrossRef ]

- Sarma, M.; Srinivas, Y.; Ramesh, N.; Abhiram, M. Improving the Performance of Secure Cloud Infrastructure with Machine Learning Techniques. In Proceedings of the International Conference on Cloud Computing in Emerging Markets (CCEM), Bangalore, India, 19–21 October 2016; pp. 78–83. [ Google Scholar ]

- Malomo, O.; Rawat, D.B.; Garuba, M. A Survey on Recent Advances in Cloud Computing Security. J. Next Gener. Inf. Technol. 2018 , 9 , 32–48. [ Google Scholar ]

- Hou, S.; Xin, H. Use of machine learning in detecting network security of edge computing system. In Proceedings of the 4th International Conference on Big Data Analytics (ICBDA), Suzhou, China, 13–15 March 2019; pp. 252–256. [ Google Scholar ]

- Zhao, Y.; Chen, J.; Wu, D.; Teng, J.; Yu, S. Multi-Task Network Anomaly Detection using Federated Learning. In Proceedings of the Tenth International Symposium on Information and Communication Technology, Jeju Island, Korea, 16–18 October 2019; pp. 273–279. [ Google Scholar ]

- Aceto, G.; Ciuonzo, D.; Montieri, A.; Persico, V.; Pescapé, A. Know your big data trade-offs when classifying encrypted mobile traffic with deep learning. In Proceedings of the Network Traffic Measurement and Analysis Conference (TMA), Paris, France, 19–21 June 2019; pp. 121–128. [ Google Scholar ]

- Shamshirband, S.; Rabczuk, T.; Chau, K.W. A survey of deep learning techniques: Application in wind and solar energy resources. IEEE Access 2019 , 7 , 164650–164666. [ Google Scholar ] [ CrossRef ]

- Usama, M.; Qadir, J.; Raza, A.; Arif, H.; Yau, K.L.A.; Elkhatib, Y.; Al-Fuqaha, A. Unsupervised Machine Learning for Networking: Techniques, Applications and Research Challenges. IEEE Access 2017 , 7 , 65579–65615. [ Google Scholar ] [ CrossRef ]

- Elzamly, A.; Hussin, B.; Basari, A.S. Classification of Critical Cloud Computing Security Issues for Banking Organizations: A Cloud Delphi Study. Int. J. Grid Distrib. Comput. 2016 , 9 , 137–158. [ Google Scholar ] [ CrossRef ]

- Sayantan, G.; Stephen, Y.; Arun-Balaji, B. Attack Detection in Cloud Infrastructures Using Artificial Neural Network with Genetic Feature Selection. In Proceedings of the IEEE 14th International Conference on Dependable, Autonomic and Secure Computing, Athens, Greece, 12–15 August 2016; pp. 414–419. [ Google Scholar ]

- Lee, Y.; Yongjoon, P.; Kim, D. Security Threats Analysis and Considerations for Internet of Things. In Proceedings of the International Conference on Security Technology (SecTech), Jeju Island, Korea, 25–28 November 2015; pp. 28–30. [ Google Scholar ]

- Al-Janabi, S.; Shehab, A. Edge Computing: Review and Future Directions. REVISTA AUS J. 2019 , 26 , 368–380. [ Google Scholar ]

- Pham, Q.V.; Fang, F.; Ha, V.N.; Piran, M.J.; Le, M.; Le, L.B.; Hwang, W.J.; Ding, Z. A survey of multi-access edge computing in 5G and beyond: Fundamentals, technology integration, and state-of-the-art. IEEE Access 2020 , 8 , 116974–117017. [ Google Scholar ] [ CrossRef ]

- El-Boghdadi, H.; Rabie, A. Resource Scheduling for Offline Cloud Computing Using Deep Reinforcement Learning. Int. J. Comput. Sci. Netw. 2019 , 19 , 342–356. [ Google Scholar ]

- Nawrocki, P.; Śnieżyński, B.; Słojewski, H. Adaptable mobile cloud computing environment with code transfer based on machine learning. Pervasive Mob. Comput. 2019 , 57 , 49–63. [ Google Scholar ] [ CrossRef ]

- Nguyen, N.; Hoang, D.; Niyato, D.; Wang, P.; Nguyen, D.; Dutkiewicz, E. Cyberattack detection in mobile cloud computing: A deep learning approach. In Proceedings of the IEEE Wireless Communications and Networking Conference (WCNC), Barcelona, Spain, 15–18 April 2018; pp. 1–6. [ Google Scholar ]

- Saljoughi, A.; Mehrdad, M.; Hamid, M. Attacks and intrusion detection in cloud computing using neural networks and particle swarm optimization algorithms. Emerg. Sci. J. 2017 , 1 , 179–191. [ Google Scholar ] [ CrossRef ] [ Green Version ]

- Xiao, Y.; Jia, Y.; Liu, C.; Cheng, X.; Yu, J.; Lv, W. Edge Computing Security: State of the Art and Challenges. Proc. IEEE 2019 , 107 , 1608–1631. [ Google Scholar ] [ CrossRef ]

- Zamzam, M.; Tallal, E.; Mohamed, A. Resource Management using Machine Learning in Mobile Edge Computing: A Survey. In Proceedings of the Ninth International Conference on Intelligent Computing and Information Systems (ICICIS), Cairo, Egypt, 8–10 December 2019; pp. 112–117. [ Google Scholar ]

- Zardari, M.A.; Jung, L.T.; Zakaria, N. K-NN classifier for data confidentiality in cloud computing. In Proceedings of the International Conference on Computer and Information Sciences (ICCOINS), Kuala Lumpur, Malaysia, 3–5 June 2014; pp. 1–6. [ Google Scholar ]

- Calderon, R. The Benefits of Artificial Intelligence in Cybersecurity. Available online: https://digitalcommons.lasalle.edu/ecf-capstones/36 (accessed on 19 July 2020).

- Shamshirband, S.; Chronopoulos, A.T. A new malware detection system using a high performance-ELM method. In Proceedings of the 23rd International Database Applications & Engineering Symposium, Athens, Greece, 10–12 June 2019; pp. 1–10. [ Google Scholar ]

- Park, J.; Lee, D. Privacy preserving K-nearest neighbor for medical diagnosis in e-health cloud. J. Healthc. Eng. 2018 , 1–11. [ Google Scholar ] [ CrossRef ] [ PubMed ] [ Green Version ]

- Zekri, M.; El Kafhali, S.; Aboutabit, N.; Saadi, Y. DDoS attack detection using machine learning techniques in cloud computing environments. In Proceedings of the International Conference of Cloud Computing Technologies and Applications (CloudTech), Rabat, Morocco, 24–26 October 2017; pp. 1–7. [ Google Scholar ]

- Kour, H.; Gondhi, N.K. Machine Learning Techniques: A Survey. In Innovative Data Communication Technologies and Application Lecture Notes on Data Engineering and Communications Technologies ; Springer: Cham, Switzerland, 2020; pp. 266–275. [ Google Scholar ]

- Hanna, M.S.; Bader, A.A.; Ibrahim, E.E.; Adel, A.A. Application of Intelligent Data Mining Approach in Securing the Cloud Computing. Int. J. Adv. Comput. Sci. Appl. 2016 , 7 , 151–159. [ Google Scholar ]

- Mishra, A.; Gupta, N.; Gupta, B.B. Security Threats and Recent Countermeasures in Cloud Computing. In Modern Principles, Practices, and Algorithms for Cloud Security Advances in Information Security, Privacy, and Ethics ; IGI Global: Hershey, PA, USA, 2020; pp. 145–161. [ Google Scholar ]

- Hussien, N.; Sulaiman, S. Web pre-fetching schemes using Machine Learning for Mobile Cloud Computing. Int. J. Adv. Soft Comput. Appl. 2017 , 9 , 154–187. [ Google Scholar ]

- Arjunan, K.; Modi, C. An enhanced intrusion detection framework for securing network layer of cloud computing. In Proceedings of the ISEA Asia Security and Privacy (ISEASP), Surat, India, 29 January–1 February 2017; pp. 1–10. [ Google Scholar ]

- Grusho, A.; Zabezhailo, M.; Zatsarinnyi, A.; Piskovskii, V. On some artificial intelligence methods and technologies for cloud-computing protection. Autom. Doc. Math. Linguist. 2017 , 51 , 62–74. [ Google Scholar ] [ CrossRef ]

- Wani, A.; Rana, Q.; Saxena, U.; Pandey, N. Analysis and Detection of DDoS Attacks on Cloud Computing Environment using Machine Learning Techniques. In Proceedings of the Amity International Conference on Artificial Intelligence (AICAI), Dubai, UAE, 4–6 February 2019; pp. 870–875. [ Google Scholar ]

- Wan, J.; Yang, J.; Wang, Z.; Hua, Q. Artificial Intelligence for Cloud-Assisted Smart Factory. IEEE Access 2018 , 6 , 55419–55430. [ Google Scholar ] [ CrossRef ]

- Abdurachman, E.; Gaol, F.L.; Soewito, B. Survey on Threats and Risks in the Cloud Computing Environment. Procedia Comput. Sci. 2019 , 161 , 1325–1332. [ Google Scholar ]

- Kumar, R.; Wicker, A.; Swann, M. Practical Machine Learning for Cloud Intrusion Detection: Challenges and the Way Forward. In Proceedings of the ACM Workshop on Artificial Intelligence and Security, Dallas, TX, USA, 3 November 2017; pp. 81–90. [ Google Scholar ]

- Quitian, O.I.T.; Lis-Gutiérrez, J.P.; Viloria, A. Supervised and Unsupervised Learning Applied to Crowdfunding. In Computational Vision and Bio-Inspired Computing. ICCVBIC 2019 ; Springer: Cham, Switzerland, 2020. [ Google Scholar ]

- Meryem, A.; Samira, D.; Bouabid, E.O. Enhancing Cloud Security using advanced Map Reduce k-means on log files. In Proceedings of the International Conference on Software Engineering and Information Management, New York, NY, USA, 4–6 January 2018; pp. 63–67. [ Google Scholar ] [ CrossRef ]

- Zhao, X.; Zhang, W. An Anomaly Intrusion Detection Method Based on Improved K-Means of Cloud Computing. In Proceedings of the Sixth International Conference on Instrumentation & Measurement, Computer, Communication and Control (IMCCC), Harbin, China, 21–23 July 2016; pp. 284–288. [ Google Scholar ]

- Chen, J.; Liu, L.; Chen, R.; Peng, W. SHOSVD: Secure Outsourcing of High-Order Singular Value Decomposition. In Proceedings of the Australasian Conference on Information Security and Privacy, Perth, Australia, 30 November–2 December 2020; pp. 309–329. [ Google Scholar ]

- Feng, J.; Yang, L.; Dai, G.; Wang, W.; Zou, D. A Secure High-Order Lanczos-Based Orthogonal Tensor SVD for Big Data Reduction in Cloud Environment. IEEE Trans. Big Data 2019 , 5 , 355–367. [ Google Scholar ] [ CrossRef ]

- Subramanian, E.; Tamilselvan, L. A focus on future cloud: Machine learning-based cloud security. Serv. Oriented Comput. Appl. 2019 , 13 , 237–249. [ Google Scholar ] [ CrossRef ]

- Alazab, M.; Layton, R.; Broadhurst, R.; Bouhours, B. Malicious spam emails developments and authorship attribution. In Proceedings of the Fourth Cybercrime and Trustworthy Computing Workshop, Sydney, Australia, 21–22 November 2013; pp. 58–68. [ Google Scholar ]

- Sheng, V.; Zhang, J. Machine Learning with Crowdsourcing: A Brief Summary of the Past Research and Future Directions. In Proceedings of the AAAI Conference on Artificial Intelligence, Honolulu, HI, USA, 27 January–1 February 2019; pp. 9837–9843. [ Google Scholar ]

- Li, Z.; Xu, K.; Wang, H.; Zhao, Y.; Wang, X.; Shen, M. Machine-Learning-based Positioning: A Survey and Future Directions. IEEE Netw. 2019 , 33 , 96–101. [ Google Scholar ] [ CrossRef ]


| Reference | Year | Areas Focused | ML Techniques | Security Issues | Impact in Cloud |

|---|---|---|---|---|---|

| [ ] | 2019 | Protection preserved encrypted data | Supervised and unsupervised learning | Limited | Minor or Intermediate Issues |

| [ ] | 2019 | Trust-based access control | Unsupervised learning | No | A few solutions accessible |

| [ ] | 2020 | Security issues | Supervised and unsupervised learning | Limited | Minor issues |

| [ ] | 2011 | Security and threat issues | Supervised learning | Yes | Long term issues |

| [ ] | 2016 | Security issues and datasets | Supervised learning | Limited | Minor or intermediate issues |

| [ ] | 2018 | Cloud Security | Supervised and unsupervised learning | Limited | Minor or intermediate issues |

| [ ] | 2017 | Cloud threats classification | Supervised and unsupervised learning | No | A few solutions accessible |

| [ ] | 2019 | Malware security threats and protection | Supervised learning | Yes | Long term issues |

| [ ] | 2020 | Security and threat Issues | Supervised learning | Limited | Minor or intermediate issues |

| Cloud Models | Pros | Cons |

|---|---|---|

| Public | High scalability; Flexibility; Cost-effective; Reliability; Location independence | Less secure; Less customizability |

| Private | More reliable; More control; High security and privacy; Cost and energy efficient | Lack of visibility; Scalability; Limited services; Security breaches; Data loss |

| Community | More secure than public Cloud; Lower cost than private Cloud; More flexible and scalable | Data segregation; Responsibilities allocation within the organization |

| Hybrid | High scalability; Low cost; More flexible; More secure | Security compliance; Infrastructure dependent |

| Reference | Objective | Technique | Advantages | Disadvantages |

|---|---|---|---|---|

| [ ] | Public Cloud and private Cloud authorities | ANN | Ensure high data privacy; Cloud workload protection | Dedicated and specialized client-server applications for proper functionality |

| [ ] | Supervised and unsupervised for secure cryptosystems | SVM | Secure Data; Improve Security Issues | Storage Issues; Network Error; Security Issues |

| [ ] | Attack detection MCC | ANNs | High accuracy | Time and Storage |

| [ ] | Attack and intrusion detection | ANNs | Tested on different dataset | Accuracy was not reported. |

| [ ] | Reliable resource provisioning in joint edge Cloud environments | K-NN and Data Mining Techniques | K-NN is very simple and intuitive; Better classification over large data sets | Difficulties in finding optimal k value; Time Consuming; High memory utilization |

| [ ] | Privacy Preserving | K-NN | Time efficiency | Accuracy was not reported. |

| [ ] | ML for Cloud Security & C4.5 Algorithm for better protection in the Cloud | C4.5 Algorithm and signature detection Techniques | C4.5 algorithm deals with noise; C4.5 accepts both continuous and discrete values | A small variation in the data may produce different decision trees; Over-fitting |

| [ ] | Web pre-fetching scheme in MCC | Naive Bayes | Efficient data handling | Time and Storage issues |

| [ ] | Intrusion detection | Naive Bayes | Compatibility | Accuracy was not reported. |

| [ ] | Security and privacy issues identification & clarifies the information transfer using ML | ANN | Cloud workload protection and transfer data easily | Dedicated and specialized client-server application for proper functionality; Security issues |

| [ ] | Intrusion detection | SVM and Naive Bayes | High Accuracy | Limited test environments. |

| [ ] | Pros and cons of different authentication strategies for Cloud authentication | ANN & Cloud Delphi techniques | Improved data analysis; ANN gets lower detection precision | Unexplained behavior of ANN; Influence the performance of the network |

| [ ] | Attacks launched on different level of Cloud | ANN & NN Techniques | Provide parallel processing capability | Computational cost increases |

| Reference | Objective | Technique | Advantages | Disadvantages |

|---|---|---|---|---|

| [ ] | ML capability for secure cryptosystems K-Means | ANN Techniques | Ensure high data privacy; Cloud workload protection | Dedicated and specialized client-server applications for proper functionality |

| [ ] | A trust evaluation strategy based on the ML approach predicting the trust values of user and resources | SVD Techniques | A trust-based access control model is an efficient method for security in CC; Privacy protection | Influence the performance of the network; Security Issues |

| [ ] | The encrypted mobile traffic using deep learning | CNN & Deep learning | Secure data; Fast data transfer | Runtime error |

| [ ] | Challenges and successful operationalization of ML based security detections | K-Means & Intrusion Detection Techniques | Ensure high data privacy consistency, restriction, and information | Difficulties to manage information |

| [ ] | Intrusion detection | K-means | High accuracy and consistency | Comparability |

| [ ] | User privacy | SVD | High Accuracy | Tested on a single model |

| [ ] | Dimensionality reduction | SVD | High accuracy | Comparability |


Butt, U.A.; Mehmood, M.; Shah, S.B.H.; Amin, R.; Shaukat, M.W.; Raza, S.M.; Suh, D.Y.; Piran, M.J. A Review of Machine Learning Algorithms for Cloud Computing Security. Electronics 2020 , 9 , 1379. https://doi.org/10.3390/electronics9091379


Smart Malaria Classification: A Novel Machine Learning Algorithms for Early Malaria Monitoring and Detecting Using IoT-Based Healthcare Environment

- Published: 09 September 2024

- Volume 25, article number 55 (2024)


- Aleka Melese Ayalew 1 ,

- Wasyihun Sema Admass 2 ,

- Biniyam Mulugeta Abuhayi 1 ,

- Girma Sisay Negashe 3 &

- Yohannes Agegnehu Bezabh 1


Malaria, caused by the Plasmodium parasite and transmitted by female Anopheles mosquitoes, poses a significant risk to nearly half of the global population, with sub-Saharan Africa being the most affected. A rapid and accurate detection method is crucial due to its high mortality rate and swift transmission. This study proposes a real-time malaria monitoring and detection system using an Internet of Things (IoT) framework. The system collects real-time symptom data via wearable sensors, employs edge computing for processing, utilizes cloud infrastructure for data storage, and applies machine learning models for data analysis. The five key components of the framework are wearable sensor-based symptom data collection and uploading, edge (fog) computing, cloud infrastructure, machine learning models for data analysis, and doctors (physicians). The study compares four machine learning techniques: Support Vector Machine (SVM), Artificial Neural Network (ANN), K-Nearest Neighbor (KNN), and Naïve Bayes. SVM outperformed the other algorithms, achieving 98% training accuracy, 96% test accuracy, and a 95% AUC score. Based on the findings, we anticipate that real-time symptom data would enable the proposed system to diagnose malaria effectively and accurately, classifying cases as either Parasitized or Normal.


Data Availability

No datasets were generated or analysed during the current study.


Authors declare no funding for this research.

Author information

Authors and affiliations.

Department of Information Technology, University of Gondar, Gondar, Ethiopia

Aleka Melese Ayalew, Biniyam Mulugeta Abuhayi & Yohannes Agegnehu Bezabh

Department of Information Technology, Mekdela Amba University, Mekdela Amba, Ethiopia

Wasyihun Sema Admass

Department of Information Systems, University of Gondar, Gondar, Ethiopia

Girma Sisay Negashe


Contributions

A.M.A. and W.S.A.: Conceptualization, Methodology, Software, Data curation, Writing (original draft, review and editing). B.M.A., G.S.N., and Y.A.B.: Visualization, Investigation, Validation.

Corresponding author

Correspondence to Aleka Melese Ayalew .

Ethics declarations

Competing interests.

The authors declare no competing interests.

Additional information

Publisher’s note.

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.


About this article

Ayalew, A.M., Admass, W.S., Abuhayi, B.M. et al. Smart Malaria Classification: A Novel Machine Learning Algorithms for Early Malaria Monitoring and Detecting Using IoT-Based Healthcare Environment. Sens Imaging 25 , 55 (2024). https://doi.org/10.1007/s11220-024-00503-3


Received : 17 June 2024

Revised : 19 July 2024

Accepted : 05 August 2024

Published : 09 September 2024

DOI : https://doi.org/10.1007/s11220-024-00503-3


- Internet of Things

- Machine learning

- Early identification

- Real-time monitoring


Advances, Systems and Applications

- Open access

- Published: 27 January 2023

Task scheduling in cloud environment: optimization, security prioritization and processor selection schemes

- Tao Hai 1 , 2 ,

- Jincheng Zhou 1 , 3 ,

- Dayang Jawawi 2 ,

- Dan Wang 3 , 4 ,

- Uzoma Oduah 5 ,

- Cresantus Biamba 6 &

- Sanjiv Kumar Jain 7

Journal of Cloud Computing volume 12, Article number: 15 (2023)


Cloud computing is a key infrastructure for executing tasks on distributed processing units. Despite its numerous benefits, a cloud platform faces several challenges that prevent the efficient execution of submitted workflows, one of which is task scheduling. The associated optimization problem is to determine the best schedule with respect to the cloud scheduling criteria. Existing methods have been unable to satisfy users' quality of service (QoS) constraints, such as meeting budget restrictions and reducing the makespan. Among these methods, the Heterogeneous Earliest Finish Time (HEFT) algorithm produces the best results for scheduling tasks in a heterogeneous environment in a short time. The reviewed literature indicates that HEFT is efficient in terms of execution time and schedule quality. The HEFT algorithm uses average communication and computation costs as weights in the directed acyclic graph (DAG). In some cases, however, using the average computation cost and selecting the first empty slot may not be enough to produce a good solution. In this paper, we propose modified versions of the HEFT algorithm that produce improved results. In the first stage (rank generation), we apply several methods to calculate the ranks, and in the second stage, we change how the empty slots are selected for task scheduling. These modifications add no cost to the original HEFT algorithm and reduce the makespan of workflows submitted to virtual machines. Our findings suggest that the modified versions of HEFT outperform the basic HEFT algorithm by producing shorter schedule lengths for the workflow problems.

Introduction

Cloud computing works on a pay-per-use model in which clients access cloud services without full knowledge of the distribution policies and hosting details [ 1 , 2 , 3 ]. This provides global on-request access to a shared pool of assets such as storage space, computing servers, and web facilities for a reduced time to shop for enterprises and determine the logical findings [ 4 ]. Clients can access these assets reliably, with little effort and without needing to interact with the service provider [ 5 , 6 ]. The aim of cloud infrastructure is to provide an easy-to-use workspace for dynamic applications.

The workspace is obtained by integrating various computer hardware with software services. These facilities allow clients to submit their applications to the cloud by specifying their execution, availability, and Quality of Service (QoS) requirements [ 7 ]. Because such applications differ in their configuration, deployment, and arrangement requirements, the approaches to resource management and task scheduling become essential to the efficiency and effectiveness of the cloud framework [ 8 , 9 ]. In a distributed framework, every job can be viewed as a set of tasks to execute. These tasks are classified as dependent or independent. While independent tasks can be performed concurrently by several Virtual Machines (VMs), dependent tasks have to be scheduled so that their precedence relationships are satisfied. Such a job can be represented as a Directed Acyclic Graph (DAG) in which the vertices (nodes) represent tasks and the edges represent dependencies between tasks [ 10 , 11 ]. Tasks with precedence constraints must be executed in a scheduling order that minimizes the schedule makespan. Finding an optimal solution to this task scheduling problem is NP-complete [ 10 ].
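The DAG view of a workflow can be made concrete with a small sketch. The task names and edges below are hypothetical and are not taken from the paper; the function uses Kahn's algorithm, a standard technique, to produce one execution order in which every task appears after all of its predecessors.

```python
from collections import deque


def topological_order(successors):
    """Return one execution order that respects every precedence edge."""
    # Count how many unfinished predecessors each task still has.
    indegree = {task: 0 for task in successors}
    for children in successors.values():
        for child in children:
            indegree[child] += 1

    # Tasks with no predecessors are ready to run immediately.
    ready = deque(sorted(t for t, d in indegree.items() if d == 0))
    order = []
    while ready:
        task = ready.popleft()
        order.append(task)
        for child in successors[task]:
            indegree[child] -= 1
            if indegree[child] == 0:
                ready.append(child)

    if len(order) != len(successors):
        raise ValueError("graph has a cycle, so it is not a DAG")
    return order


# Hypothetical workflow: t1 feeds t2 and t3, which both feed t4.
workflow = {"t1": ["t2", "t3"], "t2": ["t4"], "t3": ["t4"], "t4": []}
order = topological_order(workflow)
```

Here `t2` and `t3` are independent of each other and could run concurrently on different VMs, while `t4` has to wait for both. A topological order only guarantees feasibility; it says nothing about the makespan, which is what the scheduling heuristics discussed in this paper try to reduce.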

Task scheduling problems can be classified into two primary classes: deterministic and non-deterministic scheduling. Deterministic (compile-time) scheduling, also referred to as static scheduling, is sub-divided into heuristics-based [ 12 , 13 ] and Guided Random Search-Based (GRSB) [ 14 , 15 , 16 ] approaches. GRSB algorithms (such as genetic algorithms) cost more than heuristics-based scheduling algorithms because they need more iterations to generate an improved schedule. Heuristics-based algorithms, on the other hand, provide approximate solutions in a short time. They can be categorized as duplication-based [ 17 , 18 ], clustering-based [ 19 , 20 ], and list-based [ 21 , 22 , 23 ]. Duplication-based heuristics have higher time complexity, while clustering-based heuristics are suitable for homogeneous frameworks.

In this paper, we considered list-based heuristics because of their short running time and their efficiency in delivering a shorter makespan. They work in two primary stages for task scheduling. In the first stage, a rank is calculated for each task, and the tasks are then arranged in descending order of rank. In the second stage, the task with the highest rank value is scheduled on an available machine. The Heterogeneous Earliest Finish Time (HEFT) procedure is the most popular among its counterparts for heterogeneous computing because of its high performance trade-off and low costs [ 24 ].
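The two stages described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the exact procedure evaluated in this paper: the function name `list_schedule`, the dictionary-based inputs, and the choice of the machine with the earliest finish time are illustrative, communication costs are ignored, and the ranks are assumed to place every task after all of its predecessors.

```python
def list_schedule(ranks, cost, preds):
    """Generic two-stage list scheduling (illustrative sketch).

    ranks: task -> rank value (assumed to order tasks after their predecessors)
    cost:  task -> list of execution times, one per machine
    preds: task -> list of predecessor tasks
    Communication costs are ignored in this simplified version.
    """
    # Stage 1 output: tasks arranged in descending order of rank.
    order = sorted(ranks, key=ranks.get, reverse=True)

    n_machines = len(next(iter(cost.values())))
    machine_free = [0.0] * n_machines  # time at which each machine becomes idle
    finish, placement = {}, {}

    # Stage 2: place each task on the machine that finishes it earliest.
    for task in order:
        ready = max((finish[p] for p in preds.get(task, [])), default=0.0)
        best = min(
            range(n_machines),
            key=lambda m: max(ready, machine_free[m]) + cost[task][m],
        )
        start = max(ready, machine_free[best])
        finish[task] = start + cost[task][best]
        machine_free[best] = finish[task]
        placement[task] = best
    return placement, finish


if __name__ == "__main__":
    ranks = {"a": 3, "b": 2, "c": 1}
    cost = {"a": [2, 3], "b": [4, 2], "c": [1, 1]}
    preds = {"b": ["a"], "c": ["a", "b"]}
    print(list_schedule(ranks, cost, preds))
```

Because the tasks are processed strictly in rank order, the quality of the final schedule depends directly on how the ranks are computed, which is the part of the procedure that the modified versions change.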

The following are the main contributions of this study:

We design and propose three altered versions of the HEFT algorithm for rank calculation and processor selection, and to reduce the duration for the task scheduling.

We lay out the challenge of task scheduling on heterogeneous machines and the cloud framework-related features for efficiently managing the specified tasks on the available VMs through the inclusion of the dependency restrictions among the tasks.

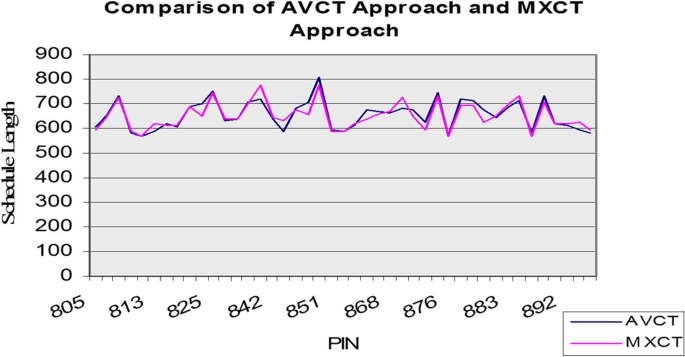

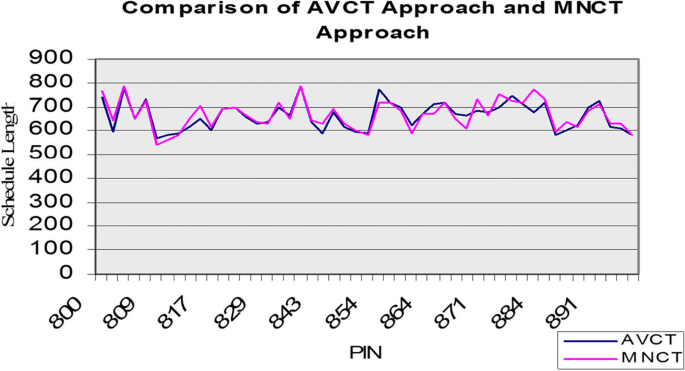

We analyse and compare the proposed algorithms with the basic HEFT algorithm (the AVCT, Average Computation Cost, approach) on randomly generated DAGs of real-world applications.

The novelty of the proposed method lies in the different methodologies in the two stages of the HEFT algorithm. In the first stage (rank generation), we execute several methodologies to calculate the ranks, and in the second stage, we alter how the empty slots are selected for the task scheduling. These alterations do not add any cost to the primary HEFT algorithm, and reduce the makespan of the virtual machines’ workflow submissions. From the computational analyses and experiments we carried out, we observed the significant differences between the performance of the basic HEFT algorithm (AVCT approach) and our proposed altered versions MXCT (Maximum Computation Cost), MNCT (Minimum Computation Cost), and AVBS (Average Computation Cost and Best Empty Slot), regarding the schedule makespan that was produced. This implies that the scheme used affects the schedule length. We also observed that using the average value scheme for rank calculation and selection of the first empty slot is not always the best option. Our findings indicate that our proposed improved versions perform better than the basic HEFT algorithm regarding the decreased schedule length of the workflow problems running on the virtual machines.
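As a rough illustration of how the rank-weight schemes named above differ, the sketch below derives a single weight for a task from its computation costs on the available VMs. It assumes, based only on the scheme names, that AVCT takes the average, MXCT the maximum, and MNCT the minimum of those costs; the function name `task_weight` and the sample costs are hypothetical.

```python
from statistics import mean

# Assumed mapping from scheme name to the aggregate applied to a task's
# computation costs across all VMs (inferred from the scheme names).
SCHEMES = {
    "AVCT": mean,  # Average Computation Cost (basic HEFT)
    "MXCT": max,   # Maximum Computation Cost
    "MNCT": min,   # Minimum Computation Cost
}


def task_weight(costs_on_vms, scheme="AVCT"):
    """Return the weight used for one task in the rank calculation."""
    return SCHEMES[scheme](costs_on_vms)


if __name__ == "__main__":
    costs = [14, 16, 9]  # hypothetical cost of one task on three VMs
    for name in SCHEMES:
        print(name, task_weight(costs, name))
```

Swapping the aggregate changes only the task weights fed into the rank calculation, so the rest of the scheduling procedure is untouched.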

The rest of this paper is organized as follows: Section 2 reviews the related literature. Section 3 briefly introduces multiprocessor task scheduling, and describes the problem model. Section 4 explores the HEFT algorithm and the proposed methodology. Section 5 discusses the experimental results. Finally, Section 6 concludes the paper.

Literature review

The authors in [ 5 ] proposed a community-based cloud framework to manage emergencies. Its aim is to coordinate and oversee different organizations and combine large amounts of heterogeneous data in order to deploy logistics and personnel for search and rescue. The framework can also be utilized in the assessment of damage. In [ 6 ], to clarify the fundamentals of cloud computing, the authors explained the features that distinguish cloud computing from other research areas. They mainly compared cloud computing to grid computing and gave insights into the essentials of both concepts. The authors in [ 7 ] proposed a toolkit which allows the simulation and modelling of application provisioning and cloud computing systems. The aim was to achieve resource performance and application workload models under different user and system configurations. In [ 8 ], the authors provided a brief but comprehensive overview of speech bifurcation, both into series and single words with unrestricted speech, and presented a methodology which converts vocal signals into text. The authors in [ 9 ] proposed a game theoretic framework for the management of dynamic cloud services, including allocation of resources and assignment of tasks, with the aim of providing reliable cloud services. The proposed framework would assist cloud service providers in the management of their resources in a cloud computing environment.

In [ 10 ], the authors presented a task scheduling algorithm that makes use of the standard deviation of the estimated task execution time on the resources available in the computing environment. This approach considers the heterogeneity of the task and significantly reduces the execution time of a specific application. The authors in [ 11 ] proposed improved versions of algorithms for heterogeneous systems that are used for compile-time list scheduling, where the priorities of the tasks are computed. In [ 14 ], the authors examined the dynamic scheduling of tasks in a multiprocessor system in order to obtain a viable solution making use of genetic algorithms integrated with popular heuristics. The experimental results showed that the genetic algorithm can be used for task scheduling to meet deadlines. The authors in [ 15 ] designed a genetic evolution-based algorithm to find an optimal solution for task scheduling in a multiprocessor system in a short time. In [ 16 ], the authors provided a comprehensive overview of genetic algorithms, their techniques, tools and research results, which would allow the algorithms to be applied to real-world problems in different fields. The authors in [ 12 ] presented two novel algorithms for heterogeneous processors with the goal of attaining fast scheduling time and high performance. The experimental results revealed that the proposed algorithms performed better than existing algorithms in terms of quality and cost of schedules.

In [ 13 ], the authors proposed an algorithm for scheduling tasks in a multicore processor system which significantly decreases the recovery time in case the system fails. The proposed algorithm is based on a checkpointing method. The authors in [ 17 ] proposed a duplication-based algorithm to reduce the schedule makespan and the delay of task execution. The proposed algorithm schedules tasks with the fewest redundant duplications. In [ 18 ], the authors presented a list scheduling algorithm that considers the heterogeneity of communication and computation. They also proposed a novel approach for priority computation which uses variance to account for the differences in performance in the target computing system. The authors in [ 23 ] proposed a ranking algorithm based on the parent–child relationship and the priority assignment stage of the HEFT algorithm designed for task scheduling in a multiprocessor system. The proposed algorithm works on the keywords’ density, the age of the webpage, and the number of node successors.

The problem model

Multiprocessor task scheduling

Researchers have previously proposed several list scheduling procedures to resolve the task scheduling problem. The HEFT algorithm [ 12 ] estimates the tasks’ upward (ascendant) rank values using the average communication and computation costs. The Standard Deviation Based Task Scheduling (SDBATS) algorithm [ 10 ] uses the standard deviation of the communication and computation costs to estimate the upward rank values. The Critical Path on a Processor (CPOP) algorithm [ 12 ] adds the downward (descendant) and upward rank values to build a critical path and a priority queue. At each level of the DAG, the Performance Effective Task Scheduling (PETS) algorithm [ 25 ] combines the average computation cost, the data transmission cost, and the data reception cost to determine the tasks’ rank values. The Duplication based Heterogeneous Earliest Finish time (HEFD) algorithm [ 18 ] uses task variance as a measure of heterogeneity to estimate the communication and computation costs among tasks. Predict Earliest Finish Time (PEFT) [ 24 ] is based on a look-ahead technique and accounts for descendant tasks by computing an Optimistic Cost Table (OCT). The OCT is a 2D array whose rows and columns correspond to the tasks and the processors, respectively. Each element OCT( \({t}_{i}\) , \({p}_{j}\) ) gives the maximum of the shortest paths from the child tasks of \({t}_{i}\) to the exit node, given that machine \({p}_{j}\) is selected for task \({t}_{i}\) . All of these algorithms base their calculations on the standard deviation or the average of the task weights on the available machines, and they do not account for the heterogeneity of the framework. The most recent work shows how the standard deviation captures task heterogeneity on the available machines. The various task scheduling algorithms and their limitations, tools, and parameters were analyzed in [ 26 , 27 ].
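For reference, the upward rank used by HEFT is commonly defined recursively: the rank of a task is its average computation cost plus the largest value, over its successors, of the average communication cost to that successor plus the successor's rank, and the rank of an exit task is simply its average computation cost. The sketch below follows that standard definition; the function and argument names are illustrative and the numbers in the example are made up.

```python
from functools import lru_cache


def upward_ranks(avg_cost, succ, avg_comm):
    """Compute upward ranks for all tasks (standard HEFT definition).

    avg_cost: task -> average computation cost over all machines
    succ:     task -> iterable of successor tasks
    avg_comm: (task, successor) -> average communication cost of that edge
    """
    @lru_cache(maxsize=None)
    def rank(task):
        # Exit tasks have no successors, so the tail term defaults to 0.
        tail = max(
            (avg_comm[(task, s)] + rank(s) for s in succ.get(task, ())),
            default=0.0,
        )
        return avg_cost[task] + tail

    return {task: rank(task) for task in avg_cost}


if __name__ == "__main__":
    avg_cost = {0: 10, 1: 5, 2: 8, 3: 4}
    succ = {0: [1, 2], 1: [3], 2: [3]}
    avg_comm = {(0, 1): 2, (0, 2): 3, (1, 3): 1, (2, 3): 6}
    ranks = upward_ranks(avg_cost, succ, avg_comm)
    # Sorting by descending rank yields an order that respects precedence.
    print(sorted(ranks, key=ranks.get, reverse=True))
```

A variant such as SDBATS would, under this reading, replace the averages in `avg_cost` and `avg_comm` with standard deviations while leaving the recursion unchanged.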

We believe that the HEFT algorithm’s efficiency can be improved by using three versions of the basic HEFT algorithm. This paper proposes two schemes for the first stage (rank calculation) and a different approach for selecting the empty slot. We examined the schedule makespan generated by each version and took the minimum makespan as the result. Although this slightly increases the algorithm’s cost, it is a trade-off between time complexity and performance. Our evaluation shows that the proposed versions produce high-quality schedules in terms of higher efficiency and decreased schedule length.

The model and objective function

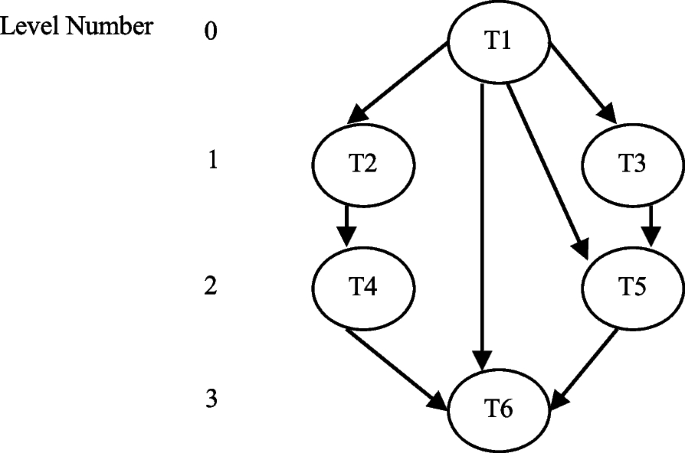

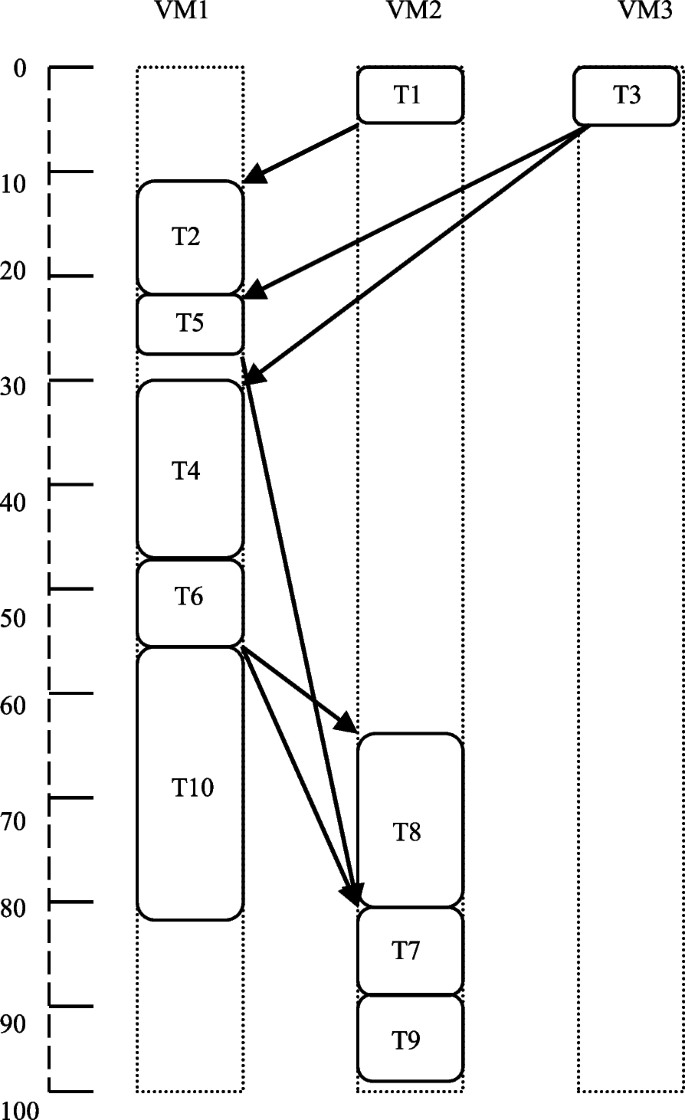

The model of the scheduling structure consists of a target computation architecture, an application (submission), and scheduling criteria. A problem can be represented as a DAG, G = (T, E, R, C) (see Fig. 1 ), where \(T = \left\{ {t}_{i}, i = 0, 1, 2, \ldots, n-1 \right\}\) is a set of n tasks [ 28 , 29 , 30 ]. The symbol E denotes the set of edges between tasks, \(E = \{ {e}_{i,j}, i < j \}\) , and \({e}_{i,j}\) represents the precedence constraint between two linked tasks. For connected tasks \({t}_{i}\) , \({t}_{j}\) ∈ T, the precedence constraint means that task \({t}_{j}\) depends on task \({t}_{i}\) for its execution: the results of task \({t}_{i}\) are used as the input of task \({t}_{j}\) , and \({t}_{j}\) cannot begin its execution before \({t}_{i}\) has finished. The task \({t}_{j}\) is the successor of \({t}_{i}\) and \({t}_{i}\) is the predecessor of \({t}_{j}\) . Here, \(R\) denotes a 2D matrix of size \(v\times m\) , and \({r}_{ij}\) in \(R\) denotes the estimated execution time of \({v}_{i}\) on the \({j}^{th}\) processor. A matrix \(CmC(t\times t)\) represents the communication cost between any two tasks \({t}_{i}\) and \({t}_{j}\) . In the graph below, a task with no predecessor is referred to as an entry task, and a task with no successor is referred to as an exit (leaving) task.

A model DAG

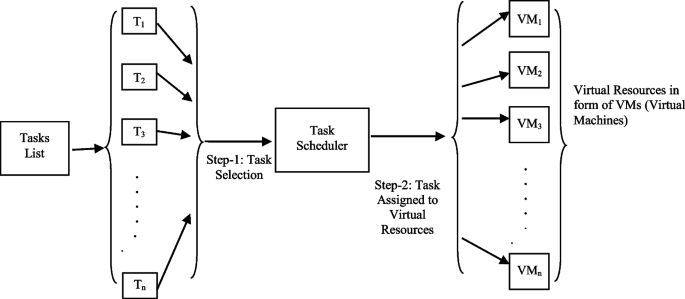
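To make the DAG model concrete, the following sketch stores the edge set as a list of (predecessor, successor) pairs and derives the entry and exit tasks from it. The function name `entry_and_exit_tasks` and the four-task example graph are illustrative assumptions and do not reproduce the graph in Fig. 1.

```python
def entry_and_exit_tasks(n_tasks, edges):
    """Find entry tasks (no predecessor) and exit tasks (no successor).

    n_tasks: number of tasks, indexed 0 .. n_tasks - 1
    edges:   list of (i, j) pairs meaning task j depends on task i
    """
    has_pred = {j for _, j in edges}  # tasks that appear as a successor
    has_succ = {i for i, _ in edges}  # tasks that appear as a predecessor
    entries = [t for t in range(n_tasks) if t not in has_pred]
    exits = [t for t in range(n_tasks) if t not in has_succ]
    return entries, exits


if __name__ == "__main__":
    # Hypothetical diamond-shaped workflow: 0 -> {1, 2} -> 3
    edges = [(0, 1), (0, 2), (1, 3), (2, 3)]
    print(entry_and_exit_tasks(4, edges))
```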

A cloud framework consists of a set \(VM = \{ {vm}_{i}, i = 0, 1, 2, \ldots, m-1 \}\) of m VMs that operate independently and are linked over a high-speed network, as illustrated in Fig. 2 . The Data Transfer Frequency (DTF) may change because of variations in the network bandwidth of the cloud architecture. The DTF between any two VMs can be written as an \(m \times m\) two-dimensional array, \({DTF}_{m\times m}\) . The Probable Execution Cost (PEC) of carrying out a task \({t}_{i}\) on a VM \({vm}_{j}\) can be represented by another 2D array, \({PEC}_{n\times m}\) , where \(0 \le i \le n-1\) and \(0 \le j \le m-1\) . The PEC depends on the VM’s computation speed and can differ for each VM.

Task scheduling in a cloud-based framework

The communication cost between \({vm}_{x}\) and \({vm}_{y}\) depends on two aspects. The first is the processors’ installed frequency on both sides of the communication. The second is the frequency’s correspondence cost value. We assume that each VM workstation can transmit to the workstations of a different VM with no contention on the transmission channel. We also assume that tasks scheduled on the same VM incur no communication cost between them.
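The assumptions above can be illustrated with a small helper. The text does not give a closed-form expression for the communication cost, so the formula used here (amount of data divided by the transfer rate between the two VMs) is an assumption made only for illustration, as are the function name `communication_cost`, the `data_size` parameter, and the sample rates.

```python
def communication_cost(data_size, vm_x, vm_y, dtf):
    """Estimate the cost of sending data between two VMs (illustrative).

    data_size: amount of data passed from a task to its successor
    vm_x, vm_y: indices of the VMs hosting the two tasks
    dtf: m x m matrix of transfer rates between VMs (assumed meaning of DTF)
    """
    if vm_x == vm_y:
        # Tasks scheduled on the same VM incur no communication cost.
        return 0.0
    # Assumed model: cost is data volume divided by the transfer rate.
    return data_size / dtf[vm_x][vm_y]


if __name__ == "__main__":
    dtf = [[0.0, 2.0], [2.0, 0.0]]  # hypothetical rates between two VMs
    print(communication_cost(10.0, 0, 0, dtf))
    print(communication_cost(10.0, 0, 1, dtf))
```

Because the channel is assumed to be free of contention, each transfer can be costed independently of any other transfer taking place at the same time.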

The aim of the task scheduling problem is to assign all the tasks of a given application to machines so that the application’s completion time (makespan) is minimized while all the precedence constraints are satisfied.
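The objective can be expressed as a simple check on a finished schedule: the makespan is the latest finish time over all tasks, and the schedule is valid only if no task starts before each of its predecessors has finished. The sketch below is illustrative; the function name `makespan` and the dictionary-based schedule representation are assumptions, and communication delays are left out for brevity.

```python
def makespan(start, finish, edges):
    """Return the schedule length after checking precedence constraints.

    start, finish: task -> start time / finish time in the schedule
    edges:         list of (i, j) pairs meaning task j depends on task i
    Communication delays are ignored in this simplified check.
    """
    for i, j in edges:
        if start[j] < finish[i]:
            raise ValueError(f"task {j} starts before predecessor {i} finishes")
    # The application completes when its last task finishes.
    return max(finish.values())


if __name__ == "__main__":
    start = {0: 0, 1: 2, 2: 4}
    finish = {0: 2, 1: 4, 2: 5}
    print(makespan(start, finish, [(0, 1), (1, 2)]))
```

A scheduler that compares several candidate schedules can call such a function on each one and keep the schedule with the smallest returned value.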

Methodology

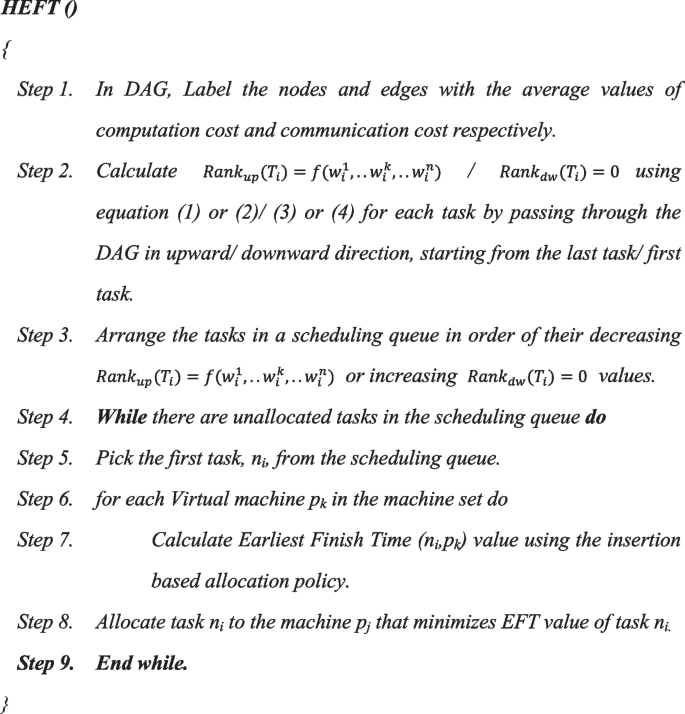

Review of the HEFT algorithm