Top 22 JP Morgan Data Scientist Interview Questions + Guide in 2024

Back to Jpmorgan Chase & Co.

Introduction

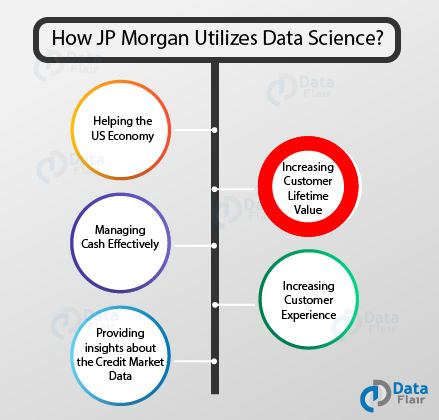

JP Morgan Chase & Co. is a leading global financial services firm and one of the largest banking institutions in the US. As an organization, JP Morgan is becoming increasingly reliant on data-driven business decisions, and sophisticated data management capabilities for top clients. They require data scientists across their teams in functions such as Cybersecurity, Investment Banking, and Commercial Banking for risk analysis, fraud investigation, market research, and many more challenging functions.

If you are planning to interview for a Data Scientist position at JP Morgan, or are curious about the process, this interview guide is for you.

Read on to find out how you can boost your chances when you land that JP Morgan data scientist interview.

JP Morgan Data Scientist Interview Process

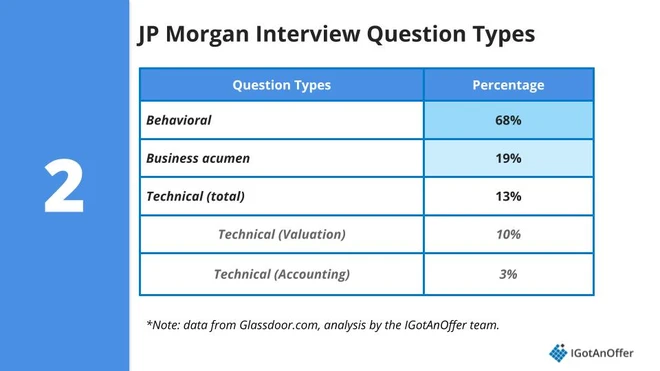

JP Morgan Chase’s interview process is rigorous, reflecting their commitment to hiring individuals who are not only technically adept but also aligned with the company’s values and long-term objectives. The process usually consists of four interview rounds but may differ based on the team and seniority of the position.

1. Application

You can apply for jobs through their website, recruiters, or trusted online platforms. You can consider asking for an employee referral as well when you apply. Make sure to quantify the success of key projects as well as your leadership skills, as these are qualities that they look for in promising candidates. JP Morgan advises that brevity is important during your application.

2. HireVue Interview

This round is often conducted over the HireVue platform. You will be asked to record a series of video responses and respond to coding challenges. The purpose of this round is to assess your candidature virtually to ensure you are a good fit for the in-person rounds later.

3 . In-person Interview(s)

If it’s a good fit, you will be invited onsite to meet your team and have a panel interview. These rounds typically involve a mix of technical, behavioral, and case study questions.

Interview tips from JP Morgan’s careers page: “Stay up to date on the news, both general and firm-specific, so you can speak from a place of knowledge and confidence. Be ready to share specific examples of your previous experience that reflect transferrable skills to the opportunity you are applying for. Prepare questions for our team, so you can learn more about the opportunity and our firm.”

Frequently Asked JP Morgan Data Scientist Interview Questions

You will be expected to be technically sound in SQL, Python, machine learning algorithms, and analytical solutions, and apply these technical skills to real-life scenarios the company faces, such as risk management, fraud detection, investment strategies, operational improvements, etc.

It is a good idea to stay updated about the company through its website and LinkedIn page, and follow firm-specific and data science-related news to stay abreast of the business problems you may encounter.

For a more in-depth discussion, look through our list below as we’ve hand-picked popular questions that have actually been asked in JP Morgan’s Data Science interview.

1. Describe a challenging data science project you handled. How did you manage the complexities, and what was the outcome?

You’ll face a lot of complex decision-making at JP Morgan, so you need to showcase your experience in handling such situations.

How to Answer

Focus on a project you feel comfortable discussing in depth. Detail your approach, strategies, and impact. Be authentic and make sure to demonstrate that you worked collaboratively with your team as well as stakeholders.

“In my previous firm, I led a project to optimize investment strategies using machine learning. The challenge was integrating disparate data sources while ensuring model accuracy. My approach involved collaborating with cross-functional teams to refine data integration and iteratively improving the model based on stakeholder feedback. The outcome was a 15% improvement in prediction accuracy, significantly aiding our decision-making.”

2. Why do you want to join JP Morgan?

Interviewers will want to know why you specifically chose the Data Scientist role at JP Morgan. They want to establish if you’re passionate about the company’s culture and values or if your interest is temporary.

Your answer should cover why you chose the company and role and why you’re a good match for both. Frame your response positively. Additionally, focus on how your selection would benefit both parties.

“J.P. Morgan promises the opportunity to work on complex financial challenges. This aligns with my passion for tackling intricate financial problems and my background in financial analysis and data-driven decision-making. My skills, coupled with my enthusiasm for innovation in finance, make me a good fit. The firm’s commitment to employee development and its inclusive culture also resonate with my professional values and aspirations.”

3. Tell us about a time when you had to explain complex data science concepts to non-technical stakeholders. How did you ensure they understood?

As you will be expected to participate in cross-functional teams and projects, the ability to communicate complex ideas effectively is non-negotiable.

Highlight your communication skills through a specific instance from a past project. Use the STAR method of storytelling - discuss the S pecific situation you were challenged with, the T ask you decided on, the A ction you took, and the R esult of your efforts.

“I was tasked with explaining the outcomes of a predictive model to our marketing team, in a past project. I used analogies related to their daily work to illustrate how the model functions and its relevance to their campaigns, avoiding any unnecessary technical jargon. I followed up with a Q&A session to address any doubts. This extra effort went a long way in promoting team dynamics and ensuring that the marketing team felt included in the technical conversations.”

4. How do you prioritize multiple deadlines?

You may need to work across teams, projects, and even geographies in a global organization like JP Morgan Chase. Time management and organization are essential skills to succeed.

Emphasize your ability to differentiate between urgent and important tasks. Mention any tools or frameworks you use for time management. It’s also important to showcase your ability to adjust priorities.

“In a previous role, I often juggled multiple projects with tight deadlines. I prioritized tasks based on their impact and deadlines using a combination of the Eisenhower Matrix and Agile methodologies. I regularly reassessed priorities to accommodate any changes and communicated proactively with stakeholders about progress and any potential delays.”

5. Can you provide an example of a time when you had to make a quick decision based on incomplete data?

Real-world data is seldom perfect, and there will be occasions when your team or manager asks for your input when a quick decision is paramount. The interviewer wants to test your domain knowledge and critical thinking skills.

Provide an example where you had to make a timely decision with partial data. It’s important to convey the rationale behind your decision. You should also demonstrate that you are willing to seek help from experts when needed - this shows that you are a team player.

“We faced a tight deadline in my old firm, to launch a marketing campaign with incomplete customer data. I looked at existing trends to extrapolate missing information and consulted with domain experts. Based on this, we made an informed decision to proceed with a targeted approach, which ultimately resulted in a successful campaign with higher-than-expected engagement rates.”

6. Given a list of tuples featuring names and grades on a test, write a function to normalize the values of the grades to a linear scale between 0 and 1.

You will need to demonstrate basic data manipulation problem skills in Python, as such operations are necessary for the day-to-day coding requirements for a Data Scientist in JPMC.

Briefly outline your approach, which should involve finding the minimum and maximum grades and then applying a formula to normalize each grade.

“My approach would be to extract the grades from the list of tuples, find the minimum and maximum grades, and then normalize each grade using the formula: (grade - min_grade) / (max_grade - min_grade).”

7. You have access to two tables: transactions , which includes fields like transaction_id , customer_id , amount , and transaction_date , and customers , which includes customer_id , age , and income . Write an SQL query to identify the top 10% of customers by transaction volume in the last quarter and provide insights into their age and income distribution.

In a JP Morgan Data Scientist interview, a question like this will evaluate your ability to extract meaningful insights from financial data using SQL window functions.

Explain your SQL logic systematically. Discuss your insights and how they would aid business strategies.

“I’d join the transactions table with the customers table on the customer_id column. Then, I’d filter the transactions to include those from the last quarter. Using a window function like RANK() or NTILE(), I’d identify the top 10% of customers based on transaction volume. Finally, I’d analyze the age and income distribution by looking for patterns that could inform targeted marketing or product development.”

8. You are given a deck of 500 cards numbered from 1 to 500. If the cards are shuffled randomly and you are asked to pick three, one at a time, what’s the probability of each subsequent card being larger than the previously drawn one?

Probability, permutations and combinations, and logical thinking are mathematical skills essential to analyzing financial data at JPMC.

Emphasize the importance of considering all possible combinations of three cards and then the favorable outcomes. Inform the interviewer what mathematical approach (Binomial distribution) you are going to follow.

“The total number of ways to draw three cards from 500 is $^{500}C_3$. Each specific set of three cards can only be arranged in one way to meet the condition (ascending order). So, the probability is the number of sets of three cards, which is $^{500}C_3$ divided by the total number of ways to draw three cards.”

9. Explain how an XGBoost model differs from a Random Forest model.

You need to know about advanced machine learning techniques to solve complex problems such as credit risk in JPMC.

How to Answer Focus on the key differences, and provide examples of potential applications in financial modeling.

“XGBoost is a gradient boosting algorithm that builds trees one at a time, where each new tree helps to correct errors made by previously trained trees. It uses gradient descent to minimize loss when adding new models. Random Forest, on the other hand, creates a ‘forest’ of decision trees trained on random subsets of data and averages their predictions. This parallel approach in Random Forest is different from the sequential tree-building in XGBoost. Also, XGBoost includes regularization, which helps in reducing overfitting.”

10. Write a function to calculate the total profit gained from investing in an index fund from the start to the end date.

You will be expected to know how to code functions related to investment scenarios on the fly, so do ensure to practice such problems in Python.

You need to calculate the total profit from transactions in an index fund, considering the discrete nature of share purchases and daily price changes. Mention the importance of accounting for the daily valuation of the fund and the timing of transactions.

“I’d first track the total number of shares owned, updating it based on daily deposits and withdrawals. I’d calculate the share purchases based on the available funds and daily index price. For each day, I’d adjust the value of the holdings based on the index’s daily price change. This approach mirrors real-world scenarios at J.P. Morgan.”

11. In analyzing financial transaction data at JPMC, how would you differentiate and handle outliers that are erroneous versus those that represent significant but valid market events?

Addressing how to handle outliers in transaction data demonstrates your analytical skills as well as domain expertise in financial data.

Emphasize the importance of understanding the context of the data. Differentiate between outliers by investigating their source: erroneous outliers often stem from data entry errors or technical glitches, while valid outliers could be due to significant market events like a merger or regulatory change. Stress the importance of using statistical methods to identify outliers, coupled with domain knowledge to interpret them.

“I would use statistical methods like z-scores or IQR to identify outliers. Then, I’d investigate each outlier’s context. For example, if an outlier coincides with a major market event, like a central bank announcement, it’s likely a valid data point reflecting market reaction. However, if the outlier deviates significantly from market trends without a corresponding event, it might be erroneous. In such cases, I would consult with market experts or cross-reference with other data sources.”

12. Let’s say that you are working on analyzing salary data. You are tasked by your manager with computing the average salary of a Data Scientist using a recency-weighted average. Write the function to compute the average Data Scientist salary given a mapped linear recency weighting on the data.

Recency-weighted averages are an important statistical method to analyze trends where market rates fluctuate significantly.

Explain the concept and its relevance in data analysis. In your function, outline how you would assign greater weight to more recent salaries.

“I would write a function that takes a list of salaries from the past ‘n’ years. The function will assign a linearly increasing weight to each year’s salary, with the most recent year having the highest weight. This approach ensures that recent trends in Data Scientist salaries have a more significant impact on the computed average, reflecting the current market conditions more accurately.”

13. How would you estimate the valuation of the JP Morgan Chase mobile app?

This is a relevant situational exercise in applying finance and business analysis principles.

Start with the app’s direct financial impact, like revenue generation through transactions or cost savings. Then, consider the app’s strategic value, like customer retention, data collection, and brand enhancement. Use industry benchmarks and comparable analyses if possible.

“I would first analyze its direct financial contributions, such as fees from mobile transactions or savings from reduced branch operations. Next, I’d assess the strategic value, like how the app improves customer engagement and retention, which can be quantified by looking at customer lifetime value. Additionally, I’d consider the value of data generated by the app for personalized marketing or risk assessment.”

14. Let’s say we’re comparing two machine learning algorithms. In which case would you use a bagging algorithm versus a boosting algorithm? Give an example of the tradeoffs between the two.

This tests your understanding of advanced machine learning techniques and their application in financial contexts, especially with complex datasets and in credit risk prediction.

Highlight the key differences and provide relevant examples where you would employ each method.

“Bagging, like in a Random Forest, is robust against overfitting and works well with complex datasets. However, it might not perform as well when the underlying model is overly simple. Boosting, exemplified by algorithms like XGBoost, often achieves higher accuracy but can be prone to overfitting, especially with noisy data. It’s also typically more computationally intensive.”

15. How would you address and rectify biases in a financial dataset?

Addressing biases in a financial dataset demonstrates your ability to ensure data integrity in relevant business scenarios.

Discuss the statistical techniques you would employ to detect anomalies and the need for thorough data cleaning. Mention the significance of using diverse datasets to train models and regularly updating them with new data to reduce bias over time.

“In a financial context, biases in datasets can lead to inaccurate models and unfair outcomes. To address this, I’d first conduct an exploratory data analysis to identify potential anomalies. For example, if we’re analyzing loan approval data, we need to ensure it doesn’t inherently favor certain demographic groups. I’d use techniques like stratified sampling to ensure representative data and employ algorithms that are less susceptible to biases.”

16. Let’s say you are tasked with building a decision tree model to predict if a borrower will pay back a personal loan. How would you evaluate whether using a decision tree is the correct model? Let’s say you move forward with the decision tree model. How would you evaluate the performance of the model before deployment and after?

Evaluating and implementing a decision tree model for loan repayment prediction is a typical case study that emulates strategic challenges that JP Morgan is trying to solve.

Explain that decision trees are great for their simplicity and interpretability, which is crucial in banking for regulatory compliance and explainability. However, they can be prone to overfitting. Assess whether the dataset has features well-suited for a decision tree and if the model’s simplicity aligns with the complexity of the problem.

“For evaluation, I’d focus on metrics like recall to minimize false negatives, as incorrectly predicting a default could be costly. Pre-deployment, I’d use a portion of the data to test the model, and post-deployment, I’d regularly compare the model’s predictions with actual loan outcomes, adjusting as necessary to ensure accuracy and fairness.”

17. What are the benefits of feature scaling in a logistic regression model?

This is asked to assess your understanding of data preprocessing and its impact on model accuracy and performance, crucial for data-driven financial decision-making.

Focus on how feature scaling aids in faster convergence during training, ensures uniformity in feature influence, and enhances the interpretability of model coefficients. Talk about the practical implications of these benefits.

“Feature scaling standardizes the range of independent variables, leading to faster convergence during optimization. For example, in a credit scoring model at JP Morgan, if income is in thousands and age is in years, without scaling, income would disproportionately influence the model. By scaling, we ensure each feature contributes proportionally.”

18. We are looking into creating a new partner card (think Starbucks-Chase credit card or Whole Foods-Chase credit card). You have access to all of our customer spending data. How would you determine what our next partner card should be?

This tests how you’d leverage analytics for strategic business decisions.

Discuss using customer spending data to identify trends and preferences. Elucidate the importance of clustering or segmentation techniques to understand customer behavior.

“I’d first analyze customer spending patterns by segmenting them based on spending categories, like groceries, dining, travel, etc. For example, if there’s a significant portion of customers with high spending in the hospitality sector, a hotel chain could be a suitable partner. Additionally, I’d look into the customer demographics and geographical data to ensure the chosen partner aligns with our customer base’s preferences and location.”

19. How would you explain Linear Regression to a non-technical person?

Data Scientists participate in cross-functional teams and projects at JP Morgan, so you need to have excellent communication skills as well as robust technical understanding.

Focus on explaining Linear Regression as a way to understand relationships between variables.

“Imagine you’re looking at the relationship between the amount of time you spend studying and your exam scores. Linear Regression is essentially drawing a straight line through a set of points on a graph where each point represents a different amount of study time and the corresponding exam score. This line helps us predict, for example, what score you might expect if you studied for a certain number of hours.”

20. JP Morgan has begun a new email campaign. You are given tables detailing users’ visits to the site and timestamps of when emails were sent to users. How would you measure the success of this campaign?

Answering this well demonstrates your ability to apply Data Science to marketing effectiveness.

Focus on establishing a clear connection between email sent times and user site visits. Highlight the importance of A/B testing and control groups to isolate the effect of the emails.

“I’d first link the timestamps of emails sent with users’ subsequent site visits. A significant increase in visits shortly after emails are sent, compared to typical visit rates, would indicate a positive impact. I’d also recommend an A/B test, where one group receives the emails and another similar group doesn’t. Comparing these groups’ behaviors provides a clearer picture of the campaign’s effectiveness.”

21. Write a function called find_bigrams that takes a sentence or paragraph of strings and returns a list of all its bigrams in order.

This question might be asked in a JP Morgan Data Scientist interview to assess a candidate’s ability to manipulate and process text data, which is essential for tasks like sentiment analysis, customer feedback analysis, and natural language processing in financial documents.

To parse them out of a string, we need to split the input string first. We would use the Python function .split() to create a list with each word as an input. Create another empty list that will eventually be filled with tuples.

Then, once we’ve identified each word, we need to loop through k-1 times (if k is the number of words in a sentence) and append the current word and subsequent word to make a tuple. This tuple gets added to a list that we eventually return.

“Bigrams are pairs of consecutive words in a string, which are useful in natural language processing. To find bigrams, I would start by splitting the string into words using Python’s .split() method and converting them to lowercase for consistency. Then, I would iterate through the list, forming bigrams by pairing each word with the next and storing these pairs as tuples in a list. Finally, I would return this list of bigrams. This approach demonstrates my understanding of both the concept and the practical implementation.”

22. You are given a string that represents some floating-point number. Write a function, digit_accumulator, that returns the sum of every digit in the string.

This question might be asked in a JP Morgan Data Scientist interview to evaluate your problem-solving skills, attention to detail, and programming proficiency. It tests your ability to handle numerical data within a string, which is important for data cleaning and preprocessing tasks.

Start by iterating through each character in the string. For each character, check if it is a digit. If it is, convert it to an integer and add it to a running total. This approach allows you to ignore non-digit characters such as the decimal point and accumulate the sum of all digits efficiently.

“To solve the problem of summing every digit in a string representing a floating-point number, I would iterate through each character in the string and check if it is a digit by seeing if it is in ‘0123456789’. If it is, I would convert it to an integer and add it to an accumulator variable. This method ensures that non-digit characters are ignored, and the final value of the accumulator will be the sum of all the digits in the string.”

How to Prepare for a Data Scientist Interview at JP Morgan

Here are some tips to help you excel in your interview:

Study the Company and Role

Understand the basics of banking, investment, risk management, and the financial products JP Morgan deals with. Follow current trends in the finance industry and how Data Science is applied.

You can also read Interview Query members’ experiences on our discussion board for insider tips and first-hand information. Visit JP Morgan’s page on their hiring process for detailed information.

Understand the Fundamentals

Brush up on core Data Science topics like statistics, Machine Learning algorithms, data preprocessing, and model evaluation. Be comfortable with Python or R, SQL, and the Python libraries that are commonly used for Machine Learning and statistical modeling, like pandas, scikit-learn, and TensorFlow.

For further practice, refer to our popular guide on quantitative interview questions , or practice some cool fintech projects in machine learning to bolster your resume.

If you need further guidance, we also have a tailored Data Science Learning Path covering core topics and practical applications.

Prepare Behavioral Interview Answers

Soft skills such as collaboration and adaptability are paramount to succeeding in any job, especially Data Science roles where you’ll need to coordinate with teams from non-technical backgrounds as well as stakeholders from different geographies.

To test your current preparedness for the interview process, try a mock interview to improve your communication skills.

What is the average salary for a Data Science role at JP Morgan?

Average Base Salary

Average Total Compensation

View the full Data Scientist at Jpmorgan Chase & Co. salary guide

The average base salary for a Data Scientist at JP Morgan is US$128,435 , making the remuneration competitive for prospective applicants.

For more insights into the salary range of Data Scientists at various companies, check out our comprehensive Data Scientist Salary Guide .

Where can I read more discussion posts on the JP Morgan Data Science role here in Interview Query?

Here is our discussion board where Interview Query members talk about their JP Morgan interview experience. You can also use the search bar to look up the general Data Science interview experience to gain insights into other companies’ interview patterns.

Are there job postings for JP Morgan Data Science roles on Interview Query?

We have jobs listed for Data Science roles in JP Morgan, which you can apply for directly through our job portal . You can also have a look at similar roles that are relevant to your career goals and skill set.

In conclusion, succeeding in a JP Morgan Data Science interview requires not only a strong foundation in coding and algorithms but also the ability to apply them to real-world financial problems, and the skill to communicate your findings to business stakeholders.

If you’re considering opportunities at other companies, check out our Company Interview Guides . We cover a range of similar companies, so if you are looking for Data Science positions in financial or banking firms, you can check our guides for Citi , Morgan Stanley , Wells Fargo , and more.

For other data-related roles at JP Morgan, consider exploring our Business Analyst , Machine Learning Engineer , Product Analyst , and similar guides in our main JP Morgan Chase interview guide .

With diligent preparation and a solid interview strategy, you can confidently approach the interview and showcase your potential as a valuable employee to JP Morgan Chase. Check out more of our content here at Interview Query, and we hope you’ll land your dream role very soon!

Please update your browser.

- Careers Brand

- JPMC Careers

- Careers in United States

- English Master

- Careers Home

- Student & Graduate Careers

- Jobs, Student Programs & Internships

AI & Data Science

Help us harness the power of data, analytics and insights.

Delivering excellence at the intersection of data science, research and industry expertise, our AI and Data Science teams go beyond where any bank has gone before. We develop technology and create solutions to help to solve some of the world's most interesting financial problems, while improving our customer and client experiences every day. Whether you’re working with artificial intelligence, big data, machine learning, blockchain technology or robotics, our entrepreneurial team environment challenges you to push the limits of your expertise in the pursuit of impactful and commercial real-world applications.

Program information

Learn More About Our AI & Data Science Internship Opportunities

What you'll do

Who we're looking for

What we offer

You'll apply the latest Data Science techniques to our unique data assets while collaborating directly with traders and salespeople to drive the data-led transformation of our businesses.

Depending on your area of interest, AI & Data Science Interns will be placed on one of the following teams:

- Machine Learning Centre of Excellence : Join a world-class machine learning team that continually advances state-of-the-art methods to solve a wide range of real-world financial problems by leveraging JPMorgan Chase’s vast datasets. With this unparalleled access to data, a remit that spans all of the firm’s lines of business, and history of setting the standard for deep learning and RL based solutions in NLP, time series, speech analytics and more, the team is transforming how the financial industry operates.

- AI Research : Explore cutting-edge research in the fields of AI and Machine Learning, as well as related fields like Cryptography, to develop solutions that are most impactful to J.P. Morgan’s clients and businesses. The team works closely with the QR and Data Analytics teams across the firm, and partners with leading academic and research institutions around the world on areas of mutual interest.

- Applied AI & Machine Learning : Combine machine learning techniques with unique data assets to optimize business decisions. Develop tools to leverage machine learning and deep learning models to solve problems in areas like Speech Recognition, Natural Language Processing and Time Series predictions.

- Asset Management : Provide quantitative solutions to asset allocation and portfolio construction.

Valued qualities

For our internship roles we’re looking for those enrolled in an undergraduate or graduate degree program in math, sciences, engineering, computer science or other quantitative fields.

We are seeking colleagues with excellent analytical, quantitative and problem solving skills and demonstrated research ability. We value strong communication skills and the ability to present findings to a non-technical audience. We do not require you to have prior experience in financial markets.

• Knowledge of machine learning / data science theory, techniques and tools

• Programming experience with one or more of Python, Matlab, C++, Java, C#

• Excellent analytical quantitative and problem solving skills and demonstrated research ability

•Strong communication skills and the ability to present findings to a non-technical audience

Your professional growth and development will be supported throughout the internship program via project work related to your academic and professional interests, mentorship, engaging speaker series with senior leaders and more.

Through research and hands-on work experience, you'll develop solutions and technology that help to solve the world's most interesting financial problems, and improve and protect our customer and client experiences every day. You'll be supported by your teammates, tutors and mentors throughout the internship experience.

Career Progression

The specialized knowledge and skills gained through the program will prepare you for a successful career at the firm. Top performing candidates may receive a full-time offer.

What we do

How we hire

You're now leaving J.P. Morgan

J.P. Morgan’s website and/or mobile terms, privacy and security policies don’t apply to the site or app you're about to visit. Please review its terms, privacy and security policies to see how they apply to you. J.P. Morgan isn’t responsible for (and doesn’t provide) any products, services or content at this third-party site or app, except for products and services that explicitly carry the J.P. Morgan name.

JPMorgan Chase: Digital transformation, AI and data strategy sets up generative AI

View full PDF

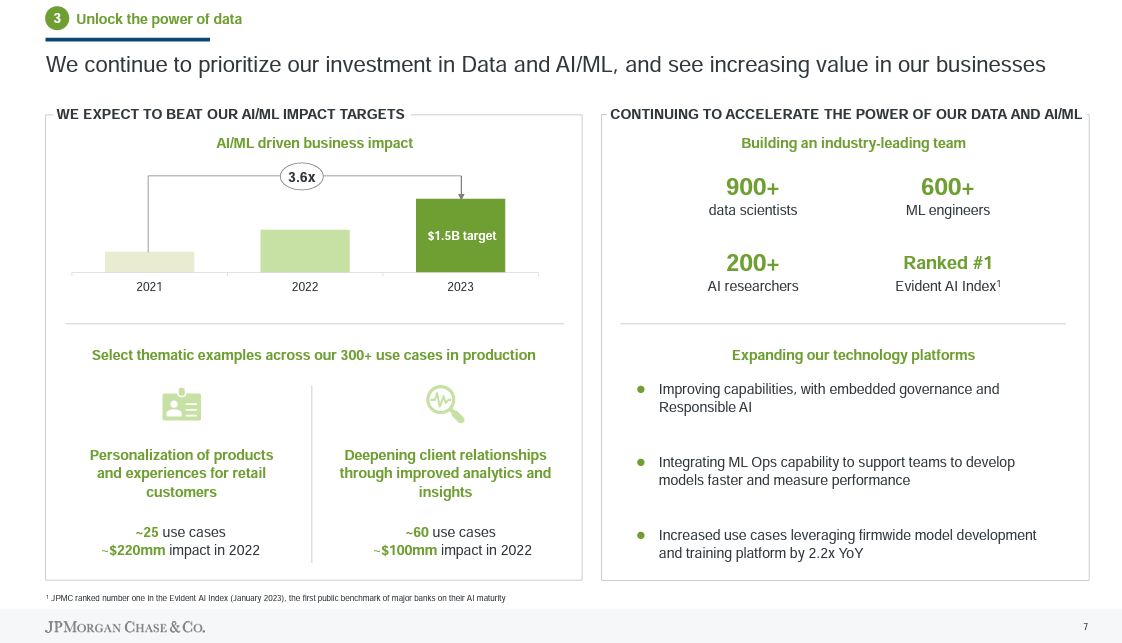

JPMorgan Chase will deliver more than $1.5 billion in business value from artificial intelligence and machine learning efforts in 2023 as it leverages its 500 petabytes of data across 300 use cases in production.

"We've always been a data driven company," said Larry Feinsmith, Managing Director and Head of Technology Strategy, Innovation, & Partnerships at JPMorgan Chase. Feinsmith, speaking with Databricks CEO Ali Ghodsi during a keynote at the company’s Data + AI Summit, said JPMorgan Chase has been continually investing in data, AI, business intelligence tools and dashboards.

Indeed, JPMorgan Chase said it will spend $15.3 billion on technology investments in 2023. JPMorgan Chase's technology budget has grown at a 7% compound annual growth rate over the last four years.

Feinsmith said the bank's AI/ML strategy is one of the big reasons JPMorgan Chase migrated to the public cloud. "If you look at our size and scale, the only way to deploy at scale is to do it through platforms," said Feinsmith. "Everyone has an opinion on data platforms, but you can efficiently move the data once and manage. Once you start moving data around it's highly inefficient and breaks the lineage."

JPMorgan Chase, a customer of Databricks, Snowflake and MongoDB, has multiple platforms, according to Feinsmith. It has an internal platform, JADE (JPMorgan Chase Advanced Data Ecosystem) for moving and managing data and one called Infinite AI for data scientists. "Equally as important as the data is the capabilities that surround that data," said Feinsmith, adding that data discovery, data lineage, governance, compliance and model lifecycle are critical.

According to Feinsmith, JPMorgan Chase's AI efforts start with a business focus with data scientists and AI/ML experts embedded into each business.

Feinsmith said JPMorgan Chase is leveraging streaming data and said he was a fan of Databricks' Lakehouse architecture and new AI features because it's easier to move and process data in one environment instead of two architectures, a data warehouse for business intelligence and a data lake for AI. JPMorgan deploys a central but federated data strategy and interoperability between data platforms is important. "Data has to be interoperable," Feinsmith told Ghodsi. "Not all of our data will wind up in Databricks. Interoperability is very important."

That comment rhymes with what other enterprise technology buyers have said. Despite a lot of talk about consolidating vendors--mostly from vendors looking to gain share--enterprise buyers want to keep options open. How JPMorgan Chase has approached its tech stack is instructive.

The digital transformation behind the AI

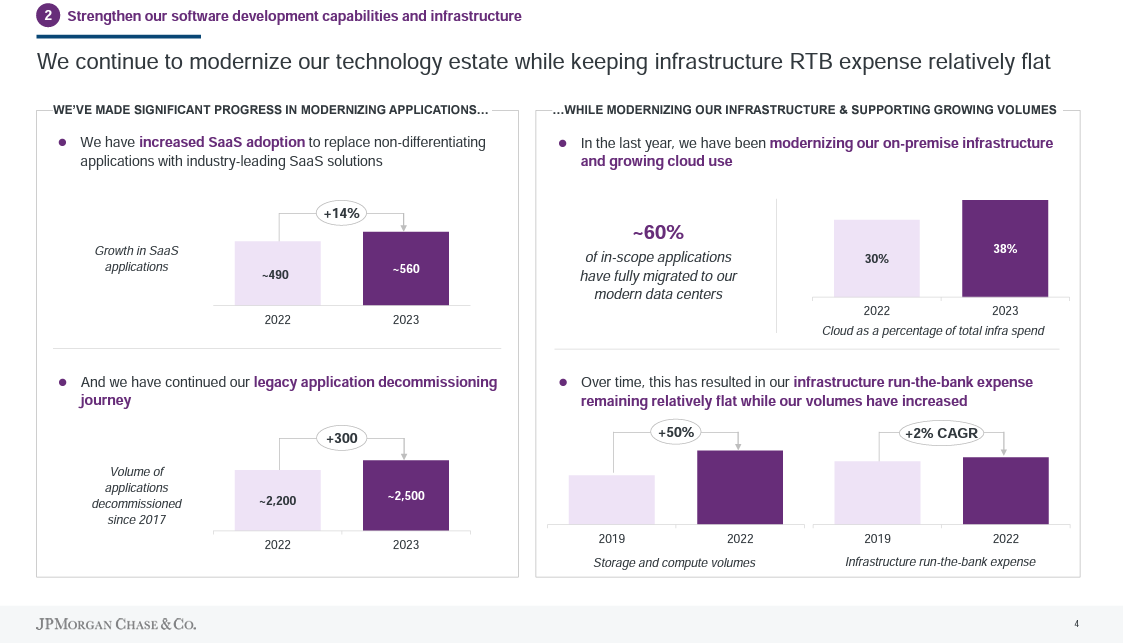

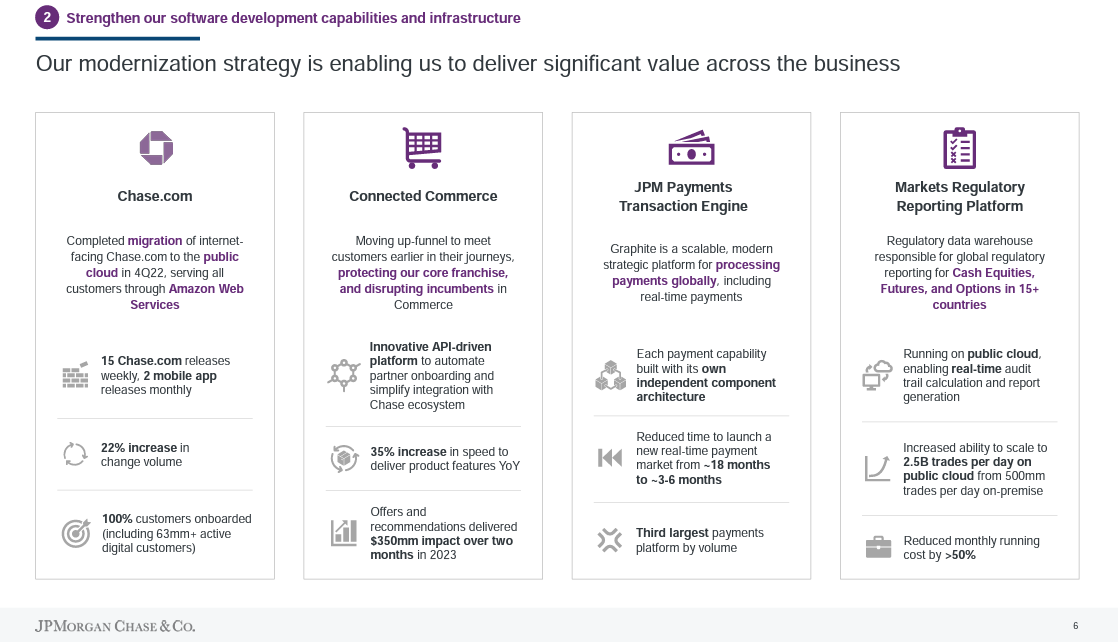

At JPMorgan Chase's Investor Day in May, Lori Beer, Global CIO at the bank, gave an overview of the bank's technology strategy. In 2022, JP Morgan launched a plan to deliver leading technology at scale with its team of 57,000 employees.

"Products and platforms need a strong foundation to be successful, and ours are underpinned by our mission to modernize our technology and practices," explained Beer. "We are already delivering product features 20% faster than last year, and we continue to modernize our applications, leverage software as a service and retire legacy applications."

JPMorgan Chase is moving to a multi-vendor public cloud approach while optimizing its owned data centers. The company is also embedding data and insights throughout the organization, said Beer. Those efforts will pave the way for large language models (LLMs) and other advances in the future.

"We have driven $300 million in efficiency through modern engineering practices and labor productivity, and we have developed a framework that enables us to identify further opportunities in the future. Our infrastructure modernization efforts have yielded an additional $200 million in productivity, driven by improved utilization and vendor rationalization," said Beer.

Here's a look at the key pillars of JP Morgan Chase's digital transformation.

Applications. Beer said the bank has decommissioned more than 2,500 legacy applications since 2017 and is focusing on modernizing software to deliver products faster. The bank has more than 560 SaaS applications, up 14% from 2022. By using industry-leading SaaS applications, Beer said it will be easier to scale new products to more than 290,000 employees.

Infrastructure modernization. Beer said:

"To date, we have moved about 60% of our in-scope applications to new data centers, which are 30% more efficient, and this translates to 16,000 fewer hardware assets. We are also migrating applications to utilize the benefit of public and private cloud. 38% of our infrastructure is now in the cloud, which is up 8 percentage points year-over-year. In total, 56% of our infrastructure spend is modern. Over the next three years, we have line of sight to have nearly 80% on modern infrastructure. Of the remainder, half are mainframes, which are highly efficient and already run in our new data centers."

JPMorgan Chase has been able to maintain infrastructure expenses flat even though compute and storage volumes have increased 50% since 2019, said Beer. One example is Chase.com is now being served through AWS and has an average of 15 releases a week.

Engineering. Beer said JPMorgan is equipping its 43,000 engineers with modern tools to boost productivity. JPMorgan Chase has adopted a framework to speed up the move from backlog to production via agile development practices.

Data and AI. Beer said:

"We have made tremendous progress building what we believe is a competitive advantage for JPMorgan Chase. We have over 900 data scientists, 600 machine learning engineers and about 1,000 people involved in data management. We also have a 200-person top notch AI research team looking at the hardest problems in the new frontiers of finance."

Specifically, Beer said AI is helping JPMorgan Chase deliver more personalized products and experiences to customers with $220 million in benefits in the last year. At JPMorganChase's Commercial Bank, AI provided growth signals and product suggestions for bankers. That move provided $100 million in benefits, said Beer.

The data mesh

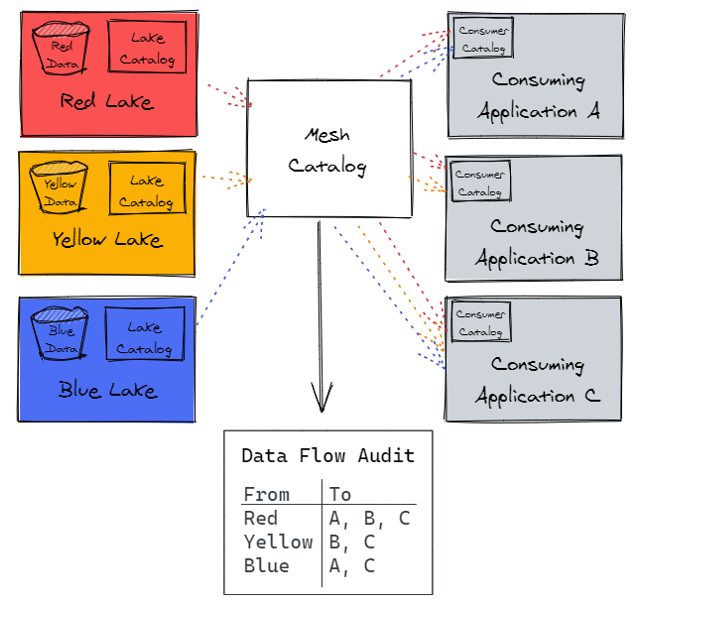

To capitalize on AI, JPMorgan Chase created a data mesh architecture that is designed to ensure data is shareable across the enterprise in a secure and compliant way. The bank outlined its data mesh architecture at a 2021 Data Mesh Learning meetup .

JPMorgan said its data approach is to define data products that are curated by people who understand the data and management requirements. Data products are defined as groups of data from systems that support the business. These data groups are stored in its product specific data lake. Each data lake is separated by its own cloud-based storage layer. JPMorgan Chase catalogs the data in each lake using technologies like AWS S3 and AWS Glue.

Data is then consumed by applications that are separated from each other and the data lakes. JPMorgan Chase said it makes the data lake visible to data users to query it.

At a high level, JPMorgan Chase said its approach will empower data product owners to manage and use data for decisions, share data without copying it and provide visibility into data sharing and lineage.

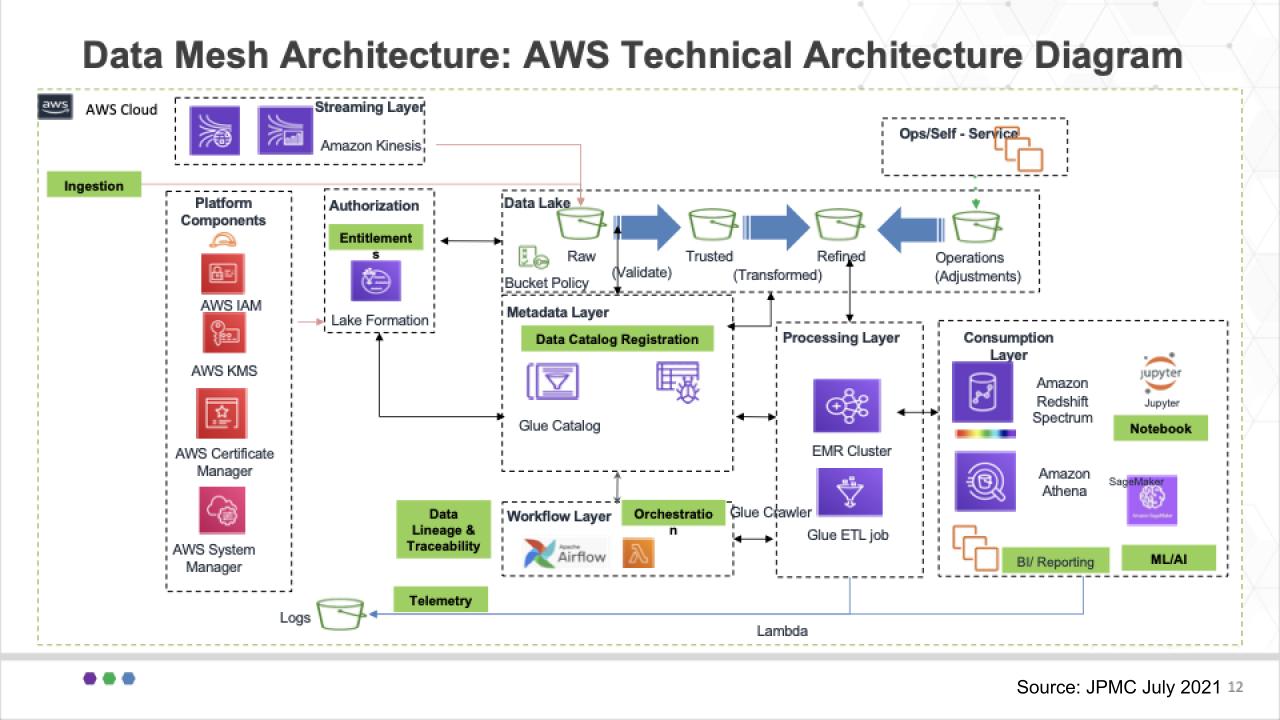

In a slide, this architecture looks like this.

According to JPMorgan Chase, its architecture keeps data storage bills down and ensures accuracy. Since data doesn't physically leave the data lake, JPMorgan Chase said it's easier to enforce decisions product owners make about their data and ensure proper access controls.

How JPMorgan Chase will address generative AI

Given JPMorgan Chase's data strategy and architecture, the bank can more easily leverage new technologies like generative AI. Feinsmith at the Databricks conference said JPMorgan Chase was optimistic about generative AI but said it's very early in the game.

"There's a lot of optimism and a lot of excitement about generative AI. Businesses all know about it and generative AI will make us more productive," said Feinsmith. "But we won't roll out generative AI until we can do it in a responsible way. We won't roll it out until it's done in an entirely responsible manner. It's going to take time."

In the meantime, JPMorgan Chase's Feinsmith said the bank is working through the generative AI risks. The promise for JPMorgan Chase is obvious: Take 500 petabytes of data, train it, make it valuable and then add value to open-source models.

Beer outlined the JPMorgan Chase approach during the bank's Investor Day in May.

"We couldn't discuss AI without mentioning GPT and large language models. We recognize the power and opportunity of these tools and are committed to exploring all the ways they can deliver value for the firm. We are actively configuring our environment and capabilities to enable them. In fact, we have a number of use cases leveraging GPT4 and other open-source models currently under testing and evaluation.”

With Databricks, MongoDB and Snowflake all adding generative AI and large language model (LLMs) capabilities to the data stack, enterprises will have the tools when ready.

JPMorgan Chase has named Teresa Heitsenrether its chief data and analytics officer, a central role overseeing the adoption of AI across the bank. Heitsenrether oversees data use, governance and controls with the aim of harnessing AI technologies to effectively and responsibly develop new products, improve productivity and enhance risk management.

Heitsenrether is a 35-year veteran at JP Morgan Chase and previously was Global Head of Securities Services from 2015 to 2023.

Beer said explained JPMorgan Chase’s approach to responsible AI:

“We take the responsible use of AI very seriously, and we have an interdisciplinary team, including ethicists, data scientists, engineers, AI researchers and risk and control professionals helping us assess the risk and build appropriate controls to prevent unintended misuse, comply with regulation, and promote trust with our customers and communities. We know the industry is making remarkably fast progress, but we have a strong view that successful AI is responsible AI."

Business Research Themes

Memberships.

- About our Research

- Research Index

- Research by Role

- Research by Theme

- Custom Research

- Speaking Engagements

- Technology Acquisition

| Thank you for Signing Up |

You are using an outdated browser. Please upgrade your browser to improve your experience.

UPDATED 12:09 EDT / JULY 10 2021

A new era of data: a deep look at how JPMorgan Chase runs a data mesh on the AWS cloud

BREAKING ANALYSIS by Dave Vellante

A new era of data is upon us.

The technology industry generally and the data business specifically are in a state of transition. Even our language reflects that. For example, we rarely use the phrase “big data” anymore. Rather we talk about digital transformation or data-driven companies.

Many have finally come to the realization that data is not the new oil — because unlike oil, the same data can be used over and over for different purposes. But our language is still confusing. We say things like “data is our most valuable asset,” but in the same sentence we talk about democratizing access and sharing data. When was the last time you wanted to share your financial assets with your co-workers, partners and customers?

In this Breaking Analysis we want to share our assessment of the state of the data business. We’ll do so by looking at the data mesh concept and how a division of a leading financial institution, JPMorgan Chase, is practically applying these relatively new ideas to transform its data architecture for the next decade.

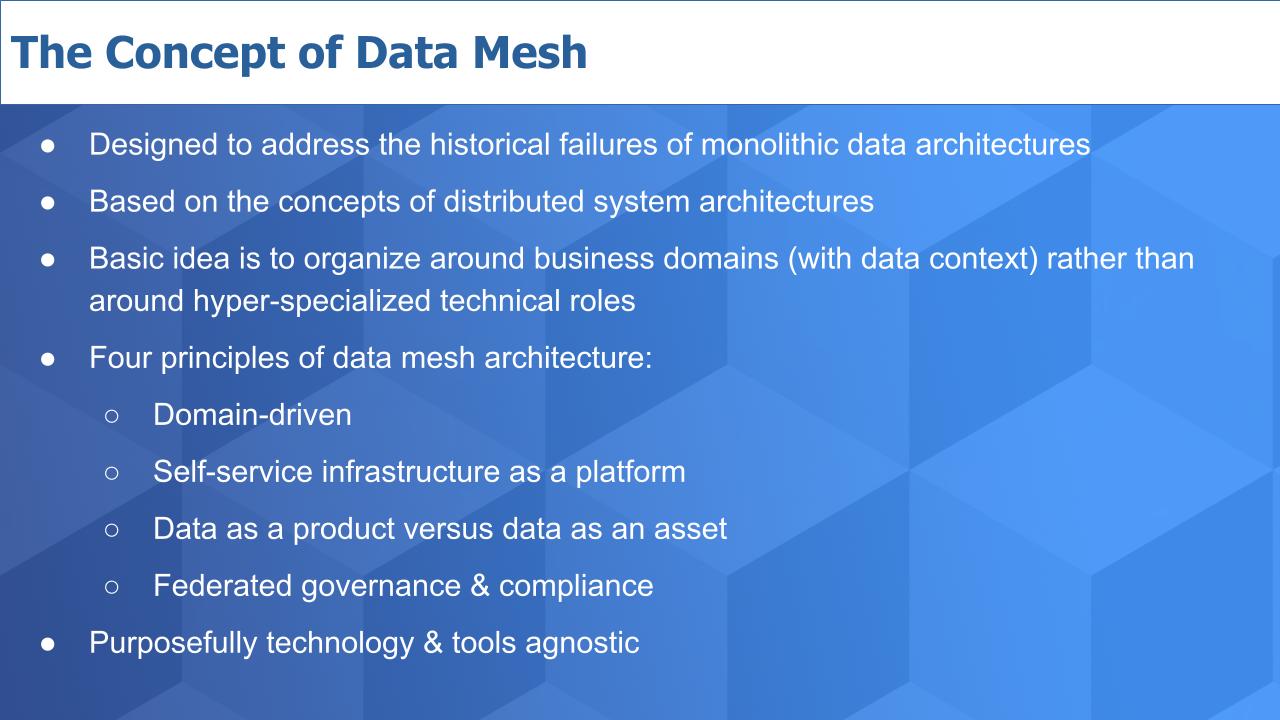

What is a data mesh?

As we’ve previously reported , data mesh is a concept and set of principles introduced in 2018 by Zhamak Dehghani, director of technology at ThoughtWorks Inc. She created this movement because her clients, some of the leading firms in the world, had invested heavily in predominantly monolithic data architectures that failed to deliver desired results.

Her work went deep into understanding why her clients’ investments were not delivering desired results. Her main conclusion was the prevailing method of trying to force our data into a single monolithic architecture is an approach that is fundamentally limiting.

One of the profound ideas of data mesh is the notion that data architectures should be organized around business lines with domain context. That the highly technical and hyperspecialized roles of a centralized cross-functional team are a key blocker to achieving our data aspirations.

This is the first of four high-level principles of data mesh, specifically:

- That the business domain should own the data end-to-end, rather than have to go through a centralized technical team;

- A self-service platform is fundamental to a successful architectural approach where data is discoverable and shareable across an organization and ecosystem;

- Product thinking is central to the idea of data mesh – in other words, data products will power the next era of data success;

- Data products must be built with governance and compliance that is automated and federated.

No. 3 is one of the most significant and difficult to understand. Most discussion around data value in the past decade have centered around using data to create actionable insights: Data informs humans so they can make better decisions. We see this as a necessary but insufficient condition for successful data transformations in the 2020s. In other words, if the end game is better insights we see that as an important but evolutionary extension of reporting. Rather, we believe that building data products that can be monetized – either to cut costs directly or, more importantly, generate new revenue, as the more interesting (and now attainable) target goal.

There’s lots more to the data mesh concept and there are tons of resources on the Web to learn more, including an entire community that has formed around data mesh. But this should give you a basic idea.

Data mesh is tools-agnostic

One other notable point is that in observing Zhamak’s work, she has deliberately avoided discussions around specific tooling, which has frustrated some folks. Understandably, because we all like to have references that tie to products and companies. This has been a two-edged sword in that on the one hand, it’s good, because data mesh is designed to be successful independent of the tools that are chosen. On the other hand, it has led some folks to take liberties with the term data mesh and claim “mission accomplished” when their solution may be more marketing than reality.

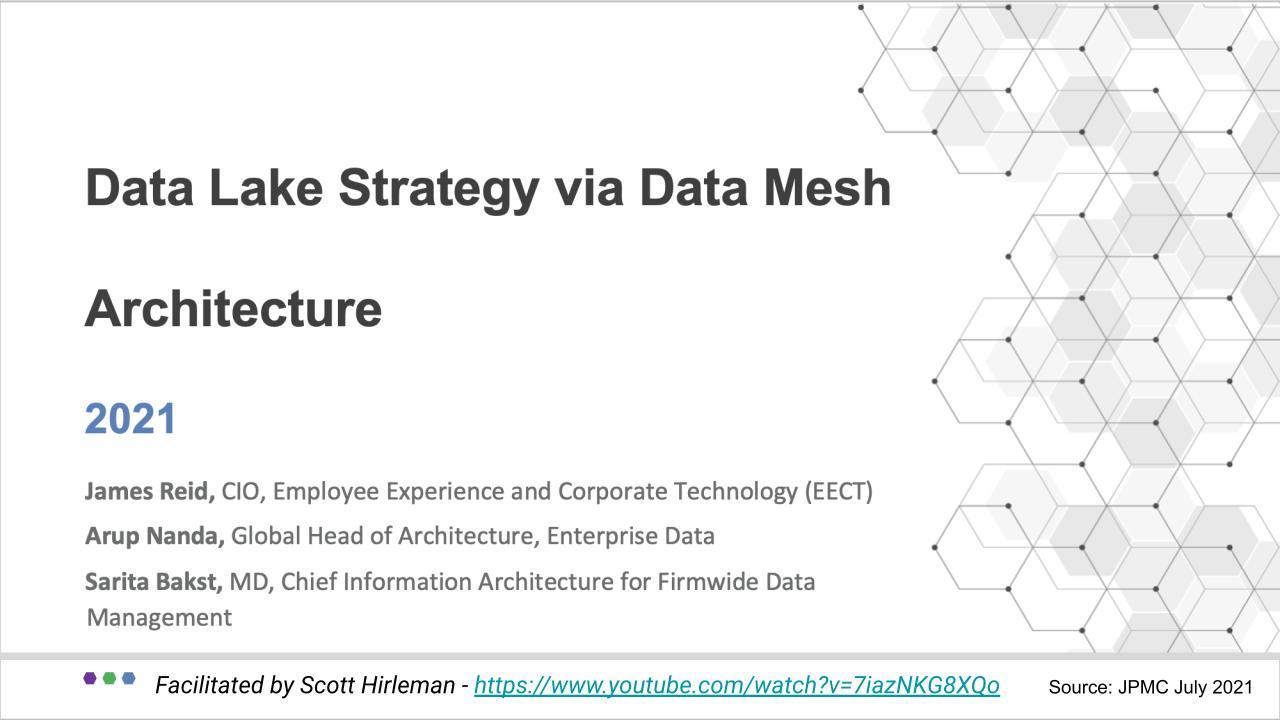

JPMorgan Chase and a data mesh journey

We were really interested to see just this past week, a team from JPMC held a meetup to discuss what it called “Data Lake Strategy via Data Mesh Architecture.” We saw the name of the session and thought, “That’s a weird title.” And we wondered if they are just taking their legacy data lakes and claiming they’re now transformed into a data mesh?

But in listening to the presentation the answer is a definitive “No – not at all.” A gentleman named Scott Hirleman organized the session that comprised the three JPMC speakers shown above: James Reid, a divisional chief information officer, technologist and architect Arup Nanda and information architect Sarita Bakst.

This was the most detailed and practical discussion we’ve seen to data about implementing a data mesh. And this is JPMC. We know it was an early Hadoop adopter, an extremely tech-savvy company, and it has invested probably billions in the past decade on data across this massive company. And rather than dwell on the downsides of its big data past, we were pleased to see how it’s evolving its approach and embracing new thinking around data mesh.

In this post, we’re going to share some of the slides they used and comment on how it dovetails into the concept of data mesh as we understand it, and dig a bit into some of the tooling that is being used by JPMorgan, specifically around the Amazon Web Services cloud.

It’s all about business value

JPMC is in the money business and in that world, it’s all about the bottom line.

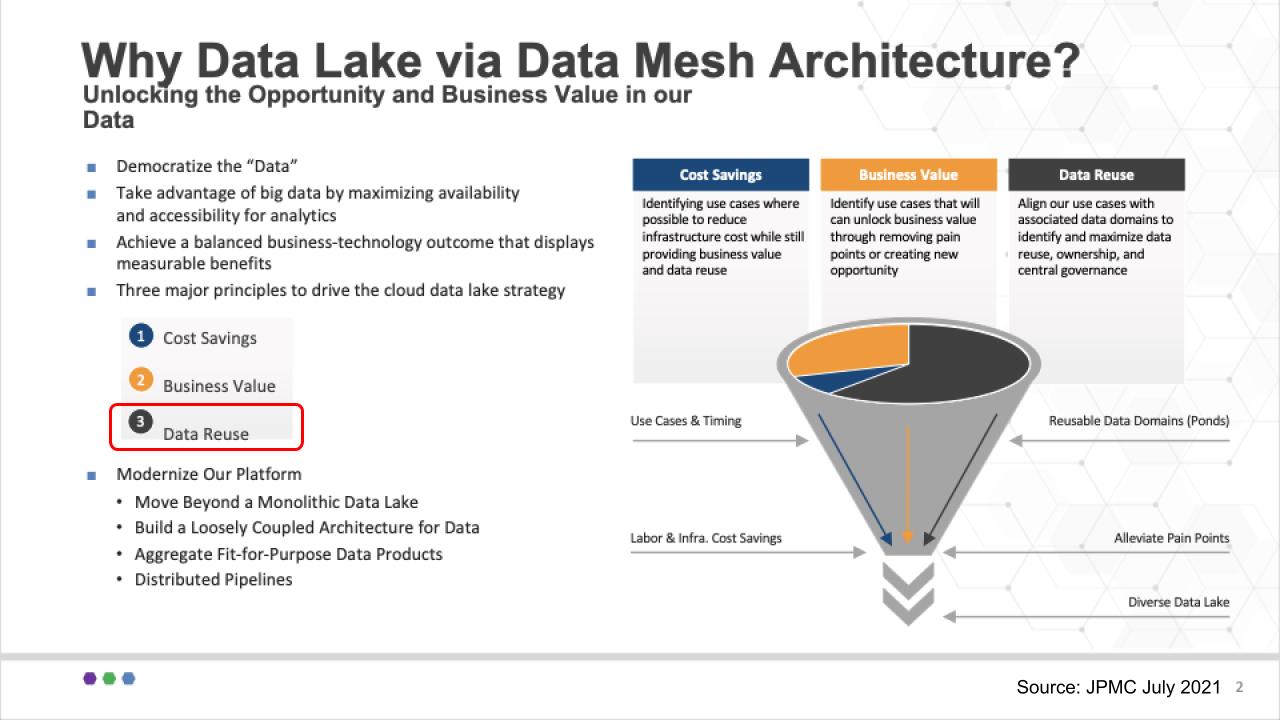

James Reid, the CIO, showed the slide above and talked about the team’s overall goals, which centered on a cloud-first strategy to modernize the JPMC platform. He focused on three factors of value: No. 1: Cutting costs – always, of course. No. 2: Unlocking new opportunities or accelerating time to value. And we really like No. 3, which we’ve highlighted in red: Data re-use as a fundamental value ingredient. And his commentary here was all about aligning with the domains, maximizing data reuse and making sure there’s appropriate governance.

Don’t get caught up in the term data lake – we think that’s just how JP Morgan communicates internally. It’s invested in the data lake concept – and it likes water analogies at JPMC. They use the term data puddles for example, which are single-project data marts and data ponds which comprise multiple puddles that can can feed into data lakes.

As we’ll see, JPMC doesn’t try to force a single version of the truth by putting everything into a monolithic data lake. Rather, it enables the business lines to create and own their own data lakes that comprise fit-for-purpose data products. And it uses a catalog of metadata to track lineage and provenance so that when it reports to regulators, it can trust that the data it’s communicating are current, accurate and consistent with previous disclosures.

Cloud-first platform that recognizes hybrid

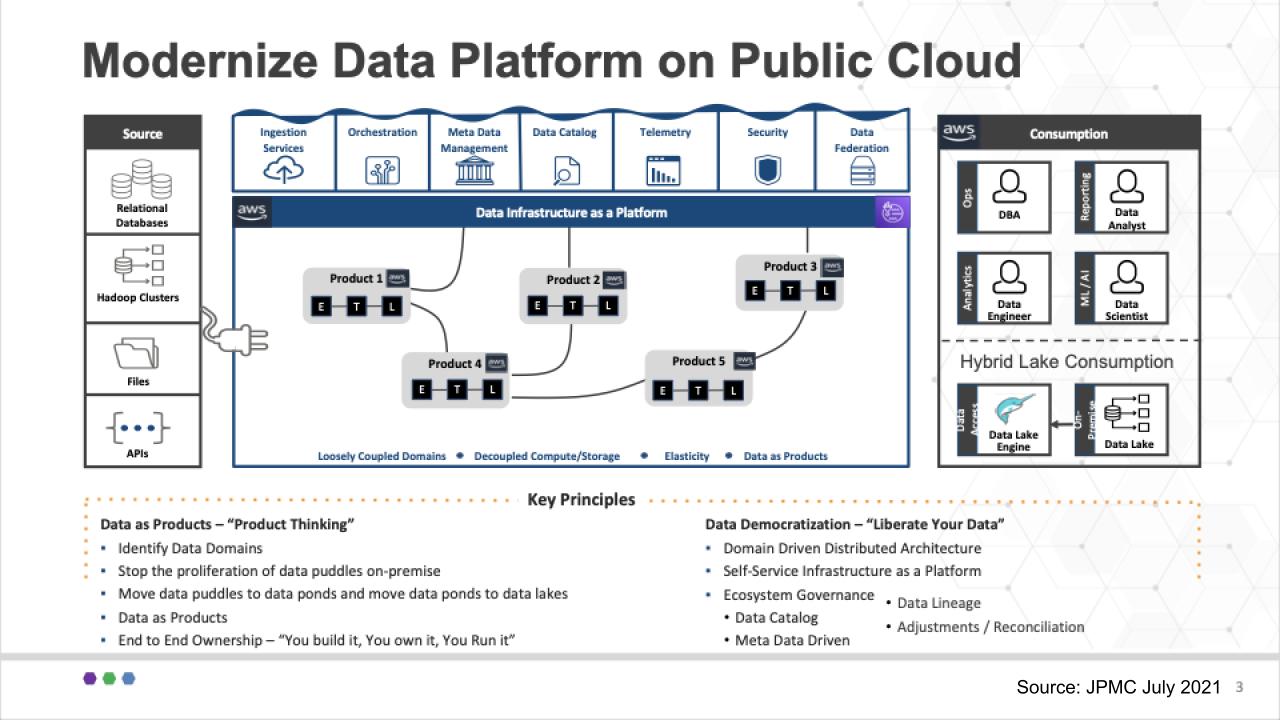

JPMC is leaning into public cloud and adopting agile methods and microservices architectures, and it sees cloud as a fundamental enabler. But it recognizes that on-premises data must be part of the data mesh equation.

Below is a slide that starts to get into some of the generic tech in play:

We’d like to make a couple of points here that tie back to Zhamak Deghani’s original concept.

The first is that unlike many data architectures, this diagram puts data as products right in the fat middle of the chart. The data products live in business domains and are at the heart of the architecture. The databases, Hadoop clusters, files and APIs on the left hand side serve the data product builders.

The specialized roles on the right-hand side – the DBAs, data engineers, data scientists and data analysts serve the data product builders. Because the data products are owned by the business they inherently have context. This is nuanced but an important difference from most technical data teams that are part of a pipeline process but lack business and domain knowledge.

And you can see at the bottom of the slide, the key principles include domain thinking and end-to-end ownership of the data products – build, own, run/manage.

At the same time, the goal is to democratize data with self-service as a platform.

One of the biggest points of contention on data mesh is governance and as Sarita Bakst said on the meetup “metadata is your friend.” She said, “This sounds kinda geeky” – but we agree, it’s vital to have a metadata catalog to understand where data resides, the data lineage and overall change management.

So to us, this passed the data mesh stink test pretty well.

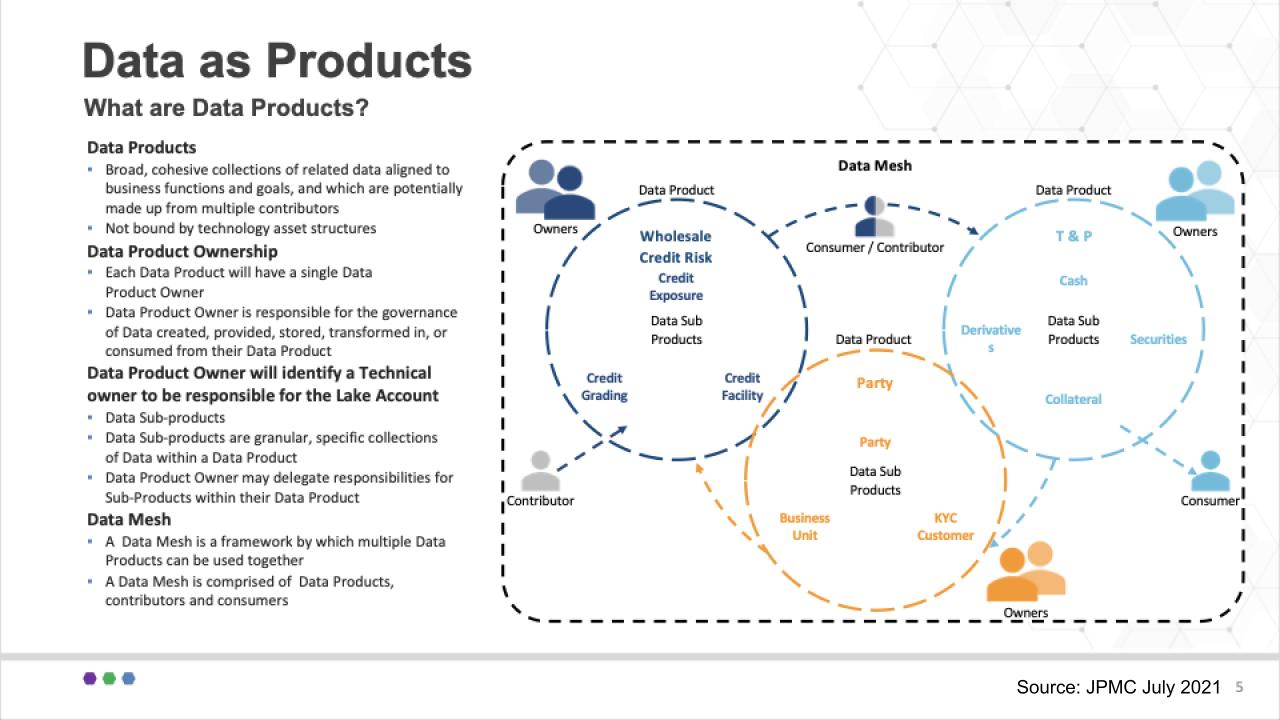

Data as products: Don’t try to boil the ocean

The presenters from JPMC said one of the most difficult things for them was getting their heads around data products. They spent a lot of time getting this concept working. Below is one of the slides they used to describe their data products as it related to their specific segment of the financial industry:

The team stressed that a common language and taxonomy is very important in this regard. It said, for example, it took a lot of discussion and debate to define what is a transaction. But you can see at a high level, three product groups around Wholesale Credit Risk, Party, and Trade and Position Data as Products. And each of these can have subproducts (e.g. KYC under Party). So a key for JPMC was to start at a high level and iterate to get more granular over time.

Lots of decisions had to be made around who owns the products and subproducts. The product owners had to defend why that product should exist, what boundaries should be put in place and what data sets do and don’t belong in the product — and which subproducts should be part of these circles. No doubt those conversations were engaging and perhaps sometimes heated as business line owners carved out their respective turf.

The team didn’t say this specifically, but tying back to data mesh, each of these products, whether in a data lake, data hub, data pond, data warehouse or data puddle, is a node in the global data mesh.

Supporting this notion, Sarita Bakst said this should not be infrastructure-bound; logically, any of these data products, whether on-prem or in the cloud, can connect via the data mesh.

So again we felt like this really stayed true to the data mesh concept.

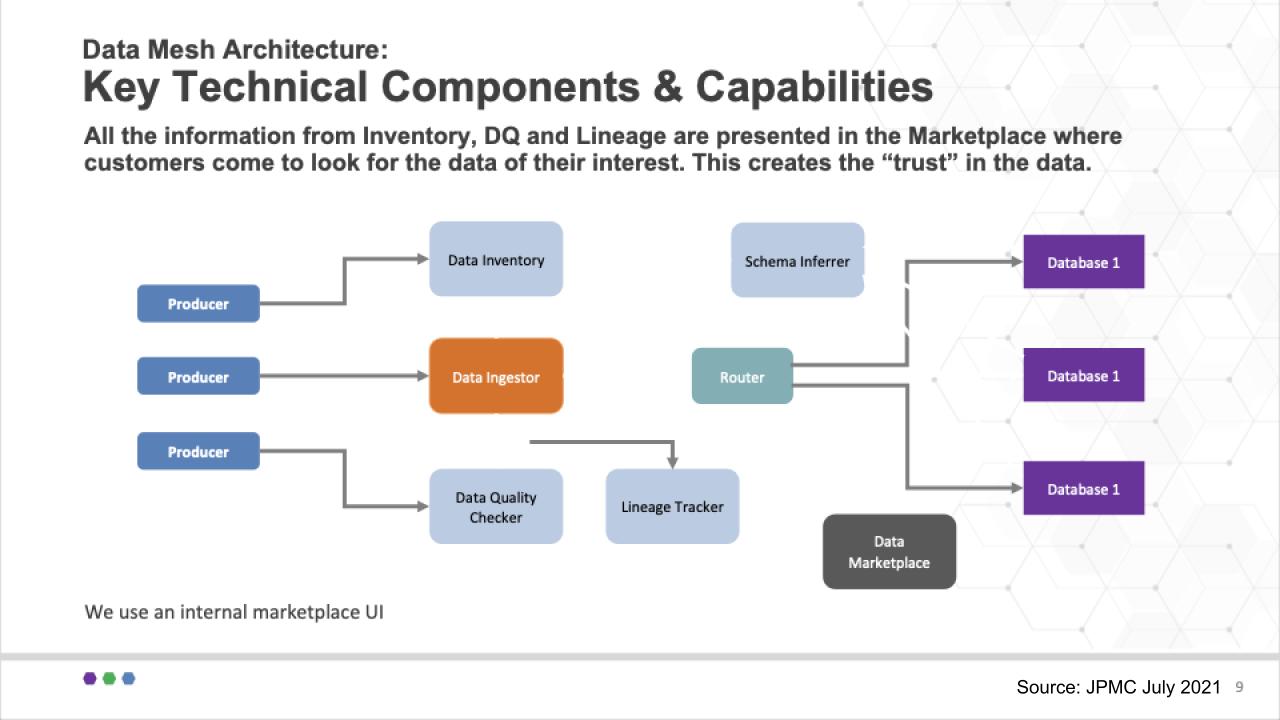

Key technical considerations

This chart below shows a diagram of how JPMorgan thinks about the problem from a technology point of view:

Some of the challenges JPMC had to consider was how to write to various data stores, can you/how can you move data from one data store to another? How can data be transformed? Where is data located? Can the data be trusted, how can it be easily accessed, who has the right to access the data? These are problems that technology can solve.

To address these issues, Arup Nanda explained that the heart of the slide above is the Data Ingestor (versus ETL or extract/transform/load). All data producers/contributors send data to the Ingestor. The Ingestor then registers the data so it’s in the data catalog, it does a data quality check and it tracks the lineage. Then data is sent to the router, which persists the data based on the best destination as informed by the registration.

This is designed to be flexible. In other words, the data store for a data product is not pre-determined and fixed. Rather it’s decided at the point of inventory and that allows changes to be easily made in one place. The router simply reads that optimal location and sends it to the appropriate data store.

The Schema Inferrer is used when there is no clear schema on write. In this case the data product is not allowed to be consumed until the schema is inferred and settled. The data in this case goes to a raw area and the Inferrer determines the proper schema and then updates the inventory system so that the data can be routed to the proper location and accurately tracked.

That’s a high-level snapshot of some of the technical workflow and how the sausage factory works in this use case. Very interesting and worth technical practitioners watching at least the technical section of this 83-minute video , which starts around 19 minutes in.

How JPMC leverages the AWS cloud for data mesh

Now let’s look at the specific implementation on AWS and dig into some of the tooling.

As described in some detail by Arup Nanda, the diagram above shows the reference architecture used by this group at JPMorgan. It shows all the various AWS services and components that support their data mesh approach.

Start with the Authorization block right underneath Kinesis. The Lake Formation is the single point of entitlement for data product owners and has a number of buckets associated with it – including the raw area we just talked about, a trusted bucket, a refined bucket and a bucket for any operational adjustments that are required.

Beneath those buckets you can see the Data Catalog Registration block. This is where the Glue Catalog resides and it reviews the data characteristics to determine in which bucket the router puts the data. If, for example, there is no schema, the data goes into the Raw bucket and so forth, based on policy.

And you can see the many AWS services in use here, identity, the EMR cluster from the legacy Hadoop work done over the years, Redshift Spectrum and Athena. JPMC uses Athena for single threaded workloads and Redshift Spectrum for nested types that can be queried independently of each other.

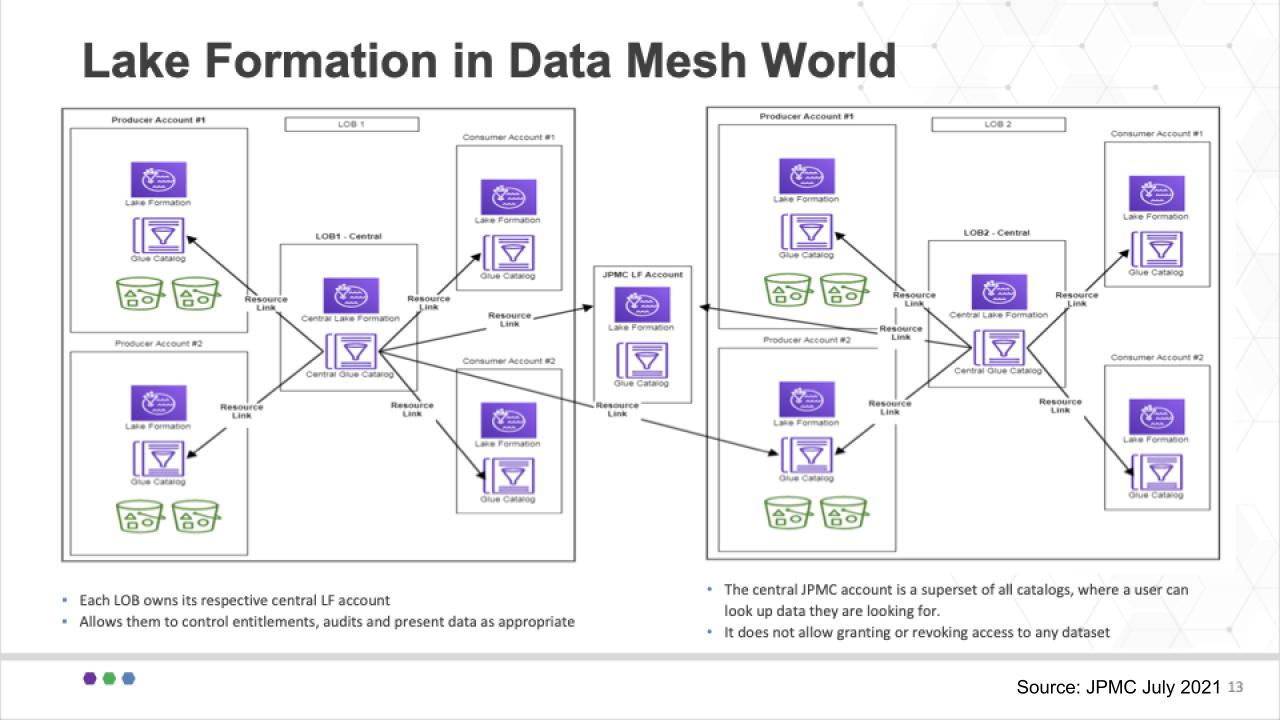

Now remember, very importantly, in this use case, there is not a single lake formation, rather multiple lines of business will be authorized to create their own lakes and that creates a challenge. In other words, how can that be done in a flexible manner to accommodate the business owners?

Note: Here’s an AWS-centric blog on how they recommend implementing data mesh

Enter data mesh

JPMC applied the notion of federated lake formation accounts to support its multiple lines of business. Each line of business can create as many data producer and consumer accounts as they desire and roll them up to their master line of business lake formation account shown in the center of each block. And they cross connect these data products in a federated model as shown below.

These all roll up into a master Glue Catalog as shown in the middle of the diagram so that any authorized user can find out where a specific data element is located. This superset catalog comprises multiple sources and syncs up across the data mesh.

So again this to us was a well thought out and practical application of data mesh. Yes it includes some notion of centralized management but much of that responsibility has been passed down to the lines of business. It does roll up to a single master catalog and that is a metadata management effort and seems compulsory to ensure federated and automated governance.

Importantly, at JPMC, the office of the chief data officer is responsible for ensuring governance and compliance throughout the federation.

Which vendors play in data mesh?

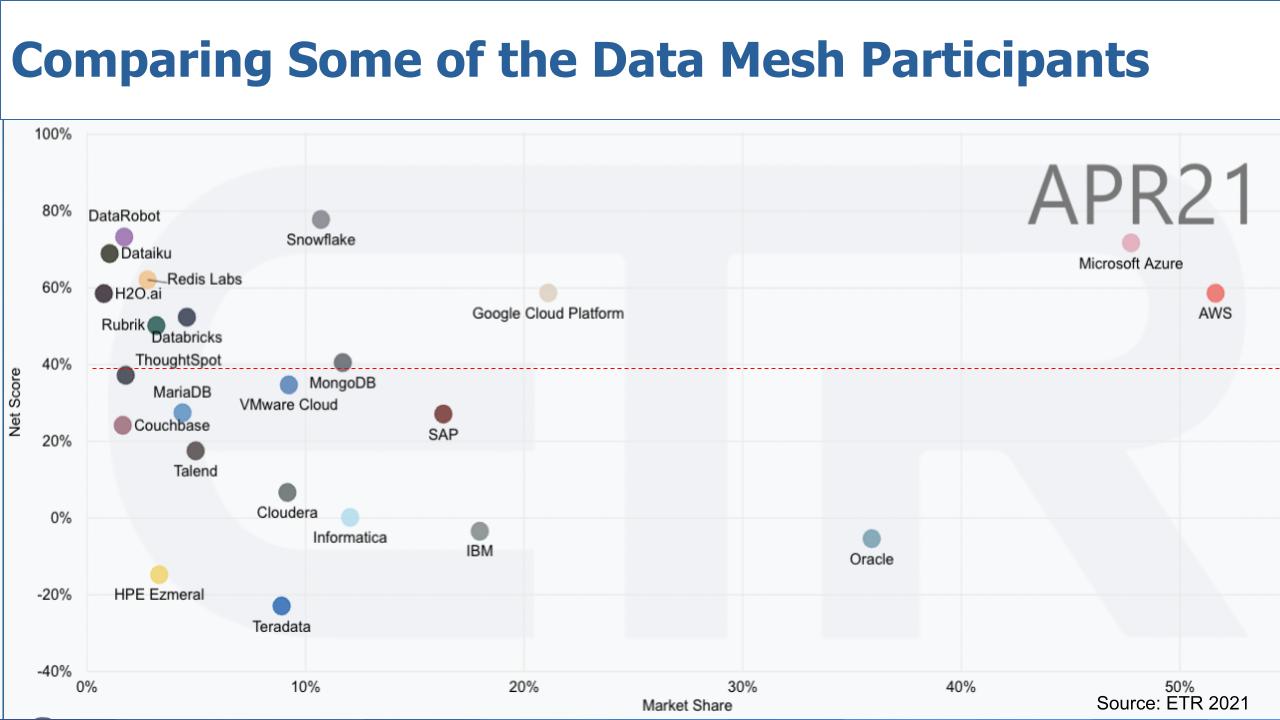

Let’s take a look at some of the suspects in this world of data mesh and bring in the ETR data .

Now, of course, ETR doesn’t have a data mesh category – and there’s no such thing as a data mesh vendor; you build a data mesh, you don’t buy it. So what we did is used the ETR data set to filter certain sectors to identify some of the companies that might contribute to the data mesh to see how they’re performing.

The chart above depicts a popular view we often like to share. It’s a two-dimensional graphic with Net Score or spending momentum on the vertical axis and Market Share or pervasiveness within the data set on the horizontal axis. And we’ve filtered the data on sectors such as analytics, data warehouse, etc, that reflected participation in data mesh.

Let’s make a few observations.

As is often the case, Microsoft Azure and AWS are almost literally off the charts with high spending velocity and a large presence in the market. Oracle Corp. also stands out because much of the world’s data lives inside Oracle databases – it doesn’t have the spending momentum, but the company remains prominent. You can see Google Cloud doesn’t have nearly the presence, but its momentum is elevated.

Remember, that red dotted line at 40% indicates our subjective view of what we consider a highly elevated spending momentum level.

A quick aside on Snowflake

Snowflake Inc. has consistently shown to be the gold standard in Net Score and continues to maintain highly elevated spending velocity in the Enterprise Technology Research data set. In many ways Snowflake with its data marketplace, data cloud vision and data-sharing approach fits nicely into the data mesh concept. Snowflake has used the term data mesh in its marketing, but in our view it lacks clarity and we feel like it’s still trying to figure out how to communicate what that really is.

We don’t see Snowflake as a monolithic architecture, but it’s marketing sometimes uses terms that allow one to infer legacy thinking. Our sense is this is actually customer-driven. What we mean is Snowflake customers are so used to monolithic architectural approaches and because Snowflake is so simple to use, it “paves the cowpath” and applies Snowflake to its legacy organizational structures and ways of thinking.

In reality, the value of Snowflake, in the context of data mesh, is the ability quickly and easily to spin up (and down) virtual data stores and share data across the Snowflake data cloud with federated governance. Snowflake’s vision is to abstract the underlying complexity of the physical cloud location (that is, AWS, GCP or Azure) and enable sharing across the globe, within its governed data cloud. Ideally it minimizes the need to make copies to share data (notwithstanding sometimes copies are necessary for latency considerations).

The bottom line is we actually think Snowflake fits nicely into the data mesh concept and is well-positioned for the future.

Other vendors of note

Databricks Inc. is also interesting because the firm has momentum and we expect further elevated levels on the vertical axis as it readies for IPO. The firm has a strong product and very good managed service. Initially, everyone thought Databricks would try to be the Red Hat of big data and build a service around Spark.

Rather, what it has done is build a managed service, with strong artificial intelligence and data science chops, and is taking the data lake to new levels. It is one to watch for sure and on a collision course with Snowflake in our view. We need to do more research but have always believed Databricks fits well into a federated data mesh approach.

We included a number of other database companies for obvious reasons – such as Redis Labs Inc., MongoDB Inc., MariaDB Inc., Couchbase and Teradata Corp. There’s also SAP SE; it’s not all HANA for SAP, but it’s a prominent player in the market, as is IBM.

Cloudera Inc., which includes Hortonworks Inc. and Hewlett Packard Enterprise Co.’s Ezmeral, which comprises the MapR business that HPE acquired. These include some of the early Hadoop deployments that are evolving. And of course Talend SA and Informatica Corp. are two data integration companies worth noting.

And we also included some of the AI/machine learning specialists and data science players in the mix like DataRobot, which just did a monster $250M round, Dataiku, H2O.ai and ThoughtSpot, which is a specialist using AI to democratize data and fits very well into the data mesh concept, in our view.

And we put VMware Inc. cloud in there for reference because it really is the predominant on-prem infrastructure platform.

JPMC: a practical example of data mesh in action

First, thanks to the team at JPMorgan for sharing this data. We really want to encourage practitioners and technologists to go watch the YouTube video of that meetup. And thanks to Zhamak Deghani and the entire data mesh community for the outstanding work they do challenging established conventions. The JPM presentation gives you real credibility and takes data mesh well beyond concept and demonstrates how it can be done.

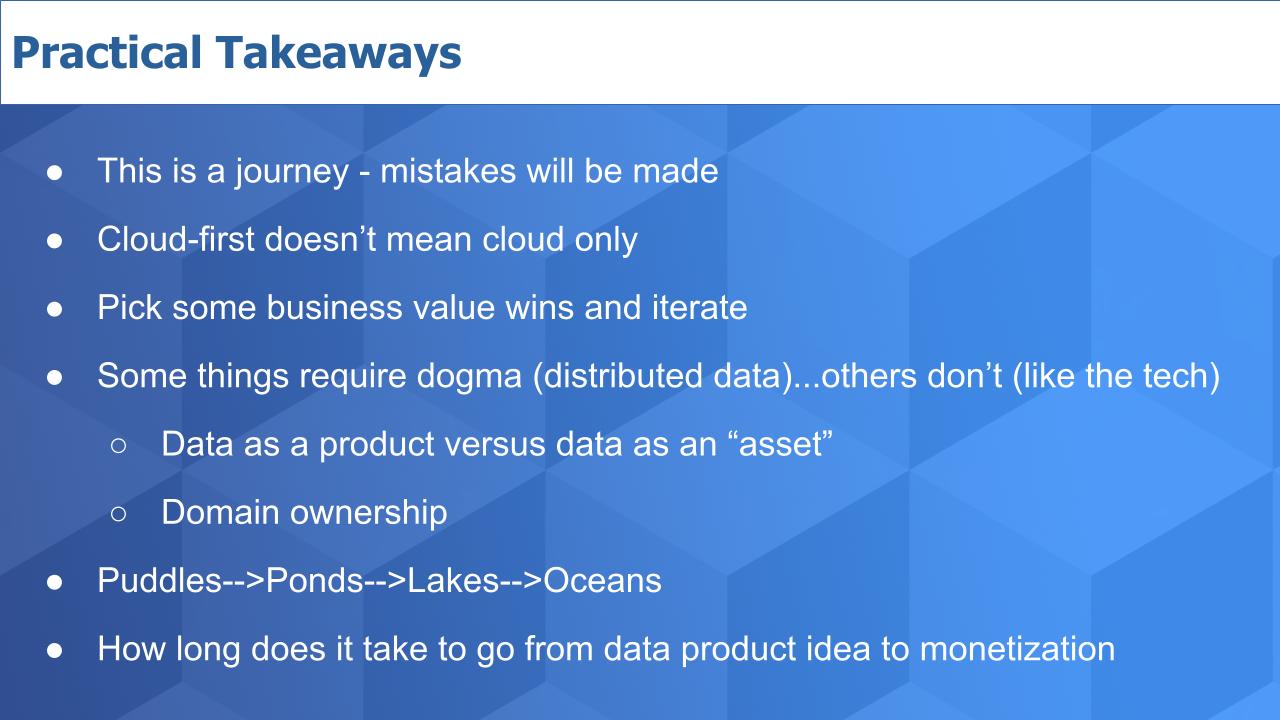

This is not a perfect world. You have to start somewhere and there will be some failures. The key is to recognize that shoving everything into a monolithic data architecture won’t support massive scale and cloudlike agility. It’s a fine approach for smaller firms, but if you’re building a global platform and a data business it’s time to rethink your data architecture and, importantly, your organization.

Much of this is enabled by cloud – but cloud-first doesn’t mean you’ll leave your on-prem data behind. On the contrary, you must include nonpublic cloud data in your data mesh vision as JPMC has done.

Getting some quick wins is crucial so you can gain credibility within the organization and continue to grow.

One of the takeaways from the JPMorgan team is there is a place for dogma – like organizing around data products and domains. On the other hand, you have to remain flexible because technologies will come and they will go.

If you’re going to embrace the metaphor of puddles, ponds and lakes, we suggest you expand the scope to include data oceans – something we have talked about extensively on theCUBE. Data oceans – it’s huge! Watch this fun clip with analyst Ray Wang and John Furrier on the topic.

And think about this: Just as we are evolving our language, we should be evolving our metrics. Much of the last decade of big data was around making the technology work. Getting it up and running and managing massive amounts of data. And there were many KPIs built around standing up infrastructure and ingesting data at high velocity.

This decade is not just about enabling better insights. It’s more than that. Data mesh points us to a new era of data value, and that requires new metrics around monetizing data products. For instance, how long does it take to go from data product idea to monetization? And what is the time to quality? Automation, AI and very importantly, organizational restructuring of our data teams will heavily contribute to success in the coming years.

So go learn, lean in and create your data future.

Ways to keep in touch

Remember we publish each week on Wikibon and SiliconANGLE . These episodes are all available as podcasts wherever you listen .

Email [email protected] , DM @dvellante on Twitter and comment on our LinkedIn posts .

Also, check out this ETR Tutorial we created , which explains the spending methodology in more detail. Note: ETR is a separate company from Wikibon and SiliconANGLE . If you would like to cite or republish any of the company’s data, or inquire about its services, please contact ETR at [email protected].

Here’s the full video analysis:

Image: Alex

A message from john furrier, co-founder of siliconangle:, your vote of support is important to us and it helps us keep the content free., one click below supports our mission to provide free, deep, and relevant content. , join our community on youtube, join the community that includes more than 15,000 #cubealumni experts, including amazon.com ceo andy jassy, dell technologies founder and ceo michael dell, intel ceo pat gelsinger, and many more luminaries and experts..

Like Free Content? Subscribe to follow.

LATEST STORIES

A close look at JPMorgan's aggressive cloud migration

Why Databricks vs. Snowflake is not a zero-sum game

X’s new AI training opt-out setting draws regulatory scrutiny

Cyber insurance provider Cowbell reels in $60M to grow its product portfolio

New AI models flood the market even as AI takes fire from regulators, actors and researchers

US grand jury indicts North Korean hacker for role in Andariel cyberattacks

CLOUD - BY PAUL GILLIN . 2 DAYS AGO

BIG DATA - BY GUEST AUTHOR . 2 DAYS AGO

AI - BY MARIA DEUTSCHER . 2 DAYS AGO

SECURITY - BY MARIA DEUTSCHER . 2 DAYS AGO

AI - BY ROBERT HOF . 3 DAYS AGO

SECURITY - BY MARIA DEUTSCHER . 3 DAYS AGO

More From Forbes

Jpmorgan’s cio has championed a data platform that turbocharges ai.

- Share to Facebook

- Share to Twitter

- Share to Linkedin

JPMorgan Chase headquarters in central Manhattan.

JPMorgan Chase sees artificial intelligence (AI) as critical to its future success. And the mega-bank has a big advantage over many of its smaller rivals: the massive amount of data it gathers from sources such as the 50% of U.S. households with which it has some form of relationship and the $6 trillion worth of payment flows it handles daily.

But until recently, identifying and pulling in relevant data to train AI models was taking up around 60% of the time of the bank’s growing army of data scientists. That was an inefficient use of an expensive and relatively scarce resource. Now a new data platform the bank has developed, called OmniAI, is helping it to get relevant data into its models much faster.

Speaking about the project during a presentation at the AI Summit in New York City this week, Beer said the platform, which has been up-and-running for a couple of months, helps workers identify and ingest “minimum viable data” to build models faster. The platform was developed by a team led by Apoorv Saxena, a former Google executive the bank poached in August 2018 to become its new head of AI and machine-learning services.

The initial focus is on providing data relevant to a dozen high-priority use cases of AI that the bank has identified. They include things like tailoring consumer-banking services for individual customers and driving internal efficiencies in areas like travel-and-entertainment expense management. According to Beer, the use of AI-driven technology has already helped JPMorgan Chase save $150 million in expenses.

The platform doesn’t just let data scientists get their hands on raw material for their models quickly; it also automatically verifies that data are being used in accordance with regulations covering areas like customer privacy—again saving time and effort. JPMorgan Chase pulls in data from around 7,500 external sources as well as leveraging its own information.

Apple iPhone 16, iPhone 16 Pro Release Date Proposed In New Report

Daniel cormier calls out ufc for protecting its ‘golden goose’, today’s nyt mini crossword clues and answers for saturday, august 10.

Beer also spoke about the bank’s efforts to guard against things like bias in the AI models it builds. Last year, it hired Manuela Veloso, a prominent AI expert at Carnegie Mellon University, to head AI research, and she has been helping it think through the ways in which it is deploying AI. “Manuela challenges us all the time,” said Beer.

The new platform is part of an ambitious push by JPMorgan Chase to infuse AI into many different areas of its operations, from efforts to develop deeper insights into customers’ needs to defending itself against cyberattacks. Beer said she thinks AI will also help the financial industry in general to address some significant socioeconomic issues, such as helping more people get access to financial services and build retirement savings.

- Editorial Standards

- Reprints & Permissions

- Performance & Yields

- Ultra-Short

- Short Duration

- Empower Share Class

- Academy Securities

- Cash Segmentation

- Separately Managed Accounts

- Managed Reserves Strategy

- Capitalizing on Prime Money Market Funds

Liquidity Insights

- Liquidity Insights Overview

- Case Studies

- Leveraging the Power of Cash Segmentation

- Cash Investment Policy Statement

Market Insights

- Market Insights Overview

- Eye on the Market

- Guide to the Markets

- Market Updates

Portfolio Insights

- Portfolio Insights Overview

- Fixed Income

- Long-Term Capital Market Assumptions

- Sustainable investing

- Strategic Investment Advisory Group

MORGAN MONEY

- Global Liquidity Investment Academy

- Account Management & Trading

- Announcements

- Navigating market volatility

- 2024 US Money Market Fund Reform

- Diversity, Equity & Inclusion

- Spectrum: Our Investment Platform

- Sustainable and social investing

- Our Leadership Team

- LinkedIn Twitter Facebook

Case studies

Working with clients to solve short-term fixed income needs

FEATURED CASE STUDIES

Meituan-Dianping: A unicorn’s path to achieve world-class treasury

All case studies, vertex pharmaceuticals.

Vertex Pharmaceuticals transforms its investment processes with Morgan Money.

Kulicke & Soffa

Kulicke & Soffa meets strong risk management standard with MORGAN MONEY’s cash optimizer.

Nigeria LNG

Harnessing the Power of Technology. Nigeria LNG transforms Money Market Fund investments with MORGAN MONEY.

Micro Focus

Short-term AAA-rated money market funds provide short-term investment opportunities for divestment proceeds.

Liquidity and security over yield deliver investment benefits to NIO

Active Super (previously known as Local Government Super)

Prioritizing cash management at scale, Active Super (previously known as Local Government Super), an Australian superannuation fund, found “operational alpha”.

Meituan-Dianping

Meituan-Dianping, a growing unicorn, had a major challenge to accurately forecast its cash flow beyond three months.

NTUC Income

Singapore-based insurance provider NTUC Income had always handled its investments entirely through its internal portfolio management team.

Recruit Holdings

As the business has grown globally, liquidity and cash positions outside of Japan have expanded, creating foreign exchange (FX) exposure.

The challenge: to assess the optimal level of liquidity required to ensure John Lewis Partnership has continued financial sustainability.

Explore more

Cash investment policy statement

How to write an investment policy statement for your organization.

Leveraging the power of cash segmentation

The most effective strategy incorporates a clear investment policy, well-defined goals and parameters for liquidity, quality and return.

Invest with ease, operational efficiency and effective controls via our state-of-the-art trading and analytics platform.

- Data Science Tutorials

6 Intriguing Applications of Data Science in Banking – [JP Morgan Case Study]

Free Machine Learning courses with 130+ real-time projects Start Now!!

Companies need data to develop insights and make data-driven decisions. In order to provide better services to its customers and devise strategies for various banking operations, data science is a mandatory requirement.

Furthermore, banks need data to grow their business and draw more customers. We will go through some of the important areas where banking industries use data science to improve their products. We will see the major role of data science in banking sectors.

Then we will understand the use case of JP Morgan Chase applying data science in banking sector.

Data Science in Banking

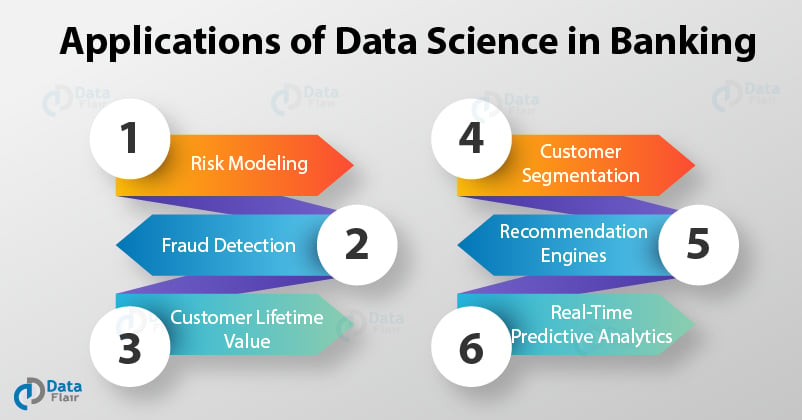

Here are 6 interesting data science applications for banking which will guide you how data science is transforming banking industry.

1. Risk Modeling

Risk Modeling a high priority for the banking industry. It helps them to formulate new strategies for assessing their performance. Credit Risk Modeling is one of its most important aspects. Credit Risk Modeling allows banks to analyze how their loan will be repaid.

In credit risks, there is a chance of the borrower not being able to repay the loan. There are many factors in credit risk that makes it a complex task for the banks.

With Risk Modeling, banks are able to analyze the default rate and develop strategies to reinforce their lending schemes. With the help of Big Data and Data Science, banking industries are able to analyze and classify defaulters before sanctioning loan in a high-risk scenario.

Risk Modeling also applies to the overall functioning of the bank where analytical tools used to quantify the performance of the banks and also keep a track of their performance.

2. Fraud Detection

With the advancements in machine learning , it has become easier for companies to detect frauds and irregularities in transactional patterns. Fraud detection involves monitoring and analysis of the user activity to find any usual or malicious pattern.

With the increase in dependency on the internet and e-commerce for transactions, the number of frauds has increased significantly.

Using data science, industries can leverage the power of machine learning and predictive analytics to create clustering tools that will help to recognize various trends and patterns in the fraud-detection ecosystem.

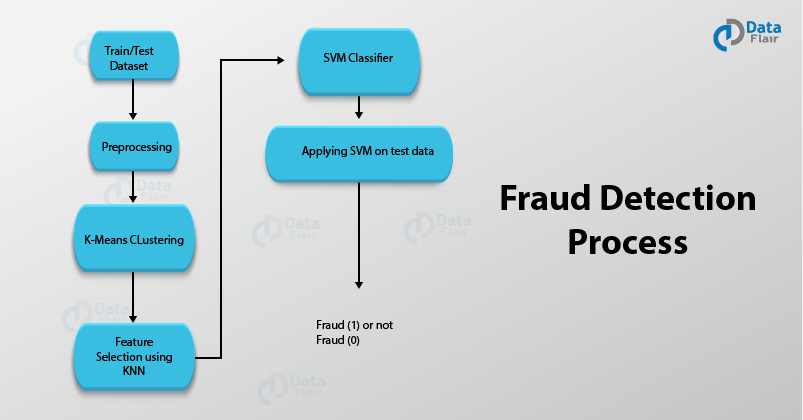

There are various algorithms like K-means clustering, SVM that is helpful in building the platform for recognizing patterns of unusual activities and transactions. The process of Fraud Detection involves –

- Obtaining the data samples for training the model.

- Training our model on the given datasets. The process of training involves the implementation of several machine learning algorithms for feature selection and further classification.

- Testing and Deploying our model.

For instance, two algorithms like K-means clustering and SVM can be used for data-preprocessing and classification. K-means can be used for feature selection and SVMs are then applied to the data for its classification into a fraudulent class or otherwise.

3. Customer Lifetime Value

Customers are an essential part of the banking industries. They ensure a steady stream of revenues. Formally speaking, a Customer Lifetime Value offers a discounted value of the future revenues that are contributed by the customer. Banks are often required to predict future revenues based on past ones.

Also, banks want to know the retention of customers and if they will help to generate revenues in the future as well. Banks want their customers to be satisfied and nurture them for the current as well as future prospects.

Businesses like banking sectors are required to predict their customer lifetime value. Data Science in banking plays an essential role in this part.

With predictive analytics, banks can classify potential customers and assign them with significant future value in order to invest company resources on them. While the classification algorithms help the banks to acquire potential customers, retaining them is another challenging task.