Graph Representation Learning and Its Applications: A Survey

Affiliations.

- 1 Department of Artificial Intelligence, The Catholic University of Korea, 43, Jibong-ro, Bucheon-si 14662, Gyeonggi-do, Republic of Korea.

- 2 Data Assimilation Group, Korea Institute of Atmospheric Prediction Systems (KIAPS), 35, Boramae-ro 5-gil, Dongjak-gu, Seoul 07071, Republic of Korea.

- 3 Department of Social Welfare, Dongguk University, 30, Pildong-ro 1-gil, Jung-gu, Seoul 04620, Republic of Korea.

- 4 Semiconductor Devices and Circuits Laboratory, Advanced Institute of Convergence Technology (AICT), Seoul National University, 145, Gwanggyo-ro, Yeongtong-gu, Suwon-si 16229, Gyeonggi-do, Republic of Korea.

- PMID: 37112507

- PMCID: PMC10144941

- DOI: 10.3390/s23084168

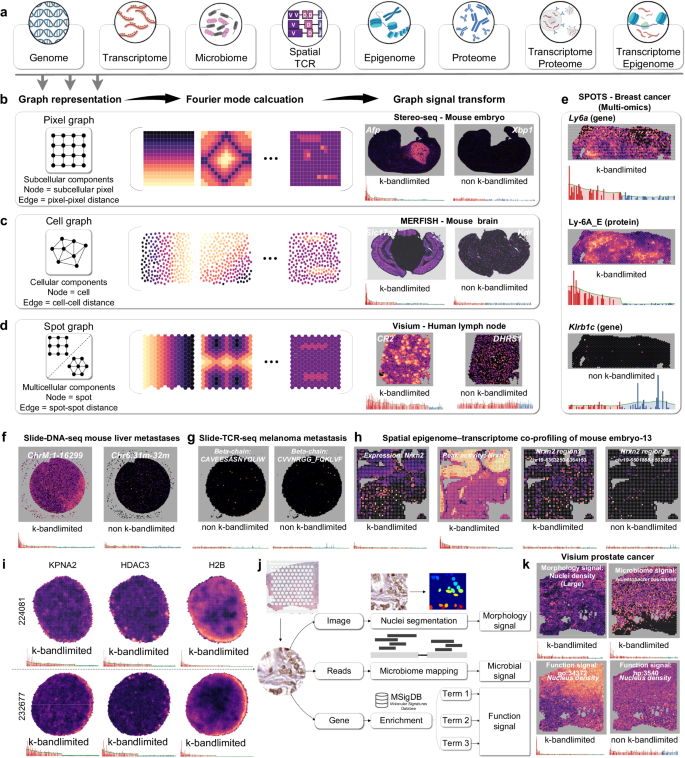

Graphs are data structures that effectively represent relational data in the real world. Graph representation learning is a significant task since it could facilitate various downstream tasks, such as node classification, link prediction, etc. Graph representation learning aims to map graph entities to low-dimensional vectors while preserving graph structure and entity relationships. Over the decades, many models have been proposed for graph representation learning. This paper aims to show a comprehensive picture of graph representation learning models, including traditional and state-of-the-art models on various graphs in different geometric spaces. First, we begin with five types of graph embedding models: graph kernels, matrix factorization models, shallow models, deep-learning models, and non-Euclidean models. In addition, we also discuss graph transformer models and Gaussian embedding models. Second, we present practical applications of graph embedding models, from constructing graphs for specific domains to applying models to solve tasks. Finally, we discuss challenges for existing models and future research directions in detail. As a result, this paper provides a structured overview of the diversity of graph embedding models.

Keywords: graph embedding; graph neural networks; graph representation learning; graph transformer.

Conflict of interest statement

The authors declare no conflict of interest.

References

- Rossi R.A., Ahmed N.K. The Network Data Repository with Interactive Graph Analytics and Visualization; Proceedings of the 29th Conference on Artificial Intelligence (AAAI 2015); Austin, TX, USA. 25–30 January 2015; Austin, TX, USA: AAAI Press; 2015. pp. 4292–4293.

- Ahmed Z., Zeeshan S., Dandekar T. Mining biomedical images towards valuable information retrieval in biomedical and life sciences. Database J. Biol. Databases Curation. 2016;2016:baw118. doi: 10.1093/database/baw118.

- Hamilton W.L. Synthesis Lectures on Artificial Intelligence and Machine Learning. Springer; Berlin/Heidelberg, Germany: 2020. Graph Representation Learning.

- Grover A., Leskovec J. node2vec: Scalable Feature Learning for Networks; Proceedings of the 22nd International Conference on Knowledge Discovery and Data Mining (SIGKDD 2016); San Francisco, CA, USA. 13–17 August 2016; San Francisco, CA, USA: ACM; 2016. pp. 855–864.

- Ou M., Cui P., Pei J., Zhang Z., Zhu W. Asymmetric Transitivity Preserving Graph Embedding; Proceedings of the 22nd International Conference on Knowledge Discovery and Data Mining (ACM SIGKDD 2016); San Francisco, CA, USA. 13–17 August 2016; San Francisco, CA, USA: ACM; 2016. pp. 1105–1114.

Grants and funding

- 2022R1F1A1065516/National Research Foundation of Korea

- KMA2020-02211/Korea Meteorological Administration

- 2022M3F3A2A01076569/National Research Foundation of Korea

- AICT-2022-0015/Advanced Institute of Convergence Technology

- M-2022-B0008-00153/The Catholic University of Korea

Graph representation learning: a survey

This is published under the terms of the Creative Commons Attribution licence.

- I. Introduction

- II. Definition and Preliminaries

- III. Graph Embedding Methods

- IV. Comparison of Different Methods and Applications

- V. Evaluation

- VI. Emerging Applications

- VII. Future Research Directions

- VIII. Conclusion

Research on graph representation learning has received great attention in recent years, since most data in real-world applications come in the form of graphs. High-dimensional graph data are often irregular, which makes them more difficult to analyze than image, video, or audio data defined on regular lattices. Various graph embedding techniques have been developed to convert raw graph data into a low-dimensional vector representation while preserving the intrinsic graph properties. In this review, we first explain the graph embedding task and its challenges. Next, we review a wide range of graph embedding techniques with insights. Then, we evaluate several state-of-the-art methods on small and large data sets and compare their performance. Finally, potential applications and future directions are presented.

Graph Representation Learning and Its Applications: A Survey

Van Thuy Hoang

1 Department of Artificial Intelligence, The Catholic University of Korea, 43, Jibong-ro, Bucheon-si 14662, Gyeonggi-do, Republic of Korea; hoangvanthuy90@gmail.com (V.T.H.); tesniere@naver.com (E.-S.Y.)

Hyeon-Ju Jeon

2 Data Assimilation Group, Korea Institute of Atmospheric Prediction Systems (KIAPS), 35, Boramae-ro 5-gil, Dongjak-gu, Seoul 07071, Republic of Korea; hjjeon@kiaps.org

Eun-Soon You

Yoewon Yoon

3 Department of Social Welfare, Dongguk University, 30, Pildong-ro 1-gil, Jung-gu, Seoul 04620, Republic of Korea; yyoon@dongguk.edu

Sungyeop Jung

4 Semiconductor Devices and Circuits Laboratory, Advanced Institute of Convergence Technology (AICT), Seoul National University, 145, Gwanggyo-ro, Yeongtong-gu, Suwon-si 16229, Gyeonggi-do, Republic of Korea; [email protected]

Associated Data

Data sharing not applicable.

1. Introduction

Graphs are a common language for representing complex relational data, including social media, transportation networks, and biological protein–protein networks [1,2]. Since most graph data are complex and high-dimensional, it is difficult for researchers to extract valuable knowledge from them directly. Transforming graph data into fixed-dimensional vectors is therefore an important step, because the resulting vectors can be applied to different downstream tasks [3]. The objective of graph representation learning is to obtain vector representations of graph entities (e.g., nodes, edges, and subgraphs) that facilitate downstream tasks such as node classification [4], link prediction [5,6], and community detection [7]. As a result, graph representation learning plays an important role, since it can significantly improve the performance of these downstream tasks.

Representing graph data, however, is challenging and differs from representing image and text data [8]. In textual data, words are linked together in a sentence, and each has a fixed position in that sentence. In image data, pixels are arranged on an ordered grid and can be represented by a grid matrix. In contrast, the nodes and edges of a graph are unordered and carry their own features. This makes it challenging to map graph entities to a latent space while preserving the graph structure and proximity relationships. In a social network, for example, a user can have many friends (neighbors) and various personal attributes, such as hometown, education level, and hobbies, which makes it difficult to preserve both the graph structure and the node properties. In addition, many real-world networks are dynamic: their graph structures and node features can change over time [9,10]. These dynamics pose further challenges for capturing the graph structure and mapping graph entities into a vector space.

Over the decades, various graph representation learning models have been proposed to project graph entities into fixed-length vectors [11,12,13]. Graph embedding models can be divided into five main groups: graph kernels, matrix factorization models, shallow models, deep neural network models, and non-Euclidean models. Figure 1 presents the popularity of different graph representation learning models from 2010 to 2022. The number of graph representation learning studies increased considerably over these 12 years. In particular, there was significant growth in the number of studies on graph neural networks, graph convolutional networks, and graph transformer models. In contrast, the number of studies on graph kernels, graph autoencoders, and matrix factorization-based models increased only slightly over the same period. We obtained the number of academic publications containing each keyword from Scopus ( https://www.scopus.com (accessed on 16 April 2023)).

Figure 1. The popularity of graph representation learning models in the Scopus database. The line plot shows changes in the number of publications on different types of graph representation learning models from 2010 to 2022. The y-axis denotes the number of publications. There are eight keywords: graph representation learning (GRL), graph kernels (GK), matrix factorization-based graph embedding (MF), graph neural networks (GNNs), graph autoencoder (GAE), graph convolution networks (GCNs), graph transformer (GT), and non-Euclidean graph embedding (NEGE). There are nineteen representative models: DeepWalk [14], Grarep [15], LINE [16], GGCN [17], GCN [18], HOPE [5], Node2Vec [4], GAT [19], Metapath2Vec [20], Struc2Vec [21], GraphSage [22], G2G [23], GIN [24], HGAT [25], DGI [26], HGNN [27], GCNII [28], GT [29], and EGT [30].

Historically, the first graph representation learning models were graph kernels. The idea behind graph kernel methods can be traced back to the well-known Weisfeiler–Lehman (WL) isomorphism test from 1968 [31]. Graph kernels are kernel functions that measure the similarity between graphs and their entities [32]. The main idea is to decompose the original graphs into substructures and construct vector embeddings from the features of those substructures. There are two main types of graph kernels: kernels for graphs and kernels on graphs. The former measure the similarity between pairs of graphs, while the latter estimate the similarity between nodes within a graph. Starting in the 2000s, several strategies for estimating the similarity of graph pairs have been proposed to represent various graph structures, such as graphlet kernels, random-walk kernels, and shortest-path kernels. Building on the WL isomorphism test, various graph kernels compute the similarity of pairs of graph entities, such as WL kernels [31], WL subtree kernels [33,34,35], and random walks [36,37]. However, one limitation of graph kernels is their computational complexity on large-scale graphs, since computing graph kernels can be NP-hard.
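To make the "decompose into substructures, then compare feature vectors" idea concrete, the following is a minimal sketch of a WL-style subtree kernel. It is an illustration only, not the formulation of any cited model: the function names `wl_features` and `wl_subtree_kernel`, the dictionary-based graph format, and the use of uncompressed string labels are all assumptions made for readability.

```python
from collections import Counter


def wl_features(adj, labels, iterations=2):
    """Count the node labels produced by `iterations` rounds of WL relabeling.

    adj: dict mapping node -> list of neighbour nodes (undirected graph).
    labels: dict mapping node -> initial label (any value convertible to str).
    """
    current = {node: str(label) for node, label in labels.items()}
    counts = Counter(current.values())
    for _ in range(iterations):
        updated = {}
        for node in adj:
            neighbour_labels = sorted(current[n] for n in adj[node])
            # New label = own label plus the sorted multiset of neighbour labels.
            updated[node] = current[node] + "(" + ",".join(neighbour_labels) + ")"
        current = updated
        counts.update(current.values())
    return counts


def wl_subtree_kernel(graph_a, graph_b, iterations=2):
    """Dot product of the two label-count vectors."""
    feats_a = wl_features(*graph_a, iterations=iterations)
    feats_b = wl_features(*graph_b, iterations=iterations)
    return sum(feats_a[label] * feats_b[label] for label in feats_a)


# Two toy graphs with identical initial labels: a path 0-1-2 and a triangle.
path = ({0: [1], 1: [0, 2], 2: [1]}, {0: "a", 1: "a", 2: "a"})
triangle = ({0: [1, 2], 1: [0, 2], 2: [0, 1]}, {0: "a", 1: "a", 2: "a"})

print(wl_subtree_kernel(path, triangle, iterations=1))
```

Each graph is reduced to a histogram of relabeled neighborhoods, so the kernel value grows with the number of shared local substructures. Practical implementations usually compress the labels with a hash table instead of growing strings.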

Early models for graph representation learning primarily focused on matrix factorization methods, which were motivated by traditional dimensionality reduction techniques in 2002 [38]. Several matrix factorization-based models have been proposed to handle large graphs with millions of nodes [39,40]. The objective of matrix factorization models is to decompose the proximity matrix into a product of smaller matrices and then learn embeddings that fit the proximity. Based on how the vector embeddings are learned, there are two main lines of matrix factorization models: Laplacian eigenmaps and node proximity matrix factorization. Starting in the 2000s, Laplacian eigenmaps methods [41,42] represent each node by the Laplacian eigenvectors associated with the first k eigenvalues. In contrast, node proximity matrix factorization methods [5,15], introduced in 2015, obtain node embeddings through singular value decomposition. Various proximity matrix factorization models have successfully handled large graphs and achieved strong performance [15,43]. However, matrix factorization models struggle to capture high-order proximity because of the computational cost of working with high-order transition matrices.
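As a rough illustration of node proximity matrix factorization, the sketch below factorizes a proximity matrix with a truncated singular value decomposition using NumPy. Treating the plain adjacency matrix as the proximity matrix is an assumption made here for brevity (the cited models use other proximity measures), and `svd_embeddings` is a hypothetical helper name.

```python
import numpy as np


def svd_embeddings(proximity, dim):
    """Approximate proximity ~ source @ target.T with a rank-`dim` truncated SVD."""
    u, s, vt = np.linalg.svd(proximity, full_matrices=False)
    scale = np.sqrt(s[:dim])
    source = u[:, :dim] * scale        # one embedding row per node
    target = vt[:dim, :].T * scale     # matching "context" embeddings
    return source, target


# Toy undirected graph with four nodes; adjacency used as first-order proximity.
adjacency = np.array(
    [[0, 1, 1, 0],
     [1, 0, 1, 0],
     [1, 1, 0, 1],
     [0, 0, 1, 0]],
    dtype=float,
)

source, target = svd_embeddings(adjacency, dim=2)
reconstruction = source @ target.T
print(np.round(reconstruction, 2))
```

With `dim` equal to the number of nodes the product recovers the proximity matrix exactly; smaller values give the best low-rank approximation in the least-squares sense, which is what the embeddings are fitted to.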

In 2014 and 2016, the early shallow models DeepWalk [14] and Node2Vec [4] were proposed; they learn node embeddings with shallow neural networks. The primary concept is to learn node embeddings by maximizing the neighborhood probability of target nodes using the skip-gram model, which originated in natural language processing. This objective can then be optimized with stochastic gradient descent (SGD) on neural network layers, thus reducing computational complexity. Following this milestone, various models have been developed that improve the sampling strategies and training processes. Shallow models are embedding models that map graph entities to low-dimensional vectors by performing an embedding lookup for each graph entity [3]. From this perspective, the embedding of node $v_i$ can be represented as $Z_i = M x_i$, where $M$ denotes the embedding matrix of all nodes and $x_i$ is the one-hot vector of node $v_i$. Various shallow models have been proposed to learn embeddings with different strategies for preserving graph structures and the similarity between graph entities. Structure-preservation models aim to preserve the structural connections between entities (e.g., DeepWalk [14], Node2Vec [4]). In 2015, Tang et al. [16] proposed the LINE model, a proximity reconstruction method that aims to preserve the proximity between nodes in graphs. Since then, various models have been proposed to preserve higher-order node proximity and capture more of the global graph structure. However, most of the above models focus on transductive learning and ignore node features, which limits their use in practical applications.
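The embedding lookup $Z_i = M x_i$ can be checked numerically. The sketch below assumes that $M$ stores one column per node (shape $d \times N$), so multiplying by a one-hot vector simply selects a column; the random initialization and the helper names `one_hot` and `embed` are illustrative assumptions.

```python
import numpy as np

rng = np.random.default_rng(0)
num_nodes, dim = 5, 3

# Embedding matrix M: one column per node (in practice a trainable parameter).
M = rng.normal(size=(dim, num_nodes))


def one_hot(i, n):
    """One-hot indicator vector x_i for node v_i."""
    x = np.zeros(n)
    x[i] = 1.0
    return x


def embed(i):
    """Z_i = M x_i, written as an explicit matrix-vector product."""
    return M @ one_hot(i, num_nodes)


print(embed(2))
print(M[:, 2])  # the same vector, obtained by direct column lookup
```

Because the product only selects a column, implementations store the embeddings as a table and index it directly. This also shows why such models are transductive: a node that was absent during training has no column in $M$.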

Breakthroughs in deep learning have opened a new research perspective on applying deep neural networks to the graph domain. Since the 2000s, several early graph neural network (GNN) models have been designed to learn node embeddings from neighborhood information using an aggregation mechanism [44,45]. GNNs have shown a significant expressive capacity to represent graph embeddings in an inductive learning manner and address the limitations of the aforementioned shallow models [46,47]. Recurrent GNNs (RGNNs), which date from 2005 and build on recurrent neural network architectures [48,49], were among the first GNN studies. These models learn node embeddings via recurrent layers that share the same weights in each hidden layer and run recursively until convergence. Several RGNNs have since been proposed that combine recurrent neural network architectures with different sampling strategies. However, using the same weights at each hidden layer may leave an RGNN unable to distinguish local from global structure. Since 2016, several graph autoencoder models have been proposed based on the original autoencoder architecture; they learn complex graph structures by reconstructing the input graph structure [50,51]. Graph autoencoders comprise two main parts: encoder layers take the adjacency matrix as input and compress it to generate node embeddings, and decoder layers reconstruct the input data. By contrast, convolutional GNNs (CGNNs) use convolutional operators with different weights in each hidden layer, which makes them more efficient at capturing and distinguishing local and global structures [18,52,53,54]. Many CGNN variants have been proposed, including spectral CGNNs [55,56,57] starting in 2014, spatial CGNNs [22,24,52] starting in 2016, and attentive CGNNs [19,58] starting in 2017. Nevertheless, most GNNs suffer from limitations such as over-smoothing and noise from neighbor nodes when more GNN layers are stacked [59,60].
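The difference between shared and per-layer weights can be seen in a small sketch of neighborhood aggregation. The code below assumes the widely used propagation rule $H' = \mathrm{ReLU}(\hat{D}^{-1/2}(A + I)\hat{D}^{-1/2} H W)$ as one concrete choice of convolutional operator; the helper names, the toy graph, and the random weights are illustrative and untrained.

```python
import numpy as np


def normalize_adjacency(adj):
    """Symmetrically normalize A + I (self-loops added) by node degree."""
    a_hat = adj + np.eye(adj.shape[0])
    deg = a_hat.sum(axis=1)
    d_inv_sqrt = 1.0 / np.sqrt(deg)
    return a_hat * d_inv_sqrt[:, None] * d_inv_sqrt[None, :]


def gcn_layer(a_norm, features, weight):
    """Aggregate neighbour features, apply a linear map, then ReLU."""
    return np.maximum(a_norm @ features @ weight, 0.0)


# Path graph 0-1-2 with 4 input features per node.
adj = np.array([[0, 1, 0], [1, 0, 1], [0, 1, 0]], dtype=float)
a_norm = normalize_adjacency(adj)

rng = np.random.default_rng(0)
features = rng.normal(size=(3, 4))
w1 = rng.normal(size=(4, 8))   # layer-1 weights
w2 = rng.normal(size=(8, 2))   # layer-2 weights, distinct from layer 1

hidden = gcn_layer(a_norm, features, w1)
embeddings = gcn_layer(a_norm, hidden, w2)
print(embeddings.shape)
```

Each layer mixes information from one further hop, so two layers give every node a view of its 2-hop neighborhood. Stacking many such averaging steps is also what drives node embeddings toward each other, which is the over-smoothing effect mentioned above.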

Motivated by the transformer architecture, which emerged from natural language processing applications in 2017, several graph transformer models were proposed in 2019 that adapt this architecture to the graph domain [61,62]. Graph transformer models have shown competitive and even superior performance compared with GNNs in learning complex graph structures [30,63]. Graph transformer models can be divided into three main groups: transformers for tree-like graphs, transformers with GNNs, and transformers with global self-attention. First, early graph transformer models, proposed since 2019, learn node embeddings in tree-like graphs, where nodes are arranged hierarchically [64,65]. These models encode node positions through relative and absolute positional encodings in trees, using root nodes and neighbor nodes at the same level as constraints. Second, several models leverage GNNs as an auxiliary module for computing attention scores [66]. In addition, some models put GNN layers on top of the model to overcome the over-smoothing problem and help the model retain the local structure [61]. Most of the above graph transformer models adopt the vanilla transformer architecture and learn embeddings that rely on multi-head self-attention. Third, several graph transformer models use a global self-attention mechanism to learn node embeddings, which applies self-attention independently and does not require constraints from the neighborhood [30,67]. These models work directly on input graphs and can capture the global structure with global self-attention.
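The third group can be illustrated with a single-head, scaled dot-product self-attention over all nodes. This is a simplified sketch under stated assumptions: it ignores the adjacency matrix entirely, uses random untrained projection matrices, and omits the positional or structural encodings that real graph transformers add; `global_self_attention` is a hypothetical name.

```python
import numpy as np


def softmax(scores):
    """Row-wise softmax with the usual max-shift for numerical stability."""
    shifted = scores - scores.max(axis=-1, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=-1, keepdims=True)


def global_self_attention(x, w_q, w_k, w_v):
    """Every node attends to every other node, regardless of edges."""
    q, k, v = x @ w_q, x @ w_k, x @ w_v
    scores = q @ k.T / np.sqrt(k.shape[1])
    attention = softmax(scores)          # shape (N, N), rows sum to 1
    return attention @ v, attention


rng = np.random.default_rng(0)
num_nodes, in_dim, head_dim = 4, 6, 3
x = rng.normal(size=(num_nodes, in_dim))          # node features
w_q = rng.normal(size=(in_dim, head_dim))
w_k = rng.normal(size=(in_dim, head_dim))
w_v = rng.normal(size=(in_dim, head_dim))

out, attention = global_self_attention(x, w_q, w_k, w_v)
print(out.shape, attention.sum(axis=1))
```

Since the attention matrix is dense, a node can draw on distant nodes in one step, at a cost that grows quadratically with the number of nodes.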

Most of the above models learn embeddings in Euclidean space and represent graph entities as vector points in a latent space. However, real-world graphs can have complex structures and different forms, so Euclidean space may be inadequate to represent the graph structure, ultimately leading to structural loss [68,69]. In 2017, early models began to learn complex graphs in non-Euclidean geometry by developing efficient algorithms for learning node embeddings based on manifold optimization [70]. Along this line, several models represent graph data in non-Euclidean space and obtain promising results [68,69,71]. Two typical non-Euclidean geometries, spherical and hyperbolic, each have their own advantages. Spherical space can represent graph structures with large cycles, while hyperbolic space is suitable for hierarchical graph structures. Most non-Euclidean models aim to design an efficient algorithm for learning node embeddings, since it is challenging to implement operators directly in non-Euclidean space. Furthermore, to deal with uncertainty, several Gaussian graph models, starting in 2016, have been introduced to represent graph entities as density-based embeddings [23]. Node embeddings can then be defined as a continuous density, mostly based on the Gaussian distribution [72].
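One way to see why hyperbolic space suits hierarchies is to compute distances in the Poincaré ball model, where the distance between points $u$ and $v$ inside the unit ball is $\operatorname{arcosh}\!\left(1 + \frac{2\lVert u - v\rVert^2}{(1 - \lVert u\rVert^2)(1 - \lVert v\rVert^2)}\right)$. Choosing the Poincaré ball is an assumption made for this sketch; the text above does not commit to a particular model of hyperbolic geometry.

```python
import numpy as np


def poincare_distance(u, v):
    """Geodesic distance between two points strictly inside the unit ball."""
    sq_dist = np.sum((u - v) ** 2)
    denom = (1.0 - np.sum(u ** 2)) * (1.0 - np.sum(v ** 2))
    return np.arccosh(1.0 + 2.0 * sq_dist / denom)


origin = np.array([0.0, 0.0])
near = np.array([0.5, 0.0])
far_a = np.array([0.95, 0.0])
far_b = np.array([0.0, 0.95])

print(poincare_distance(origin, near))   # equals ln(3)
print(poincare_distance(far_a, far_b))   # much larger than the Euclidean gap
```

Distances blow up near the boundary, so the amount of "room" grows exponentially with the radius. A tree can therefore place its root near the origin and its exponentially many leaves near the boundary without crowding them.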

To the best of our knowledge, no comparable paper in the literature covers such a wide range of graph embedding models for static and dynamic graphs in different geometric spaces. Most existing surveys present only specific approaches to graph representation learning. Wu et al. [8] focused on graph neural network models, which form one section of this paper. Several surveys [13,73,74] summarized graph embedding models for various types of graphs, but they did not cover graph transformer models or non-Euclidean models. Regarding practical applications, several papers only list applications for specific and narrow tasks [12,75]. In contrast, we discuss how graphs are constructed in specific applications and how graph embedding models are implemented in various domains.

This paper presents a comprehensive picture of graph embedding models in static and dynamic graphs in different geometric spaces. In particular, we recognize five general categories of models for addressing graph representation learning, including graph kernels, matrix factorization models, shallow models, deep neural network models, and non-Euclidean models. The contribution of this study can be categorized as follows:

- This paper presents a taxonomy of graph embedding models based on various algorithms and strategies.

- We provide readers with an in-depth analysis of graph embedding models for different types of graphs, ranging from static to dynamic and from homogeneous to heterogeneous graphs.

- This paper presents graph transformer models, which have achieved remarkable results in a deeper understanding of graph structures in recent years.

- We cover applications of graph representation learning in various areas, from constructing graphs to applying models in specific tasks.

- We discuss the challenges and future directions of existing graph embedding models in detail.

Since abundant graph representation learning models have been proposed recently, we employed different approaches to find related studies. We built a search strategy by defining keywords and analyzing reliable sources. The list of keywords includes graph embedding, graph representation learning, graph neural networks, graph convolution, graph attention, graph transformer, graph embedding in non-Euclidean space, Gaussian graph embedding, and applications of graph embedding. We found related studies at famous top-tier conferences and journals such as AAAI, IJCAI, SIGKDD, ICML, WSDM, Nature Machine Intelligence, Pattern Recognition, Intelligent Systems with Applications, the Web, and so on.

The remainder of this paper is organized as follows. Section 2 describes fundamental concepts and background related to graph representation learning. Section 3 presents the graph embedding models, including graph kernels, matrix factorization models, shallow models, deep neural network models, and non-Euclidean models. Section 4 discusses a wide range of practical applications of graph embedding models in the real world. Section 5 summarizes the latest benchmarks, downstream tasks, evaluation metrics, and libraries. Section 6 discusses challenges for existing graph embedding models and future research directions. The last section, Section 7, is the conclusion.

2. Problem Description

Graph representation learning aims to project the graph entities into low-dimensional vectors while preserving the graph structure and the proximity of entities in graphs. With the desire to map graph entities into vector space, it is necessary to model the graph in mathematical form. Therefore, we begin with several fundamental definitions of graphs. The list of standard notations used in this survey is detailed in Table 1 . Mathematically, a graph G can be defined as follows:

Table 1. A summary of notations.

| Notations | Descriptions |
|---|---|
| $V$ | The set of nodes in the graph |
| $E$ | The set of edges in the graph |
| $N$ | The number of nodes in the graph |
| | The set of edges of a given type in heterogeneous graphs |
| $v_i$ | A node in the graph |
| $e_{ij}$ | An edge in the graph |
| $A$ | The adjacency matrix of the graph |
| $X$ | The feature matrix of nodes in the graph |
| | The degree matrix of nodes in the graph |
| | Projection function |
| $Z_i$ | The embedding vector of node $v_i$ |
| | The transition matrix |
| | The set of neighbors of node $v_i$ |
| $k$ | The $k$-hop distance from a target node to other nodes |
| | The dimension of vectors in latent space |
| | The label of node $v_i$ |

Definition (Graph [3]). A graph is a discrete structure consisting of a set of nodes and the edges connecting those nodes. The graph can be described mathematically in the form $G = (V, E, A)$, where $V = \{v_1, v_2, \cdots, v_N\}$ is the set of nodes, $E \subseteq \{(v_i, v_j) \mid (v_i, v_j) \in V \times V\}$ is the set of edges, and $A$ is an adjacency matrix. $A$ is a square matrix of size $N \times N$, where $N$ is the number of nodes in the graph. This can be formulated as follows:

$$A_{ij} = \begin{cases} 1, & \text{if } (v_i, v_j) \in E, \\ 0, & \text{otherwise,} \end{cases} \tag{1}$$

where $A_{ij}$ indicates adjacency between node $v_i$ and node $v_j$.

When $A_{ij}$ is binary, the matrix $A$ represents only the existence of connections between nodes. By extending the definition of the matrix $A$, we can obtain many different types of graph $G$:

- Directed graph: When $A_{ij} = A_{ji}$ for all $1 \le i, j \le N$, the graph $G$ is called an undirected graph; otherwise, $G$ is a directed graph.

- Weighted graph: A graph in which each edge is assigned a specific weight value. The adjacency matrix can therefore be written as $A_{ij} = w_{ij}$, where $w_{ij} \in \mathbb{R}$ is the weight of the edge $e_{ij}$.

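- Signed graph: When $A_{ij} \in (-\infty, \infty)$, so that entries can be negative as well as positive, the graph $G$ is called a signed graph. An edge $e_{ij}$ is positive when $A_{ij} > 0$ and negative when $A_{ij} < 0$. The graph $G$ has only positive edges when $A_{ij} > 0$ for every edge, and only negative edges when $A_{ij} < 0$ for every edge.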

- Attributed graph: A graph $G = (V, E, A, X)$ is an attributed graph, where $V$ and $E$ are the sets of nodes and edges, respectively, and $X$ is the matrix of node attributes with size $N \times d$. Alternatively, $X$ can be the matrix of edge attributes with size $m \times d$, where $m$ is the number of edges $e_{ij} \in E$ for $1 \le i, j \le N$.

- Hypergraph: A hypergraph $G$ can be represented as $G = (V, E, W)$, where $V$ denotes the set of nodes and $E$ denotes a set of hyperedges. Each hyperedge $e \in E$ can connect multiple nodes and is assigned a weight $w(e) \in W$. The hypergraph $G$ can be represented by an incidence matrix $H$ of size $|V| \times |E|$ with entries $h(v, e) = 1$ if node $v$ belongs to hyperedge $e$, and $h(v, e) = 0$ otherwise.

- Heterogeneous graph: A heterogeneous graph is defined as $G = (V, E, T, \varphi, \rho)$, where $V$ and $E$ are the sets of nodes and edges, respectively, $\varphi : V \to T_v$ and $\rho : E \to T_e$ are mapping functions, $T_v$ and $T_e$ are the sets of node types and edge types, respectively, and $T = |T_v| + |T_e|$ is the total number of node types and edge types.
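To make the role of the adjacency matrix concrete, the following minimal Python sketch builds $A$ from an edge list and checks two of the properties listed above. The function names (`adjacency_matrix`, `is_undirected`, `has_negative_edges`), the 0-based node indices, and the toy edge list are illustrative assumptions, not part of the surveyed models.

```python
def adjacency_matrix(num_nodes, edges, directed=False):
    """Build a dense adjacency matrix from (i, j, weight) triples (0-based indices)."""
    A = [[0.0] * num_nodes for _ in range(num_nodes)]
    for i, j, w in edges:
        A[i][j] = w
        if not directed:
            A[j][i] = w  # mirror the entry so that A is symmetric
    return A


def is_undirected(A):
    """A graph is undirected when A[i][j] == A[j][i] for all i, j."""
    n = len(A)
    return all(A[i][j] == A[j][i] for i in range(n) for j in range(n))


def has_negative_edges(A):
    """True when at least one edge weight is negative (signed-graph case)."""
    return any(a < 0 for row in A for a in row)


# Toy weighted, signed edge list (assumed data for illustration only).
edges = [(0, 1, 1.0), (1, 2, 2.5), (2, 0, -1.0)]
A_undirected = adjacency_matrix(3, edges)
A_directed = adjacency_matrix(3, edges, directed=True)
```

A dense list-of-lists is used only for readability; large sparse graphs would normally call for a sparse representation.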

Given the definitions of the graph $G = (V, E)$ above, the idea of graph embedding is to map graph entities into low-dimensional vectors of dimension $d$, with $d \ll N$. Mathematically, graph embedding is formulated as follows:

Definition (Graph embedding [14]). Given a graph $G = (V, E)$, where $V$ is the set of nodes and $E$ is the set of edges, graph embedding is a projection function $\phi(\cdot)$, where $\phi : V \to \mathbb{R}^{d}$ ($d \ll |V|$) and $k(v_i, v_j) \simeq \langle \phi(v_i), \phi(v_j) \rangle$. Here, $k(v_i, v_j)$ describes the proximity of two nodes $v_i$ and $v_j$ in the graph, and $\langle \phi(v_i), \phi(v_j) \rangle$ is the inner product that measures the similarity of the two vectors $\phi(v_i)$ and $\phi(v_j)$ in the vector space.

Graph representation learning aims to project graph entities into the vector space while preserving the graph structure and entity proximity. For example, if two nodes $v_i$ and $v_j$ in the graph $G$ are connected directly, then the distance between the two vectors $\phi(v_i)$ and $\phi(v_j)$ in the vector space should be small. Figure 2 shows an example of a graph embedding model that transforms nodes in a graph to low-dimensional vectors $(Z_1, Z_2, \cdots, Z_n)$ in the vector space.

Figure 2. A comprehensive view of graph embedding. Given a sparse, high-dimensional graph $G = (V, E)$, where $V$ and $E$ denote the sets of nodes and edges, graph embedding learning aims to find a function $\phi$ that maps nodes from graph space to a $d$-dimensional vector space with $d \ll |V|$.

When mapping graph entities to the latent space, preserving the proximity of graph entities is one of the most important factors in preserving the graph structure and the relationships between nodes. In other words, if two nodes $v_i$ and $v_j$ are connected or close in the graph, the distance between the two vectors $Z_i$ and $Z_j$ should be small in the vector space. Several models [16, 76, 77, 78] aim to preserve $k$-order proximity between graph entities in the vector space. Formally, the $k$-order proximity is defined as follows:

Definition ($k$-order proximity [79]). Given a graph $G = (V, E)$, where $V$ is the set of nodes and $E$ is the set of edges, $k$-order proximity describes the similarity of nodes based on the $k$-hop distance in the graph $G$. When $k = 1$, it is the 1st-order proximity, which captures the local pairwise proximity of two nodes in the graph. When $k$ is higher, it can capture the global graph structure.
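As an illustration of the $k$-hop notion underlying this definition, the sketch below uses breadth-first search to collect the nodes that lie exactly $k$ hops from a target node. The helper names (`hop_distances`, `k_hop_neighbors`) and the toy adjacency list are assumptions made for this example.

```python
from collections import deque


def hop_distances(adj, source):
    """Breadth-first search: hop distance from `source` to every reachable node."""
    dist = {source: 0}
    queue = deque([source])
    while queue:
        u = queue.popleft()
        for v in adj[u]:
            if v not in dist:
                dist[v] = dist[u] + 1
                queue.append(v)
    return dist


def k_hop_neighbors(adj, source, k):
    """Nodes whose shortest hop distance from `source` is exactly k."""
    return {v for v, d in hop_distances(adj, source).items() if d == k}


# Toy undirected graph (assumed data): path 0-1-2-3 with an extra edge 1-4.
adj = {0: [1], 1: [0, 2, 4], 2: [1, 3], 3: [2], 4: [1]}
first_order = k_hop_neighbors(adj, 0, 1)   # direct neighbors of node 0
second_order = k_hop_neighbors(adj, 0, 2)  # nodes two hops away
```

With $k = 1$ only direct neighbors are returned, while larger $k$ reaches progressively more of the graph, mirroring the local-to-global behavior described above.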

There is another way to define graph embedding from the perspective of Encoder-Decoder architecture [ 3 ]. From this perspective, the task of the encoder part is to encode graph entities into low-dimensional vectors, and the decoder part tries to reconstruct the graph from the latent space. In the real world, many graphs show dynamic behaviors, including node and edge evolution, and feature evolution [ 80 ]. Dynamic graphs are found widely in many applications [ 81 ], such as social networks where connections between friends could be added or removed over time.

Definition (Dynamic graph [80]). A dynamic graph $G$ is formed of three entities, $G = (V, E, T)$, where $V = \{V(t)\}$ is the group of node sets, $E = \{E(t)\}$ with $t \in T$ is the group of edge sets over the time span $T$, and $T$ denotes the time span. From the static perspective, we can also consider a dynamic graph $G = \{G(t_0), G(t_1), \cdots, G(t_n)\}$ as a collection of static graphs $G(t_k)$, where $G(t_k) = (V(t_k), E(t_k))$ denotes the static graph at time $t_k$, and $V(t_k)$ and $E(t_k)$ denote the set of nodes and the set of edges at time $t_k$, respectively.

Figure 3a presents an example of dynamic graph representation. At time $t + 1$, the graph $G(t + 1)$ changes in several ways: the edge $e_{23}$ is removed, and a node $v_6$ and a new edge $e_{56}$ are added. Casteigts et al. [80] proposed an alternative definition of a dynamic graph with five components, $G = (V, E, T, \rho, \zeta)$, where $\rho : V \times T \to \{0, 1\}$ describes the existence of each node at time $t$, and $\zeta : E \times T \to \Psi$ describes the existence of an edge at time $t$.

Figure 3. Methods for modeling dynamic graphs over time. (a) The representation of a dynamic graph by a series of snapshots; (b) the evolution of edges and nodes in the dynamic graph from time $t$ to $t + 1$. In (a), the graph $G$ is the collection of snapshots $G(t)$ (i.e., $G = \{G(1), G(2), \cdots, G(t)\}$), where $t$ ranges over the time span, and the entities of $G$ change from time $t$ to $t + 1$. (b) depicts the evolution of edges in the same dynamic graph as in (a), in which each edge carries the series of time spans from $t$ to $t + 1$. At time $t$, the graph has five nodes $(v_1, v_2, v_3, v_4, v_5)$ and five edges $(e_{13}, e_{15}, e_{34}, e_{45}, e_{23})$. At time $t + 1$, however, the edge $e_{23}$ and node $v_2$ are removed, and a new node $v_6$ and a new edge $e_{56}$ are added to the graph.

A dynamic graph can also be modeled by the changes in graph entities (edges, nodes) that take place on the graph $G$ over a time span $t$, or by an edge stream. From this perspective, a dynamic graph $G$ can be modeled as $G = (V, E_t, T)$, where $E_t$ is the collection of edges of the dynamic graph $G$ at time $t$, together with a function $f : E \to \mathbb{R}^{+}$ that maps each edge to a positive number serving as its label. Note that all the edges at time $t$ have the same label. Figure 3b describes the evolution of the edges of a graph from time $t$ to $t + 1$.
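The two views of a dynamic graph discussed above (a series of snapshots and an edge stream) can be sketched as follows, using the edges of the example in Figure 3. The function names and the convention of deriving the node set from the edge set (so isolated nodes are not represented) are simplifying assumptions for illustration.

```python
def snapshot_diff(edges_t, edges_t1):
    """Return the (added, removed) edge sets between two consecutive snapshots."""
    return edges_t1 - edges_t, edges_t - edges_t1


def nodes_of(edges):
    """Nodes that appear in at least one edge (isolated nodes are ignored)."""
    return {v for e in edges for v in e}


def edge_stream(snapshots):
    """Map each edge to the list of time steps at which it exists."""
    stream = {}
    for t, edges in enumerate(snapshots):
        for e in sorted(edges):
            stream.setdefault(e, []).append(t)
    return stream


# Snapshots G(t) and G(t+1), encoded as sets of undirected edges (i, j) with i < j.
G_t = {(1, 3), (1, 5), (3, 4), (4, 5), (2, 3)}
G_t1 = {(1, 3), (1, 5), (3, 4), (4, 5), (5, 6)}

added, removed = snapshot_diff(G_t, G_t1)
stream = edge_stream([G_t, G_t1])  # time steps are indexed 0 (t) and 1 (t + 1)
```

The snapshot view stores each $G(t)$ in full, whereas the edge-stream view stores each edge once together with its time labels, which is usually more compact when few edges change between time steps.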

Definition (Dynamic graph embedding [82]). Given a dynamic graph $G = (V, E, T)$, where $V = \{V(t)\}$ is the group of node sets and $E = \{E(t)\}$ is the group of edge sets over the time span $T$, a dynamic graph embedding is a projection function $\phi(\cdot)$, where $\phi(\cdot) : G \times T \to \mathbb{R}^{d} \times T$. In the domain, $T$ is the time span; in the codomain, $T$ describes the time domain in the latent space. When $G$ is represented as a collection of snapshots, $G = \{G(t_0), G(t_1), \cdots, G(t_n)\}$, the projection function $\phi$ is defined as $\phi = \{\phi(0), \phi(1), \cdots, \phi(n)\}$, where $\phi(t)$ is the vector embedding of the graph $G(t)$ at time $t$.

There are two ways to embed a dynamic graph $G$: temporal dynamic graph embedding (changes over a period of time) and topological dynamic graph embedding (changes in the graph structure over time).

- Temporal dynamic graph embedding: A temporal dynamic graph embedding is a projection function $\phi(\cdot)$, where $\phi_t : G_{t-k,t} \times T \to \mathbb{R}^{d} \times T$ and $G_{t-k,t} = \{G_{t-k}, G_{t-k+1}, \cdots, G_t\}$ describes the collection of snapshots of the graph $G$ during the time interval $[t-k, t]$.

- Topological dynamic graph embedding: A topological dynamic graph embedding for the nodes of a graph $G$ is a mapping function $\phi$, where $\phi : V \times T \to \mathbb{R}^{d} \times T$.

3. Graph Representation Learning Models

This section presents a taxonomy of existing graph representation learning models in the literature. We categorize the existing graph embedding models into five main groups based on their strategies for preserving graph structures and the proximity of entities in graphs: graph kernels, matrix factorization-based models, shallow models, deep neural network models, and non-Euclidean models. Figure 4 presents the proposed taxonomy of the graph representation learning models. Furthermore, we list open-source implementations of graph embedding models in Appendix A.

Figure 4. The proposed taxonomy for graph representation learning models.

Graph kernels and matrix factorization-based models are among the pioneering models for graph representation learning. Graph kernels are prevalent in learning graph embeddings using a deterministic mapping function for graph classification tasks [83, 84, 85]. There are two types of graph kernels: kernels for graphs, which compare the similarity between graphs, and kernels on graphs, which find the similarity between nodes in a graph. Matrix factorization-based models, in turn, represent the graph as matrices and obtain embeddings by decomposing these matrices [5, 86]. There are several strategies for factorization modeling, and most of these models aim to approximate high-order proximity between nodes. However, graph kernels and matrix factorization-based models suffer from high computational complexity when handling large graphs and capturing high-order proximity.

Shallow models aim to construct an embedding matrix to transform each graph entity into vectors. We categorize shallow models into two main groups: structure preservation and proximity reconstruction. Structure-preservation strategies aim to conserve structural relationships between nodes in graphs [ 4 , 14 , 87 ]. Depending on specific tasks, several sampling strategies could be employed to capture graph structures, such as random walks [ 4 , 14 ], graphlets [ 88 ], motifs [ 89 , 90 , 91 ], etc. By contrast, the objective of the proximity reconstruction models is to preserve the proximity of nodes in graphs [ 16 , 92 ]. The proximity strategies can vary across different models based on their objectives. For example, the LINE model [ 16 ] aims to preserve 1st-order and 2nd-order proximity between nodes, while PALE [ 77 ] preserves pairwise similarities.

Graph neural networks (GNNs) have shown great performance in learning complex graph structures [18, 50]. GNNs can be categorized into three main groups: graph autoencoders [50, 51], recurrent GNNs (RGNNs) [17, 93], and convolutional GNNs (CGNNs). Graph autoencoders and RGNNs are mostly pioneering studies of GNNs, based on the autoencoder architecture and recurrent neural networks, respectively. Graph autoencoders are composed of an encoder layer and a decoder layer. The encoder layer compresses a proximity matrix of the graph into vector embeddings, and the decoder layer reconstructs the proximity matrix. Most graph autoencoder models employ multilayer perceptron-based layers or RGNNs as the core of the autoencoder architecture. RGNNs learn node embeddings based on a recurrent neural network architecture, in which connections between neurons can form a cycle. Therefore, earlier RGNNs mainly aimed to learn embeddings on directed acyclic graphs [94]. RGNNs employ the same weights in all hidden layers to capture local and global structures. Recently, CGNNs have proven much more efficient and can achieve outstanding performance compared to RGNNs. The main difference between RGNNs and CGNNs is that CGNNs use different weights in each hidden layer, which helps distinguish local and global structures. Various CGNN models have been proposed, and they mainly fall into two categories: spectral CGNNs and spatial CGNNs [22, 52, 95]. Spectral CGNNs transform graph data to the frequency domain and learn node embeddings in this domain [56, 96]. By contrast, spatial CGNNs work directly on the graph using convolutional filters [53, 54]. By stacking multiple GNN layers, the models can learn node embeddings more efficiently and capture higher-order structural information [97, 98]. However, stacking many layers can cause the over-smoothing problem, which most GNNs have not fully solved.

Recently, several models have adapted the transformer architecture to learn graph structures, achieving significant results compared to other deep-learning models [30, 46, 99]. We categorize graph transformer models into three main groups: transformers for tree-like graphs [64, 65], transformers with GNNs [99, 100], and transformers with global self-attention [30, 67]. Different types of graph transformer models aim to handle distinct types of graphs. Transformers for tree-like graphs learn node embeddings in tree-like hierarchical graphs [64, 65, 101]. The hierarchical relationships from the target nodes to their parents and neighbors are presented as absolute and relative positional encodings, respectively. Several graph transformer models employ the message-passing mechanism from GNNs as an auxiliary module in computing the attention score matrix [61, 100]. GNN layers can be used to aggregate information as input to graph transformer models, or they can be placed on top of the model to preserve local structures. In addition, some graph transformer models can directly process graph data without support from GNN layers [30, 67]. These models implement global self-attention to learn local and global structures in an input graph without neighborhood constraints.

Most existing graph embedding models aim to learn embeddings in Euclidean space, which may not deliver good geometric representations and metrics. Recent studies have shown that non-Euclidean spaces are more suitable for representing complex graph structures. The non-Euclidean models can be categorized as hyperbolic, spherical, and Gaussian. Hyperbolic and spherical spaces are two types of non-Euclidean geometry that can represent different graph structures. Hyperbolic space [102] is more suitable for representing hierarchical graph structures that follow the power law, while the strength of spherical space is in representing large circular graph structures [103]. Moreover, since the information about the embedding space is unknown and uncertain, several models aim at learning node embeddings as Gaussian distributions [23, 104].

3.1. Graph Kernels

Graph kernels aim to compare graphs or their substructures (e.g., nodes, subgraphs, and edges) by measuring their similarity [105]. The problem of measuring the similarity of graphs is, therefore, at the core of learning graphs in an unsupervised manner. Measuring the similarity of large graphs is problematic: the graph isomorphism problem belongs to the NP (nondeterministic polynomial time) class, and no polynomial-time algorithm is known for it, while the subgraph isomorphism problem is NP-complete. Table 2 summarizes graph kernel models.

Table 2. A summary of graph kernel models.

| Models | Graph Types | Tasks | Loss Function |
|---|---|---|---|
| [ ] | Static graphs | Graph comparison | |
| [ ] | Static graphs | Graph comparison | |
| [ ] | Static graphs | Graph classification | |
| [ ] | Static graphs | Graph classification | |
| [ ] | Static graphs | Graph classification | |
| [ ] | Static graphs | Graph classification | |
| [ ] | Static graphs | Graph comparison | |
| [ ] | Attributed graphs | Graph classification | |
| [ ] | Attributed graphs | Graph classification | |
| [ ] | Attributed graphs | Graph classification | |
| [ ] | Attributed graphs | Graph classification | |
| [ ] | Attributed graphs | Graph classification | |
| [ ] | Attributed graphs | Graph classification | |
| GraTFEL [ ] | Dynamic graphs | Graph reconstruction, Link prediction | |
| [ ] | Dynamic graphs | Link prediction | |
| [ ] | Dynamic graphs | Link prediction | |

Kernel methods applied to the graph embedding problem can be understood in two forms, including the isomorphism testing of N graphs (kernels for graphs) and embedding entities of graphs to Hilbert space (kernels on graphs).

- Kernels for graphs: Kernels for graphs aim to measure the similarity between graphs. The similarity between two graphs (isomorphism) can be explained as follows: Given two undirected graphs $G_1 = (V_1, E_1)$ and $G_2 = (V_2, E_2)$, $G_1$ and $G_2$ are isomorphic if there exists a bijective mapping function $\phi : V_1 \to V_2$ such that, for all $a, b \in V_1$, $a$ and $b$ are adjacent in $G_1$ if and only if $\phi(a)$ and $\phi(b)$ are adjacent in $G_2$.

- Kernels on graphs: To embed nodes in graphs, kernel methods find a function that maps pairs of nodes to a latent space using particular similarity measures. Formally, a graph kernel can be defined as follows: Given a graph $G = (V, E)$, a function $K : V \times V \to \mathbb{R}$ is a kernel on $G$ if there is a mapping function $\phi : V \to \mathcal{H}$ into a Hilbert space $\mathcal{H}$ such that $K(v_i, v_j) = \langle \phi(v_i), \phi(v_j) \rangle$ for any node pair $(v_i, v_j)$.
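The isomorphism condition for kernels for graphs can be checked directly on very small graphs. The sketch below is a brute-force test that tries every bijection between the two node sets; it runs in factorial time and is meant only to illustrate the definition, not to reflect how any surveyed kernel is computed. The function name and the toy graphs are assumptions.

```python
from itertools import permutations


def are_isomorphic(nodes1, edges1, nodes2, edges2):
    """Brute-force isomorphism test for small, simple, undirected graphs."""
    e1 = {frozenset(e) for e in edges1}
    e2 = {frozenset(e) for e in edges2}
    if len(nodes1) != len(nodes2) or len(e1) != len(e2):
        return False
    nodes1 = list(nodes1)
    for perm in permutations(nodes2):
        phi = dict(zip(nodes1, perm))  # candidate bijection V1 -> V2
        # With equal edge counts, mapping every edge of G1 onto an edge of G2
        # is equivalent to preserving adjacency in both directions.
        if all(frozenset(phi[v] for v in e) in e2 for e in e1):
            return True
    return False


# Toy graphs (assumed data): two relabeled 4-node paths and a 4-node star.
path_a = ([0, 1, 2, 3], [(0, 1), (1, 2), (2, 3)])
path_b = (["w", "x", "y", "z"], [("x", "w"), ("w", "z"), ("z", "y")])
star = ([0, 1, 2, 3], [(0, 1), (0, 2), (0, 3)])
```

The factorial number of candidate bijections is the reason practical graph kernels rely on cheaper structural summaries such as graphlet counts or WL labels.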

There are several strategies to measure the similarity of pairs of graphs, such as graphlet kernels, WL kernels, random walks, and shortest paths [31, 83]. Among the kernel methods, graphlet kernels are among the simplest: they measure the similarity between graphs by counting subgraphs of a limited size $k$ [83, 106]. For instance, Shervashidze et al. [83] introduced a graphlet kernel whose main idea is to build the graph feature by counting the number of different graphlets in graphs. Formally, given an unlabeled graph $G$, a graphlet list $V_k = \{G_1, G_2, \cdots, G_{n_k}\}$ is the set of graphlets of size $k$, where $n_k$ denotes the number of graphlets. The graphlet kernel for two unlabeled graphs $G$ and $G'$ can be defined as:

$$K(G, G') = \phi_G^{\top} \phi_{G'}, \tag{2}$$

where $\phi_G$ and $\phi_{G'}$ are vectors whose $i$-th entries count the occurrences of the graphlet $G_i$ in $G$ and $G'$, respectively. Counting all graphlets of size $k$ in a graph is expensive, since the enumeration takes on the order of $n^k$ steps, where $n$ denotes the number of nodes in $G$. One practical way to overcome this limitation is to design the feature $\phi_G$ more efficiently, as in the Weisfeiler–Lehman approach.
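A minimal sketch of a graphlet kernel restricted to size $k = 3$ is given below. For three nodes, the number of induced edges (0 to 3) uniquely identifies the graphlet type, which is the simplification this example relies on; larger $k$ would require a proper isomorphism-class lookup. The function names and toy graphs are assumptions for illustration.

```python
from itertools import combinations


def graphlet3_counts(nodes, edges):
    """Count induced 3-node subgraphs, indexed by their number of edges (0..3)."""
    edge_set = {frozenset(e) for e in edges}
    counts = [0, 0, 0, 0]
    for triple in combinations(nodes, 3):
        m = sum(1 for pair in combinations(triple, 2) if frozenset(pair) in edge_set)
        counts[m] += 1
    return counts


def graphlet_kernel(g1, g2):
    """Inner product of the two graphlet count vectors."""
    phi1, phi2 = graphlet3_counts(*g1), graphlet3_counts(*g2)
    return sum(a * b for a, b in zip(phi1, phi2))


# Toy graphs (assumed data): a triangle and a 4-node path.
triangle = ([0, 1, 2], [(0, 1), (1, 2), (0, 2)])
path4 = ([0, 1, 2, 3], [(0, 1), (1, 2), (2, 3)])
```

The loop over all node triples makes the cubic enumeration cost visible; for general $k$ the same exhaustive loop grows as $n^k$.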

The Weisfeiler–Lehman (WL) test [31] is a traditional strategy for testing the isomorphism of two graphs using color refinement. Figure 5 presents the main idea of the WL isomorphism test for two graphs in detail. By iteratively updating node labels, structural information can be stored at each node, covering both local and more global information, depending on the number of iterations. We can then compute histograms or other summary statistics over these labels as a vector representation for graphs.

Figure 5. The Weisfeiler–Lehman isomorphism test. (a) Original labels, $i = 0$; (b) relabeled labels, $i = 1$. The figure shows two iterations of WL relabeling for a graph with five nodes $v_1, v_2, v_3, v_4, v_5$. In (a), the labels of the five nodes are initialized. In (b), in the first iteration, the new label of each node is calculated from the labels of its adjacent nodes and then reassigned. For example, node $v_1$ is adjacent to node $v_2$ and node $v_3$; therefore, the new label of $v_1$ is calculated from $(v_1, v_2, v_3)$ and reassigned as the new label $v_6$. The same steps are repeated until the node labels reach a steady state.

Several models improved on the idea of the WL isomorphism test [34, 84]. The concept of the WL isomorphism test later inspired various GNN models, which aim to be as expressive as the WL test in distinguishing different graph structures. Shervashidze et al. [33] presented three instances of WL kernels, including the WL subtree kernel, the WL edge kernel, and the WL shortest-path kernel, with an enrichment strategy for labels. The key idea of [33] is to represent a graph $G$ as a WL sequence of height $h$. Given the WL sequences of two graphs $G$ and $G'$, the WL kernel can be defined as:

$$K_{WL}^{(h)}(G, G') = k(G_0, G_0') + k(G_1, G_1') + \cdots + k(G_h, G_h'), \tag{3}$$

where $k(G_i, G_i') = \langle \phi(G_i), \phi(G_i') \rangle$. For $N$ graphs, the WL subtree kernel can be computed in a runtime of $O(Nhm + N^2 hn)$, where $h$ and $m$ are the numbers of iterations and edges in $G$, respectively. Therefore, the algorithm can capture more information about the graph $G$ after $h$ iterations and compare graphs at different levels.
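The WL relabeling and the resulting subtree kernel can be sketched as follows. As a simplification, this example keeps each refined label as a nested tuple instead of compressing it to a new short label with a hash function, which keeps labels comparable across graphs without a shared dictionary. The function names, the uniform initial labels, and the toy graphs are assumptions for illustration.

```python
from collections import Counter


def wl_label_histograms(adj, labels, h):
    """Return h + 1 label histograms, one per WL iteration (0..h)."""
    current = dict(labels)
    histograms = [Counter(current.values())]
    for _ in range(h):
        refined = {}
        for v in adj:
            neighbor_labels = tuple(sorted(current[u] for u in adj[v]))
            # New label = own label plus the sorted multiset of neighbor labels.
            refined[v] = (current[v], neighbor_labels)
        current = refined
        histograms.append(Counter(current.values()))
    return histograms


def wl_subtree_kernel(adj1, labels1, adj2, labels2, h):
    """Sum over iterations of the inner product of the label histograms."""
    hists1 = wl_label_histograms(adj1, labels1, h)
    hists2 = wl_label_histograms(adj2, labels2, h)
    return sum(sum(c1[l] * c2[l] for l in c1) for c1, c2 in zip(hists1, hists2))


# Toy graphs (assumed data): a triangle and a 3-node path, all nodes labeled "a".
triangle = {0: [1, 2], 1: [0, 2], 2: [0, 1]}
path3 = {0: [1], 1: [0, 2], 2: [1]}
uniform = {0: "a", 1: "a", 2: "a"}
```

At iteration 0 the two toy graphs look identical because every node carries the same label; the first refinement separates the path endpoints (one neighbor) from nodes with two neighbors, which lowers the cross-graph kernel value relative to the self-similarities.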

However, the vanilla WL isomorphism test can still demand substantial resources on large graphs. Following the WL isomorphism idea, Morris et al. [34] considered the $k$-sets of nodes, $V(G)^k$, and built local and global neighborhoods of the $k$-sets. Instead of working on each node in a graph, the model calculates and updates labels based on the $k$-sets. The feature vector of a graph $G$ can then be calculated by counting the number of occurrences of $k$-set labels. Several models [84, 114] combined the WL isomorphism test with the Wasserstein distance, and the models could estimate the weights of subtree patterns before the kernel construction [35]. Several models adopted a random-walk sampling strategy to capture the graph structure, which helps reduce the computational complexity of handling large graphs [36, 37, 85, 107].

However, the above methods only focus on homogeneous graphs in which nodes do not have side information. In the real world, graph nodes can carry labels and attributes and can change over time, which makes it challenging to learn node embeddings. Several models have been proposed with slight variations on the traditional WL isomorphism test and random-walk methods [109, 110, 111, 112, 113]. For example, Borgwardt et al. [109] presented random-walk sampling on attributed edges to capture the graph structure. Since existing kernel models primarily work on small-scale graphs or a subset of graphs, measuring similarity based on shortest paths can make graph kernels computable in polynomial time. An all-paths kernel $K$ can be defined as:

$$K(G_1, G_2) = \sum_{p_1 \in P(G_1)} \sum_{p_2 \in P(G_2)} k_{path}(p_1, p_2), \tag{4}$$

where $P(G_1)$ and $P(G_2)$ are the sets of random-walk paths in $G_1$ and $G_2$, respectively, and $k_{path}(p_1, p_2)$ denotes a positive definite kernel on two paths $p_1$ and $p_2$. The model then applied the Floyd–Warshall algorithm [115] to find $k$ shortest-path kernels in graphs. One of the disadvantages of this model is the runtime complexity, which is about $O(k \times n^4)$, where $n$ denotes the number of nodes in the graph. Morris et al. [108] introduced a variation of the WL subtree kernel for attributed graphs by improving existing shortest-path kernels. The key idea of this model is to use a hash function that maps continuous attributes to label codes and then to normalize the discrete label codes.
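The shortest-path variant can be sketched with the Floyd–Warshall algorithm followed by a comparison of shortest-path lengths. As an assumption made for brevity, the path kernel here is a delta kernel (two shortest paths match only when their lengths are equal), so the double sum over path pairs reduces to an inner product of length histograms; this is cheaper than the general pairwise comparison described above. Function names and toy graphs are illustrative.

```python
from collections import Counter

INF = float("inf")


def floyd_warshall(n, edges):
    """All-pairs shortest-path lengths for an undirected weighted graph."""
    dist = [[0 if i == j else INF for j in range(n)] for i in range(n)]
    for i, j, w in edges:
        dist[i][j] = min(dist[i][j], w)
        dist[j][i] = min(dist[j][i], w)
    for k in range(n):
        for i in range(n):
            for j in range(n):
                if dist[i][k] + dist[k][j] < dist[i][j]:
                    dist[i][j] = dist[i][k] + dist[k][j]
    return dist


def shortest_path_histogram(n, edges):
    """Histogram of finite shortest-path lengths over unordered node pairs."""
    dist = floyd_warshall(n, edges)
    return Counter(dist[i][j] for i in range(n) for j in range(i + 1, n)
                   if dist[i][j] < INF)


def shortest_path_kernel(g1, g2):
    """Delta kernel on path lengths: count pairs of equally long shortest paths."""
    h1, h2 = shortest_path_histogram(*g1), shortest_path_histogram(*g2)
    return sum(h1[d] * h2[d] for d in h1)


# Toy graphs (assumed data) with unit edge weights.
path3 = (3, [(0, 1, 1), (1, 2, 1)])
triangle = (3, [(0, 1, 1), (1, 2, 1), (0, 2, 1)])
```

The triple loop in `floyd_warshall` costs $O(n^3)$ per graph; a general (non-delta) path kernel would additionally need to compare every pair of shortest paths across the two graphs.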

To sum up, graph kernels are effective models and bring several advantages:

- Coverage: The graph kernels are one of the most useful functions to measure the similarity between graph entities by performing several strategies to find a kernel in graphs. This could be seen as a generalization of the traditional statistical methods [ 116 ].

- Efficiency: Several kernel tricks have been proposed to reduce the computational cost of kernel methods on graphs [ 117 ]. Kernel tricks could reduce the number of spatial dimensions and computational complexity on substructures while still providing efficient kernels.

Although kernel methods have several advantages, several disadvantages make the kernels difficult to scale:

- Missing entities: Most kernel models could not learn node embeddings for new nodes. In the real world, graphs are dynamic, and their entities could evolve. Therefore, the graph kernels must re-learn graphs every time a new node is added, which is time-consuming and difficult to apply in practice.

- Dealing with weights: Most graph kernel models do not consider weighted edges, which can lead to a loss of structural information. This can reduce the expressiveness of the graph representation in the latent space.

- Computational complexity: Computing some graph kernels, such as the all-paths kernel, is NP-hard [109]. Although several kernel-based models aim to reduce the computational time by considering the distribution of substructures, this may increase the complexity and reduce the ability to capture the global structure.

Although graph kernels deliver good results on small graphs, they remain limited when working with large and complex graphs [118]. To address this issue, matrix factorization-based models can bring more advantages to learning node embeddings by decomposing large original graphs into small-sized components. Therefore, we discuss matrix factorization-based models for learning node embeddings in the next section.

3.2. Matrix Factorization-Based Models

Matrix factorization aims to reduce the high-dimensional matrix that describes a graph (e.g., the adjacency matrix or the Laplacian matrix) into a low-dimensional space. Several well-known decomposition models (e.g., SVD and PCA) are widely applied in graph representation learning and recommender systems. Table 3 and Table 4 present matrix factorization-based models for static and dynamic graphs, respectively. Based on the strategy used to preserve graph structures, matrix factorization models can be categorized into two main groups: Laplacian eigenmaps and node proximity matrix factorization.

- The Laplacian eigenmaps: To learn representations of a graph $G = (V, E)$, these approaches first represent $G$ as a Laplacian matrix $L$, where $L = D - A$ and $D$ is the degree matrix [41]. In the matrix $L$, the diagonal entries are the node degrees, and the off-diagonal entries are the negated edge weights. The matrix $L$ is decomposed, and the eigenvectors associated with its smallest eigenvalues are taken as node embeddings. The optimal node embedding $Z^{*}$ can therefore be computed using the objective function:

  $$Z^{*} = \arg\min_{Z} Z^{\top} L Z. \tag{5}$$

- Node proximity matrix factorization: The objective of these models is to decompose the node proximity matrix into small-sized matrices directly, so that the proximity of nodes in the graph is preserved in the latent space. Formally, given a proximity matrix $M$, the models minimize the reconstruction error for each pair of nodes $v_i$ and $v_j$, which can be defined as:

  $$Z^{*} = \arg\min_{Z} \left\| M_{ij} - Z_i Z_j^{\top} \right\|. \tag{6}$$
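To see why minimizing $Z^{\top} L Z$ keeps connected nodes close, note that for a symmetric adjacency matrix the quadratic form equals the sum of $A_{ij}(z_i - z_j)^2$ over all edges. The sketch below checks this identity numerically for a one-dimensional embedding; the function names, the toy graph, and the two candidate embeddings are assumptions for illustration.

```python
def laplacian(A):
    """Graph Laplacian L = D - A for a symmetric adjacency matrix A."""
    n = len(A)
    return [[(sum(A[i]) if i == j else 0) - A[i][j] for j in range(n)]
            for i in range(n)]


def quadratic_form(L, z):
    """Compute z^T L z for a one-dimensional embedding z."""
    n = len(z)
    return sum(z[i] * L[i][j] * z[j] for i in range(n) for j in range(n))


def edge_penalty(A, z):
    """Sum over undirected edges of A_ij * (z_i - z_j)^2."""
    n = len(z)
    return sum(A[i][j] * (z[i] - z[j]) ** 2
               for i in range(n) for j in range(i + 1, n))


# Toy graph (assumed data): triangle 0-1-2 with a pendant node 3 attached to node 2.
A = [[0, 1, 1, 0],
     [1, 0, 1, 0],
     [1, 1, 0, 1],
     [0, 0, 1, 0]]
L = laplacian(A)
z_smooth = [1, 1, 1, 2]  # connected nodes mostly share a value
z_rough = [1, 2, 1, 2]   # values differ across several edges
```

The smoother embedding incurs the smaller penalty, which is the behavior the Laplacian eigenmap objective rewards. In practice a scale constraint on $Z$ is also needed to rule out the trivial constant solution.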

Hofmann et al. [119] proposed an MSDC (Multidimensional Scaling and Data Clustering) model based on matrix factorization. The key idea of MSDC is to represent data points as a bipartite graph and then learn node embeddings based on node similarity in the graph. This method requires a symmetric proximity matrix $M \in \mathbb{R}^{N \times N}$ as input and learns a latent representation of the data in Euclidean space by minimizing the loss that could be defined as:

However, a limitation of the MSDC model is that it focuses only on pairs of nodes and therefore cannot capture the global graph structure. Furthermore, the model examines the proximity of all the data points in the graph, which increases computational complexity when working on large graphs. Several models [39, 120] adopted $k$-nearest neighbor methods to search for neighbor nodes, which can capture more of the graph structure. The $k$-nearest neighbor methods also reduce computational complexity, since the models only take $k$ neighbors as inputs. For example, Han et al. [120] proposed the similarity $S_{ij}$ between two nodes $v_i$ and $v_j$ as:

where $N_k(v_i)$ denotes the set of $k$ nearest neighbors of $v_i$ in the graph. The model can measure the violation of the constraints between pairs of nodes with respect to the label distribution. In addition, the model can estimate the correlation between features, which is beneficial for combining common features during the training process.

Several models [7, 40, 120, 121, 122] have been proposed to capture side information in graphs, such as attributes and labels. He et al. [42] used the locality-preserving projection (LPP) technique, a linear approximation of the nonlinear Laplacian Eigenmap, to preserve the local structural information in graphs. The model first constructs an adjacency matrix from the $k$ nearest neighbors of each node. The model then computes the objective function as:

where $D$ is a diagonal matrix, $L = D - A$ is the Laplacian matrix, and $a$ is the transformation vector in the linear embedding $x_i \to y_i = a^{\top} x_i$. Nevertheless, the idea from [42] only captures the structure within the $k$ nearest neighbors and thus fails to capture the global similarity between nodes in the graph. Motivated by these limitations, Cao et al. [15] introduced the GraRep model, which considers a $k$-hop neighborhood of each target node. Accordingly, GraRep can capture global structural information in graphs. The model works with the $k$-order probability transition matrix (proximity matrix) $M^{k}$, which can be defined as:

$$M^{k} = \underbrace{M \cdots M}_{k}, \tag{11}$$

where $M = D^{-1} A$, $D$ is the degree matrix, $A$ is the adjacency matrix, and $M_{ij}^{k}$ represents the $k$-step transition probability from node $v_i$ to $v_j$. The loss function, thus, is the sum of $k$ transition loss functions:

To construct the vector embeddings, GraRep decomposes the transition matrix into small-sized matrices using SVD matrix factorization. Similarly, Li [123] introduced NECS (Learning network embedding with the community) to capture the high-order proximity using Equation (11).
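The $k$-step transition matrix used by GraRep can be computed by row-normalizing the adjacency matrix and multiplying the result by itself. The sketch below shows only this proximity-matrix step in plain Python; it does not include the loss or the SVD factorization. The function names and the toy graph are assumptions, and the code assumes that no node is isolated (every row sum is nonzero).

```python
def transition_matrix(A):
    """Row-normalize A to obtain M = D^{-1} A (assumes no isolated nodes)."""
    return [[a / sum(row) for a in row] for row in A]


def matmul(X, Y):
    """Plain dense matrix product of two list-of-lists matrices."""
    return [[sum(X[i][k] * Y[k][j] for k in range(len(Y)))
             for j in range(len(Y[0]))] for i in range(len(X))]


def k_step_transition(A, k):
    """Return M^k, whose (i, j) entry is the k-step transition probability."""
    M = transition_matrix(A)
    Mk = M
    for _ in range(k - 1):
        Mk = matmul(Mk, M)
    return Mk


# Toy graph (assumed data): the 3-node path 0-1-2.
A = [[0, 1, 0],
     [1, 0, 1],
     [0, 1, 0]]
M1 = k_step_transition(A, 1)
M2 = k_step_transition(A, 2)
```

Each row of $M^{k}$ remains a probability distribution, so the entries can be read directly as the chance that a random walk started at $v_i$ is at $v_j$ after $k$ steps.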

Table 3. A summary of matrix factorization-based models for static graphs. $C$ indicates the number of clusters in graphs, $\mathcal{N}(Z_i \mid \mu_c, \Sigma_c)$ refers to the multivariate Gaussian distribution for each cluster, $L$ means the Laplacian matrix, $H \in \mathbb{R}^{n \times k}$ is the probability matrix that a node belongs to a cluster, $U$ denotes the coefficient vector, and $W_{ij}$ is the weight on $(v_i, v_j)$.

| Models | Graph Types | Tasks | Loss Function |
|---|---|---|---|
| SLE [ ] | Static graphs | Node classification | |
| [ ] | Attributed graphs | Node classification | |
| [ ] | Attributed graphs | Community detection | |
| LPP [ ] | Attributed graphs | Node classification | |
| [ ] | Attributed graphs | Graph reconstruction | |
| [ ] | Static graphs | Node clustering | |
| GLEE [ ] | Attributed graphs | Graph reconstruction, Link prediction | |
| LPP [ ] | Static graphs | Node classification | |
| GraRep [ ] | Static graphs | Node classification, Node clustering | |
| NECS [ ] | Static graphs | Graph reconstruction, Link prediction, Node classification | |
| HOPE [ ] | Static graphs | Graph reconstruction, Link prediction, Node classification | |
| [ ] | Static graphs | Link prediction | |
| AROPE [ ] | Static graphs | Graph reconstruction, Link prediction, Node classification | |
| ProNE [ ] | Static graphs | Node classification | |
| ATP [ ] | Static graphs | Link prediction | |
| [ ] | Static graphs | Graph partition | |
| NRL-MF [ ] | Static graphs | Node classification | |

Focusing on node proximity derived from neighbor relations, Ou et al. [5] presented HOPE, an approach for preserving structural information in graphs using $k$-order proximity. In contrast to GraRep, HOPE addresses the asymmetric transitivity problem in directed graphs by approximating high-order proximity. The objective function to be minimized for the proximity approximation could be defined as:

where $M$ is the high-order proximity matrix (for instance, $M_{ij}$ represents the proximity of two nodes $v_i$ and $v_j$), and $Z_i$ and $Z_j$ denote the vector embeddings of $v_i$ and $v_j$, respectively. The proximity matrix $M$ can be decomposed into two matrices, $M = M_g^{-1} \cdot M_l$. Several common criteria could measure the node proximity, such as the Katz Index [127], Rooted PageRank [128], Adamic-Adar [129], and Common Neighbors. Coskun and Mustafa [124] suggested changes in the proximity measure formulas of the HOPE model. For nodes with a small degree, singular values could be zero after measuring the node proximity. Therefore, to solve this problem, they added a parameter $\sigma$ to regularize the graph Laplacian.
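As an illustration of the decomposition $M = M_g^{-1} \cdot M_l$, the sketch below uses the Katz Index, for which $M_g = I - \beta A$ and $M_l = \beta A$, and then factorizes $M$ with a plain SVD to obtain separate source and target embeddings. This is a small-scale sketch under stated assumptions: the names `katz_proximity` and `asymmetric_embeddings`, the decay value `beta=0.1`, and the toy directed graph are illustrative, and the explicit construction of $M$ is feasible only for small graphs.

```python
import numpy as np

def katz_proximity(adj, beta):
    """Katz index written as M = M_g^{-1} M_l, with M_g = I - beta*A and M_l = beta*A."""
    adj = np.asarray(adj, dtype=float)
    m_g = np.eye(adj.shape[0]) - beta * adj
    m_l = beta * adj
    # Solve M_g M = M_l instead of forming the inverse explicitly.
    return np.linalg.solve(m_g, m_l)

def asymmetric_embeddings(proximity, dim):
    """Source/target embeddings whose inner products approximate the proximity matrix."""
    u, s, vt = np.linalg.svd(proximity)
    scale = np.sqrt(s[:dim])
    return u[:, :dim] * scale, vt[:dim].T * scale

# Toy directed graph with edges 0->1, 0->2, 1->2, 2->0 (hypothetical data).
A = np.array([[0, 1, 1],
              [0, 0, 1],
              [1, 0, 0]])
M = katz_proximity(A, beta=0.1)   # beta must be below 1 / spectral radius of A
Z_source, Z_target = asymmetric_embeddings(M, dim=3)

print(M[0, 1] != M[1, 0])                    # proximity is asymmetric
print(np.allclose(Z_source @ Z_target.T, M)) # full rank reproduces M
```

Keeping two embedding matrices is what allows the inner product to differ between the directions $v_i \rightarrow v_j$ and $v_j \rightarrow v_i$.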

A few models have been proposed with the same idea as HOPE and GraRep [43, 86]. For example, the ProNE model [43] uses a $k$-term Chebyshev expansion to avoid eigendecomposition, instead of the $k$-order proximity used in HOPE. Sun et al. [6] introduced a similar approach for preserving asymmetric transitivity with high-order proximity. However, the significant difference is that they proposed a strategy to break directed acyclic graphs while preserving the graph structure. Non-negative matrix factorization could then be applied to produce an embedding matrix. Several models [125, 130, 131] mainly focused on the pointwise mutual information (PMI) of nodes in graphs, which calculates the connection between nodes in terms of linear and nonlinear independence. Equation (5) is used to learn node embeddings.

Several models aimed to reduce the computational complexity of matrix factorization by improving the sampling strategies [126, 132, 133]. For instance, the key idea of the NRL-MF model [126] is to use a hashing function to speed up the computation of dot products. Each node is represented as a binarized vector by a hashing function, so that similarities can be computed quickly with XOR operations. The model could learn the binary and quantized codes based on matrix factorization and preserve high-order proximity. Jiezhong [133] targeted sparse matrix factorization. They implemented random-walk sampling on graphs to construct a NetMF Matrix Sparsifier. The RNP model [132] explored in-depth vector embeddings based on personalized PageRank (PPR) values and then approximated the PPR matrices.
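To make the XOR remark concrete, the sketch below packs the sign pattern of a real-valued vector into an integer code and recovers the dot product of the corresponding $\{-1, +1\}$ vectors from the Hamming distance. Sign-based binarization is an assumption chosen for illustration; the hashing function actually used by NRL-MF is not described here, and the helper names are hypothetical.

```python
def binarize(vector):
    """Pack the sign pattern of a real vector into an int (bit i set when vector[i] > 0)."""
    code = 0
    for i, value in enumerate(vector):
        if value > 0:
            code |= 1 << i
    return code

def hamming_distance(code_a, code_b):
    """Number of differing bits, obtained from one XOR followed by a bit count."""
    return bin(code_a ^ code_b).count("1")

def binary_dot(code_a, code_b, n_bits):
    """Dot product of the underlying {-1, +1} vectors: n_bits - 2 * Hamming distance."""
    return n_bits - 2 * hamming_distance(code_a, code_b)

a = binarize([0.3, -1.2, 0.8, 0.1])   # signs: +, -, +, +
b = binarize([0.5, 0.4, -0.7, 0.2])   # signs: +, +, -, +
print(hamming_distance(a, b))          # 2
print(binary_dot(a, b, n_bits=4))      # 0
```

Because the comparison reduces to a single XOR and a bit count per pair, it avoids floating-point multiplications entirely.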

A summary of matrix factorization-based models for heterogeneous graphs and dynamic graphs. $H \in \mathbb{R}^{n \times k}$ is the probability matrix that a node belongs to a cluster, $E^{(t)}$ is the edge matrix with type $t$, $W_{ij}$ is the weight on $(v_i, v_j)$, $r$ denotes the relation type, and $E^{(1,2)}$ is the set of edges in two component graphs $G_1$ and $G_2$.

| Models | Graph Types | Tasks | Loss Function |
|---|---|---|---|
| DBMM [ ] | Dynamic graphs | Node classification, Node clustering | |
| [ ] | Dynamic graphs | Link prediction | |
| [ ] | Dynamic graphs | Link prediction | |
| LIST [ ] | Dynamic graphs | Link prediction | |
| TADW [ ] | Attributed graphs | Node classification | |
| PME [ ] | Heterogeneous graphs | Link prediction | |
| EOE [ ] | Heterogeneous graphs | Node classification | |
| [ ] | Heterogeneous graphs | Link prediction | |
| ASPEM [ ] | Heterogeneous graphs | Node classification, Link prediction | |
| MELL [ ] | Heterogeneous graphs | Link prediction | |
| PLE [ ] | Attributed graphs | Node classification | |

In the real world, many graphs contain attributes for nodes and edges, such as user profiles on a social network. These attributes provide helpful information for improving node representations and learning node embeddings. Yang et al. [131] proposed the TADW model by representing the DeepWalk model as a matrix factorization and integrating text features into the factorization model. Ren et al. [142] introduced the PLE model to jointly learn different types of nodes and edges with text attributes. Since existing models often ignore label noise, PLE is the first work to investigate noisy type labels by measuring the similarity between entities and type labels.

Beyond static and homogeneous graphs, several models have been proposed to learn embeddings in dynamic and heterogeneous graphs. The embedding models for dynamic graphs are essentially the same as for static graphs, including Laplacian eigenmaps methods and node proximity matrix factorization to model relations in dynamic graphs over time. For Laplacian eigenmaps methods, Li et al. [81] presented the DANE (Dynamic Attributed Network Embedding) model to learn node embeddings in dynamic graphs. The main idea of the DANE model is to represent the Laplacian matrix as $L_A^{(t)} = D_A^{(t)} - A^{(t)}$, where $A^{(t)} \in \mathbb{R}^{n \times n}$ is the adjacency matrix of the dynamic graph at time $t$ and $D_A^{(t)}$ is the diagonal matrix, so that the model can learn node embeddings over time in an online manner. To preserve the node proximity, the DANE model aimed to minimize the loss function:

The eigenvalues $\lambda$ and the eigenvectors of the Laplacian matrix can be calculated by solving the generalized eigenproblem $L_A^{(t)} a = \lambda D_A^{(t)} a$, where $a = \langle a_0, a_1, \cdots, a_N \rangle$ denotes the eigenvectors.

Several models apply node proximity matrix factorization directly to dynamic graphs by updating the proximity matrix between entities in the dynamic graphs. Rossi et al. [134] represented dynamic graphs as a set of static graph snapshots: $G = \{G(t_0), G(t_1), \cdots, G(t_N)\}$. The model then learned a transition proximity matrix $T$, which describes all transitions in the dynamic graphs. For evaluation, they predicted the graph at time $t+1$ as $\hat{G}_{t+1} = G_t T_{t+1}$ and then estimated the error using the Frobenius norm $\lVert \hat{G}_{t+1} - G_{t+1} \rVert_F$. Zhu et al. [135, 137] aimed to preserve the graph structure based on temporal matrix factorization during the network evolution. Given an adjacency matrix $A(t)$ at time $t$, it is factorized into two temporal rank-$k$ matrices $U$ and $V(t)$ as $A(t) = f(U V(t)^{\top})$, and the objective is to minimize the loss function $L_A$, which could be defined as:
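For a single snapshot, the generalized eigenproblem $L a = \lambda D a$ can be solved by reducing it to a standard symmetric eigenproblem, as in the sketch below. It covers only the static solve for one time step and does not show any online update; the function name `laplacian_eigenmaps` and the toy graph are assumptions made for illustration.

```python
import numpy as np

def laplacian_eigenmaps(adj, dim):
    """Solve L a = lambda D a and return the `dim` smallest non-trivial eigenpairs."""
    adj = np.asarray(adj, dtype=float)
    deg = adj.sum(axis=1)
    lap = np.diag(deg) - adj                      # L = D - A
    d_inv_sqrt = 1.0 / np.sqrt(deg)               # assumes no isolated nodes
    # Symmetric form: D^{-1/2} L D^{-1/2} b = lambda b, with a = D^{-1/2} b.
    sym = d_inv_sqrt[:, None] * lap * d_inv_sqrt[None, :]
    eigvals, eigvecs = np.linalg.eigh(sym)        # eigenvalues in ascending order
    vectors = d_inv_sqrt[:, None] * eigvecs
    # Skip the trivial pair (lambda = 0, constant vector) of a connected graph.
    return eigvals[1:dim + 1], vectors[:, 1:dim + 1]

# Toy 4-node path graph 0-1-2-3 (hypothetical example data).
A = np.array([[0, 1, 0, 0],
              [1, 0, 1, 0],
              [0, 1, 0, 1],
              [0, 0, 1, 0]], dtype=float)
lams, Y = laplacian_eigenmaps(A, dim=2)
print(Y.shape)  # (4, 2): one 2-dimensional embedding per node
```

Each column of `Y` satisfies $L a = \lambda D a$ with the matching entry of `lams`, and the rows of `Y` serve as the node embeddings.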

Matrix factorization models have been successfully applied to graph embedding, mainly for the node embedding problem. Most models are based on singular value decomposition to find eigenvectors in the latent space. There are several advantages of matrix factorization-based models:

- Training data requirement: Matrix factorization-based models do not need much data to learn embeddings. Compared to other methods, such as neural network-based models, they are advantageous when little training data is available.

- Coverage: Since the graphs are represented as a Laplacian matrix $L$ or a transition matrix $M$, the models could capture all the proximity of the nodes in the graphs. The connection between every pair of nodes is observed at least once in the matrix, which enables the models to handle sparse graphs.

Although matrix factorization is widely used in graph embedding problems, it still has several limitations:

- Computational complexity: Matrix factorization suffers from high time and memory complexity for large graphs with millions of nodes. The main reason is the time it takes to decompose the matrix into a product of small-sized matrices [15].

- Missing values: Models based on matrix factorization cannot handle incomplete graphs with unseen and missing values [143, 144]. When the graph data are insufficient, matrix factorization-based models cannot learn generalized vector embeddings. Therefore, we need neural network models that can generalize over graphs and better predict entities in graphs.

3.3. Shallow Models

This section focuses on shallow models for mapping graph entities into vector space. These models mainly aim to map nodes, edges, and subgraphs as low-dimensional vectors while preserving the graph structure and entity proximity. Typically, the models first implement a sampling technique to capture graph structure and proximity relation and then learn embeddings based on shallow neural network algorithms. Several sampling strategies could be taken to capture the local and global information in graphs [ 14 , 145 , 146 ]. Based on the sampling strategy, we divide shallow models into two main groups: structure preservation and proximity reconstruction.

Node sampling techniques. (a) $k$-hop sampling; (b) random-walk sampling. $v_s$ and $v_t$ denote the source node and the target node in the graph, respectively. In (a), the $k$-hop proximity sampling strategy begins from the source node $v_s$; the green nodes are considered to be the 1st-hop proximity of $v_s$, and the blue and black nodes are considered its 2nd-hop and 3rd-hop proximity, respectively. In (b), the random-walk sampling strategy takes a random walk (red arrow) from the source node $v_s$ to the target node $v_t$.

- Proximity reconstruction: It refers to preserving a $k$-hop relationship between nodes in graphs. The relation between neighboring nodes within the $k$-hop distance should be preserved in the latent space. For instance, Figure 6a presents a 3-hop proximity from the source node $v_s$.
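The $k$-hop proximity of a source node can be collected with a breadth-first traversal that records the hop at which each node is first reached. The sketch below is illustrative only; the adjacency-list representation, the function name `k_hop_neighbors`, and the toy graph are assumptions.

```python
from collections import deque

def k_hop_neighbors(adjacency, source, k):
    """Return {hop: set of nodes first reached at that hop} for hops 1..k."""
    distance = {source: 0}
    queue = deque([source])
    hops = {h: set() for h in range(1, k + 1)}
    while queue:
        node = queue.popleft()
        if distance[node] == k:
            continue                      # do not expand beyond k hops
        for neighbor in adjacency[node]:
            if neighbor not in distance:  # first visit gives the shortest hop count
                distance[neighbor] = distance[node] + 1
                hops[distance[neighbor]].add(neighbor)
                queue.append(neighbor)
    return hops

# Toy undirected graph stored as adjacency lists (hypothetical data).
graph = {
    "s": ["a", "b"],
    "a": ["s", "c"],
    "b": ["s", "c"],
    "c": ["a", "b", "t"],
    "t": ["c"],
}
for hop, nodes in k_hop_neighbors(graph, "s", k=3).items():
    print(hop, sorted(nodes))
# 1 ['a', 'b']
# 2 ['c']
# 3 ['t']
```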

In general, shallow models have achieved many successes in the past decade [ 4 , 14 , 21 ]. However, there are several disadvantages of shallow models:

- Unseen nodes: When a new node is added to a graph, shallow models cannot directly learn an embedding for it. To obtain embeddings for new nodes, the models must update the sampled patterns, for example, by re-executing random-walk sampling to generate new paths for the new nodes, and then be re-trained. These re-sampling and re-training procedures make shallow models impractical for graphs that change frequently.

- Node features: Shallow models such as DeepWalk and Node2Vec work mainly on homogeneous graphs and ignore information about the attributes/labels of nodes. However, in the real world, many graphs have attributes and labels that could be informative for graph representation learning. Only a few studies have investigated the attributes and labels of nodes and edges. However, the limitations of domain knowledge when working with heterogeneous and dynamic graphs have made the models inefficient and increased the computational complexity.

- Parameter sharing: One of the problems of shallow models is that these models cannot share the parameters during the training process. From the statistical perspective, parameter sharing could reduce the computational time and the number of weight updates during the training process.

3.3.1. Structure-Preservation Models

Choosing a strategy to capture the graph structure is essential for shallow models to learn vector embeddings. The graph structure can be sampled through connections between nodes in graphs or substructures (e.g., subgraphs, motifs, graphlets, roles, etc.). Table 5 briefly summarizes structure-preservation models for static and homogeneous graphs.

Over the last decade, various models have been proposed to capture the graph structure and learn embeddings [4, 21, 147, 148]. Among those models, random-walk-based strategies could be considered one of the most typical strategies to sample the graph structures [4, 14]. The main idea of the random-walk strategy is to gather information about the graph structure by generating paths that can be treated as sentences in documents. A random walk could be defined as:

(Random walk [14]). Given a graph $G = (V, E)$, where $V$ is the set of nodes and $E$ is the set of edges, a random walk with length $l$ is a process starting at a node $v_i \in V$ and moving to one of its neighbors at each time step. This step is repeated until the length $l$ is reached.
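The definition above can be sketched as a uniform random walk over adjacency lists. In this sketch the length $l$ is interpreted as the number of nodes in the walk, which is an assumption, as are the function name `random_walk` and the toy graph.

```python
import random

def random_walk(adjacency, start, length, rng=None):
    """Uniform random walk containing at most `length` nodes, starting at `start`."""
    if rng is None:
        rng = random.Random()
    walk = [start]
    while len(walk) < length:
        neighbors = adjacency[walk[-1]]
        if not neighbors:                 # dead end: stop early
            break
        walk.append(rng.choice(neighbors))
    return walk

# Toy undirected graph stored as adjacency lists (hypothetical data).
graph = {
    "s": ["a", "b"],
    "a": ["s", "c"],
    "b": ["s", "c"],
    "c": ["a", "b", "t"],
    "t": ["c"],
}
walk = random_walk(graph, "s", length=5, rng=random.Random(0))
print(walk)  # a list of 5 nodes beginning with "s"
```

Repeating the walk several times from every node yields the corpus of node sequences that is later treated as sentences.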

Two models, DeepWalk [14] and Node2Vec [4], could be considered pioneering models that opened a new direction for learning node embeddings.

Motivated by the disadvantages of the matrix factorization-based models, the DeepWalk model preserves node neighborhoods based on random-walk sampling, which could capture global information in graphs. Moreover, both DeepWalk and Node2Vec aim to maximize the probability of observing node neighbors by stochastic gradient descent on a single-layer neural network. Therefore, these models reduce running time and computational complexity. DeepWalk [14] is a simple node embedding model that uses the random-walk sampling strategy to generate node sequences and treats them as word sentences. The objective of DeepWalk is to maximize the probability of the set of neighbor nodes $N(v_i)$ given a target node $v_i$. Formally, the optimization problem could be defined as:

where $v_i$ denotes the target node, $N(v_i)$ is the set of neighbors of $v_i$, which could be generated from random-walk sampling, and $\phi(v_i)$ is the mapping function $\phi: v_i \in V \rightarrow \mathbb{R}^{|V| \times d}$. The model uses two strategies for finding neighbors given a source node, based on the Breadth-First Search (BFS) and Depth-First Search (DFS) strategies. The BFS strategy aims to represent a microscopic view that captures the local structure. In contrast, the DFS strategy delivers the global structure information in graphs. DeepWalk then uses a skip-gram model and stochastic gradient descent (SGD) to learn latent representations.
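To illustrate how a sampled walk becomes training data for a skip-gram model, the sketch below extracts (target, context) pairs within a fixed window, so that the context nodes play the role of $N(v_i)$. The window size, the function name `skipgram_pairs`, and the example walk are assumptions; the training of the embedding itself is not shown.

```python
def skipgram_pairs(walk, window):
    """List (target, context) pairs for nodes at most `window` steps apart in the walk."""
    pairs = []
    for i, target in enumerate(walk):
        lo = max(0, i - window)
        hi = min(len(walk), i + window + 1)
        for j in range(lo, hi):
            if j != i:                    # a node is not its own context
                pairs.append((target, walk[j]))
    return pairs

walk = ["a", "b", "c", "d"]               # hypothetical random walk
print(skipgram_pairs(walk, window=1))
# [('a', 'b'), ('b', 'a'), ('b', 'c'), ('c', 'b'), ('c', 'd'), ('d', 'c')]
```

A larger window lets nodes that are farther apart along the walk count as neighbors, at the cost of more training pairs.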

A summary of structure-preservation models for homogeneous and static graphs. $K$ indicates the number of clusters in the graph, and $\mu_k$ refers to the mean value of cluster $k$.

| Models | Graph Types | Tasks | Loss Function |
|---|---|---|---|
| DeepWalk [ ] | Static graphs | Node classification | |
| Node2Vec [ ] | Static graphs | Node classification, Link prediction | |
| WalkLets [ ] | Static graphs | Node classification | |
| Div2Vec [ ] | Static graphs | Link prediction | |
| | Static graphs | Node classification | |