Understanding P-values | Definition and Examples

Published on July 16, 2020 by Rebecca Bevans. Revised on June 22, 2023.

The p value is a number, calculated from a statistical test, that describes how likely you are to have found a particular set of observations if the null hypothesis were true.

P values are used in hypothesis testing to help decide whether to reject the null hypothesis. The smaller the p value, the more likely you are to reject the null hypothesis.

Table of contents

- What is a null hypothesis?

- What exactly is a p value?

- How do you calculate the p value?

- P values and statistical significance

- Reporting p values

- Caution when using p values

- Other interesting articles

- Frequently asked questions about p-values

All statistical tests have a null hypothesis. For most tests, the null hypothesis is that there is no relationship between your variables of interest or that there is no difference among groups.

For example, in a two-tailed t test, the null hypothesis is that the difference between two groups is zero.

- Null hypothesis (H0): there is no difference in longevity between the two groups.

- Alternative hypothesis (HA or H1): there is a difference in longevity between the two groups.

Here's why students love Scribbr's proofreading services

Discover proofreading & editing

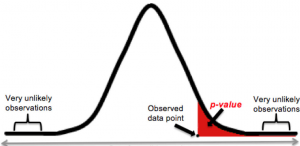

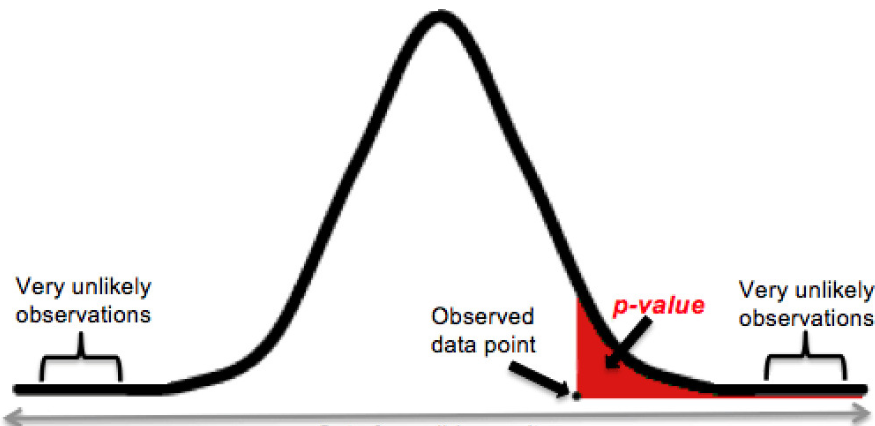

The p value, or probability value, tells you how likely it is that your data could have occurred under the null hypothesis. It does this by calculating the likelihood of your test statistic, which is the number calculated by a statistical test using your data.

The p value tells you how often you would expect to see a test statistic as extreme or more extreme than the one calculated by your statistical test if the null hypothesis of that test was true. The p value gets smaller as the test statistic calculated from your data gets further away from the range of test statistics predicted by the null hypothesis.

The p value is a proportion: if your p value is 0.05, that means that 5% of the time you would see a test statistic at least as extreme as the one you found if the null hypothesis was true.
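As a rough illustration of this "proportion" reading, the sketch below converts a test statistic into a two-sided p value. It assumes the test statistic follows a standard normal distribution under the null hypothesis, which holds for a z test but not for every test; the function name `two_sided_p_value` is made up for this example.

```python
from statistics import NormalDist

def two_sided_p_value(z: float) -> float:
    """Share of a standard normal null distribution at least as extreme as z."""
    # Add up the area in both tails beyond |z|.
    return 2 * NormalDist().cdf(-abs(z))

# The further the statistic is from zero, the smaller the p value.
for z in (0.5, 1.96, 3.0):
    print(f"z = {z:>4}: p = {two_sided_p_value(z):.4f}")
```

Under this assumption, a statistic of 1.96 corresponds to a p value of about 0.05.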

P values are usually automatically calculated by your statistical program (R, SPSS, etc.).

You can also find tables for estimating the p value of your test statistic online. These tables show, based on the test statistic and degrees of freedom (number of observations minus number of independent variables) of your test, how frequently you would expect to see that test statistic under the null hypothesis.

The calculation of the p value depends on the statistical test you are using to test your hypothesis:

- Different statistical tests have different assumptions and generate different test statistics. You should choose the statistical test that best fits your data and matches the effect or relationship you want to test.

- The number of independent variables you include in your test changes how large or small the test statistic needs to be to generate the same p value.

No matter what test you use, the p value always describes the same thing: how often you can expect to see a test statistic as extreme or more extreme than the one calculated from your test.

P values are most often used by researchers to say whether a certain pattern they have measured is statistically significant.

Statistical significance is another way of saying that the p value of a statistical test is small enough to reject the null hypothesis of the test.

How small is small enough? The most common threshold is p < 0.05; that is, when you would expect to find a test statistic at least as extreme as the one calculated by your test less than 5% of the time if the null hypothesis were true. But the threshold depends on your field of study – some fields prefer thresholds of 0.01, or even 0.001.

The threshold value for determining statistical significance is also known as the alpha value.

P values of statistical tests are usually reported in the results section of a research paper, along with the key information needed for readers to put the p values in context – for example, the correlation coefficient in a linear regression, or the average difference between treatment groups in a t test.

P values are often interpreted as your risk of rejecting the null hypothesis of your test when the null hypothesis is actually true.

In reality, the risk of wrongly rejecting the null hypothesis is often higher than the p value, especially when looking at a single study or when using small sample sizes. This is because the smaller your frame of reference, the greater the chance that you stumble across a statistically significant pattern completely by accident.

P values are also often interpreted as supporting or refuting the alternative hypothesis. This is not the case. The p value can only tell you whether or not the null hypothesis is supported. It cannot tell you whether your alternative hypothesis is true, or why.

A p-value, or probability value, is a number describing how likely it is that your data would have occurred under the null hypothesis of your statistical test.

P-values are usually automatically calculated by the program you use to perform your statistical test. They can also be estimated using p-value tables for the relevant test statistic.

P-values are calculated from the null distribution of the test statistic. They tell you how often a test statistic is expected to occur under the null hypothesis of the statistical test, based on where it falls in the null distribution.

If the test statistic is far from the mean of the null distribution, then the p-value will be small, showing that the test statistic is not likely to have occurred under the null hypothesis.

Statistical significance is a term used by researchers to state that it is unlikely their observations could have occurred under the null hypothesis of a statistical test. Significance is usually denoted by a p-value, or probability value.

Statistical significance is arbitrary – it depends on the threshold, or alpha value, chosen by the researcher. The most common threshold is p < 0.05, which means that the data is likely to occur less than 5% of the time under the null hypothesis.

When the p-value falls below the chosen alpha value, then we say the result of the test is statistically significant.

A p-value does not tell you whether your alternative hypothesis is true. It only tells you how likely the data you have observed is to have occurred under the null hypothesis.

If the p-value is below your threshold of significance (typically p < 0.05), then you can reject the null hypothesis, but this does not necessarily mean that your alternative hypothesis is true.

Bevans, R. (2023, June 22). Understanding P-values | Definition and Examples. Scribbr. Retrieved August 22, 2024, from https://www.scribbr.com/statistics/p-value/

P-Value in Statistical Hypothesis Tests: What is it?

P Value Definition

A p value is used in hypothesis testing to help you support or reject the null hypothesis. The p value is a measure of the evidence against a null hypothesis. The smaller the p-value, the stronger the evidence that you should reject the null hypothesis.

P values are expressed as decimals, although they may be easier to understand if you convert them to percentages. For example, a p value of 0.0254 is 2.54%. This means that, if the null hypothesis were true, you would see results at least as extreme as yours only 2.54% of the time. That’s pretty small. On the other hand, a large p-value of 0.9 (90%) means that results at least as extreme as yours would turn up 90% of the time under the null hypothesis, so they give no reason to doubt it. Therefore, the smaller the p-value, the more “significant” your results.

When you run a hypothesis test, you compare the p value from your test to the alpha level you selected when you ran the test. Alpha levels can also be written as percentages.

P Value vs Alpha level

Alpha levels are controlled by the researcher and are related to confidence levels. You get an alpha level by subtracting your confidence level from 100%. For example, if you want to be 98 percent confident in your research, the alpha level would be 2% (100% – 98%). When you run the hypothesis test, the test will give you a value for p. Compare that value to your chosen alpha level. For example, let’s say you chose an alpha level of 5% (0.05). If the results from the test give you:

- A small p (≤ 0.05), reject the null hypothesis. This is strong evidence against the null hypothesis.

- A large p (> 0.05), the evidence against the null hypothesis is weak, so you do not reject the null.
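The comparison above can be sketched in a few lines of Python. This is only an illustration of the rule as stated in this section; the function names `alpha_from_confidence` and `decide` are hypothetical.

```python
def alpha_from_confidence(confidence_pct: float) -> float:
    """Alpha level as a decimal: (100% - confidence level) / 100."""
    return (100.0 - confidence_pct) / 100.0

def decide(p_value: float, alpha: float) -> str:
    """Apply the rule above: a p value at or below alpha rejects the null."""
    if p_value <= alpha:
        return "reject the null hypothesis"
    return "do not reject the null hypothesis"

# The same p value can lead to different decisions at different alpha levels.
for confidence in (95, 98):
    alpha = alpha_from_confidence(confidence)
    print(f"confidence {confidence}% -> alpha {alpha:.2f}: {decide(0.0254, alpha)}")
```

The point of the loop is that the decision depends on the alpha level chosen before the test, not on the p value alone.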

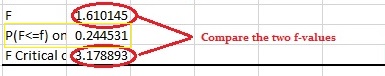

P Values and Critical Values

What if I Don’t Have an Alpha Level?

In an ideal world, you’ll have an alpha level. But if you do not, you can still use the following rough guidelines in deciding whether to support or reject the null hypothesis:

- If p > .10 → “not significant”

- If p ≤ .10 → “marginally significant”

- If p ≤ .05 → “significant”

- If p ≤ .01 → “highly significant.”
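Because the ranges in these guidelines overlap (a p value of .01 is also ≤ .05 and ≤ .10), a reasonable reading is that the most specific label applies. The sketch below encodes that reading; the function name is hypothetical and the labels are the rough ones listed above, not a formal standard.

```python
def rough_significance_label(p_value: float) -> str:
    """Map a p value to the rough labels above; the most specific label wins."""
    if not 0.0 <= p_value <= 1.0:
        raise ValueError("a p value must lie between 0 and 1")
    if p_value <= 0.01:
        return "highly significant"
    if p_value <= 0.05:
        return "significant"
    if p_value <= 0.10:
        return "marginally significant"
    return "not significant"

for p in (0.2, 0.08, 0.03, 0.004):
    print(f"p = {p}: {rough_significance_label(p)}")
```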

How to Calculate a P Value on the TI 83

Example question: The average wait time to see an E.R. doctor is said to be 150 minutes. You think the wait time is actually less. You take a random sample of 30 people and find their average wait is 148 minutes with a standard deviation of 5 minutes. Assume the distribution is normal. Find the p value for this test.

- Press STAT then arrow over to TESTS.

- Press ENTER for Z-Test.

- Arrow over to Stats. Press ENTER.

- Arrow down to μ0 and type 150. This is our null hypothesis mean.

- Arrow down to σ. Type in your std dev: 5.

- Arrow down to xbar. Type in your sample mean: 148.

- Arrow down to n. Type in your sample size: 30.

- Arrow to <μ0 for a left tail test. Press ENTER.

- Arrow down to Calculate. Press ENTER. P is given as .014, or about 1.4%.

If the true average wait were 150 minutes, the probability of getting a sample mean of 148 minutes or less would be small (about 1.4%), so you should reject the null hypothesis.

Note: If you don’t want to run a test, you could also use the TI 83 NormCDF function to get the area (which is the same thing as the probability value).
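For readers without a TI 83, the same left-tailed Z-test can be reproduced with Python's standard library. This is a sketch that mirrors the calculator inputs above, including treating the standard deviation of 5 as σ; the variable names are illustrative.

```python
from math import sqrt
from statistics import NormalDist

mu0 = 150    # null hypothesis mean (minutes)
sigma = 5    # standard deviation entered as sigma on the calculator
xbar = 148   # sample mean
n = 30       # sample size

# z statistic: how many standard errors the sample mean is from mu0.
z = (xbar - mu0) / (sigma / sqrt(n))

# Left-tailed p value: area under the standard normal curve below z.
p_left = NormalDist().cdf(z)

print(f"z = {z:.2f}, p = {p_left:.3f}")
```

The call to `NormalDist().cdf(z)` plays the role of the NormCDF area mentioned in the note.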

NCBI Bookshelf. A service of the National Library of Medicine, National Institutes of Health.

StatPearls [Internet]. Treasure Island (FL): StatPearls Publishing; 2024 Jan-.

Hypothesis Testing, P Values, Confidence Intervals, and Significance

Jacob Shreffler; Martin R. Huecker.

Last Update: March 13, 2023.

- Definition/Introduction

Medical providers often rely on evidence-based medicine to guide decision-making in practice. Often a research hypothesis is tested with results provided, typically with p values, confidence intervals, or both. Additionally, statistical or research significance is estimated or determined by the investigators. Unfortunately, healthcare providers may have different comfort levels in interpreting these findings, which may affect the adequate application of the data.

- Issues of Concern

Without a foundational understanding of hypothesis testing, p values, confidence intervals, and the difference between statistical and clinical significance, healthcare providers may be unable to make clinical decisions without relying purely on the level of significance deemed by the research investigators. Therefore, an overview of these concepts is provided to allow medical professionals to use their expertise to determine if results are reported sufficiently and if the study outcomes are clinically appropriate to be applied in healthcare practice.

Hypothesis Testing

Investigators conducting studies need research questions and hypotheses to guide analyses. Starting with broad research questions (RQs), investigators then identify a gap in current clinical practice or research. Any research problem or statement is grounded in a better understanding of relationships between two or more variables. For this article, we will use the following research question example:

Research Question: Is Drug 23 an effective treatment for Disease A?

Research questions do not directly imply specific guesses or predictions; we must formulate research hypotheses. A hypothesis is a predetermined declaration regarding the research question in which the investigator(s) makes a precise, educated guess about a study outcome. This is sometimes called the alternative hypothesis and ultimately allows the researcher to take a stance based on experience or insight from medical literature. An example of a hypothesis is below.

Research Hypothesis: Drug 23 will significantly reduce symptoms associated with Disease A compared to Drug 22.

The null hypothesis states that there is no statistical difference between groups based on the stated research hypothesis. For the example above, the null hypothesis would be that there are no significant differences in the reduction of symptoms for Disease A between Drug 23 and Drug 22. The null hypothesis is deemed true until a study presents significant data to support rejecting the null hypothesis. Based on the results, the investigators will either reject the null hypothesis (if they found significant differences or associations) or fail to reject the null hypothesis (they could not provide proof that there were significant differences or associations).

Researchers should be aware of journal recommendations when considering how to report p values, and manuscripts should remain internally consistent. Regarding p values, as the number of individuals enrolled in a study (the sample size) increases, the likelihood of finding a statistically significant effect increases. With very large sample sizes, the p-value can be very low.

To test a hypothesis, researchers obtain data on a representative sample to determine whether to reject or fail to reject a null hypothesis. In most research studies, it is not feasible to obtain data for an entire population. Using a sampling procedure allows for statistical inference, though this involves a certain possibility of error. [1] When determining whether to reject or fail to reject the null hypothesis, mistakes can be made: Type I and Type II errors. Though it is impossible to ensure that these errors have not occurred, researchers should limit the possibilities of these faults. [2]

Significance

Significance is a term to describe the substantive importance of medical research. Statistical significance concerns the likelihood that results are due to chance. [3] Healthcare providers should always distinguish statistical significance from clinical significance; conflating the two is a common error when reviewing biomedical research. [4] When conceptualizing findings reported as either significant or not significant, healthcare providers should not simply accept researchers' results or conclusions without considering the clinical significance. Healthcare professionals should consider the clinical importance of findings and understand both p values and confidence intervals so they do not have to rely on the researchers to determine the level of significance. [5] One criterion often used to determine statistical significance is the utilization of p values.

P values are used in research to determine whether the sample estimate is significantly different from a hypothesized value. The p-value is the probability that the observed effect within the study would have occurred by chance if, in reality, there was no true effect. Conventionally, data yielding a p<0.05 or p<0.01 is considered statistically significant. While some have argued that the 0.05 level should be lowered, it remains the prevailing convention. [6] Hypothesis testing allows us to assess whether an effect is present; on its own, it does not establish the size of the effect.

Examples of findings reported with p values are below:

Statement: Drug 23 reduced patients' symptoms compared to Drug 22. Patients who received Drug 23 (n = 100) were 2.1 times less likely than patients who received Drug 22 (n = 100) to experience symptoms of Disease A, p<0.05.

Statement: Individuals who were prescribed Drug 23 experienced fewer symptoms (M = 1.3, SD = 0.7) compared to individuals who were prescribed Drug 22 (M = 5.3, SD = 1.9). This finding was statistically significant, p = 0.02.

For either statement, if the threshold had been set at 0.05, the null hypothesis (that there was no relationship) should be rejected, and we should conclude significant differences. Noticeably, as can be seen in the two statements above, some researchers will report findings with < or > and others will provide an exact p-value (e.g., 0.000001) but never zero. [6] When examining research, readers should understand how p values are reported. The best practice is to report all p values for all variables within a study design, rather than only providing p values for variables with significant findings. [7] The inclusion of all p values provides evidence for study validity and limits suspicion for selective reporting/data mining.

While researchers have historically used p values, experts who find p values problematic encourage the use of confidence intervals. [8] P-values alone do not allow us to understand the size or the extent of the differences or associations. [3] In March 2016, the American Statistical Association (ASA) released a statement on p values, noting that scientific decision-making and conclusions should not be based on a fixed p-value threshold (e.g., 0.05). They recommend focusing on the significance of results in the context of study design, quality of measurements, and validity of data. Ultimately, the ASA statement noted that in isolation, a p-value does not provide strong evidence. [9]

When conceptualizing clinical work, healthcare professionals should consider p values with a concurrent appraisal of study design validity. For example, a p-value from a double-blinded randomized clinical trial (designed to minimize bias) should be weighted higher than one from a retrospective observational study. [7] The p-value debate has smoldered since the 1950s, [10] and replacement with confidence intervals has been suggested since the 1980s. [11]

Confidence Intervals

A confidence interval provides a range of values that, with a given level of confidence (e.g., 95%), includes the true value of the statistical parameter within a targeted population. [12] Most research uses a 95% CI, but investigators can set any level (e.g., 90% CI, 99% CI). [13] A CI provides a range with the lower bound and upper bound limits of a difference or association that would be plausible for a population. [14] Therefore, a CI of 95% indicates that if a study were to be carried out 100 times, the range would contain the true value in about 95 of them. [15] Confidence intervals provide more evidence regarding the precision of an estimate compared to p-values. [6]

In consideration of the similar research example provided above, one could make the following statement with 95% CI:

Statement: Individuals who were prescribed Drug 23 had no symptoms after three days, which was significantly faster than those prescribed Drug 22; the mean difference between the two groups in days to recovery was 4.2 days (95% CI: 1.9 – 7.8).

It is important to note that the width of the CI is affected by the standard error and the sample size; reducing a study sample number will result in less precision of the CI (increase the width). [14] A larger width indicates a smaller sample size or a larger variability. [16] A researcher would want to increase the precision of the CI. For example, a 95% CI of 1.43 – 1.47 is much more precise than the one provided in the example above. In research and clinical practice, CIs provide valuable information on whether the interval includes or excludes any clinically significant values. [14]
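The link between sample size and CI width can be illustrated with a short sketch. It uses a normal-approximation interval for a mean (mean ± z × SD / √n), which is an assumption of this example and not a method stated in the article; the mean, standard deviation, and sample sizes are made-up numbers.

```python
from math import sqrt
from statistics import NormalDist

def mean_confidence_interval(mean, sd, n, level=0.95):
    """Normal-approximation CI for a mean: mean +/- z * sd / sqrt(n)."""
    z = NormalDist().inv_cdf(0.5 + level / 2)  # about 1.96 for a 95% CI
    half_width = z * sd / sqrt(n)              # z times the standard error
    return mean - half_width, mean + half_width

# Hypothetical numbers: same mean and variability, three sample sizes.
for n in (25, 100, 400):
    lower, upper = mean_confidence_interval(mean=4.2, sd=10.0, n=n)
    print(f"n = {n:>3}: 95% CI = ({lower:.2f}, {upper:.2f}), width = {upper - lower:.2f}")
```

Under this approximation, quadrupling the sample size halves the width of the interval, because the standard error shrinks with the square root of n.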

Null values are sometimes used for differences with CI (zero for differential comparisons and 1 for ratios). However, CIs provide more information than that. [15] Consider this example: A hospital implements a new protocol that reduced wait time for patients in the emergency department by an average of 25 minutes (95% CI: -2.5 – 41 minutes). Because the range crosses zero, implementing this protocol in different populations could result in longer wait times; however, the range is much higher on the positive side. Thus, while the p-value used to detect statistical significance for this may result in "not significant" findings, individuals should examine this range, consider the study design, and weigh whether or not it is still worth piloting in their workplace.

Similarly to p-values, 95% CIs cannot control for researchers' errors (e.g., study bias or improper data analysis). [14] In consideration of whether to report p-values or CIs, researchers should examine journal preferences. When in doubt, reporting both may be beneficial. [13] An example is below:

Reporting both: Individuals who were prescribed Drug 23 had no symptoms after three days, which was significantly faster than those prescribed Drug 22, p = 0.009. The mean difference between the two groups in days to recovery was 4.2 days (95% CI: 1.9 – 7.8).

- Clinical Significance

Recall that clinical significance and statistical significance are two different concepts. Healthcare providers should remember that a study with statistically significant differences and large sample size may be of no interest to clinicians, whereas a study with smaller sample size and statistically non-significant results could impact clinical practice. [14] Additionally, as previously mentioned, a non-significant finding may reflect the study design itself rather than relationships between variables.

Healthcare providers using evidence-based medicine to inform practice should use clinical judgment to determine the practical importance of studies through careful evaluation of the design, sample size, power, likelihood of type I and type II errors, data analysis, and reporting of statistical findings (p values, 95% CI or both). [4] Interestingly, some experts have called for "statistically significant" or "not significant" to be excluded from work, as statistical significance never has been and never will be equivalent to clinical significance. [17]

The decision on what is clinically significant can be challenging, depending on the providers' experience and especially the severity of the disease. Providers should use their knowledge and experiences to determine the meaningfulness of study results and make inferences based not only on significant or insignificant results by researchers but through their understanding of study limitations and practical implications.

- Nursing, Allied Health, and Interprofessional Team Interventions

All physicians, nurses, pharmacists, and other healthcare professionals should strive to understand the concepts in this chapter. These individuals should maintain the ability to review and incorporate new literature for evidence-based and safe care.

Disclosure: Jacob Shreffler declares no relevant financial relationships with ineligible companies.

Disclosure: Martin Huecker declares no relevant financial relationships with ineligible companies.

This book is distributed under the terms of the Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International (CC BY-NC-ND 4.0) ( http://creativecommons.org/licenses/by-nc-nd/4.0/ ), which permits others to distribute the work, provided that the article is not altered or used commercially. You are not required to obtain permission to distribute this article, provided that you credit the author and journal.

- Cite this Page Shreffler J, Huecker MR. Hypothesis Testing, P Values, Confidence Intervals, and Significance. [Updated 2023 Mar 13]. In: StatPearls [Internet]. Treasure Island (FL): StatPearls Publishing; 2024 Jan-.

9.3 - The P-Value Approach

Example 9-4

Up until now, we have used the critical region approach in conducting our hypothesis tests. Now, let's take a look at an example in which we use what is called the P-value approach.

Among patients with lung cancer, usually, 90% or more die within three years. As a result of new forms of treatment, it is felt that this rate has been reduced. In a recent study of n = 150 lung cancer patients, y = 128 died within three years. Is there sufficient evidence at the \(\alpha = 0.05\) level, say, to conclude that the death rate due to lung cancer has been reduced?

The sample proportion is:

\(\hat{p}=\dfrac{128}{150}=0.853\)

The null and alternative hypotheses are:

\(H_0 \colon p = 0.90\) and \(H_A \colon p < 0.90\)

The test statistic is, therefore:

\(Z=\dfrac{\hat{p}-p_0}{\sqrt{\dfrac{p_0(1-p_0)}{n}}}=\dfrac{0.853-0.90}{\sqrt{\dfrac{0.90(0.10)}{150}}}=-1.92\)

And, the rejection region is \(Z \le -1.645\).

Since the test statistic Z = −1.92 < −1.645, we reject the null hypothesis. There is sufficient evidence at the \(\alpha = 0.05\) level to conclude that the rate has been reduced.

Example 9-4 (continued)

What if we set the significance level \(\alpha\) = P(Type I Error) to 0.01? Is there still sufficient evidence to conclude that the death rate due to lung cancer has been reduced?

In this case, with \(\alpha = 0.01\), the rejection region is Z ≤ −2.33. That is, we reject if the test statistic falls in the rejection region defined by Z ≤ −2.33.

Because the test statistic Z = −1.92 > −2.33, we do not reject the null hypothesis. There is insufficient evidence at the \(\alpha = 0.01\) level to conclude that the rate has been reduced.
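The two critical-region decisions above can be checked with a short sketch. It uses the sample proportion rounded to 0.853, as in the text, so that the statistic matches −1.92; the helper name `rejects_left_tailed` is hypothetical.

```python
from math import sqrt
from statistics import NormalDist

n, p0 = 150, 0.90
p_hat = 0.853  # 128 / 150, rounded to three decimals as in the text

# Test statistic using the null standard error sqrt(p0 * (1 - p0) / n).
z = (p_hat - p0) / sqrt(p0 * (1 - p0) / n)

def rejects_left_tailed(z_stat: float, alpha: float) -> bool:
    """Reject when the statistic is at or below the lower-tail critical value."""
    critical_value = NormalDist().inv_cdf(alpha)  # about -1.645 at alpha = 0.05
    return z_stat <= critical_value

print(f"Z = {z:.2f}")
for alpha in (0.05, 0.01):
    critical = NormalDist().inv_cdf(alpha)
    decision = "reject" if rejects_left_tailed(z, alpha) else "do not reject"
    print(f"alpha = {alpha}: critical value = {critical:.3f} -> {decision} H0")
```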

In the first part of this example, we rejected the null hypothesis when \(\alpha = 0.05\). And, in the second part of this example, we failed to reject the null hypothesis when \(\alpha = 0.01\). There must be some level of \(\alpha\), then, in which we cross the threshold from rejecting to not rejecting the null hypothesis. What is the smallest \(\alpha \text{ -level}\) that would still cause us to reject the null hypothesis?

We would, of course, reject any time the critical value was no more extreme than our test statistic −1.92, that is, any time the critical value was greater than or equal to −1.92.

That is, we would reject if the critical value were −1.645, −1.83, or −1.92. But, we wouldn't reject if the critical value were −1.93. The \(\alpha \text{ -level}\) associated with the test statistic −1.92 is called the P-value. It is the smallest \(\alpha \text{ -level}\) that would lead to rejection. In this case, the P-value is:

\(P(Z < -1.92) = 0.0274\)
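The idea that the P-value is the smallest α-level leading to rejection can be checked numerically: if α is set equal to the P-value, the critical value lands exactly on the test statistic. The sketch below assumes the standard normal null distribution used in this example; variable names are illustrative.

```python
from statistics import NormalDist

std_normal = NormalDist()
z_observed = -1.92

# Left-tailed P-value: area below the observed statistic.
p_value = std_normal.cdf(z_observed)

# Critical value of a left-tailed test run at alpha = P-value.
critical_at_p = std_normal.inv_cdf(p_value)

print(f"P-value = {p_value:.4f}")
print(f"critical value when alpha equals the P-value = {critical_at_p:.2f}")
```

Any α smaller than this P-value pushes the critical value below −1.92, so the test would no longer reject.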

So far, all of the examples we've considered have involved a one-tailed hypothesis test in which the alternative hypothesis involved either a less than (<) or a greater than (>) sign. What happens if we weren't sure of the direction in which the proportion could deviate from the hypothesized null value? That is, what if the alternative hypothesis involved a not-equal sign (≠)? Let's take a look at an example.

What if we wanted to perform a "two-tailed" test? That is, what if we wanted to test:

\(H_0 \colon p = 0.90\) versus \(H_A \colon p \ne 0.90\)

at the \(\alpha = 0.05\) level?

Let's first consider the critical value approach. If we allow for the possibility that the sample proportion could either prove to be too large or too small, then we need to specify a threshold value, that is, a critical value, in each tail of the distribution. In this case, we divide the "significance level" \(\alpha\) by 2 to get \(\alpha/2 = 0.025\) in each tail.

That is, our rejection rule is that we should reject the null hypothesis \(H_0 \text{ if } Z ≥ 1.96\) or we should reject the null hypothesis \(H_0 \text{ if } Z ≤ −1.96\). Alternatively, we can write that we should reject the null hypothesis \(H_0 \text{ if } |Z| ≥ 1.96\). Because our test statistic is −1.92, we just barely fail to reject the null hypothesis, because 1.92 < 1.96. In this case, we would say that there is insufficient evidence at the \(\alpha = 0.05\) level to conclude that the sample proportion differs significantly from 0.90.

Now for the P -value approach . Again, needing to allow for the possibility that the sample proportion is either too large or too small, we multiply the P -value we obtain for the one-tailed test by 2:

That is, the P -value is:

\(P=P(|Z|\geq 1.92)=P(Z>1.92 \text{ or } Z<-1.92)=2 \times 0.0274=0.055\)

Because the P -value 0.055 is (just barely) greater than the significance level \(\alpha = 0.05\), we barely fail to reject the null hypothesis. Again, we would say that there is insufficient evidence at the \(\alpha = 0.05\) level to conclude that the sample proportion differs significantly from 0.90.
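Both approaches to the two-tailed test can be sketched in a few lines. This is a minimal illustration using `statistics.NormalDist` from the Python standard library; the variable names are our own.

```python
from statistics import NormalDist

z = -1.92      # observed test statistic from the example
alpha = 0.05   # significance level

std_normal = NormalDist()  # mean 0, standard deviation 1

# Critical value approach: reject when |Z| >= z_{alpha/2}
critical = std_normal.inv_cdf(1 - alpha / 2)
reject_by_critical_value = abs(z) >= critical

# P-value approach: double the one-tailed probability
p_two_tailed = 2 * std_normal.cdf(-abs(z))
reject_by_p_value = p_two_tailed <= alpha

print(round(critical, 2), round(p_two_tailed, 3))   # 1.96 0.055
print(reject_by_critical_value, reject_by_p_value)  # False False
```

The two approaches always agree, because comparing |Z| with the critical value and comparing the P-value with \(\alpha\) are the same comparison expressed on different scales.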

Let's close this example by formalizing the definition of a P -value, as well as summarizing the P -value approach to conducting a hypothesis test.

The P -value is the smallest significance level \(\alpha\) that leads us to reject the null hypothesis.

Alternatively (and the way I prefer to think of P-values), the P-value is the probability that we'd observe a statistic at least as extreme as the one we did if the null hypothesis were true.

If the P -value is small, that is, if \(P ≤ \alpha\), then we reject the null hypothesis \(H_0\).

Note!

By the way, to test \(H_0 \colon p = p_0\), some statisticians will use the test statistic:

\(Z=\dfrac{\hat{p}-p_0}{\sqrt{\dfrac{\hat{p}(1-\hat{p})}{n}}}\)

rather than the one we've been using:

\(Z=\dfrac{\hat{p}-p_0}{\sqrt{\dfrac{p_0(1-p_0)}{n}}}\)

One advantage of doing so is that the interpretation of the confidence interval — does it contain \(p_0\)? — is always consistent with the hypothesis test decision, as illustrated here:

For the sake of ease, let:

\(se(\hat{p})=\sqrt{\dfrac{\hat{p}(1-\hat{p})}{n}}\)

Two-tailed test. In this case, the critical region approach tells us to reject the null hypothesis \(H_0 \colon p = p_0\) against the alternative hypothesis \(H_A \colon p \ne p_0\):

if \(Z=\dfrac{\hat{p}-p_0}{se(\hat{p})} \geq z_{\alpha/2}\) or if \(Z=\dfrac{\hat{p}-p_0}{se(\hat{p})} \leq -z_{\alpha/2}\)

which is equivalent to rejecting the null hypothesis:

if \(\hat{p}-p_0 \geq z_{\alpha/2}se(\hat{p})\) or if \(\hat{p}-p_0 \leq -z_{\alpha/2}se(\hat{p})\)

or, solving for \(p_0\), if \(p_0 \leq \hat{p}-z_{\alpha/2}se(\hat{p})\) or if \(p_0 \geq \hat{p}+z_{\alpha/2}se(\hat{p})\)

That's the same as saying that we should reject the null hypothesis \(H_0 \text{ if } p_0\) is not in the \(\left(1-\alpha\right)100\%\) confidence interval!

Left-tailed test. In this case, the critical region approach tells us to reject the null hypothesis \(H_0 \colon p = p_0\) against the alternative hypothesis \(H_A \colon p < p_0\):

if \(Z=\dfrac{\hat{p}-p_0}{se(\hat{p})} \leq -z_{\alpha}\)

which is equivalent to rejecting the null hypothesis:

if \(\hat{p}-p_0 \leq -z_{\alpha}se(\hat{p})\)

or, solving for \(p_0\), if \(p_0 \geq \hat{p}+z_{\alpha}se(\hat{p})\)

That's the same as saying that we should reject the null hypothesis \(H_0 \text{ if } p_0\) is not in the upper \(\left(1-\alpha\right)100\%\) confidence interval:

\((0,\hat{p}+z_{\alpha}se(\hat{p}))\)
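The agreement between the two-tailed test and the confidence interval can be checked numerically. The sketch below is only an illustration: the function name `two_tailed_check` and the sample values (a sample proportion of 0.85 from 200 observations) are hypothetical and do not come from the example above.

```python
import math
from statistics import NormalDist

def two_tailed_check(p_hat: float, n: int, p0: float, alpha: float = 0.05):
    """Return (reject, p0_inside_ci) using se(p_hat) in the test statistic."""
    se = math.sqrt(p_hat * (1 - p_hat) / n)
    z_crit = NormalDist().inv_cdf(1 - alpha / 2)
    z = (p_hat - p0) / se
    reject = abs(z) >= z_crit
    lower, upper = p_hat - z_crit * se, p_hat + z_crit * se
    inside = lower < p0 < upper
    return reject, inside

# Hypothetical sample: p_hat = 0.85 from n = 200 observations
for p0 in (0.70, 0.80, 0.85, 0.90, 0.95):
    reject, inside = two_tailed_check(0.85, 200, p0)
    print(p0, reject, inside)
```

For every hypothesized value tried, the test rejects exactly when the value falls outside the interval, which is the consistency described above.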

What is The Null Hypothesis & When Do You Reject The Null Hypothesis

Julia Simkus

Editor at Simply Psychology

BA (Hons) Psychology, Princeton University

Julia Simkus is a graduate of Princeton University with a Bachelor of Arts in Psychology. She is currently studying for a Master's Degree in Counseling for Mental Health and Wellness in September 2023. Julia's research has been published in peer reviewed journals.


Saul McLeod, PhD

Editor-in-Chief for Simply Psychology

BSc (Hons) Psychology, MRes, PhD, University of Manchester

Saul McLeod, PhD., is a qualified psychology teacher with over 18 years of experience in further and higher education. He has been published in peer-reviewed journals, including the Journal of Clinical Psychology.

Olivia Guy-Evans, MSc

Associate Editor for Simply Psychology

BSc (Hons) Psychology, MSc Psychology of Education

Olivia Guy-Evans is a writer and associate editor for Simply Psychology. She has previously worked in healthcare and educational sectors.


A null hypothesis is a statistical concept suggesting no significant difference or relationship between measured variables. It’s the default assumption unless empirical evidence proves otherwise.

The null hypothesis states no relationship exists between the two variables being studied (i.e., one variable does not affect the other).

The null hypothesis is the statement that a researcher or an investigator wants to disprove.

Testing the null hypothesis can tell you whether your results are due to the effects of manipulating the independent variable or due to random chance.

How to Write a Null Hypothesis

Null hypotheses (H0) start as research questions that the investigator rephrases as statements indicating no effect or relationship between the independent and dependent variables.

It is a default position that your research aims to challenge or confirm.

For example, if studying the impact of exercise on weight loss, your null hypothesis might be:

There is no significant difference in weight loss between individuals who exercise daily and those who do not.

Examples of Null Hypotheses

| Research Question | Null Hypothesis |
|---|---|
| Do teenagers use cell phones more than adults? | Teenagers and adults use cell phones the same amount. |
| Do tomato plants exhibit a higher rate of growth when planted in compost rather than in soil? | Tomato plants show no difference in growth rates when planted in compost rather than soil. |
| Does daily meditation decrease the incidence of depression? | Daily meditation does not decrease the incidence of depression. |
| Does daily exercise increase test performance? | There is no relationship between daily exercise time and test performance. |
| Does the new vaccine prevent infections? | The vaccine does not affect the infection rate. |
| Does flossing your teeth affect the number of cavities? | Flossing your teeth has no effect on the number of cavities. |

When Do We Reject The Null Hypothesis?

We reject the null hypothesis when the data provide strong enough evidence to conclude that it is likely incorrect. This often occurs when the p-value (the probability of observing data at least as extreme as ours, given that the null hypothesis is true) is below a predetermined significance level.

If the collected data does not meet the expectation of the null hypothesis, a researcher can conclude that the data lacks sufficient evidence to back up the null hypothesis, and thus the null hypothesis is rejected.

Rejecting the null hypothesis means concluding that a relationship does exist between a set of variables and that the effect is statistically significant (p < 0.05).

If the data collected from the random sample are not statistically significant, then we fail to reject the null hypothesis: the researchers cannot conclude that a relationship exists between the variables, although this does not prove that there is none.

You need to perform a statistical test on your data in order to evaluate how consistent it is with the null hypothesis. A p-value is one statistical measurement used to validate a hypothesis against observed data.

Calculating the p-value is a critical part of null-hypothesis significance testing because it quantifies how strongly the sample data contradicts the null hypothesis.

The level of statistical significance is often expressed as a p -value between 0 and 1. The smaller the p-value, the stronger the evidence that you should reject the null hypothesis.

Usually, a researcher uses a significance level of 0.05 or 0.01 (corresponding to a confidence level of 95% or 99%) as a general guideline to decide whether to reject or retain the null.

When your p-value is less than or equal to your significance level, you reject the null hypothesis.

In other words, smaller p-values are taken as stronger evidence against the null hypothesis. Conversely, when the p-value is greater than your significance level, you fail to reject the null hypothesis.

In this case, the sample data provides insufficient evidence to conclude that the effect exists in the population.

Because you can never know with complete certainty whether there is an effect in the population, your inferences about a population will sometimes be incorrect.

When you incorrectly reject the null hypothesis, it’s called a type I error. When you incorrectly fail to reject it, it’s called a type II error.
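The meaning of a type I error rate can be illustrated with a small simulation. The sketch below is built on assumptions of our own: it uses a two-tailed z-test on normally distributed samples with a known standard deviation of 1, and the function name, sample size, and number of trials are all illustrative. Because the null hypothesis is true in every simulated study, each rejection is a type I error.

```python
import math
import random
from statistics import NormalDist

def simulate_type_i_rate(alpha=0.05, n=30, trials=4000, seed=0):
    """Fraction of trials in which a true null (mean 0) is rejected."""
    rng = random.Random(seed)
    std_normal = NormalDist()
    rejections = 0
    for _ in range(trials):
        # Draw a sample from N(0, 1), so the null hypothesis "mean = 0" is true
        sample_mean = sum(rng.gauss(0, 1) for _ in range(n)) / n
        z = sample_mean * math.sqrt(n)          # known sigma = 1
        p_value = 2 * std_normal.cdf(-abs(z))   # two-tailed p-value
        if p_value <= alpha:
            rejections += 1                     # a type I error
    return rejections / trials

print(simulate_type_i_rate())
```

The printed fraction should land close to the chosen significance level of 0.05, which is the long-run rate of incorrect rejections that the significance level is meant to control.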

Why Do We Never Accept The Null Hypothesis?

The reason we do not say “accept the null” is because we are always assuming the null hypothesis is true and then conducting a study to see if there is evidence against it. And, even if we don’t find evidence against it, a null hypothesis is not accepted.

A lack of evidence only means that you haven’t proven that something exists. It does not prove that something doesn’t exist.

It is risky to conclude that the null hypothesis is true merely because we did not find evidence to reject it. It is always possible that researchers elsewhere have disproved the null hypothesis, so we cannot accept it as true, but instead, we state that we failed to reject the null.

One can either reject the null hypothesis, or fail to reject it, but can never accept it.

Why Do We Use The Null Hypothesis?

We can never prove with 100% certainty that a hypothesis is true; we can only collect evidence that supports a theory. However, testing a hypothesis can set the stage for rejecting or failing to reject this hypothesis at a chosen significance level.

The null hypothesis is useful because it can tell us whether the results of our study are due to random chance or the manipulation of a variable (with a certain level of confidence).

A null hypothesis is rejected if the measured data would be very unlikely to occur were the null hypothesis true, and it is not rejected if the observed outcome is consistent with the position held by the null hypothesis.

Rejecting the null hypothesis sets the stage for further experimentation to see if a relationship between two variables exists.

Hypothesis testing is a critical part of the scientific method as it helps decide whether the results of a research study support a particular theory about a given population. Hypothesis testing is a systematic way of backing up researchers’ predictions with statistical analysis.

It helps provide sufficient statistical evidence that either favors or rejects a certain hypothesis about the population parameter.

Purpose of a Null Hypothesis

- The primary purpose of the null hypothesis is to disprove an assumption.

- Whether rejected or accepted, the null hypothesis can help further progress a theory in many scientific cases.

- A null hypothesis can be used to ascertain how consistent the outcomes of multiple studies are.

Do you always need both a Null Hypothesis and an Alternative Hypothesis?

The null (H0) and alternative (Ha or H1) hypotheses are two competing claims that describe the effect of the independent variable on the dependent variable. They are mutually exclusive, which means that only one of the two hypotheses can be true.

While the null hypothesis states that there is no effect in the population, an alternative hypothesis states that there is an effect or relationship between two variables.

The goal of hypothesis testing is to make inferences about a population based on a sample. In order to undertake hypothesis testing, you must express your research hypothesis as a null and alternative hypothesis. Both hypotheses are required to cover every possible outcome of the study.

What is the difference between a null hypothesis and an alternative hypothesis?

The alternative hypothesis is the complement to the null hypothesis. The null hypothesis states that there is no effect or no relationship between variables, while the alternative hypothesis claims that there is an effect or relationship in the population.

It is the claim that you expect or hope will be true. The null hypothesis and the alternative hypothesis are always mutually exclusive, meaning that only one can be true at a time.

What are some problems with the null hypothesis?

One major problem with the null hypothesis is that researchers typically will assume that accepting the null is a failure of the experiment. However, accepting or rejecting any hypothesis is a positive result. Even if the null is not refuted, the researchers will still learn something new.

Why can a null hypothesis not be accepted?

We can either reject or fail to reject a null hypothesis, but never accept it. If your test fails to detect an effect, this is not proof that the effect doesn’t exist. It just means that your sample did not have enough evidence to conclude that it exists.

We can’t accept a null hypothesis because a lack of evidence does not prove that something does not exist. Instead, we fail to reject it.

Failing to reject the null indicates that the sample did not provide sufficient evidence to conclude that an effect exists.

If the p-value is greater than the significance level, then you fail to reject the null hypothesis.

Is a null hypothesis directional or non-directional?

A hypothesis test can contain either a directional alternative hypothesis or a non-directional alternative hypothesis. A directional hypothesis is one that contains the less than (“<”) or greater than (“>”) sign.

A nondirectional hypothesis contains the not equal sign (“≠”). However, a null hypothesis is neither directional nor non-directional.

A null hypothesis is a prediction that there will be no change, relationship, or difference between two variables.

The directional hypothesis or nondirectional hypothesis would then be considered alternative hypotheses to the null hypothesis.


The p value – definition and interpretation of p-values in statistics

This article examines the most common statistic reported in scientific papers and used in applied statistical analyses – the p -value . The article goes through the definition illustrated with examples, discusses its utility, interpretation, and common misinterpretations of observed statistical significance and significance levels. It is structured as follows:

- What does ‘p’ in ‘p-value’ stand for?

- What does p measure and how to interpret it

- A p-value only makes sense under a specified null hypothesis

- How to calculate a p-value?

- A practical example

- p-values as convenient summary statistics

- Quantifying the relative uncertainty of data

- Easy comparison of different statistical tests

- p-value interpretation in outcomes of experiments (randomized controlled trials)

- p-value interpretation in regressions and correlations of observational data

- Mistaking statistical significance with practical significance

- Treating the significance level as likelihood for the observed effect

- Treating p-values as likelihoods attached to hypotheses

- A high p-value means the null hypothesis is true

- Lack of statistical significance suggests a small effect size

p-value definition and meaning

The technical definition of the p -value is (based on [4,5,6]):

A p -value is the probability of the data-generating mechanism corresponding to a specified null hypothesis to produce an outcome as extreme or more extreme than the one observed.

However, it is only straightforward to understand for those already familiar in detail with terms such as ‘probability’, ‘null hypothesis’, ‘data generating mechanism’, ‘extreme outcome’. These, in turn, require knowledge of what a ‘hypothesis’, a ‘statistical model’ and ‘statistic’ mean, and so on. While some of these will be explained on a cursory level in the following paragraphs, those looking for deeper understanding should consider consulting the following glossary definitions: statistical model , hypothesis , null hypothesis , statistic .

A slightly less technical and therefore more accessible definition is:

A p -value quantifies how likely it is to erroneously reject a specific statistical hypothesis, were it true, based on a given set of data.

Let us break these down and examine several examples to make both of these definitions make sense.

p stands for probability, where probability means the frequency with which an event occurs under certain assumptions. The most common example is the frequency with which a coin lands heads under the assumption that it is equally balanced (a fair coin toss). That frequency is 0.5 (50%).

Capital ‘P’ stands for probability in general, whereas lowercase ‘p’ refers to the probability of a particular data realization. To expand on the coin toss example: P would stand for the probability of heads in general, whereas p could refer to the probability of landing a series of five heads in a row, or the probability of landing less than or equal to 38 heads out of 100 coin flips.

Given that it was established that p stands for probability, it is easy to figure out it measures a sort of probability.

In everyday language the term ‘probability’ might be used as synonymous to ‘chance’, ‘likelihood’, ‘odds’, e.g. there is 90% probability that it will rain tomorrow. However, in statistics one cannot speak of ‘probability’ without specifying a mechanism which generates the observed data. A simple example of such a mechanism is a device which produces fair coin tosses. A statistical model based on this data-generating mechanism can be put forth and under that model the probability of 38 or fewer heads out of 100 tosses can be estimated to be 1.05%, for example by using a binomial calculator. The p-value against the model of a fair coin would be ~0.01 (rounding it to 0.01 from here on for the purposes of the article).

The way to interpret that p-value is: observing 38 heads or fewer out of the 100 tosses could have happened in only 1% of infinitely many series of 100 fair coin tosses. The null hypothesis in this case is defined as the coin being fair, therefore having a 50% chance for heads and 50% chance for tails on each toss.

Assuming the null hypothesis is true allows the comparison of the observed data to what would have been expected under the null. It turns out the particular observation of 38/100 heads is a rather improbable and thus surprising outcome under the assumption of the null hypothesis. This is measured by the low p -value which also accounts for more extreme outcomes such as 37/100, 36/100, and so on all the way to 0/100.
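The coin-toss p-value can be reproduced by summing binomial probabilities directly. The following is a minimal sketch using only the Python standard library; the helper name `binom_cdf` is our own.

```python
from math import comb

def binom_cdf(k: int, n: int, p: float) -> float:
    """P(X <= k) for X ~ Binomial(n, p), summed term by term."""
    return sum(comb(n, i) * p**i * (1 - p)**(n - i) for i in range(k + 1))

# Probability of 38 or fewer heads in 100 tosses of a fair coin
p_value = binom_cdf(38, 100, 0.5)
print(round(p_value, 4))  # about 0.0105, the 1.05% quoted above
```

The sum runs over every outcome from 0/100 up to 38/100, which is how the p-value accounts for the more extreme outcomes as well as the observed one.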

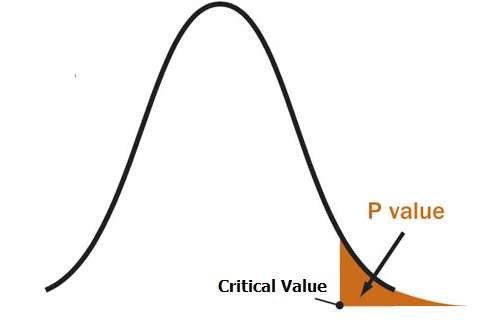

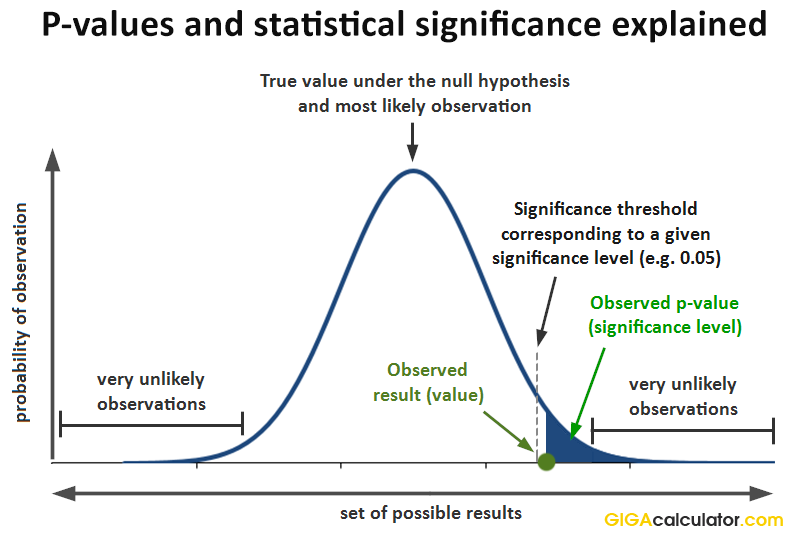

If one had a predefined level of statistical significance at 0.05 then one would claim that the outcome is statistically significant since its p-value of 0.01 meets the 0.05 significance level (0.01 ≤ 0.05). The relationship between p-values, significance level (p-value threshold), and statistical significance of an outcome is illustrated in this graph:

In fact, had the significance threshold been at any value above 0.01, the outcome would have been statistically significant, therefore it is usually said that with a p -value of 0.01, the outcome is statistically significant at any level above 0.01 .

Continuing with the interpretation: were one to reject the null hypothesis based on this p -value of 0.01, they would be acting as if a significance level of 0.01 or lower provides sufficient evidence against the hypothesis of the coin being fair. One could interpret this as a rule for a long-run series of experiments and inferences . In such a series, by using this p -value threshold one would incorrectly reject the fair coin hypothesis in at most 1 out of 100 cases, regardless of whether the coin is actually fair in any one of them. An incorrect rejection of the null is often called a type I error as opposed to a type II error which is to incorrectly fail to reject a null.

A more intuitive interpretation proceeds without reference to hypothetical long-runs. This second interpretation comes in the form of a strong argument from coincidence :

- there was a low probability (0.01 or 1%) that something would have happened assuming the null was true

- it did happen so it has to be an unusual (to the extent that the p -value is low) coincidence that it happened

- this warrants the conclusion to reject the null hypothesis

This argument stems from the concept of severe testing as developed by Prof. Deborah Mayo in her various works [1,2,3,4,5] and reflects an error-probabilistic approach to inference.

A p -value only makes sense under a specified null hypothesis

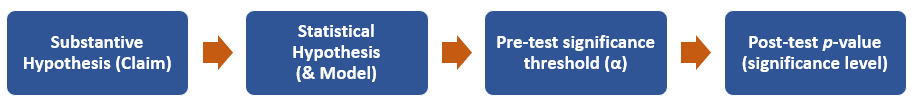

It is important to understand why a specified ‘null hypothesis’ should always accompany any reported p -value and why p-values are crucial in so-called Null Hypothesis Statistical Tests (NHST) . Statistical significance only makes sense when referring to a particular statistical model which in turn corresponds to a given null hypothesis. A p -value calculation has a statistical model and a statistical null hypothesis defined within it as prerequisites, and a statistical null is only interesting because of some tightly related substantive null such as ‘this treatment improves outcomes’. The relationship is shown in the chart below:

In the coin example, the substantive null that is interesting to (potentially) reject is the claim that the coin is fair. It translates to a statistical null hypothesis (model) with the following key properties:

- heads having 50% chance and tails having 50% chance, on each toss

- independence of each toss from any other toss. The outcome of any given coin toss does not depend on past or future coin tosses.

- homogeneity of the coin behavior over time (the true chance does not change across infinitely many tosses)

- a binomial error distribution

The resulting p -value of 0.01 from the coin toss experiment should be interpreted as the probability only under these particular assumptions.

What happens, however, if someone is interested in rejecting the claim that the coin is somewhat biased against heads? To be precise: the claim that it has a true frequency of heads of 40% or less (hence 60% for tails) is the one they are looking to deny with a certain evidential threshold.

The p-value needs to be recalculated under their null hypothesis, so now the same 38 heads out of 100 tosses result in a p-value of ~0.38. If they were interested in rejecting such a null hypothesis, then these data provide poor evidence against it since a 38/100 outcome would not be unusual at all if it were in fact true (p ≤ 0.38 would occur with probability 38%).

Similarly, the p-value needs to be recalculated for a claim of bias in the other direction, say that the coin produces heads with a frequency of 60% or more. The probability of observing 38 or fewer out of 100 under this null hypothesis is so extremely small (p-value ≈ 0.000007364, or 7.364 × 10⁻⁶ in standard form) that maintaining a claim for 60/40 bias in favor of heads becomes near-impossible for most practical purposes.
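The dependence of the p-value on the chosen null hypothesis can be sketched by evaluating the same lower-tail probability, P(X ≤ 38), at each hypothesized heads frequency. Evaluating at the boundary value of each claim (0.4 and 0.6) is our assumption about how the figures quoted above were obtained; the helper name `binom_cdf` is illustrative.

```python
from math import comb

def binom_cdf(k: int, n: int, p: float) -> float:
    """P(X <= k) for X ~ Binomial(n, p), summed term by term."""
    return sum(comb(n, i) * p**i * (1 - p)**(n - i) for i in range(k + 1))

heads, tosses = 38, 100

# Same data, three different hypothesized heads frequencies
for null_frequency in (0.5, 0.4, 0.6):
    print(null_frequency, binom_cdf(heads, tosses, null_frequency))
```

The same 38 heads out of 100 produce three very different values, which is why a p-value should always be reported together with the null hypothesis it was computed against.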

A p -value can be calculated for any frequentist statistical test. Common types of statistical tests include tests for:

- absolute difference in proportions;

- absolute difference in means;

- relative difference in means or proportions;

- goodness-of-fit;

- homogeneity

- independence

- analysis of variance (ANOVA)

and others. Different statistics would be computed depending on the error distribution of the parameter of interest in each case, e.g. a t value, z value, chi-square (χ²) value, F value, and so on.

p -values can then be calculated based on the cumulative distribution functions (CDFs) of these statistics whereas pre-test significance thresholds (critical values) can be computed based on the inverses of these functions. You can try these by plugging different inputs in our critical value calculator , and also by consulting its documentation.

In its generic form, a p -value formula can be written down as:

p = P(d(X) ≥ d(x₀); H₀)

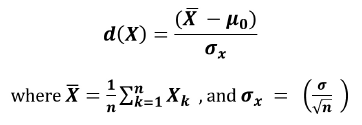

where P stands for probability, d(X) is a test statistic (distance function) of a random variable X, x₀ is a typical realization of X and H₀ is the selected null hypothesis. The semi-colon means ‘assuming’. The distance function is the aforementioned cumulative distribution function for the relevant error distribution. In its generic form a distance function equation can be written as:

X̄ is the arithmetic mean of the observed values, μ₀ is a hypothetical or expected mean to which X̄ is compared, and n is the sample size. The result of a distance function will often be expressed in a standardized form: the number of standard deviations between the observed value and the expected value.
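The equation itself is not reproduced in the text above. A common standardized form that matches the description is sketched below. It is an assumption on our part, and it introduces σ for a known population standard deviation, a symbol that the text does not define.

```latex
d(X) = \frac{\sqrt{n}\,\left(\bar{X} - \mu_0\right)}{\sigma}
```

Read this way, d(X) counts how many standard errors (σ/√n) separate the observed mean from the hypothesized mean μ₀.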

The p -value calculation is different in each case and so a different formula will be applied depending on circumstances. You can see examples in the p -values reported in our statistical calculators, such as the statistical significance calculator for difference of means or proportions , the Chi-square calculator , the risk ratio calculator , odds ratio calculator , hazard ratio calculator , and the normality calculator .

A very fresh (as of late 2020) example of the application of p -values in scientific hypothesis testing can be found in the recently concluded COVID-19 clinical trials. Multiple vaccines for the virus which spread from China in late 2019 and early 2020 have been tested on tens of thousands of volunteers split randomly into two groups – one gets the vaccine and the other gets a placebo. This is called a randomized controlled trial (RCT). The main parameter of interest is the difference between the rates of infections in the two groups. An appropriate test is the t-test for difference of proportions, but the same data can be examined in terms of risk ratios or odds ratio.

The null hypothesis in many of these medical trials is that the vaccine is at most 30% efficient. A statistical model can be built about the expected difference in proportions if the vaccine’s efficiency is 30% or less, and then the actual observed data from a medical trial can be compared to that null hypothesis. Most trials set their significance level at the minimum required by the regulatory bodies (FDA, EMA, etc.), which is usually set at 0.05. So, if the p-value from a vaccine trial is calculated to be below 0.05, the outcome would be statistically significant and the null hypothesis of the vaccine being less than or equal to 30% efficient would be rejected.

Let us say a vaccine trial results in a p-value of 0.0001 against that null hypothesis. As this is highly unlikely under the assumption of the null hypothesis being true, it provides very strong evidence against the hypothesis that the tested treatment has 30% efficiency or less.

However, many regulators stated that they require at least 50% proven efficiency. They posit a different null hypothesis and so the p -value presented before these bodies needs to be calculated against it. This p -value would be somewhat increased since 50% is a higher null value than 30%, but given that the observed effects of the first vaccines to finalize their trials are around 95% with 95% confidence interval bounds hovering around 90%, the p -value against a null hypothesis stating that the vaccine’s efficiency is 50% or less is likely to still be highly statistically significant, say at 0.001 . Such an outcome is to be interpreted as follows: had the efficiency been 50% or below, such an extreme outcome would have most likely not been observed, therefore one can proceed to reject the claim that the vaccine has efficiency of 50% or less with a significance level of 0.001 .

While this example is fictitious in that it doesn’t reference any particular experiment, it should serve as a good illustration of how null hypothesis statistical testing (NHST) operates based on p -values and significance thresholds.
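To make the effect of changing the null hypothesis concrete, here is one possible sketch. It does not use the difference-of-proportions test mentioned above. Instead it swaps in a conditional binomial test, which assumes 1:1 randomization with equal follow-up so that, given the total number of cases, the count in the vaccine group is binomial. The case counts are hypothetical and are not taken from any real trial, and all function names are our own.

```python
from math import comb

def binom_cdf(k: int, n: int, p: float) -> float:
    """P(X <= k) for X ~ Binomial(n, p), summed term by term."""
    return sum(comb(n, i) * p**i * (1 - p)**(n - i) for i in range(k + 1))

def p_value_efficacy(vaccine_cases: int, total_cases: int, null_efficacy: float) -> float:
    """One-sided p-value against the claim 'efficacy <= null_efficacy'.

    Assumes equal-sized groups, so that a case falls in the vaccine group
    with probability theta = (1 - VE) / (2 - VE) when efficacy equals VE.
    """
    theta0 = (1 - null_efficacy) / (2 - null_efficacy)
    return binom_cdf(vaccine_cases, total_cases, theta0)

# Hypothetical counts: 10 of 200 total cases occurred in the vaccine group
p30 = p_value_efficacy(10, 200, 0.30)
p50 = p_value_efficacy(10, 200, 0.50)
print(p30, p50)
```

Under these assumptions the p-value against the 50% null is larger than the one against the 30% null, yet both remain far below any conventional significance level, mirroring the reasoning in the example.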

The utility of p -values and statistical significance

It is not often appreciated how much utility p-values bring to the practice of performing statistical tests for scientific and business purposes.

Quantifying relative uncertainty of data

First and foremost, p -values are a convenient expression of the uncertainty in the data with respect to a given claim. They quantify how unexpected a given observation is, assuming some claim which is put to the test is true. If the p-value is low the probability that it would have been observed under the null hypothesis is low. This means the uncertainty the data introduce is high. Therefore, anyone defending the substantive claim which corresponds to the statistical null hypothesis would be pressed to concede that their position is untenable in the face of such data.

If the p-value is high, then the uncertainty with regard to the null hypothesis is low and we are not in a position to reject it, hence the corresponding claim can still be maintained.

As is evident from the generic p-value formula and the equation for a distance function which is a part of it, a p-value incorporates information about:

- the observed effect size relative to the null effect size

- the sample size of the test

- the variance and error distribution of the statistic of interest

It would be much more complicated to communicate the outcomes of a statistical test if one had to communicate all three pieces of information. Instead, by way of a single value on the scale of 0 to 1 one can communicate how surprising an outcome is. This value is affected by any change in any of these variables.
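How each of these three ingredients moves the p-value can be sketched with a simple one-sided z-test. The sketch assumes a known standard deviation and a standardized distance of the form √n·(mean − null mean)/σ; the function name and all numbers are hypothetical.

```python
import math
from statistics import NormalDist

def one_sided_p(observed_mean: float, null_mean: float, sigma: float, n: int) -> float:
    """P-value for H0: mean <= null_mean with known sigma (z-test)."""
    z = math.sqrt(n) * (observed_mean - null_mean) / sigma
    return 1 - NormalDist().cdf(z)

baseline = one_sided_p(observed_mean=0.5, null_mean=0.0, sigma=2.0, n=50)
bigger_effect = one_sided_p(observed_mean=1.0, null_mean=0.0, sigma=2.0, n=50)
bigger_sample = one_sided_p(observed_mean=0.5, null_mean=0.0, sigma=2.0, n=200)
more_noise = one_sided_p(observed_mean=0.5, null_mean=0.0, sigma=4.0, n=50)

print(baseline, bigger_effect, bigger_sample, more_noise)
```

A larger observed effect or a larger sample lowers the p-value, while a larger variance raises it, so a single number ends up summarizing all three.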

A p-value from one statistical test can easily and directly be compared to a p-value from another. Significance tests rest on minimal assumptions, and provided all of those assumptions are met, the strength of the statistical evidence offered by data relative to a null hypothesis of interest is the same in two tests if they have approximately equal p-values.

This is especially useful in conducting meta-analyses of various sorts, or for combining evidence from multiple tests.

p -value interpretation in outcomes of experiments

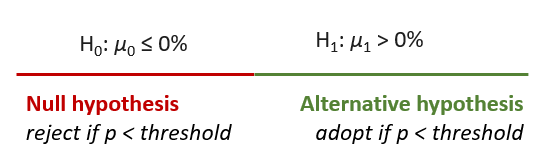

When a p-value is calculated for the outcome of a randomized controlled experiment, it is used to assess the strength of evidence against a null hypothesis of interest, such as that a given intervention does not have a positive effect. If H₀: μ₀ ≤ 0% and the observed effect is μ₁ = 30% and the calculated p-value is 0.025, this can be used to reject the claim H₀: μ₀ ≤ 0% at any significance level ≥ 0.025. This, in turn, allows us to claim that H₁, a complementary hypothesis called the ‘alternative hypothesis’, is in fact true. In this case since H₀: μ₀ ≤ 0% then H₁: μ₁ > 0% in order to exhaust the parameter space, as illustrated below:

A claim such as the above corresponds to what is called a one-sided null hypothesis. There could be a point null as well, for example the claim that an intervention has no effect whatsoever translates to H₀: μ₀ = 0%. In such a case the corresponding p-value refers to that point null and hence should be interpreted as rejecting the claim of the effect being exactly zero. For those interested in the differences between point null hypotheses and one-sided hypotheses the articles on onesided.org should be an interesting read. TLDR: most of the time you’d want to reject a directional claim and hence a one-tailed p-value should be reported [8].

These finer points aside, after observing a low enough p -value, one can claim the rejection of the null and hence the adoption of the complementary alternative hypothesis as true. The alternative hypothesis is simply a negation of the null and is therefore a composite claim such as ‘there is a positive effect’ or ‘there is some non-zero effect’. Note that any inference about a particular effect size within the alternative space has not been tested and hence claiming it has probability equal to p calculated against a zero effect null hypothesis (a.k.a. the nil hypothesis) does not make sense.

p -value interpretation in regressions and correlations of observational data

When performing statistical analyses of observational data, p -values are often calculated for regressors in addition to regression coefficients and for the correlation in addition to correlation coefficients. Such a p -value measures how surprising the observed correlation or regression coefficient would be if the variable of interest were in fact orthogonal to the outcome variable. That is: how likely would it be to observe the apparent relationship if there were no actual relationship between the variable and the outcome variable?

Our correlation calculator outputs both p -values and confidence intervals for the calculated coefficients and is an easy way to explore the concept in the case of correlations. Extrapolating to regressions is then straightforward.
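One way to make the "no actual relationship" idea concrete is a permutation test: shuffle the outcome values so that any real link is destroyed, and count how often the shuffled data show a correlation at least as strong as the observed one. This is a minimal sketch and not necessarily the method a given calculator uses; the data and the helper names `pearson_r` and `permutation_p_value` are hypothetical.

```python
import random
from math import sqrt


def pearson_r(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    return sxy / sqrt(sxx * syy)


def permutation_p_value(xs, ys, n_permutations=5000, seed=0):
    """Two-sided permutation p-value for the null of no relationship."""
    rng = random.Random(seed)
    observed = abs(pearson_r(xs, ys))
    shuffled = list(ys)
    hits = 0
    for _ in range(n_permutations):
        rng.shuffle(shuffled)  # break any real link between xs and ys
        if abs(pearson_r(xs, shuffled)) >= observed:
            hits += 1
    # The +1 counts the observed arrangement itself, so p is never exactly 0.
    return (hits + 1) / (n_permutations + 1)


# Hypothetical data with a strong, nearly linear relationship.
xs = [1, 2, 3, 4, 5, 6, 7, 8, 9, 10]
ys = [2.1, 3.9, 6.2, 7.8, 10.1, 12.2, 13.8, 16.1, 18.0, 20.2]
p_value = permutation_p_value(xs, ys)
print(f"r = {pearson_r(xs, ys):.4f}, permutation p-value = {p_value:.4f}")
```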

Misinterpretations of statistically significant p -values

There are several common misinterpretations [7] of p -values and statistical significance and no calculator can save one from falling for them. The following errors are often committed when a result is seen as statistically significant.

A result may be highly statistically significant (e.g. p -value 0.0001) but it might still have no practical consequences due to a trivial effect size. This often happens with overpowered designs, but it can also happen in a properly designed statistical test. This error can be avoided by always reporting the effect size and confidence intervals around it.
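The following sketch shows how sample size alone can turn a trivial effect into a tiny p -value. It assumes a one-sided z-test comparing two group means with a known, common standard deviation; the effect of 0.01 standard deviations and the sample sizes are invented for illustration.

```python
from math import sqrt
from statistics import NormalDist


def one_sided_p(diff, sd, n_per_group):
    """One-sided p-value for an observed difference in means (z-test, known sd)."""
    standard_error = sd * sqrt(2.0 / n_per_group)
    return 1.0 - NormalDist().cdf(diff / standard_error)


# The same trivial observed effect (0.01 standard deviations) at two sample sizes.
p_small_n = one_sided_p(diff=0.01, sd=1.0, n_per_group=1_000)
p_huge_n = one_sided_p(diff=0.01, sd=1.0, n_per_group=1_000_000)
print(f"n = 1,000 per group:     p = {p_small_n:.3f}")
print(f"n = 1,000,000 per group: p = {p_huge_n:.2e}")
```

The effect size is identical in both calls; only the precision of the estimate changes, which is why the effect size and its confidence interval need to be reported alongside the p -value.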

Observing a highly significant result, say a p -value of 0.01, does not mean that the observed difference is likely to be the true difference. In fact, the likelihood that the observed difference is the true difference is much, much smaller. Remember that statistical significance has a strict meaning in the NHST framework.

For example, if the observed effect size μ 1 from an intervention is 20% improvement in some outcome and a p -value against the null hypothesis of μ 0 ≤ 0% has been calculated to be 0.01, it does not mean that one can reject μ 0 ≤ 20% with a p -value of 0.01. In fact, the p -value against μ 0 ≤ 20% would be 0.5, which is not statistically significant by any measure.
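The arithmetic behind this example can be sketched as follows, assuming the estimate is approximately normal with the same standard error under every null considered. The standard error is not given in the text, so it is backed out from the stated p -value of 0.01; the helper `p_against` is a name made up for this sketch.

```python
from statistics import NormalDist

std_normal = NormalDist()

observed = 20.0   # observed improvement, in percent
p_vs_zero = 0.01  # reported one-sided p-value against the null 'effect <= 0%'

# Back out the standard error implied by the reported p-value (an assumption:
# the estimate is normally distributed around the true effect).
z_vs_zero = std_normal.inv_cdf(1.0 - p_vs_zero)
standard_error = observed / z_vs_zero


def p_against(null_bound):
    """One-sided p-value against the null 'effect <= null_bound'."""
    z = (observed - null_bound) / standard_error
    return 1.0 - std_normal.cdf(z)


for bound in (0.0, 10.0, 20.0):
    print(f"null: effect <= {bound:>4.0f}%  ->  p = {p_against(bound):.3f}")
```

When the null boundary equals the observed effect, the z statistic is zero and the p -value is 0.5, matching the figure quoted above.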

To make claims about a particular effect size it is recommended to use confidence intervals or severity, or both.

A typical example is stating that a p -value of 0.02 means that there is a 98% probability that the alternative hypothesis is true, or that there is a 2% probability that the null hypothesis is true. This is a logical error.

By design, even if the null hypothesis is true, p -values equal to or lower than 0.02 would be observed exactly 2% of the time, so one cannot use the fact that a low p -value has been observed to argue there is only 2% probability that the null hypothesis is true. Frequentist and error-statistical methods do not allow one to attach probabilities to hypotheses or claims, only to events [4] . Doing so requires an exhaustive list of hypotheses and prior probabilities attached to them which goes firmly into decision-making territory. Put in Bayesian terms, the p -value is not a posterior probability.
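A small simulation can illustrate the "2% of the time" statement. The sketch below repeatedly runs an experiment in which the null is true by construction (both groups are drawn from the same distribution) and counts how often the one-sided p -value is 0.02 or lower. The sample sizes, seed, and use of a z-test with known standard deviation are assumptions chosen to keep the example short.

```python
import random
from math import sqrt
from statistics import NormalDist


def simulate_null_p_values(n_experiments=20_000, n_per_group=20, seed=1):
    """Simulate one-sided z-test p-values when the null hypothesis is true."""
    rng = random.Random(seed)
    std_normal = NormalDist()
    standard_error = sqrt(2.0 / n_per_group)  # known sd = 1 in both groups
    p_values = []
    for _ in range(n_experiments):
        # Both groups come from the same distribution: there is no true effect.
        control = [rng.gauss(0.0, 1.0) for _ in range(n_per_group)]
        treatment = [rng.gauss(0.0, 1.0) for _ in range(n_per_group)]
        diff = sum(treatment) / n_per_group - sum(control) / n_per_group
        p_values.append(1.0 - std_normal.cdf(diff / standard_error))
    return p_values


p_values = simulate_null_p_values()
share_low = sum(p <= 0.02 for p in p_values) / len(p_values)
print(f"share of p-values <= 0.02 under a true null: {share_low:.3f}")
```

The share should land close to 0.02 even though the null is true in every single run, which is why a low p -value cannot be read as the probability that the null is true.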

Misinterpretations of statistically non-significant outcomes

Statistically non-significant p -values, that is, p -values greater than the specified significance threshold α (alpha), can lead to a different set of misinterpretations. Due to the ubiquitous use of p -values, these are committed often as well.

Treating a high p -value / low significance level as evidence, by itself, that the null hypothesis is true is a common mistake. For example, after observing p = 0.2 one may claim this is evidence that there is no effect, e.g. no difference between two means.

However, it is trivial to demonstrate why it is wrong to interpret a high p -value as providing support for the null hypothesis. Take a simple experiment in which one measures only 2 (two) people or objects in the control and treatment groups. The p -value for this test of significance will surely not be statistically significant. Does that mean that the intervention is ineffective? Of course not, since that claim has not been tested severely enough. Using a statistic such as severity can completely eliminate this error [4,5] .

A more detailed response would say that failure to observe a statistically significant result, given that the test has enough statistical power, can be used to argue for accepting the null hypothesis to the extent warranted by the power and with reference to the minimum detectable effect for which it was calculated. For example, if the statistical test had 99% power to detect an effect of size μ 1 at level α and it failed, then it could be argued that it is quite unlikely that there exists an effect of size μ 1 or greater, as in that case one would most likely have observed a significant p -value.
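To see how much the power of a test changes what a non-significant result can tell you, the sketch below computes the power of a one-sided z-test for two very different sample sizes. It assumes a known, common standard deviation and a hypothetical true effect of half a standard deviation; `power_one_sided_z` is a name invented for this example.

```python
from math import sqrt
from statistics import NormalDist


def power_one_sided_z(effect, sd, n_per_group, alpha=0.05):
    """Power of a one-sided two-sample z-test to detect a true effect of given size."""
    std_normal = NormalDist()
    standard_error = sd * sqrt(2.0 / n_per_group)
    z_critical = std_normal.inv_cdf(1.0 - alpha)
    # Probability that the z statistic exceeds the critical value when the
    # true difference in means equals `effect`.
    return 1.0 - std_normal.cdf(z_critical - effect / standard_error)


power_tiny = power_one_sided_z(effect=0.5, sd=1.0, n_per_group=2)
power_large = power_one_sided_z(effect=0.5, sd=1.0, n_per_group=300)
print(f"power with   2 per group: {power_tiny:.3f}")
print(f"power with 300 per group: {power_large:.6f}")
```

With two observations per group the test would miss a real effect of this size most of the time, so a high p -value says very little. With 300 per group a miss would be rare, so a non-significant result does count against an effect that large.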

This is a softer version of the above mistake wherein instead of claiming support for the null hypothesis, a high p -value is taken, by itself, as indicating that the effect size must be small.

This is a mistake since the test might have simply lacked power to exclude many effects of meaningful size. Examining confidence intervals and performing severity calculations against particular hypothesized effect sizes would be a way to avoid this issue.

References:

[1] Mayo, D.G. 1983. “An Objective Theory of Statistical Testing.” Synthese 57 (3): 297–340. DOI:10.1007/BF01064701.

[2] Mayo, D.G. 1996. “Error and the Growth of Experimental Knowledge.” Chicago, Illinois: University of Chicago Press. DOI:10.1080/106351599260247.

[3] Mayo, D.G., and A. Spanos. 2006. “Severe Testing as a Basic Concept in a Neyman–Pearson Philosophy of Induction.” The British Journal for the Philosophy of Science 57 (2): 323–357. DOI:10.1093/bjps/axl003.

[4] Mayo, D.G., and A. Spanos. 2011. “Error Statistics.” Vol. 7, in Handbook of Philosophy of Science Volume 7 – Philosophy of Statistics, by Mayo, D.G., Spanos, A. et al., 1–46. Elsevier.

[5] Mayo, D.G. 2018. “Statistical Inference as Severe Testing.” Cambridge: Cambridge University Press. ISBN: 978-1107664647.

[6] Georgiev, G.Z. 2019. “Statistical Methods in Online A/B Testing.” ISBN: 978-1694079725.

[7] Greenland, S. et al. 2016. “Statistical tests, P values, confidence intervals, and power: a guide to misinterpretations.” European Journal of Epidemiology 31: 337–350. DOI:10.1007/s10654-016-0149-3.

[8] Georgiev, G.Z. 2018. “Directional claims require directional (statistical) hypotheses.” [online, accessed on Dec 07, 2020, at https://www.onesided.org/articles/directional-claims-require-directional-hypotheses.php]

An applied statistician, data analyst, and optimizer by calling, Georgi has expertise in web analytics, statistics, design of experiments, and business risk management. He covers a variety of topics where mathematical models and statistics are useful. Georgi is also the author of “Statistical Methods in Online A/B Testing”.


How to Correctly Interpret P Values

Topics: Hypothesis Testing

The P value is used all over statistics, from t-tests to regression analysis . Everyone knows that you use P values to determine statistical significance in a hypothesis test . In fact, P values often determine what studies get published and what projects get funding.

Despite being so important, the P value is a slippery concept that people often interpret incorrectly. How do you interpret P values?

In this post, I'll help you to understand P values in a more intuitive way and to avoid a very common misinterpretation that can cost you money and credibility.

What Is the Null Hypothesis in Hypothesis Testing?

In every experiment, there is an effect or difference between groups that the researchers are testing. It could be the effectiveness of a new drug, building material, or other intervention that has benefits. Unfortunately for the researchers, there is always the possibility that there is no effect, that is, that there is no difference between the groups. This lack of a difference is called the null hypothesis , which is essentially the position a devil’s advocate would take when evaluating the results of an experiment.

To see why, let’s imagine an experiment for a drug that we know is totally ineffective. The null hypothesis is true: there is no difference between the experimental groups at the population level.

Despite the null being true, it’s entirely possible that there will be an effect in the sample data due to random sampling error. In fact, it is extremely unlikely that the sample groups will ever exactly equal the null hypothesis value. Consequently, the devil’s advocate position is that the observed difference in the sample does not reflect a true difference between populations .

What Are P Values?

- High P values: your data are likely with a true null.

- Low P values: your data are unlikely with a true null.

A low P value suggests that your sample provides enough evidence that you can reject the null hypothesis for the entire population.

How Do You Interpret P Values?

For example, suppose that a vaccine study produced a P value of 0.04. This P value indicates that if the vaccine had no effect, you’d obtain the observed difference or more in 4% of studies due to random sampling error.

P values address only one question: how likely are your data, assuming a true null hypothesis? They do not measure support for the alternative hypothesis. This limitation leads us into the next section to cover a very common misinterpretation of P values.

P values are not the probability of making a mistake.

Incorrect interpretations of P values are very common. The most common mistake is to interpret a P value as the probability of making a mistake by rejecting a true null hypothesis (a Type I error ).

There are several reasons why P values can’t be the error rate.

First, P values are calculated based on the assumptions that the null is true for the population and that the difference in the sample is caused entirely by random chance. Consequently, P values can’t tell you the probability that the null is true or false because it is 100% true from the perspective of the calculations.

Second, while a low P value indicates that your data are unlikely assuming a true null, it can’t evaluate which of two competing cases is more likely:

- The null is true but your sample was unusual.

- The null is false.

Determining which case is more likely requires subject area knowledge and replicate studies.

Let’s go back to the vaccine study and compare the correct and incorrect way to interpret the P value of 0.04:

- Correct: Assuming that the vaccine had no effect, you’d obtain the observed difference or more in 4% of studies due to random sampling error.

- Incorrect: If you reject the null hypothesis, there’s a 4% chance that you’re making a mistake.

To see a graphical representation of how hypothesis tests work, see my post: Understanding Hypothesis Tests: Significance Levels and P Values .

What Is the True Error Rate?

If a P value is not the error rate, what the heck is the error rate? (Can you guess which way this is heading now?)

Sellke et al.* have estimated the error rates associated with different P values. While the precise error rate depends on various assumptions (which I discuss here ), the table summarizes them for middle-of-the-road assumptions.

| P value | Estimated error rate |
| --- | --- |
| 0.05 | At least 23% (and typically close to 50%) |
| 0.01 | At least 7% (and typically close to 15%) |

Do the higher error rates in this table surprise you? Unfortunately, the common misinterpretation of P values as the error rate creates the illusion of substantially more evidence against the null hypothesis than is justified. As you can see, if you base a decision on a single study with a P value near 0.05, the difference observed in the sample may not exist at the population level. That can be costly!
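To get a feel for why the error rate can sit so far above the P value, here is a back-of-the-envelope sketch. It is not the method used by Sellke et al., and it is not meant to reproduce their figures. It assumes, purely for illustration, that half of all tested nulls are true, that tests are two-sided z-tests, and that real effects shift the z statistic to a mean of 2.8 (roughly 80% power at the 0.05 level). The function `share_of_true_nulls` is a made-up name.

```python
from statistics import NormalDist


def share_of_true_nulls(p_low, p_high, prior_null=0.5, alt_mean_z=2.8):
    """Among studies whose two-sided P value falls in [p_low, p_high],
    return the share for which the null hypothesis is actually true.

    Assumptions (illustrative only): a fraction `prior_null` of nulls is true,
    and under the alternative the z statistic is normal with mean `alt_mean_z`.
    """
    std_normal = NormalDist()
    alternative = NormalDist(mu=alt_mean_z, sigma=1.0)
    # |z| values that correspond to the two P value bounds.
    z_low = std_normal.inv_cdf(1.0 - p_high / 2.0)
    z_high = std_normal.inv_cdf(1.0 - p_low / 2.0)
    # Under a true null, P values are uniform, so the chance is the interval width.
    prob_given_null = p_high - p_low
    # Under the alternative, add up both tails of the two-sided test.
    prob_given_alt = (alternative.cdf(z_high) - alternative.cdf(z_low)) + (
        alternative.cdf(-z_low) - alternative.cdf(-z_high)
    )
    weighted_null = prior_null * prob_given_null
    return weighted_null / (weighted_null + (1.0 - prior_null) * prob_given_alt)


near_05 = share_of_true_nulls(0.04, 0.05)
near_01 = share_of_true_nulls(0.009, 0.01)
print(f"P value near 0.05: share of true nulls = {near_05:.2f}")
print(f"P value near 0.01: share of true nulls = {near_01:.2f}")
```

Under these assumptions the share of true nulls among results with a P value near 0.05 is several times larger than 0.05 itself. Changing `prior_null` or `alt_mean_z` moves the numbers, which is the point of the caveat that the precise error rate depends on various assumptions.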

Now that you know how to interpret P values, read my five guidelines for how to use P values and avoid mistakes .

You can also read my rebuttal to an academic journal that actually banned P values !

An exciting study about the reproducibility of experimental results was published in August 2015. This study highlights the importance of understanding the true error rate. For more information, read my blog post: P Values and the Replication of Experiments .