Examples of data management plans

These examples of data management plans (DMPs) were provided by University of Minnesota researchers. They feature different elements. One is concise and the other is detailed. One utilizes secondary data, while the other collects primary data. Both have explicit plans for how the data is handled through the life cycle of the project.

School of Public Health featuring data use agreements and secondary data analysis

All data to be used in the proposed study will be obtained from XXXXXX; only completely de-identified data will be obtained. No new data collection is planned. The pre-analysis data obtained from the XXX should be requested from the XXX directly. Below is the contact information provided with the funding opportunity announcement (PAR_XXX).

Types of data : Appendix # contains the specific variable list that will be used in the proposed study. The data specification including the size, file format, number of files, data dictionary and codebook will be documented upon receipt of the data from the XXX. Any newly created variables from the process of data management and analyses will be updated to the data specification.

Data use for others : The post-analysis data may be useful for researchers who plan to study WTC-related injuries and changes in personal economic status and quality of life. The Injury Exposure Index that will be created from this project will also be useful for causal analysis between WTC exposure and injuries among WTC general responders.

Data limitations for secondary use : While the data involve human subjects, only completely de-identified data will be available and used in the proposed study. Secondary data use is not expected to be limited, given the permission obtained to use the data from the XXX, through the data use agreement (Appendix #).

Data preparation for transformations, preservation and sharing : The pre-analysis data will be delivered in Stata format. The post-analysis data will also be stored in Stata format. If requested, the data can be converted to other formats, including comma-separated values (CSV), Excel, SAS, R, and SPSS.

Metadata documentation : The Data Use Log will document all data-related activities. The proposed study investigators will have access to a highly secured network drive controlled by the University of Minnesota that requires logging of any data use. For specific data management activities, the Stata “log” function will record all activities and store them in the relevant designated folders. A standard file naming convention will be used, with the format “WTCINJ_[six letter of data indication]_mmddyy_[initial of personnel]”.
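The naming convention above can be enforced in code so that every file follows the same pattern. The following is a minimal Python sketch, not part of the original plan: the function name `build_file_name`, the example code `injidx`, and the choice to upper-case the code and initials are all assumptions made for illustration.

```python
from datetime import date


def build_file_name(data_code: str, when: date, initials: str) -> str:
    """Build a name shaped like WTCINJ_[six-letter code]_mmddyy_[initials].

    Upper-casing the code and initials is an assumption; the plan does not
    specify letter case.
    """
    if len(data_code) != 6 or not data_code.isalpha():
        raise ValueError("data_code must be exactly six letters")
    if not initials.isalpha():
        raise ValueError("initials must contain only letters")
    return f"WTCINJ_{data_code.upper()}_{when.strftime('%m%d%y')}_{initials.upper()}"


# Hypothetical example values, chosen only to show the resulting pattern.
example_name = build_file_name("injidx", date(2024, 3, 5), "ab")
print(example_name)  # WTCINJ_INJIDX_030524_AB
```

Validating the inputs up front means a malformed name is rejected when the file is created rather than discovered later in the archive.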

Data sharing agreement : Data sharing will require two steps of permission. 1) data use agreement from the XXXXXX for pre-analysis data use, and 2) data use agreement from the Principal Investigator, Dr. XXX XXX ([email protected] and 612-xxx-xxxx) for post-analysis data use.

Data repository/sharing/archiving : A long-term data sharing and preservation plan will be used to store and make publicly accessible the data beyond the life of the project. The data will be deposited into the Data Repository for the University of Minnesota (DRUM), http://hdl.handle.net/11299/166578. This University Libraries’ hosted institutional data repository is an open access platform for dissemination and archiving of university research data. Data files in DRUM are written to an Isilon storage system with two copies, one local to each of the two geographically separated University of Minnesota Data Centers. The local Isilon cluster stores the data in such a way that the data can survive the loss of any two disks or any one node of the cluster. Within two hours of the initial write, data replication to the 2nd Isilon cluster commences. The 2nd cluster employs the same protections as the local cluster, and both verify with a checksum procedure that data has not been altered on write. In addition, DRUM provides long-term preservation of digital data files for at least 10 years using services such as migration (limited format types), secure backup, and bit-level checksums, and maintains persistent DOIs for data sets, facilitating data citations. In accordance with DRUM policies, the de-identified data will be accompanied by the appropriate documentation, metadata, and code to facilitate reuse and provide the potential for interoperability with similar data sets.
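The idea behind checksum verification can be illustrated in a few lines of Python. This is only a sketch of the general technique, assuming SHA-256 as the hash; it does not describe how DRUM or the Isilon clusters actually implement their checks, and the function names and file names are hypothetical.

```python
import hashlib
import os
import tempfile


def sha256_of_file(path: str, chunk_size: int = 65536) -> str:
    """Return the SHA-256 hex digest of a file, read in chunks."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def copies_match(original: str, replica: str) -> bool:
    """True when both files have the same checksum."""
    return sha256_of_file(original) == sha256_of_file(replica)


# Demonstration with two temporary files standing in for the two copies.
with tempfile.TemporaryDirectory() as folder:
    first = os.path.join(folder, "data.csv")
    second = os.path.join(folder, "data_copy.csv")
    for path in (first, second):
        with open(path, "wb") as handle:
            handle.write(b"id,score\n1,42\n")
    identical = copies_match(first, second)

    # Appending to one copy changes its checksum, so the mismatch is detected.
    with open(second, "ab") as handle:
        handle.write(b"2,17\n")
    altered_detected = not copies_match(first, second)

print(identical, altered_detected)  # True True
```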

Expected timeline : Preparation for data sharing will begin with completion of planned publications and anticipated data release date will be six months prior.


College of Education and Human Development featuring quantitative and qualitative data

Types of data to be collected and shared The following quantitative and qualitative data (for which we have participant consent to share in de-identified form) will be collected as part of the project and will be available for sharing in raw or aggregate form. Specifically, any individual level data will be de-identified before sharing. Demographic data may only be shared at an aggregated level as needed to maintain confidentiality.

Student-level data including

- Pre- and posttest data from proximal and distal writing measures

- Demographic data (age, sex, race/ethnicity, free or reduced price lunch status, home language, special education and English language learning services status)

- Pre/post knowledge and skills data (collected via secure survey tools such as Qualtrics)

- Teacher efficacy data (collected via secure survey tools such as Qualtrics)

- Fidelity data (teachers’ accuracy of implementation of Data-Based Instruction; DBI)

- Teacher logs of time spent on DBI activities

- Demographic data (age, sex, race/ethnicity, degrees earned, teaching certification, years and nature of teaching experience)

- Qualitative field notes from classroom observations and transcribed teacher responses to semi-structured follow-up interview questions.

- Coded qualitative data

- Audio and video files from teacher observations and interviews (participants will sign a release form indicating that they understand that sharing of these files may reveal their identity)

Procedures for managing and for maintaining the confidentiality of the data to be shared

The following procedures will be used to maintain data confidentiality (for managing confidentiality of qualitative data, we will follow additional guidelines).

- When participants give consent and are enrolled in the study, each will be assigned a unique (random) study identification number. This ID number will be associated with all participant data that are collected, entered, and analyzed for the study.

- All paper data will be stored in locked file cabinets in locked lab/storage space accessible only to research staff at the performance sites. Whenever possible, paper data will only be labeled with the participant’s study ID. Any direct identifiers will be redacted from paper data as soon as it is processed for data entry.

- All electronic data will be stripped of participant names and other identifiable information such as addresses and emails.

- During the active project period (while data are being collected, coded, and analyzed), data from students and teachers will be entered remotely from the two performance sites into the University of Minnesota’s secure BOX storage (box.umn.edu), which is a highly secure online file-sharing system. Participants’ names and any other direct identifiers will not be entered into this system; rather, study ID numbers will be associated with the data entered into BOX.

- Data will be downloaded from BOX for analysis onto password protected computers and saved only on secure University servers. A log (saved in BOX) will be maintained to track when, at which site, and by whom data are entered as well as downloaded for analysis (including what data are downloaded and for what specific purpose).
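The first and third procedures above (random study IDs and removal of direct identifiers) can be sketched in Python as follows. This is an illustration under stated assumptions, not the project's actual protocol: the ID format (`P` plus six digits), the field names, and the function names are all hypothetical.

```python
import secrets


def assign_study_ids(names):
    """Map each participant name to a unique random study ID (hypothetical format)."""
    id_for_name = {}
    used = set()
    for name in names:
        study_id = f"P{secrets.randbelow(900000) + 100000}"
        while study_id in used:  # redraw on the rare collision
            study_id = f"P{secrets.randbelow(900000) + 100000}"
        used.add(study_id)
        id_for_name[name] = study_id
    return id_for_name


def deidentify(records, id_for_name, direct_identifiers=("name", "email", "address")):
    """Drop direct identifiers and attach the study ID to each record."""
    cleaned = []
    for record in records:
        row = {k: v for k, v in record.items() if k not in direct_identifiers}
        row["study_id"] = id_for_name[record["name"]]
        cleaned.append(row)
    return cleaned


# Made-up records for illustration only.
records = [
    {"name": "Teacher A", "email": "a@example.org", "score": 12},
    {"name": "Teacher B", "email": "b@example.org", "score": 15},
]
key = assign_study_ids([r["name"] for r in records])
shared = deidentify(records, key)
print(shared)
```

In a sketch like this, the `key` mapping is the only link between names and study IDs, so it would need to be stored separately from the de-identified records and under tighter access control.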

Roles and responsibilities of project or institutional staff in the management and retention of research data

Key personnel on the project (PIs XXXXX and XXXXX; Co-Investigator XXXXX) will be the data stewards while the data are “active” (i.e., during data collection, coding, analysis, and publication phases of the project), and will be responsible for documenting and managing the data throughout this time. Additional project personnel (cost analyst, project coordinators, and graduate research assistants at each site) will receive human subjects and data management training at their institutions, and will also be responsible for adhering to the data management plan described above.

Project PIs will develop study-specific protocols and will train all project staff who handle data to follow these protocols. Protocols will include guidelines for managing confidentiality of data (described above), as well as protocols for naming, organizing, and sharing files and entering and downloading data. For example, we will establish file naming conventions and hierarchies for file and folder organization, as well as conventions for versioning files. We will also develop a directory that lists all types of data and where they are stored and entered. As described above, we will create a log to track data entry and downloads for analysis. We will designate one project staff member (e.g., UMN project coordinator) to ensure that these protocols are followed and documentation is maintained. This person will work closely with Co-Investigator XXXXX, who will oversee primary data analysis activities.

At the end of the grant and publication processes, the data will be archived and shared (see Access below) and the University of Minnesota Libraries will serve as the steward of the de-identified, archived dataset from that point forward.

Expected schedule for data access

The complete dataset is expected to be accessible after the study and all related publications are completed, and will remain accessible for at least 10 years after the data are made available publicly. The PIs and Co-Investigator acknowledge that each annual report must contain information about data accessibility, and that the timeframe of data accessibility will be reviewed as part of the annual progress reviews and revised as necessary for each publication.

Format of the final dataset

The format of the final dataset to be available for public access is as follows: De-identified raw paper data (e.g., student pre/posttest data) will be scanned into pdf files. Raw data collected electronically (e.g., via survey tools, field notes) will be available in MS Excel spreadsheets or pdf files. Raw data from audio/video files will be in .wav format. Audio/video materials and field notes from observations/interviews will also be transcribed and coded onto paper forms and scanned into pdf files. The final database will be in a .csv file that can be exported into MS Excel, SAS, SPSS, or ASCII files.

Dataset documentation to be provided

The final data file to be shared will include (a) raw item-level data (where applicable to recreate analyses) with appropriate variable and value labels, (b) all computed variables created during setup and scoring, and (c) all scale scores for the demographic, behavioral, and assessment data. These data will be the de-identified and individual- or aggregate-level data used for the final and published analyses.

Dataset documentation will consist of electronic codebooks documenting the following information: (a) a description of the research questions, methodology, and sample, (b) a description of each specific data source (e.g., measures, observation protocols), and (c) a description of the raw data and derived variables, including variable lists and definitions.

To aid in final dataset documentation, throughout the project, we will maintain a log of when, where, and how data were collected, decisions related to methods, coding, and analysis, statistical analyses, software and instruments used, where data and corresponding documentation are stored, and future research ideas and plans.

Method of data access

Final peer-reviewed publications resulting from the study/grant will be accompanied by the dataset used at the time of publication, during and after the grant period. A long-term data sharing and preservation plan will be used to store and make publicly accessible the data beyond the life of the project. The data will be deposited into the Data Repository for the University of Minnesota (DRUM), http://hdl.handle.net/11299/166578. This University Libraries’ hosted institutional data repository is an open access platform for dissemination and archiving of university research data. Data files in DRUM are written to an Isilon storage system with two copies, one local to each of the two geographically separated University of Minnesota Data Centers. The local Isilon cluster stores the data in such a way that the data can survive the loss of any two disks or any one node of the cluster. Within two hours of the initial write, data replication to the 2nd Isilon cluster commences. The 2nd cluster employs the same protections as the local cluster, and both verify with a checksum procedure that data has not been altered on write. In addition, DRUM provides long-term preservation of digital data files for at least 10 years using services such as migration (limited format types), secure backup, and bit-level checksums, and maintains persistent DOIs for datasets, facilitating data citations. In accordance with DRUM policies, the de-identified data will be accompanied by the appropriate documentation, metadata, and code to facilitate reuse and provide the potential for interoperability with similar datasets.

The main benefit of DRUM is that whatever is shared through this repository is public; however, a completely open system is not optimal if any of the data could be identifying (e.g., certain types of demographic data). We will work with the University of MN Library System to determine if DRUM is the best option. Another option available to the University of MN, ICPSR ( https://www.icpsr.umich.edu/icpsrweb/ ), would allow us to share data at different levels. Through ICPSR, data are available to researchers at member institutions of ICPSR rather than publicly. ICPSR allows for various mediated forms of sharing, where people interested in getting less de-identified individual-level data would sign data use agreements before receiving the data, or would need to use special software to access it directly from ICPSR rather than downloading it, for security purposes. ICPSR is a good option for sensitive or other kinds of data that are difficult to de-identify, but is not as open as DRUM. We expect that data for this project will be de-identifiable to a level that we can use DRUM, but will consider ICPSR as an option if needed.

Data agreement

No specific data sharing agreement will be needed if we use DRUM; however, DRUM does have a general end-user access policy ( conservancy.umn.edu/pages/drum/policies/#end-user-access-policy ). If we go with a less open access system such as ICPSR, we will work with ICPSR and the Un-funded Research Agreements (UFRA) coordinator at the University of Minnesota to develop necessary data sharing agreements.

Circumstances preventing data sharing

The data for this study fall under multiple statutes for confidentiality including multiple IRB requirements for confidentiality and FERPA. If it is not possible to meet all of the requirements of these agencies, data will not be shared.

For example, at the two sites where data will be collected, both universities (University of Minnesota and University of Missouri) and school districts have specific requirements for data confidentiality that will be described in consent forms. Participants will be informed of procedures used to maintain data confidentiality and that only de-identified data will be shared publicly. Some demographic data may not be sharable at the individual level and thus would only be provided in aggregate form.

When we collect audio/video data, participants will sign a release form that provides options to have data shared with project personnel only and/or for sharing purposes. We will not share audio/video data from people who do not consent to share it, and we will not publicly share any data that could identify an individual (these parameters will be specified in our IRB-approved informed consent forms). De-identifying is also required for FERPA data. The level of de-identification needed to meet these requirements is extensive, so it may not be possible to share all raw data exactly as collected in order to protect privacy of participants and maintain confidentiality of data.

Data Analysis Techniques in Research – Methods, Tools & Examples

Varun Saharawat is a seasoned professional in the fields of SEO and content writing. With a profound knowledge of the intricate aspects of these disciplines, Varun has established himself as a valuable asset in the world of digital marketing and online content creation.

Data analysis techniques in research are essential because they allow researchers to derive meaningful insights from data sets to support their hypotheses or research objectives.

Data Analysis Techniques in Research : While various groups, institutions, and professionals may have diverse approaches to data analysis, a universal definition captures its essence. Data analysis involves refining, transforming, and interpreting raw data to derive actionable insights that guide informed decision-making for businesses.

A straightforward illustration of data analysis emerges when we make everyday decisions, basing our choices on past experiences or predictions of potential outcomes.



What is Data Analysis?

Data analysis is the systematic process of inspecting, cleaning, transforming, and interpreting data with the objective of discovering valuable insights and drawing meaningful conclusions. This process involves several steps:

- Inspecting : Initial examination of data to understand its structure, quality, and completeness.

- Cleaning : Removing errors, inconsistencies, or irrelevant information to ensure accurate analysis.

- Transforming : Converting data into a format suitable for analysis, such as normalization or aggregation.

- Interpreting : Analyzing the transformed data to identify patterns, trends, and relationships.

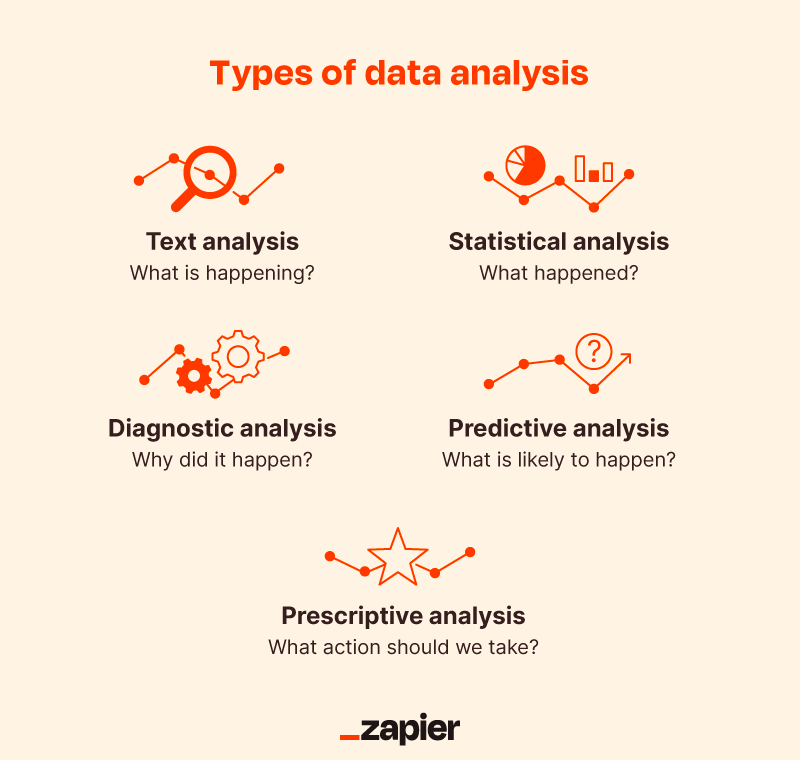

Types of Data Analysis Techniques in Research

Data analysis techniques in research are categorized into qualitative and quantitative methods, each with its specific approaches and tools. These techniques are instrumental in extracting meaningful insights, patterns, and relationships from data to support informed decision-making, validate hypotheses, and derive actionable recommendations. Below is an in-depth exploration of the various types of data analysis techniques commonly employed in research:

1) Qualitative Analysis:

Definition: Qualitative analysis focuses on understanding non-numerical data, such as opinions, concepts, or experiences, to derive insights into human behavior, attitudes, and perceptions.

- Content Analysis: Examines textual data, such as interview transcripts, articles, or open-ended survey responses, to identify themes, patterns, or trends.

- Narrative Analysis: Analyzes personal stories or narratives to understand individuals’ experiences, emotions, or perspectives.

- Ethnographic Studies: Involves observing and analyzing cultural practices, behaviors, and norms within specific communities or settings.

2) Quantitative Analysis:

Quantitative analysis emphasizes numerical data and employs statistical methods to explore relationships, patterns, and trends. It encompasses several approaches:

Descriptive Analysis:

- Frequency Distribution: Represents the number of occurrences of distinct values within a dataset.

- Central Tendency: Measures such as mean, median, and mode provide insights into the central values of a dataset.

- Dispersion: Techniques like variance and standard deviation indicate the spread or variability of data.
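The three descriptive measures above can be computed with Python's standard library alone. The scores below are made-up values used only to show the calls; this is a minimal sketch rather than a full analysis.

```python
import statistics
from collections import Counter

# Hypothetical exam scores, for illustration only.
scores = [72, 85, 85, 90, 64, 78, 85, 90]

frequency = Counter(scores)               # frequency distribution
mean = statistics.mean(scores)            # central tendency
median = statistics.median(scores)
mode = statistics.mode(scores)
variance = statistics.variance(scores)    # sample variance (divides by n - 1)
std_dev = statistics.stdev(scores)        # sample standard deviation

print(frequency[85], mean, median, mode)  # 3 81.125 85.0 85
print(round(variance, 2), round(std_dev, 2))
```

Note that `statistics.variance` and `statistics.stdev` are the sample versions; `statistics.pvariance` and `statistics.pstdev` are the population versions.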

Diagnostic Analysis:

- Regression Analysis: Assesses the relationship between dependent and independent variables, enabling prediction and, with an appropriate study design, insight into possible causal relationships.

- ANOVA (Analysis of Variance): Examines differences between groups to identify significant variations or effects.

Predictive Analysis:

- Time Series Forecasting: Uses historical data points to predict future trends or outcomes.

- Machine Learning Algorithms: Techniques like decision trees, random forests, and neural networks predict outcomes based on patterns in data.

Prescriptive Analysis:

- Optimization Models: Utilizes linear programming, integer programming, or other optimization techniques to identify the best solutions or strategies.

- Simulation: Mimics real-world scenarios to evaluate various strategies or decisions and determine optimal outcomes.

Specific Techniques:

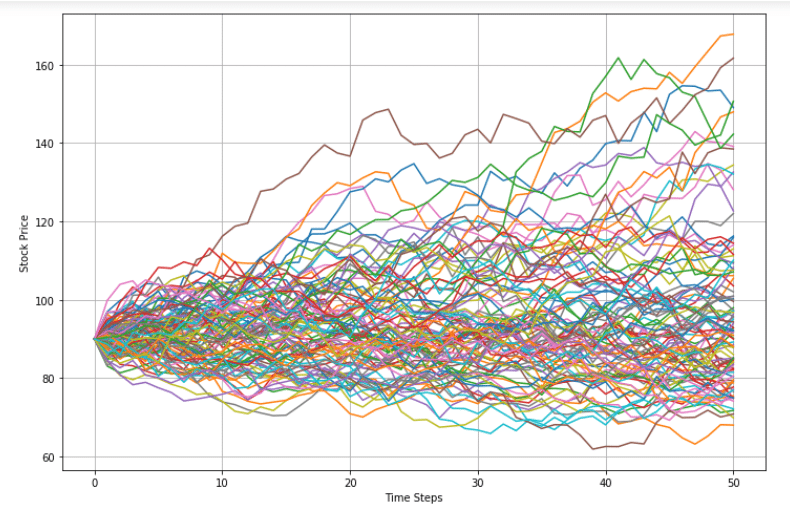

- Monte Carlo Simulation: Models probabilistic outcomes to assess risk and uncertainty.

- Factor Analysis: Reduces the dimensionality of data by identifying underlying factors or components.

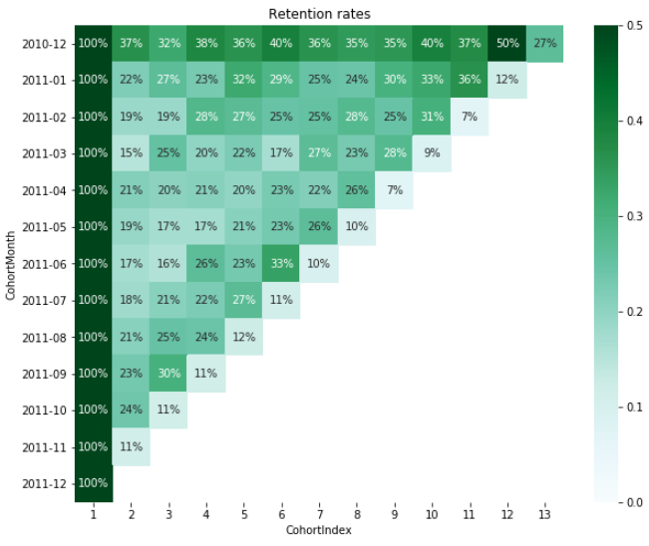

- Cohort Analysis: Studies specific groups or cohorts over time to understand trends, behaviors, or patterns within these groups.

- Cluster Analysis: Classifies objects or individuals into homogeneous groups or clusters based on similarities or attributes.

- Sentiment Analysis: Uses natural language processing and machine learning techniques to determine sentiment, emotions, or opinions from textual data.
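As an illustration of one of these techniques, the sketch below uses Monte Carlo simulation to estimate a risk. The scenario is an assumption made for this example: two task durations are each drawn uniformly between 0 and 1 time units, and the quantity of interest is the probability that their total exceeds 1.5. The function name and the fixed seed are likewise hypothetical choices.

```python
import random


def estimate_overrun_probability(trials: int, threshold: float, seed: int = 42) -> float:
    """Estimate P(total duration > threshold) by repeated random sampling."""
    rng = random.Random(seed)  # fixed seed so the run is repeatable
    overruns = 0
    for _ in range(trials):
        total = rng.uniform(0.0, 1.0) + rng.uniform(0.0, 1.0)
        if total > threshold:
            overruns += 1
    return overruns / trials


estimate = estimate_overrun_probability(trials=100_000, threshold=1.5)
print(estimate)
```

For this simple setup the exact answer is 0.125, so the estimate can be checked against it; in realistic models no closed-form answer is available, which is when simulation becomes useful.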


Data Analysis Techniques in Research Examples

To provide a clearer understanding of how data analysis techniques are applied in research, let’s consider a hypothetical research study focused on evaluating the impact of online learning platforms on students’ academic performance.

Research Objective:

Determine if students using online learning platforms achieve higher academic performance compared to those relying solely on traditional classroom instruction.

Data Collection:

- Quantitative Data: Academic scores (grades) of students using online platforms and those using traditional classroom methods.

- Qualitative Data: Feedback from students regarding their learning experiences, challenges faced, and preferences.

Data Analysis Techniques Applied:

1) Descriptive Analysis:

- Calculate the mean, median, and mode of academic scores for both groups.

- Create frequency distributions to represent the distribution of grades in each group.

2) Diagnostic Analysis:

- Conduct an Analysis of Variance (ANOVA) to determine if there’s a statistically significant difference in academic scores between the two groups.

- Perform Regression Analysis to assess the relationship between the time spent on online platforms and academic performance.

3) Predictive Analysis:

- Utilize Time Series Forecasting to predict future academic performance trends based on historical data.

- Implement Machine Learning algorithms to develop a predictive model that identifies factors contributing to academic success on online platforms.

4) Prescriptive Analysis:

- Apply Optimization Models to identify the optimal combination of online learning resources (e.g., video lectures, interactive quizzes) that maximize academic performance.

- Use Simulation Techniques to evaluate different scenarios, such as varying student engagement levels with online resources, to determine the most effective strategies for improving learning outcomes.

5) Specific Techniques:

- Conduct Factor Analysis on numerically coded feedback (e.g., Likert-scale survey items) to identify underlying factors influencing students’ perceptions and experiences with online learning.

- Perform Cluster Analysis to segment students based on their engagement levels, preferences, or academic outcomes, enabling targeted interventions or personalized learning strategies.

- Apply Sentiment Analysis on textual feedback to categorize students’ sentiments as positive, negative, or neutral regarding online learning experiences.

By applying a combination of qualitative and quantitative data analysis techniques, this research example aims to provide comprehensive insights into the effectiveness of online learning platforms.


Data Analysis Techniques in Quantitative Research

Quantitative research involves collecting numerical data to examine relationships, test hypotheses, and make predictions. Various data analysis techniques are employed to interpret and draw conclusions from quantitative data. Here are some key data analysis techniques commonly used in quantitative research:

1) Descriptive Statistics:

- Description: Descriptive statistics are used to summarize and describe the main aspects of a dataset, such as central tendency (mean, median, mode), variability (range, variance, standard deviation), and distribution (skewness, kurtosis).

- Applications: Summarizing data, identifying patterns, and providing initial insights into the dataset.

2) Inferential Statistics:

- Description: Inferential statistics involve making predictions or inferences about a population based on a sample of data. This technique includes hypothesis testing, confidence intervals, t-tests, chi-square tests, analysis of variance (ANOVA), regression analysis, and correlation analysis.

- Applications: Testing hypotheses, making predictions, and generalizing findings from a sample to a larger population.

3) Regression Analysis:

- Description: Regression analysis is a statistical technique used to model and examine the relationship between a dependent variable and one or more independent variables. Linear regression, multiple regression, logistic regression, and nonlinear regression are common types of regression analysis.

- Applications: Predicting outcomes, identifying relationships between variables, and understanding the impact of independent variables on the dependent variable.
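A simple linear regression with one independent variable can be fitted by ordinary least squares in a few lines. The sketch below is pure Python; the data (hours on a platform versus grade) and the function name `fit_line` are made up for illustration.

```python
def fit_line(x, y):
    """Ordinary least squares fit of y = slope * x + intercept."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Hypothetical data: weekly hours on an online platform and exam grade.
hours = [1, 2, 3, 4, 5]
grades = [52, 55, 61, 64, 68]

slope, intercept = fit_line(hours, grades)
predicted_at_6 = slope * 6 + intercept
print(round(slope, 2), round(intercept, 2), round(predicted_at_6, 2))  # 4.1 47.7 72.3
```

Here the slope says that, in this made-up sample, each additional hour is associated with about 4.1 more grade points; it describes an association in the data, not a demonstrated cause.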

4) Correlation Analysis:

- Description: Correlation analysis is used to measure and assess the strength and direction of the relationship between two or more variables. The Pearson correlation coefficient, Spearman rank correlation coefficient, and Kendall’s tau are commonly used measures of correlation.

- Applications: Identifying associations between variables and assessing the degree and nature of the relationship.
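The difference between the Pearson and Spearman coefficients is easiest to see on data that is monotonic but not linear. The following pure-Python sketch uses made-up values; the helper names are hypothetical, and Spearman is computed as the Pearson correlation of average ranks.

```python
import math


def pearson(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    spread_x = math.sqrt(sum((a - mean_x) ** 2 for a in x))
    spread_y = math.sqrt(sum((b - mean_y) ** 2 for b in y))
    return cov / (spread_x * spread_y)


def ranks(values):
    """1-based ranks; tied values share the average of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    result = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        average_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            result[order[k]] = average_rank
        i = j + 1
    return result


def spearman(x, y):
    """Spearman rank correlation: Pearson correlation of the ranks."""
    return pearson(ranks(x), ranks(y))


x = [1, 2, 3, 4, 5]
y = [1, 4, 9, 16, 25]  # y grows with x, but not in a straight line

print(round(pearson(x, y), 3))   # 0.981
print(round(spearman(x, y), 3))  # 1.0
```

Spearman reaches 1 because the ordering of `y` matches the ordering of `x` exactly, while Pearson stays below 1 because the relationship is curved.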

5) Factor Analysis:

- Description: Factor analysis is a multivariate statistical technique used to identify and analyze underlying relationships or factors among a set of observed variables. It helps in reducing the dimensionality of data and identifying latent variables or constructs.

- Applications: Identifying underlying factors or constructs, simplifying data structures, and understanding the underlying relationships among variables.

6) Time Series Analysis:

- Description: Time series analysis involves analyzing data collected or recorded over a specific period at regular intervals to identify patterns, trends, and seasonality. Techniques such as moving averages, exponential smoothing, autoregressive integrated moving average (ARIMA), and Fourier analysis are used.

- Applications: Forecasting future trends, analyzing seasonal patterns, and understanding time-dependent relationships in data.
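Two of the techniques named above, moving averages and simple exponential smoothing, are short enough to write out directly. This is a minimal sketch on made-up monthly values; the function names and the smoothing factor `alpha = 0.5` are assumptions for the example.

```python
def moving_average(series, window):
    """Mean of each consecutive run of `window` observations."""
    return [
        sum(series[i:i + window]) / window
        for i in range(len(series) - window + 1)
    ]


def exponential_smoothing(series, alpha):
    """Simple exponential smoothing: new = alpha * value + (1 - alpha) * previous."""
    smoothed = [series[0]]
    for value in series[1:]:
        smoothed.append(alpha * value + (1 - alpha) * smoothed[-1])
    return smoothed


# Hypothetical monthly observations.
monthly = [10, 12, 14, 16, 18]

print(moving_average(monthly, 3))          # [12.0, 14.0, 16.0]
print(exponential_smoothing(monthly, 0.5)) # [10, 11.0, 12.5, 14.25, 16.125]
```

A larger `alpha` makes the smoothed series follow recent observations more closely, while a smaller one produces a smoother but slower-reacting series.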

7) ANOVA (Analysis of Variance):

- Description: Analysis of variance (ANOVA) is a statistical technique used to analyze and compare the means of two or more groups or treatments to determine if they are statistically different from each other. One-way ANOVA and two-way ANOVA are common types, and MANOVA (Multivariate Analysis of Variance) extends the approach to several dependent variables.

- Applications: Comparing group means, testing hypotheses, and determining the effects of categorical independent variables on a continuous dependent variable.
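The F statistic at the heart of one-way ANOVA compares variation between group means with variation within groups. The sketch below computes it by hand in pure Python on made-up groups; the function name is hypothetical, and turning F into a p-value requires the F distribution, which a statistics library such as SciPy (`scipy.stats.f_oneway`) provides.

```python
def one_way_anova_f(groups):
    """F statistic for one-way ANOVA: between-group over within-group mean square."""
    all_values = [value for group in groups for value in group]
    grand_mean = sum(all_values) / len(all_values)
    group_count = len(groups)
    total_count = len(all_values)

    ss_between = sum(
        len(group) * (sum(group) / len(group) - grand_mean) ** 2
        for group in groups
    )
    ss_within = sum(
        (value - sum(group) / len(group)) ** 2
        for group in groups
        for value in group
    )

    ms_between = ss_between / (group_count - 1)
    ms_within = ss_within / (total_count - group_count)
    return ms_between / ms_within


# Hypothetical scores for three groups.
groups = [[1, 2, 3], [2, 3, 4], [5, 6, 7]]
f_statistic = one_way_anova_f(groups)
print(round(f_statistic, 6))  # 13.0
```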

8) Chi-Square Tests:

- Description: Chi-square tests are non-parametric statistical tests used to assess the association between categorical variables in a contingency table. The Chi-square test of independence, goodness-of-fit test, and test of homogeneity are common chi-square tests.

- Applications: Testing relationships between categorical variables, assessing goodness-of-fit, and evaluating independence.
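For the test of independence, the chi-square statistic sums the squared differences between observed and expected counts, each divided by the expected count. The following pure-Python sketch uses a made-up 2×2 table and a hypothetical function name; it applies no continuity correction and stops at the statistic and degrees of freedom, since the p-value needs the chi-square distribution from a statistics library.

```python
def chi_square_independence(table):
    """Pearson chi-square statistic and degrees of freedom for a contingency table."""
    row_totals = [sum(row) for row in table]
    column_totals = [sum(column) for column in zip(*table)]
    total = sum(row_totals)

    statistic = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            # Expected count if rows and columns were independent.
            expected = row_totals[i] * column_totals[j] / total
            statistic += (observed - expected) ** 2 / expected

    degrees_of_freedom = (len(table) - 1) * (len(table[0]) - 1)
    return statistic, degrees_of_freedom


# Hypothetical counts: rows are two groups, columns are two outcomes.
table = [[10, 20], [20, 10]]
statistic, degrees_of_freedom = chi_square_independence(table)
print(round(statistic, 4), degrees_of_freedom)  # 6.6667 1
```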

These quantitative data analysis techniques provide researchers with valuable tools and methods to analyze, interpret, and derive meaningful insights from numerical data. The selection of a specific technique often depends on the research objectives, the nature of the data, and the underlying assumptions of the statistical methods being used.


Data Analysis Methods

Data analysis methods refer to the techniques and procedures used to analyze, interpret, and draw conclusions from data. These methods are essential for transforming raw data into meaningful insights, facilitating decision-making processes, and driving strategies across various fields. Here are some common data analysis methods:

1) Descriptive Statistics:

- Description: Descriptive statistics summarize and organize data to provide a clear and concise overview of the dataset. Measures such as mean, median, mode, range, variance, and standard deviation are commonly used.

2) Inferential Statistics:

- Description: Inferential statistics involve making predictions or inferences about a population based on a sample of data. Techniques such as hypothesis testing, confidence intervals, and regression analysis are used.

3) Exploratory Data Analysis (EDA):

- Description: EDA techniques involve visually exploring and analyzing data to discover patterns, relationships, anomalies, and insights. Methods such as scatter plots, histograms, box plots, and correlation matrices are utilized.

- Applications: Identifying trends, patterns, outliers, and relationships within the dataset.
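Box plots flag outliers with a simple rule that can also be applied directly: a value is suspicious when it lies more than 1.5 interquartile ranges outside the quartiles. The sketch below applies that rule with the standard library; the data and the function name are made up for illustration, and the 1.5 multiplier is the conventional choice rather than a fixed requirement.

```python
import statistics


def iqr_outliers(values):
    """Values outside [Q1 - 1.5 * IQR, Q3 + 1.5 * IQR]."""
    q1, _, q3 = statistics.quantiles(values, n=4)
    iqr = q3 - q1
    low, high = q1 - 1.5 * iqr, q3 + 1.5 * iqr
    return [value for value in values if value < low or value > high]


# Hypothetical measurements with one obvious anomaly.
values = [12, 13, 14, 15, 15, 16, 17, 18, 95]
print(iqr_outliers(values))  # [95]
```

A flagged value is a prompt to look more closely (a data-entry error, a different population, a genuine extreme), not an automatic reason to delete it.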

4) Predictive Analytics:

- Description: Predictive analytics use statistical algorithms and machine learning techniques to analyze historical data and make predictions about future events or outcomes. Techniques such as regression analysis, time series forecasting, and machine learning algorithms (e.g., decision trees, random forests, neural networks) are employed.

- Applications: Forecasting future trends, predicting outcomes, and identifying potential risks or opportunities.

5) Prescriptive Analytics:

- Description: Prescriptive analytics involve analyzing data to recommend actions or strategies that optimize specific objectives or outcomes. Optimization techniques, simulation models, and decision-making algorithms are utilized.

- Applications: Recommending optimal strategies, decision-making support, and resource allocation.

6) Qualitative Data Analysis:

- Description: Qualitative data analysis involves analyzing non-numerical data, such as text, images, videos, or audio, to identify themes, patterns, and insights. Methods such as content analysis, thematic analysis, and narrative analysis are used.

- Applications: Understanding human behavior, attitudes, perceptions, and experiences.

7) Big Data Analytics:

- Description: Big data analytics methods are designed to analyze large volumes of structured and unstructured data to extract valuable insights. Technologies such as Hadoop, Spark, and NoSQL databases are used to process and analyze big data.

- Applications: Analyzing large datasets, identifying trends, patterns, and insights from big data sources.

8) Text Analytics:

- Description: Text analytics methods involve analyzing textual data, such as customer reviews, social media posts, emails, and documents, to extract meaningful information and insights. Techniques such as sentiment analysis, text mining, and natural language processing (NLP) are used.

- Applications: Analyzing customer feedback, monitoring brand reputation, and extracting insights from textual data sources.
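The simplest form of sentiment analysis can be sketched as a word count against small positive and negative word lists. The lexicons, the function name `sentiment_score`, and the sample reviews below are all made up for illustration; production systems typically use much larger vocabularies or trained NLP models.

```python
import re

# Tiny illustrative lexicons (assumed for this sketch only).
POSITIVE = {"great", "love", "excellent", "fast"}
NEGATIVE = {"slow", "broken", "poor", "terrible"}

def sentiment_score(text):
    """Count positive words minus negative words in a piece of text."""
    words = re.findall(r"[a-z']+", text.lower())
    return sum(w in POSITIVE for w in words) - sum(w in NEGATIVE for w in words)

reviews = [
    "Great product, fast delivery, love it",
    "Terrible support and the app is slow",
    "It arrived on Tuesday",
]
scores = [sentiment_score(r) for r in reviews]
print(scores)  # [3, -2, 0]
```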

These data analysis methods are instrumental in transforming data into actionable insights, informing decision-making processes, and driving organizational success across various sectors, including business, healthcare, finance, marketing, and research. The selection of a specific method often depends on the nature of the data, the research objectives, and the analytical requirements of the project or organization.

Data Analysis Tools

Data analysis tools are essential instruments that facilitate the process of examining, cleaning, transforming, and modeling data to uncover useful information, make informed decisions, and drive strategies. Here are some prominent data analysis tools widely used across various industries:

1) Microsoft Excel:

- Description: A spreadsheet software that offers basic to advanced data analysis features, including pivot tables, data visualization tools, and statistical functions.

- Applications: Data cleaning, basic statistical analysis, visualization, and reporting.

2) R Programming Language:

- Description: An open-source programming language specifically designed for statistical computing and data visualization.

- Applications: Advanced statistical analysis, data manipulation, visualization, and machine learning.

3) Python (with Libraries like Pandas, NumPy, Matplotlib, and Seaborn):

- Description: A versatile programming language with libraries that support data manipulation, analysis, and visualization.

- Applications: Data cleaning, statistical analysis, machine learning, and data visualization.

4) SPSS (Statistical Package for the Social Sciences):

- Description: A comprehensive statistical software suite used for data analysis, data mining, and predictive analytics.

- Applications: Descriptive statistics, hypothesis testing, regression analysis, and advanced analytics.

5) SAS (Statistical Analysis System):

- Description: A software suite used for advanced analytics, multivariate analysis, and predictive modeling.

- Applications: Data management, statistical analysis, predictive modeling, and business intelligence.

6) Tableau:

- Description: A data visualization tool that allows users to create interactive and shareable dashboards and reports.

- Applications: Data visualization, business intelligence, and interactive dashboard creation.

7) Power BI:

- Description: A business analytics tool developed by Microsoft that provides interactive visualizations and business intelligence capabilities.

- Applications: Data visualization, business intelligence, reporting, and dashboard creation.

8) SQL (Structured Query Language) Databases (e.g., MySQL, PostgreSQL, Microsoft SQL Server):

- Description: Database management systems that support data storage, retrieval, and manipulation using SQL queries.

- Applications: Data retrieval, data cleaning, data transformation, and database management.

9) Apache Spark:

- Description: A fast and general-purpose distributed computing system designed for big data processing and analytics.

- Applications: Big data processing, machine learning, data streaming, and real-time analytics.

10) IBM SPSS Modeler:

- Description: A data mining software application used for building predictive models and conducting advanced analytics.

- Applications: Predictive modeling, data mining, statistical analysis, and decision optimization.

These tools serve various purposes and cater to different data analysis needs, from basic statistical analysis and data visualization to advanced analytics, machine learning, and big data processing. The choice of a specific tool often depends on the nature of the data, the complexity of the analysis, and the specific requirements of the project or organization.

Importance of Data Analysis in Research

The importance of data analysis in research cannot be overstated; it serves as the backbone of any scientific investigation or study. Here are several key reasons why data analysis is crucial in the research process:

- Data analysis helps ensure that the results obtained are valid and reliable. By systematically examining the data, researchers can identify any inconsistencies or anomalies that may affect the credibility of the findings.

- Effective data analysis provides researchers with the necessary information to make informed decisions. By interpreting the collected data, researchers can draw conclusions, make predictions, or formulate recommendations based on evidence rather than intuition or guesswork.

- Data analysis allows researchers to identify patterns, trends, and relationships within the data. This can lead to a deeper understanding of the research topic, enabling researchers to uncover insights that may not be immediately apparent.

- In empirical research, data analysis plays a critical role in testing hypotheses. Researchers collect data to either support or refute their hypotheses, and data analysis provides the tools and techniques to evaluate these hypotheses rigorously.

- Transparent and well-executed data analysis enhances the credibility of research findings. By clearly documenting the data analysis methods and procedures, researchers allow others to replicate the study, thereby contributing to the reproducibility of research findings.

- In fields such as business or healthcare, data analysis helps organizations allocate resources more efficiently. By analyzing data on consumer behavior, market trends, or patient outcomes, organizations can make strategic decisions about resource allocation, budgeting, and planning.

- In public policy and social sciences, data analysis is instrumental in developing and evaluating policies and interventions. By analyzing data on social, economic, or environmental factors, policymakers can assess the effectiveness of existing policies and inform the development of new ones.

- Data analysis allows for continuous improvement in research methods and practices. By analyzing past research projects, identifying areas for improvement, and implementing changes based on data-driven insights, researchers can refine their approaches and enhance the quality of future research endeavors.

Data Analysis Techniques in Research FAQs

What are the 5 techniques for data analysis?

The five techniques for data analysis are:

- Descriptive Analysis

- Diagnostic Analysis

- Predictive Analysis

- Prescriptive Analysis

- Qualitative Analysis

What are techniques of data analysis in research?

Techniques of data analysis in research encompass both qualitative and quantitative methods. These techniques involve processes like summarizing raw data, investigating causes of events, forecasting future outcomes, offering recommendations based on predictions, and examining non-numerical data to understand concepts or experiences.

What are the 3 methods of data analysis?

The three primary methods of data analysis are:

- Qualitative Analysis

- Quantitative Analysis

- Mixed-Methods Analysis

What are the four types of data analysis techniques?

The four types of data analysis techniques are:

- Descriptive Analysis

- Diagnostic Analysis

- Predictive Analysis

- Prescriptive Analysis

Research Data Management: Plan for Data

What is a Data Management Plan?

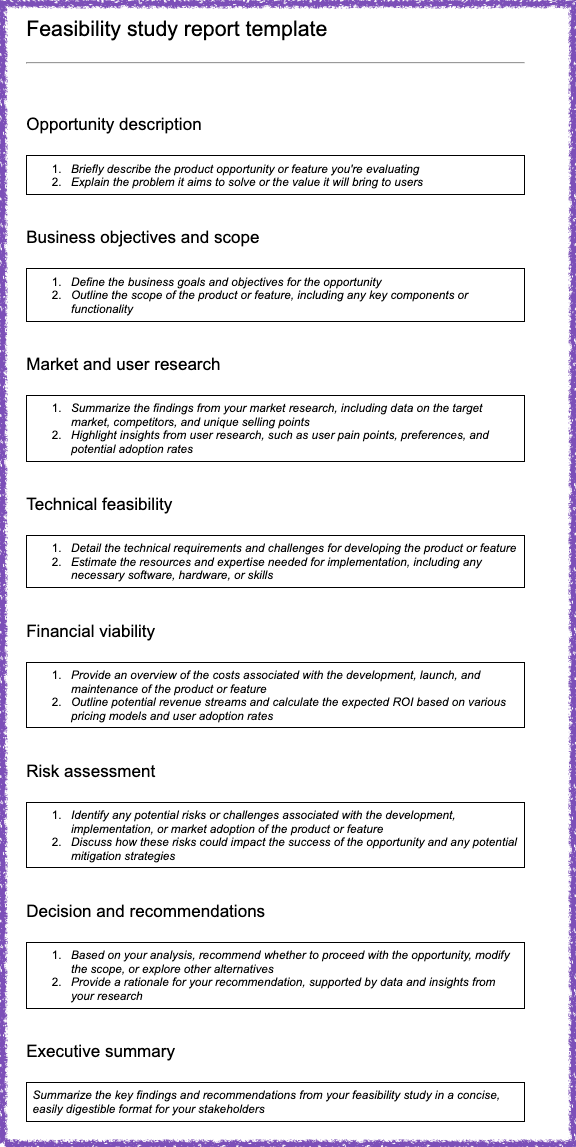

Data management plans (DMPs) are documents that outline how data will be collected, stored, secured, analyzed, disseminated, and preserved over the lifecycle of a research project. They are typically short documents created in the early stages of a project, and they may evolve over time. Increasingly, they are required by funders and institutions alike, and they are a recommended best practice in research data management.

Tab through this guide to consider each stage of the research data management process, and each correlated section of a data management plan.

Tools for Data Management Planning

DMPTool is a collaborative effort between several universities to streamline the data management planning process.

The DMPTool supports the majority of federal and many non-profit and private funding agencies that require data management plans as part of a grant proposal application. ( View the list of supported organizations and corresponding templates.) If the funder you're applying to isn't listed or you just want to create one as good practice, there is an option for a generic plan.

Key features:

- Data management plan templates from most major funders

- Guided creation of a data management plan with click-throughs and helpful questions and examples

- Access to public plans, to review ahead of creating your own

- Ability to share plans with collaborators as well as copy and reuse existing plans

How to get started:

Log in with your yale.edu email to be directed to a NetID sign-in, and review the quick start guide.

Research Data Lifecycle

Additional Resources for Data Management Planning

- Last Updated: Sep 27, 2023 1:15 PM

- URL: https://guides.library.yale.edu/datamanagement

© 2022 Yale University Library • All Rights Reserved

What is a Data Management Plan (DMP)?

A DMP (or DMSP, Data Management and Sharing Plan) describes what data will be acquired or generated as part of a research project, how the data will be managed, described, analyzed, and stored, and what mechanisms will be used at the end of your project to share and preserve the data.

One of the key advantages to writing a DMP is that it helps you think concretely about your process, identify potential weaknesses in your plans, and provide a record of what you intend to do. Developing a DMP can prompt valuable discussion among collaborators that uncovers and resolves unspoken assumptions, and provide a framework for documentation that keeps graduate students, postdocs, and collaborators on the same page with respect to practices, expectations, and policies.

Data management planning is most effective in the early stages of a research project, but it is never too late to develop a data management plan.

How can I find out what my funding agency requires?

Most funding agencies require a DMP as part of an application for funding, but the specific requirements differ across and even within agencies. Many agencies, including the NSF and NIH, have requirements that apply generally, with some additional considerations depending on the specific funding announcement or the directorate/institute.

Here are some resources to help identify what you’ll need:

- DMP Requirements (PAPPG, Chapter 2, Proposal prep instructions)

- Data sharing policy (PAPPG, Chapter 11, Post-award requirements)

- Links to directorate-specific requirements

- Data Management and Sharing Policy Overview

- Research Covered Under the Data Management and Sharing Policy

- Writing a Data Management and Sharing Plan

- Final NIH Policy for Data Management and Sharing

- NOTE: Some specific NIH Institutes, Centers, or Offices have additional requirements for DMSPs. For example, applications to NIMH require a data validation schedule. Please check with your institute and your funding announcement to ensure all aspects expected are included in your DMSP.

- Data Sharing Policies

- General guidance and examples

- Data Sharing and Management Policies for US Federal Agencies

Need help figuring out what your agency needs? Ask a PRDS team member!

Where can I get help with writing a DMP?

With recent and upcoming changes to the research landscape, it can be tricky to determine what information is needed for your Data Management (and Sharing) Plan. As a Princeton researcher, you have several ways of obtaining support in this area.

You have free access to an online tool for writing DMPs: DMPTool. You just need to sign in as a Princeton researcher, and you’ll be able to use and adapt templates, example DMPs, and Princeton-specific guidance. You can find some helpful public guidance on using DMPTool created by Arizona State University.

You are also welcome to schedule an appointment with a member of the PRDS team. While we are unable to write your DMP for you, we are happy to review your funding call and guide you through the information you will need to provide as part of your DMP.

PRDS also offers free and confidential feedback on draft DMPs. If you would like to request feedback, we require:

- Your draft DMP (either via email [[email protected]] or by selecting the “Request Feedback” option on the last page of your DMP template in the DMPTool).

- Your funding announcement.

- Your deadline to submit your grant proposal.

NOTE: Reviewing DMPs is a process and may involve several rounds of edits or a conversation between you and our team. The timeline for requesting a DMP review is as follows:

- Single-lab or single-PI grants: no fewer than 5 business days;

- Complex, multi-institution grants, including Centers: no fewer than 10 business days.

We will make every effort to review all DMPs submitted to us; however, we cannot guarantee a thorough review if a plan is submitted after our requested time frame.

Details will vary from funder to funder, but the Digital Curation Centre’s Checklist for a Data Management Plan provides a useful list of questions to consider when writing a DMP:

- What data will you collect or create?

  - Type of data, e.g., observation, experimental, simulation, derived/compiled

  - Form of data, e.g., text, numeric, audiovisual, discipline- or instrument-specific

  - File formats, ideally using research community standards or open formats (e.g., txt, csv, pdf, jpg)

- How will the data be collected or created?

- What documentation and metadata will accompany the data?

- How will you manage any ethical issues?

- How will you manage copyright and intellectual property rights issues?

- How will the data be stored and backed up during research?

- How will you manage access and security?

- Which data should be retained, shared, and/or preserved?

- What is the long-term preservation plan for the dataset?

- How will you share the data?

- Are any restrictions on data sharing required?

- Who will be responsible for data management?

- What resources will you require to implement your plan?

Additional key things to consider

Consider the types of data that will be created or used in the project. For example, will your project…

- generate large amounts of data?

- require coordinated effort between offsite collaborators?

- use data that has licensing agreements or other restrictions on its use?

- involve human or non-human animal subjects?

Answers to questions like these will help you accurately assess what you’ll need during the project and prevent delays during crucial stages.

Decide on file and directory naming conventions and stick to them. Document them (either independently or as part of a standard operating procedure (SOP) document) so that any new graduate students, post-docs, or collaborators can transition smoothly into the project.
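One way to make a naming convention stick is to encode it in a small helper that both builds and checks file names. The sketch below assumes a hypothetical pattern of `<project>_<YYYYMMDD>_<description>_v<NN>.<ext>`; the pattern, the function names, and the example project are illustrative only, and any convention your group documents could be substituted.

```python
import re
from datetime import date

# Hypothetical convention: <project>_<YYYYMMDD>_<description>_v<NN>.<ext>
NAME_PATTERN = re.compile(r"^[a-z0-9]+_\d{8}_[a-z0-9-]+_v\d{2}\.[a-z0-9]+$")

def build_name(project, day, description, version, ext):
    """Assemble a file name that follows the convention above."""
    # Lowercase the description and collapse anything non-alphanumeric to hyphens.
    slug = re.sub(r"[^a-z0-9]+", "-", description.lower()).strip("-")
    return f"{project.lower()}_{day:%Y%m%d}_{slug}_v{version:02d}.{ext}"

def follows_convention(name):
    """Check whether an existing file name matches the convention."""
    return NAME_PATTERN.match(name) is not None

name = build_name("soilsurvey", date(2024, 3, 5), "Site A raw counts", 2, "csv")
print(name)                                     # soilsurvey_20240305_site-a-raw-counts_v02.csv
print(follows_convention(name))                 # True
print(follows_convention("final_FINAL2.xlsx"))  # False
```

Putting the date in year-month-day order means that an alphabetical directory listing is also chronological.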

Plan and implement a back-up schedule onto shared storage in order to ensure that more than one copy of the data exists. Periodic file and/or directory clean-ups will help keep “publication quality” data safe and accessible.
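A backup is only useful if the copy matches the original. The following sketch copies a file and compares SHA-256 checksums of the source and the copy; the function names and the temporary paths are assumptions for the demonstration, and a real schedule would point at your group's shared storage and run from a scheduler.

```python
import hashlib
import shutil
import tempfile
from pathlib import Path

def sha256_of(path, chunk_size=1 << 20):
    """Compute the SHA-256 digest of a file, reading it in chunks."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()

def backup_and_verify(source, backup_dir):
    """Copy source into backup_dir and confirm the copy is bit-identical."""
    backup_dir = Path(backup_dir)
    backup_dir.mkdir(parents=True, exist_ok=True)
    target = backup_dir / Path(source).name
    shutil.copy2(source, target)  # copy2 also preserves timestamps where possible
    return sha256_of(source) == sha256_of(target)

# Demonstration with temporary files standing in for project data.
with tempfile.TemporaryDirectory() as tmp:
    original = Path(tmp) / "results.csv"
    original.write_text("id,value\n1,3.2\n")
    verified = backup_and_verify(original, Path(tmp) / "backup")
    print(verified)  # True
```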

Make it clear who is responsible for what. For example, assign a data manager who can check that backup clients are functional, monitor shared directories for clean-up or archiving maintenance, and follow up with project members as needed.

Decide where your data will go after the end of the project. Data that are associated with publications need to be preserved long-term, and so it’s good to decide early on where the data will be stored (e.g. a discipline or institutional repository) and when and how it will get there. Other data may need this level of preservation as well. PRDS can help you find places to store your data and provide advice about what kinds of data to plan to keep.

Data Management in Research: A Comprehensive Guide

Research Data Management (RDM) is an important part of any research project. Learn about RDM examples from University of Minnesota & how it helps researchers manage & share their research data.

Research data management (RDM) is a term that describes the organization, storage, preservation, and sharing of data collected and used in a research project. It involves the daily management of research data throughout the life of a research project, such as using consistent file naming conventions. Examples of data management plans (DMPs) can be found in universities, such as the University of Minnesota. These plans can be concise or detailed, depending on the type of data used (secondary or primary).

Research data can include video, sound, or text data, as long as it is used for systematic analysis. For example, a collection of video interviews used to collect and identify facial gestures and expressions in a study of emotional responses to stimuli would be considered research data. Making this data accessible to everyone in the group, even those who are not on the team but who are in the same discipline, can open up enormous opportunities to advance their own research. The Stata logging function can record all activities and store them in the relevant designated folders.

Qualitative data is subjective and exists only in relation to the observer (McLeod, 201). The Stanford Digital Repository (SDR) provides digital preservation, hosting and access services that allow researchers to preserve, manage and share research data in a secure environment for citation, access and long-term reuse. In addition, DRUM provides long-term preservation of digital data files for at least 10 years using services such as migration (limited format types), secure backups, bit-level checksums, and maintains persistent DOIs for data sets. The DMPTool includes data management plan templates along with a wealth of information and assistance to guide you through the process of creating a ready-to-use DMP for your specific research project and funding agency.

Some demographics may not be shareable on an individual level and would therefore only be provided in aggregate form. In accordance with DRUM policies, de-identified data will be accompanied by appropriate documentation, metadata, and code to facilitate reuse and provide the potential for interoperability with similar data sets. It is important to remember that you can generate data at any point in your research, but if you don't document it properly, it will become useless. For example, observation data should be recorded immediately to avoid data loss, while reference data is not as time-sensitive.

Research data management describes a way to organize and store the data that a research project has accumulated as efficiently as possible. Researchers' willingness to manage and share their data has been evolving under increasing pressure from government mandates, such as those of the National Institutes of Health, and from the data-sharing policies of major publishers. These now require researchers to share their data, and the processes used to collect it, if they want to continue receiving funding or have their articles published. Librarians have begun to provide a range of services in this area: teaching data management to researchers, working with individual researchers to improve their data management practices, creating thematic data management guides, and helping researchers meet the data requirements of funding agencies and publishers. Some operating systems also support embedding metadata directly in files, for example through Microsoft Document Properties.

What is Data Analysis? An Expert Guide With Examples

What is data analysis?

Data analysis is a comprehensive method of inspecting, cleansing, transforming, and modeling data to discover useful information, draw conclusions, and support decision-making. It is a multifaceted process involving various techniques and methodologies to interpret data from various sources in different formats, both structured and unstructured.

Data analysis is not just a mere process; it's a tool that empowers organizations to make informed decisions, predict trends, and improve operational efficiency. It's the backbone of strategic planning in businesses, governments, and other organizations.

Consider the example of a leading e-commerce company. Through data analysis, they can understand their customers' buying behavior, preferences, and patterns. They can then use this information to personalize customer experiences, forecast sales, and optimize marketing strategies, ultimately driving business growth and customer satisfaction.

The importance of data analysis in today's digital world

In the era of digital transformation, data analysis has become more critical than ever. The explosion of data generated by digital technologies has led to the advent of what we now call 'big data.' This vast amount of data, if analyzed correctly, can provide invaluable insights that can revolutionize businesses.

Data analysis is the key to unlocking the potential of big data. It helps organizations to make sense of this data, turning it into actionable insights. These insights can be used to improve products and services, enhance experiences, streamline operations, and increase profitability.

A good example is the healthcare industry. Through data analysis, healthcare providers can predict disease outbreaks, improve patient care, and make informed decisions about treatment strategies. Similarly, in the finance sector, data analysis can help in risk assessment, fraud detection, and investment decision-making.

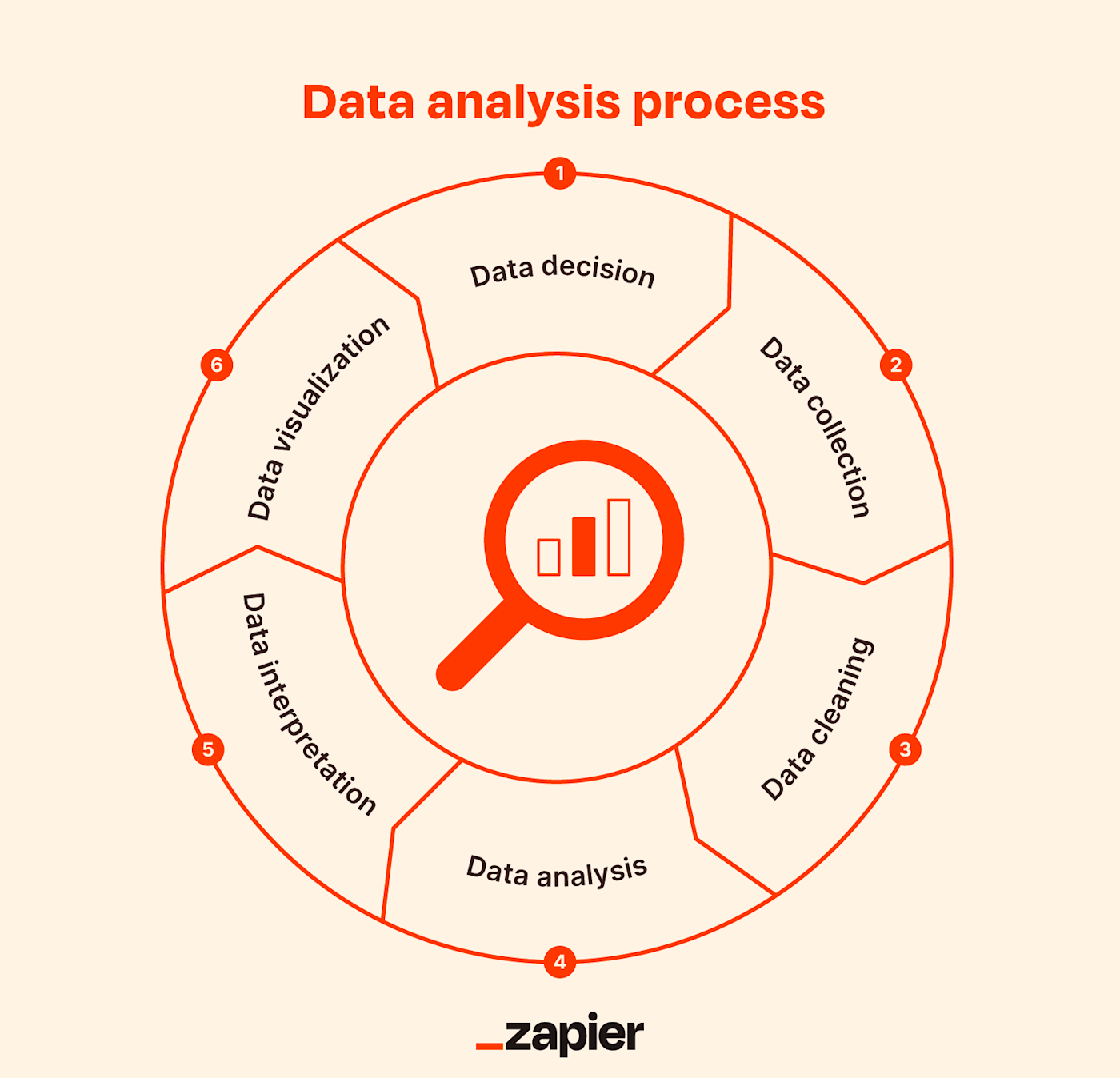

The Data Analysis Process: A Step-by-Step Guide

The process of data analysis is a systematic approach that involves several stages, each crucial to ensuring the accuracy and usefulness of the results. Here, we'll walk you through each step, from defining objectives to data storytelling. You can learn more about how businesses analyze data in a separate guide.

The data analysis process in a nutshell

Step 1: Defining objectives and questions

The first step in the data analysis process is to define the objectives and formulate clear, specific questions that your analysis aims to answer. This step is crucial as it sets the direction for the entire process. It involves understanding the problem or situation at hand, identifying the data needed to address it, and defining the metrics or indicators to measure the outcomes.

Step 2: Data collection

Once the objectives and questions are defined, the next step is to collect the relevant data. This can be done through various methods such as surveys, interviews, observations, or extracting from existing databases. The data collected can be quantitative (numerical) or qualitative (non-numerical), depending on the nature of the problem and the questions being asked.

Step 3: Data cleaning

Data cleaning, also known as data cleansing, is a critical step in the data analysis process. It involves checking the data for errors and inconsistencies, and correcting or removing them. This step ensures the quality and reliability of the data, which is crucial for obtaining accurate and meaningful results from the analysis.
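As a rough illustration of the checks described above, here is a minimal sketch using only the Python standard library. The records, field names, and the rule of dropping rows with an unusable age are all assumptions made for the example; a real project would choose its own rules (and might impute values rather than remove rows).

```python
# Hypothetical raw survey records; empty strings stand in for missing values.
raw_records = [
    {"id": 1, "age": "34", "city": " springfield "},
    {"id": 2, "age": "", "city": "Riverton"},       # missing age
    {"id": 2, "age": "", "city": "Riverton"},       # repeated id
    {"id": 3, "age": "abc", "city": "lakeside"},    # non-numeric age
    {"id": 4, "age": "51", "city": "Lakeside"},
]


def clean(records):
    """Drop repeated ids, normalize text, and keep only rows with a valid age."""
    seen_ids = set()
    cleaned = []
    for row in records:
        if row["id"] in seen_ids:
            continue  # skip rows whose id was already seen
        seen_ids.add(row["id"])
        age_text = row["age"].strip()
        if not age_text.isdigit():
            continue  # remove rows whose age is missing or not a number
        cleaned.append({
            "id": row["id"],
            "age": int(age_text),                   # convert text to a number
            "city": row["city"].strip().title(),    # consistent spacing and casing
        })
    return cleaned


cleaned_records = clean(raw_records)
print(cleaned_records)
```

Only the rows with ids 1 and 4 survive here, with the age stored as an integer and the city name normalized.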

Step 4: Data analysis

Once the data is cleaned, it's time for the actual analysis. This involves applying statistical or mathematical techniques to the data to discover patterns, relationships, or trends. There are various tools and software available for this purpose, such as Python, R, Excel, and specialized software like SPSS and SAS.

Step 5: Data interpretation and visualization

After the data is analyzed, the next step is to interpret the results and visualize them in a way that is easy to understand. This could involve creating charts, graphs, or other visual representations of the data. Data visualization helps to make complex data more understandable and provides a clear picture of the findings.

Step 6: Data storytelling

The final step in the data analysis process is data storytelling. This involves presenting the findings of the analysis in a narrative form that is engaging and easy to understand. Data storytelling is crucial for communicating the results to non-technical audiences and for making data-driven decisions.

The Types of Data Analysis

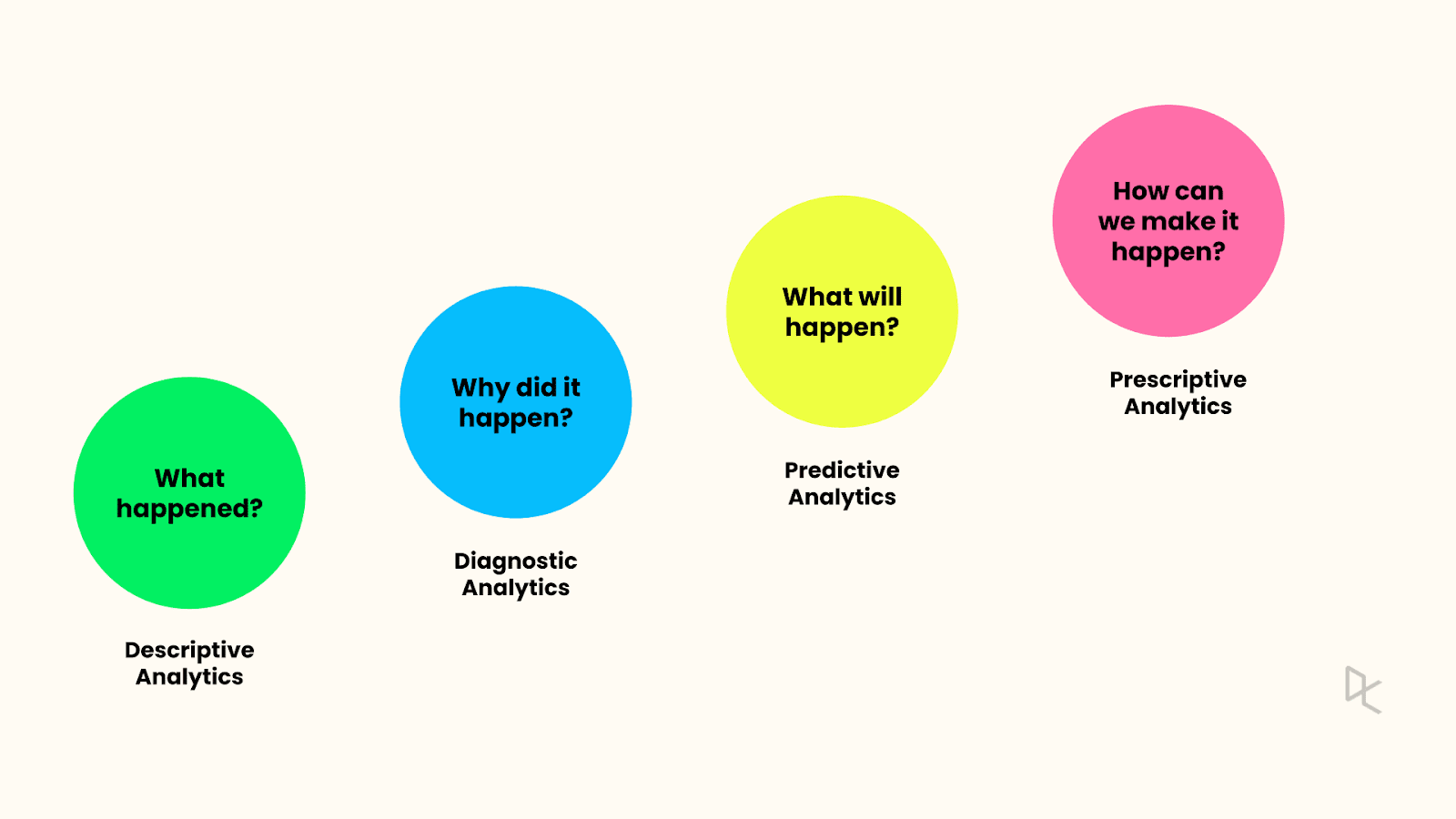

Data analysis can be categorized into four main types, each serving a unique purpose and providing different insights. These are descriptive, diagnostic, predictive, and prescriptive analyses.

The four types of analytics

Descriptive analysis

Descriptive analysis, as the name suggests, describes or summarizes raw data and makes it interpretable. It involves analyzing historical data to understand what has happened in the past.

This type of analysis is used to identify patterns and trends over time.

For example, a business might use descriptive analysis to understand the average monthly sales for the past year.
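The monthly sales example can be sketched in a few lines of Python. The sales figures below are made up for illustration; only the `statistics` module from the standard library is used.

```python
from statistics import mean, median

# Hypothetical sales totals for the past 12 months (January to December).
monthly_sales = [12000, 13500, 12800, 15000, 16200, 15800,
                 17100, 16900, 15400, 14800, 18200, 21000]

average_sales = mean(monthly_sales)      # typical month
median_sales = median(monthly_sales)     # middle value, less sensitive to outliers
best_month = monthly_sales.index(max(monthly_sales)) + 1  # 1 = January

print(f"Average: {average_sales}, median: {median_sales}, best month: {best_month}")
```

Summaries like these describe what happened; they do not explain why or predict what comes next.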

Diagnostic analysis

Diagnostic analysis goes a step further than descriptive analysis by determining why something happened. It involves more detailed data exploration and comparing different data sets to understand the cause of a particular outcome.

For instance, if a company's sales dropped in a particular month, diagnostic analysis could be used to find out why.
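One simple way to start a diagnostic analysis is to break the overall change down by segment and see where it is concentrated. The sketch below assumes hypothetical sales by region for two consecutive months; finding the segment with the largest drop is only a starting point for investigation, not proof of a cause.

```python
# Hypothetical sales by region for two consecutive months.
previous_month = {"North": 500, "South": 420, "East": 380}
current_month = {"North": 495, "South": 300, "East": 385}

# Change per region (negative means sales fell).
changes = {
    region: current_month[region] - previous_month[region]
    for region in previous_month
}

total_change = sum(changes.values())
largest_drop_region = min(changes, key=changes.get)

print(f"Total change: {total_change}")
print(f"Largest drop: {largest_drop_region} ({changes[largest_drop_region]})")
```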

Predictive analysis

Predictive analysis uses statistical models and forecasting techniques to estimate what is likely to happen in the future, based on data from the past. This type of analysis is often used in risk assessment, marketing, and sales forecasting.

For example, a company might use predictive analysis to forecast the next quarter's sales based on historical data.
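As one possible illustration of forecasting from historical data, the sketch below uses simple exponential smoothing, which is just one of many forecasting techniques and not necessarily the one a given company would choose. The quarterly figures and the smoothing factor `ALPHA` are assumptions for the example.

```python
# Hypothetical sales for the last four quarters.
quarterly_sales = [100.0, 110.0, 120.0, 130.0]
ALPHA = 0.5  # assumed smoothing factor: higher values weight recent quarters more


def exponential_smoothing_forecast(series, alpha):
    """Return a one-step-ahead forecast using simple exponential smoothing."""
    level = series[0]
    for value in series[1:]:
        # Blend the newest observation with the running smoothed level.
        level = alpha * value + (1 - alpha) * level
    return level


forecast = exponential_smoothing_forecast(quarterly_sales, ALPHA)
print(f"Forecast for next quarter: {forecast}")
```

Note that simple exponential smoothing lags behind a steady upward trend like this one, so a trend-aware model would likely forecast a higher value.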

Prescriptive analysis

Prescriptive analysis is the most advanced type of data analysis. It not only predicts future outcomes but also suggests actions to benefit from these predictions. It uses sophisticated tools and technologies like machine learning and artificial intelligence to recommend decisions.

For example, a prescriptive analysis might suggest the best marketing strategies to increase future sales.

Data Analysis Techniques

There are numerous techniques used in data analysis, each with its unique purpose and application. Here, we will discuss some of the most commonly used techniques, including exploratory analysis, regression analysis, Monte Carlo simulation, factor analysis, cohort analysis, cluster analysis, time series analysis, and sentiment analysis.

Exploratory analysis

Exploratory analysis is used to understand the main characteristics of a data set. It is often used at the beginning of a data analysis process to summarize the main aspects of the data, check for missing data, and test assumptions. This technique involves visual methods such as scatter plots, histograms, and box plots.

You can learn more about exploratory data analysis with our course, covering how to explore, visualize, and extract insights from data using Python.
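A first exploratory pass often looks something like the following sketch: count missing values, compute a few summary statistics, and look at the rough shape of the distribution. The data and the bin width of 5 are assumptions for illustration, and the text histogram stands in for the plots you would normally draw.

```python
from collections import Counter
from statistics import mean

# Hypothetical customer ages; None marks a missing value.
ages = [23, 25, None, 31, 22, 29, None, 35, 27, 24]

observed = [a for a in ages if a is not None]
missing_count = len(ages) - len(observed)

summary = {
    "count": len(observed),
    "mean": mean(observed),
    "min": min(observed),
    "max": max(observed),
}

# Group values into bins of width 5 (20-24, 25-29, ...) for a rough histogram.
histogram = Counter((a // 5) * 5 for a in observed)

print(f"Missing values: {missing_count}")
print(summary)
for bin_start in sorted(histogram):
    print(f"{bin_start}-{bin_start + 4}: {'#' * histogram[bin_start]}")
```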

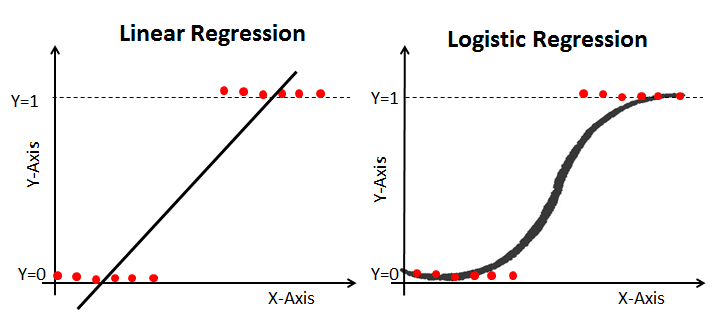

Regression analysis

Regression analysis is a statistical method used to understand the relationship between a dependent variable and one or more independent variables. It is commonly used for forecasting, time series modeling, and exploring cause-and-effect relationships between variables.

We have a tutorial exploring the essentials of linear regression, which is one of the most widely used regression algorithms in areas like machine learning.
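To make the idea concrete, here is a minimal sketch of simple linear regression (one independent variable) fitted by ordinary least squares in plain Python. The advertising and sales numbers are invented for the example, and the helper name `fit_line` is hypothetical.

```python
# Hypothetical data: advertising spend (independent) and sales (dependent).
ad_spend = [1.0, 2.0, 3.0, 4.0, 5.0]
sales = [2.1, 4.0, 6.2, 7.9, 10.1]


def fit_line(x, y):
    """Fit y = intercept + slope * x by ordinary least squares."""
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    # Slope = covariance of x and y divided by the variance of x.
    s_xy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    s_xx = sum((xi - mean_x) ** 2 for xi in x)
    slope = s_xy / s_xx
    intercept = mean_y - slope * mean_x
    return slope, intercept


slope, intercept = fit_line(ad_spend, sales)
predicted_sales = intercept + slope * 6.0  # prediction for a spend of 6.0

print(f"slope={slope:.2f}, intercept={intercept:.2f}, prediction={predicted_sales:.2f}")
```

The slope estimates how much the dependent variable changes for each one-unit change in the independent variable. A good fit on its own does not establish that one variable causes the other.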

Linear and logistic regression

Factor analysis

Factor analysis is a technique used to reduce a large number of variables into fewer factors. The factors are constructed in such a way that they capture the maximum possible information from the original variables. This technique is often used in market research, customer segmentation, and image recognition.

Learn more about factor analysis in R with our course, which explores latent variables, such as personality, using exploratory and confirmatory factor analyses.

Monte Carlo simulation

Monte Carlo simulation is a technique that uses probability distributions and random sampling to estimate numerical results. It is often used in risk analysis and decision-making where there is significant uncertainty.

We have a tutorial that explores Monte Carlo methods in R, as well as a course on Monte Carlo simulations in Python, which can estimate a range of outcomes for uncertain events.
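The sketch below shows the basic pattern of a Monte Carlo simulation: describe each uncertain input with a probability distribution, sample many times, and summarize the results. The tasks, their triangular duration ranges, and the deadline are all assumptions for illustration.

```python
import random

# Hypothetical task durations in days as (low, high, most_likely).
TASKS = {"design": (2, 6, 3), "build": (4, 10, 5), "test": (1, 4, 2)}
DEADLINE_DAYS = 14
TRIALS = 20_000

rng = random.Random(42)  # fixed seed so repeated runs give the same estimate

totals = []
for _ in range(TRIALS):
    # Draw one random duration per task and add them up for this trial.
    total = sum(rng.triangular(low, high, mode) for low, high, mode in TASKS.values())
    totals.append(total)

mean_total = sum(totals) / TRIALS
prob_late = sum(t > DEADLINE_DAYS for t in totals) / TRIALS

print(f"Average project length: {mean_total:.2f} days")
print(f"Estimated chance of missing the deadline: {prob_late:.1%}")
```

Increasing `TRIALS` makes the estimates more stable, at the cost of more computation.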

Example of a Monte Carlo simulation

Cluster analysis

Cluster analysis is a technique used to group a set of objects in such a way that objects in the same group (called a cluster) are more similar to each other than to those in other groups. It is often used in market segmentation, image segmentation, and recommendation systems.

You can explore a range of clustering techniques, including hierarchical clustering and k-means clustering, in our Cluster Analysis in R course.
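As a small illustration of k-means clustering, the sketch below groups customers by a single number (annual spend). Real use cases usually involve several features and a library implementation; the data, the starting centers, and the function name `kmeans_1d` are assumptions for this example.

```python
# Hypothetical annual spend per customer.
annual_spend = [120, 150, 130, 900, 950, 1000, 140, 980]


def kmeans_1d(values, centers, iterations=10):
    """Very small k-means for one-dimensional data."""
    centers = list(centers)
    clusters = [[] for _ in centers]
    for _ in range(iterations):
        # Assignment step: put each value in the cluster with the nearest center.
        clusters = [[] for _ in centers]
        for value in values:
            nearest = min(range(len(centers)), key=lambda i: abs(value - centers[i]))
            clusters[nearest].append(value)
        # Update step: move each center to the mean of its cluster.
        centers = [
            sum(cluster) / len(cluster) if cluster else centers[i]
            for i, cluster in enumerate(clusters)
        ]
    return centers, clusters


centers, clusters = kmeans_1d(annual_spend, centers=[100, 1000])
print(centers)   # one center per group
print(clusters)  # low spenders and high spenders
```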

Cohort analysis

Cohort analysis is a subset of behavioral analytics that takes data from a given dataset and groups it into related groups for analysis. These related groups, or cohorts, usually share common characteristics within a defined time span. This technique is often used in marketing, user engagement, and customer lifecycle analysis.

Our course, Customer Segmentation in Python, explores a range of techniques for segmenting and analyzing customer data, including cohort analysis.
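A common form of cohort analysis groups users by signup month and tracks what share of each cohort is still active in later months. The sketch below assumes a hypothetical list of users with their signup month and the months in which they were active.

```python
from collections import defaultdict

# Hypothetical data: (user_id, signup_month, months in which the user was active).
users = [
    ("u1", "2024-01", {"2024-01", "2024-02", "2024-03"}),
    ("u2", "2024-01", {"2024-01", "2024-02"}),
    ("u3", "2024-01", {"2024-01"}),
    ("u4", "2024-02", {"2024-02", "2024-03"}),
    ("u5", "2024-02", {"2024-02"}),
]
months = ["2024-01", "2024-02", "2024-03"]

# Group users into cohorts by signup month.
cohorts = defaultdict(list)
for user_id, signup_month, active_months in users:
    cohorts[signup_month].append(active_months)

# For each cohort, compute the share of members active in each month since signup.
retention = {}
for signup_month, members in cohorts.items():
    later_months = [m for m in months if m >= signup_month]
    retention[signup_month] = [
        sum(month in active for active in members) / len(members)
        for month in later_months
    ]

for signup_month, rates in retention.items():
    print(signup_month, [f"{rate:.0%}" for rate in rates])
```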

Graph showing an example of cohort analysis

Time series analysis

Time series analysis is a statistical technique that deals with time series data, that is, data points recorded in time order. It is used to analyze the sequence of data points to extract trends, meaningful statistics, and other characteristics of the data. This technique is often used in sales forecasting, economic forecasting, and weather forecasting.

Our Time Series with Python skill track takes you through how to manipulate and analyze time series data, working with a variety of Python libraries.
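One of the simplest time series techniques is a moving average, which smooths short-term fluctuations so the underlying trend is easier to see. The weekly figures and the window size of 3 below are assumptions for illustration.

```python
# Hypothetical weekly sales, in time order.
weekly_sales = [10, 12, 14, 13, 15, 18, 20]
WINDOW = 3  # number of consecutive points averaged together


def moving_average(series, window):
    """Return the average of each run of `window` consecutive values."""
    return [
        sum(series[i:i + window]) / window
        for i in range(len(series) - window + 1)
    ]


smoothed = moving_average(weekly_sales, WINDOW)
print([round(value, 2) for value in smoothed])
```

The smoothed series is shorter than the original because the first full window only becomes available at the third data point.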

Sentiment analysis

Sentiment analysis, also known as opinion mining, uses natural language processing, text analysis, and computational linguistics to identify and extract subjective information from source materials. It is often used in social media monitoring, brand monitoring, and understanding customer feedback.

To get familiar with sentiment analysis in Python, you can take our online course, which will teach you how to perform an end-to-end sentiment analysis.
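To give a feel for the idea, here is a deliberately simple lexicon-based sketch that counts positive and negative words. The word lists are tiny and invented for the example. Production sentiment analysis typically relies on trained models or much larger lexicons, and this toy version cannot handle negation or sarcasm.

```python
# Tiny hypothetical word lists, for illustration only.
POSITIVE_WORDS = {"great", "love", "excellent", "good", "happy"}
NEGATIVE_WORDS = {"bad", "terrible", "hate", "poor", "slow"}


def sentiment(text):
    """Label text as positive, negative, or neutral by counting lexicon words."""
    words = [word.strip(".,!?").lower() for word in text.split()]
    score = sum(w in POSITIVE_WORDS for w in words) - sum(w in NEGATIVE_WORDS for w in words)
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"


reviews = [
    "I love this product, it is excellent!",
    "Terrible support and slow delivery.",
    "The package arrived on Tuesday.",
]
labels = [sentiment(review) for review in reviews]
print(labels)
```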

Data Analysis Tools

In the realm of data analysis, various tools are available that cater to different needs, complexities, and levels of expertise. These tools range from programming languages like Python and R to visualization software like Power BI and Tableau. Let's delve into some of these tools.

Python is a high-level, general-purpose programming language that has become a favorite among data analysts and data scientists. Its simplicity and readability, coupled with a wide range of libraries like pandas, NumPy, and Matplotlib, make it an excellent tool for data analysis and data visualization.

Resources to get you started

- You can start learning Python today with our Python Fundamentals skill track, which covers all the foundational skills you need to understand the language.

- You can also take our Data Analyst with Python career track to start your journey to becoming a data analyst.

- Check out our Python for beginners cheat sheet as a handy reference guide.

R is a programming language and free software environment specifically designed for statistical computing and graphics. It is widely used among statisticians and data miners for developing statistical software and data analysis. R provides a wide variety of statistical and graphical techniques, including linear and nonlinear modeling, classical statistical tests, time-series analysis, and more.

- Our R Programming skill track will introduce you to R and help you develop the skills you’ll need to start coding in R.

- With the Data Analyst with R career track, you’ll gain the skills you need to start your journey to becoming a data analyst.

- Our Getting Started with R cheat sheet helps give an overview of how to start learning R Programming.

SQL (Structured Query Language) is the standard language for querying and managing data in relational databases. It is essential for tasks that involve retrieving, filtering, aggregating, or updating data stored in those databases.

- To get familiar with SQL, consider taking our SQL Fundamentals skill track, where you’ll learn how to interact with and query your data.

- SQL for Business Analysts will boost your business SQL skills.

- Our SQL Basics cheat sheet covers a list of functions for querying data, filtering data, aggregation, and more.
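The kind of querying, filtering, and aggregation mentioned above can be tried without installing a database server by using Python's built-in `sqlite3` module. The `orders` table and its rows are hypothetical, and SQL dialects differ slightly between database systems.

```python
import sqlite3

# In-memory SQLite database with a small hypothetical orders table.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, region TEXT, amount REAL)")
conn.executemany(
    "INSERT INTO orders (region, amount) VALUES (?, ?)",
    [("North", 120.0), ("South", 80.0), ("North", 200.0), ("South", 50.0), ("East", 75.0)],
)

# Filter rows, group them by region, and aggregate within each group.
query = """
    SELECT region, COUNT(*) AS order_count, SUM(amount) AS total_amount
    FROM orders
    WHERE amount >= 75
    GROUP BY region
    ORDER BY total_amount DESC
"""
rows = conn.execute(query).fetchall()
conn.close()

for region, order_count, total_amount in rows:
    print(region, order_count, total_amount)
```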

Power BI is a business analytics tool developed by Microsoft. It provides interactive visualizations with self-service business intelligence capabilities. Power BI is used to transform raw data into meaningful insights through easy-to-understand dashboards and reports.

- Explore the power of Power BI with our Power BI Fundamentals skill track, where you’ll learn to get the most from the business intelligence tool.

- With Exploratory Data Analysis in Power BI you’ll learn how to enhance your reports with EDA.

- We have a Power BI cheat sheet which covers many of the basics you’ll need to get started.

Tableau is a powerful data visualization tool used in the Business Intelligence industry. It allows you to create interactive and shareable dashboards, which depict trends, variations, and density of the data in the form of charts and graphs.

- The Tableau Fundamentals skill track will introduce you to the business intelligence tool and how you can use it to clean, analyze, and visualize data.

- Analyzing Data in Tableau will give you some of the advanced skills needed to improve your analytics and visualizations.

- Check out our Tableau cheat sheet, which runs you through the essentials of how to get started using the tool.

Microsoft Excel is one of the most widely used tools for data analysis. It offers a range of features for data manipulation, statistical analysis, and visualization. Excel's simplicity and versatility make it a great tool for both simple and complex data analysis tasks.

- Check out our Data Analysis in Excel course to build functional skills in Excel.

- For spreadsheet skills in general, check out Marketing Analytics in Spreadsheets.

- The Excel Basics cheat sheet covers many of the basic formulas and operations you’ll need to make a start.

Understanding the Impact of Data Analysis

Data analysis, whether on a small or large scale, can have a profound impact on business performance. It can drive significant changes, leading to improved efficiency, increased profitability, and a deeper understanding of market trends and customer behavior.

Informed decision-making

Data analysis allows businesses to make informed decisions based on facts, figures, and trends, rather than relying on guesswork or intuition. It provides a solid foundation for strategic planning and policy-making, ensuring that resources are allocated effectively and that efforts are directed towards areas that will yield the most benefit.

Impact on small businesses

For small businesses, even simple data analysis can lead to significant improvements. For example, analyzing sales data can help identify which products are performing well and which are not. This information can then be used to adjust marketing strategies, pricing, and inventory management, leading to increased sales and profitability.

Impact on large businesses