What Is Regression Analysis in Business Analytics?

- 14 Dec 2021

Countless factors impact every facet of business. How can you consider those factors and know their true impact?

Imagine you seek to understand the factors that influence people’s decision to buy your company’s product. They range from customers’ physical locations to satisfaction levels among sales representatives to your competitors' Black Friday sales.

Understanding the relationships between each factor and product sales can enable you to pinpoint areas for improvement, helping you drive more sales.

To learn how each factor influences sales, you need to use a statistical analysis method called regression analysis.

If you aren’t a business or data analyst, you may not run regressions yourself, but knowing how the analysis works can provide important insight into which factors impact product sales and, thus, which are worth improving.


Foundational Concepts for Regression Analysis

Before diving into regression analysis, you need to build foundational knowledge of statistical concepts and relationships.

Independent and Dependent Variables

Start with the basics. What relationship are you aiming to explore? Try formatting your answer like this: “I want to understand the impact of [the independent variable] on [the dependent variable].”

The independent variable is the factor that could impact the dependent variable. For example, “I want to understand the impact of employee satisfaction on product sales.”

In this case, employee satisfaction is the independent variable, and product sales is the dependent variable. Identifying the dependent and independent variables is the first step toward regression analysis.

Correlation vs. Causation

One of the cardinal rules of statistically exploring relationships is to never assume correlation implies causation. In other words, just because two variables move in the same direction doesn’t mean one caused the other to occur.

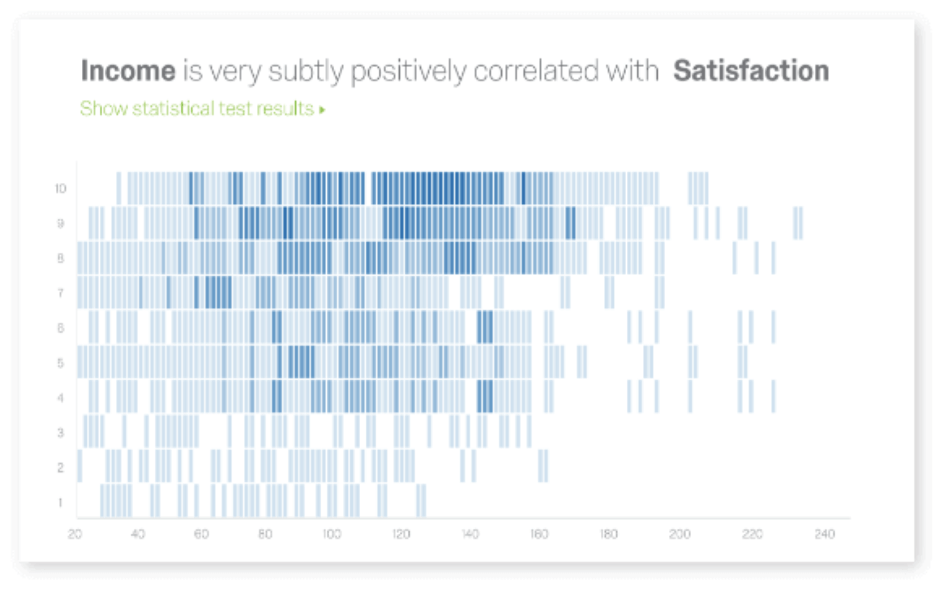

If two or more variables are correlated, their directional movements are related. If two variables are positively correlated, it means that as one goes up or down, so does the other. Alternatively, if two variables are negatively correlated, one goes up while the other goes down.

A correlation’s strength can be quantified by calculating the correlation coefficient, sometimes represented by r. The correlation coefficient falls between negative one and positive one.

r = -1 indicates a perfect negative correlation.

r = 1 indicates a perfect positive correlation.

r = 0 indicates no correlation.
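As a rough illustration of how r is calculated, the sketch below computes the Pearson correlation coefficient in plain Python. The function name `pearson_r` and the satisfaction and sales figures are hypothetical, chosen only to show the two extreme cases.

```python
import math

def pearson_r(xs, ys):
    """Return the Pearson correlation coefficient of two equal-length samples."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two equal-length samples with at least 2 points")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    # Sum of cross-deviations and sums of squared deviations from the means
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    if sxx == 0 or syy == 0:
        raise ValueError("r is undefined when one variable is constant")
    return sxy / math.sqrt(sxx * syy)

# Hypothetical data: satisfaction scores and two made-up sales series
satisfaction = [1, 2, 3, 4, 5]
sales_up = [10, 20, 30, 40, 50]    # rises with satisfaction
sales_down = [50, 40, 30, 20, 10]  # falls as satisfaction rises

r_pos = pearson_r(satisfaction, sales_up)    # perfect positive correlation
r_neg = pearson_r(satisfaction, sales_down)  # perfect negative correlation
```

Real business data rarely falls on a perfect line, so values strictly between -1 and 1 are the norm.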

Causation means that one variable caused the other to occur. Proving a causal relationship between variables requires a true experiment with a control group (which doesn’t receive the independent variable) and an experimental group (which receives the independent variable).

While regression analysis provides insights into relationships between variables, it doesn’t prove causation. It can be tempting to assume that one variable caused the other—especially if you want it to be true—which is why you need to keep this in mind any time you run regressions or analyze relationships between variables.

With the basics under your belt, here’s a deeper explanation of regression analysis so you can leverage it to drive strategic planning and decision-making.


What Is Regression Analysis?

Regression analysis is the statistical method used to determine the structure of a relationship between two variables (single linear regression) or three or more variables (multiple regression).

According to the Harvard Business School Online course Business Analytics, regression is used for two primary purposes:

- To study the magnitude and structure of the relationship between variables

- To forecast a variable based on its relationship with another variable

Both of these insights can inform strategic business decisions.

“Regression allows us to gain insights into the structure of that relationship and provides measures of how well the data fit that relationship,” says HBS Professor Jan Hammond, who teaches Business Analytics, one of three courses that comprise the Credential of Readiness (CORe) program. “Such insights can prove extremely valuable for analyzing historical trends and developing forecasts.”

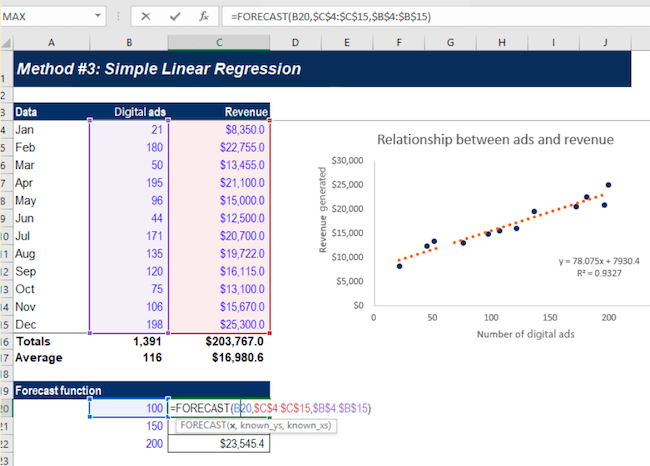

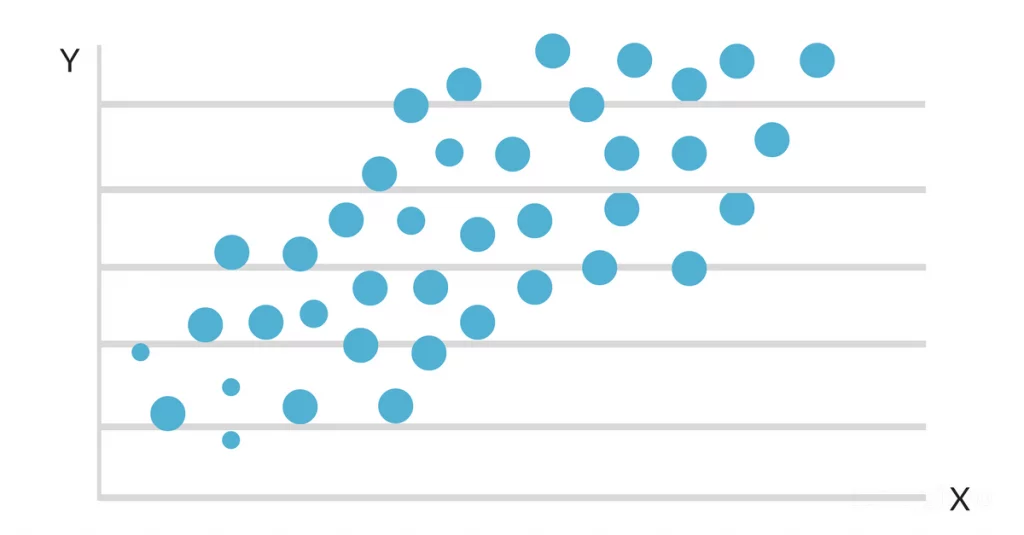

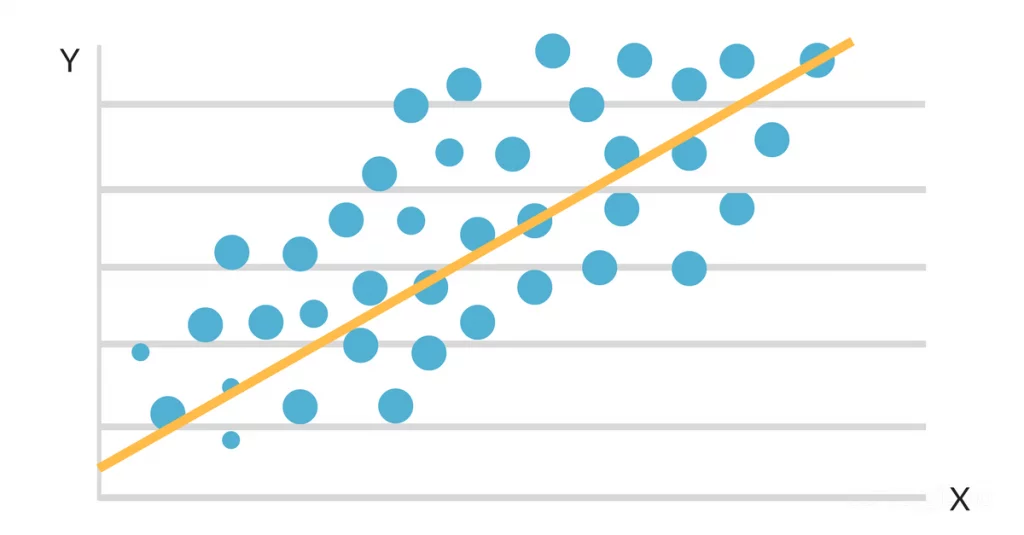

One way to think of regression is by visualizing a scatter plot of your data with the independent variable on the X-axis and the dependent variable on the Y-axis. The regression line is the line that best fits the scatter plot data. The regression equation represents the line’s slope and the relationship between the two variables, along with an estimation of error.

Physically creating this scatter plot can be a natural starting point for parsing out the relationships between variables.

Types of Regression Analysis

There are two types of regression analysis: single variable linear regression and multiple regression.

Single variable linear regression is used to determine the relationship between two variables: the independent and dependent. The equation for a single variable linear regression looks like this:

Y = α + βx + ε, where the fitted line is ŷ = α + βx

In the equation:

- ŷ is the expected value of Y (the dependent variable) for a given value of X (the independent variable).

- x is the independent variable.

- α is the Y-intercept, the point at which the regression line intersects with the vertical axis.

- β is the slope of the regression line, or the average change in the dependent variable as the independent variable increases by one.

- ε is the error term, equal to Y – ŷ, or the difference between the actual value of the dependent variable and its expected value.
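To make the roles of α, β, and ε concrete, here is a minimal sketch that estimates the intercept and slope by ordinary least squares and then computes the error for each observation. The function name `fit_simple_regression` and the data are assumptions made for illustration, not taken from the course.

```python
def fit_simple_regression(xs, ys):
    """Estimate (alpha, beta) for y = alpha + beta * x by least squares."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    beta = sxy / sxx                 # slope: average change in y per unit of x
    alpha = mean_y - beta * mean_x   # intercept: where the line crosses the Y-axis
    return alpha, beta

# Hypothetical data: employee satisfaction (x) and product sales (y)
xs = [1, 2, 3, 4, 5]
ys = [2.9, 5.2, 6.8, 9.1, 11.0]

alpha, beta = fit_simple_regression(xs, ys)
y_hat = [alpha + beta * x for x in xs]          # expected values on the line
errors = [y - yh for y, yh in zip(ys, y_hat)]   # Y minus y-hat for each point
```

With an intercept in the model, the least squares errors sum to zero, which is a quick sanity check on any fitted line.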

Multiple regression, on the other hand, is used to determine the relationship between three or more variables: the dependent variable and at least two independent variables. The multiple regression equation looks complex but is similar to the single variable linear regression equation:

Y = α + β1x1 + β2x2 + … + βkxk + ε

Each component of this equation represents the same thing as in the previous equation, with the addition of the subscript k, which is the total number of independent variables being examined. For each independent variable you include in the regression, multiply the slope of the regression line by the value of the independent variable, and add it to the rest of the equation.
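The sketch below shows one way to estimate α and the k slopes at once, by solving the least squares normal equations with Gaussian elimination in plain Python. The helper names and the data are hypothetical; statistical packages typically use more numerically robust routines.

```python
def solve_linear_system(a, b):
    """Solve a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    # Work on an augmented copy so the inputs are not mutated
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("singular system: predictors may be collinear")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    # Back substitution
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        s = sum(m[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (m[i][n] - s) / m[i][i]
    return x

def fit_multiple_regression(rows, ys):
    """rows holds [x1, ..., xk] per observation; returns [alpha, beta1, ..., betak]."""
    design = [[1.0] + list(r) for r in rows]  # leading 1 carries the intercept
    p = len(design[0])
    xtx = [[sum(d[i] * d[j] for d in design) for j in range(p)] for i in range(p)]
    xty = [sum(d[i] * y for d, y in zip(design, ys)) for i in range(p)]
    return solve_linear_system(xtx, xty)

# Hypothetical data generated from y = 1 + 2*x1 + 3*x2 with no noise
rows = [[1, 2], [2, 1], [3, 4], [4, 3], [5, 5]]
ys = [1 + 2 * x1 + 3 * x2 for x1, x2 in rows]

coefficients = fit_multiple_regression(rows, ys)  # close to [1, 2, 3]
```

Because the data were generated without noise, the fit recovers the generating coefficients up to floating-point error; with real data the estimates would only approximate the underlying relationship.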

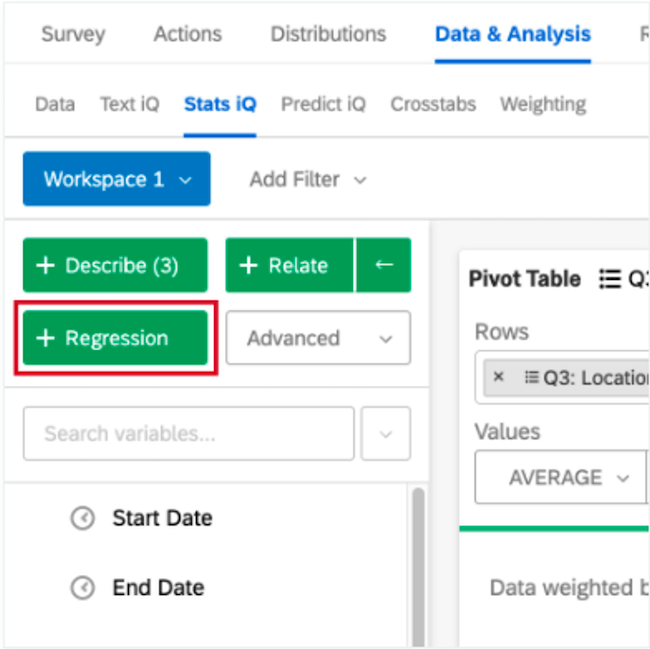

How to Run Regressions

You can use a host of statistical programs—such as Microsoft Excel, SPSS, and STATA—to run both single variable linear and multiple regressions. If you’re interested in hands-on practice with this skill, Business Analytics teaches learners how to create scatter plots and run regressions in Microsoft Excel, as well as make sense of the output and use it to drive business decisions.

Calculating Confidence and Accounting for Error

It’s important to note: This overview of regression analysis is introductory and doesn’t delve into calculations of confidence level, significance, variance, and error. When working in a statistical program, these calculations may be provided or require that you implement a function. When conducting regression analysis, these metrics are important for gauging how significant your results are and how much importance to place on them.

Why Use Regression Analysis?

Once you’ve generated a regression equation for a set of variables, you effectively have a roadmap for the relationship between your independent and dependent variables. If you input a specific X value into the equation, you can see the expected Y value.

This can be critical for predicting the outcome of potential changes, allowing you to ask, “What would happen if this factor changed by a specific amount?”

Returning to the earlier example, running a regression analysis could allow you to find the equation representing the relationship between employee satisfaction and product sales. You could input a higher level of employee satisfaction and see how sales might change accordingly. This information could lead to improved working conditions for employees, backed by data that shows the tie between high employee satisfaction and sales.

Whether predicting future outcomes, determining areas for improvement, or identifying relationships between seemingly unconnected variables, understanding regression analysis can enable you to craft data-driven strategies and determine the best course of action with all factors in mind.

Do you want to become a data-driven professional? Explore our eight-week Business Analytics course and our three-course Credential of Readiness (CORe) program to deepen your analytical skills and apply them to real-world business problems.


A Refresher on Regression Analysis

Understanding one of the most important types of data analysis.

You probably know by now that whenever possible you should be making data-driven decisions at work. But do you know how to parse through all the data available to you? The good news is that you probably don’t need to do the number crunching yourself (hallelujah!), but you do need to correctly understand and interpret the analysis created by your colleagues. One of the most important types of data analysis is called regression analysis.

- Amy Gallo is a contributing editor at Harvard Business Review, cohost of the Women at Work podcast, and the author of two books: Getting Along: How to Work with Anyone (Even Difficult People) and the HBR Guide to Dealing with Conflict. She writes and speaks about workplace dynamics. Watch her TEDx talk on conflict and follow her on LinkedIn.

Regression Analysis – Methods, Types and Examples

Regression Analysis

Regression analysis is a set of statistical processes for estimating the relationships among variables. It includes many techniques for modeling and analyzing several variables when the focus is on the relationship between a dependent variable and one or more independent variables (or ‘predictors’).

Regression Analysis Methodology

Here is a general methodology for performing regression analysis:

- Define the research question: Clearly state the research question or hypothesis you want to investigate. Identify the dependent variable (also called the response variable or outcome variable) and the independent variables (also called predictor variables or explanatory variables) that you believe are related to the dependent variable.

- Collect data: Gather the data for the dependent variable and independent variables. Ensure that the data is relevant, accurate, and representative of the population or phenomenon you are studying.

- Explore the data: Perform exploratory data analysis to understand the characteristics of the data, identify any missing values or outliers, and assess the relationships between variables through scatter plots, histograms, or summary statistics.

- Choose the regression model: Select an appropriate regression model based on the nature of the variables and the research question. Common regression models include linear regression, multiple regression, logistic regression, polynomial regression, and time series regression, among others.

- Assess assumptions: Check the assumptions of the regression model. Some common assumptions include linearity (the relationship between variables is linear), independence of errors, homoscedasticity (constant variance of errors), and normality of errors. Violation of these assumptions may require additional steps or alternative models.

- Estimate the model: Use a suitable method to estimate the parameters of the regression model. The most common method is ordinary least squares (OLS), which minimizes the sum of squared differences between the observed and predicted values of the dependent variable.

- Interpret the results: Analyze the estimated coefficients, p-values, confidence intervals, and goodness-of-fit measures (e.g., R-squared) to interpret the results. Determine the significance and direction of the relationships between the independent variables and the dependent variable.

- Evaluate model performance: Assess the overall performance of the regression model using appropriate measures, such as R-squared, adjusted R-squared, and root mean squared error (RMSE). These measures indicate how well the model fits the data and how much of the variation in the dependent variable is explained by the independent variables.

- Test assumptions and diagnose problems: Check the residuals (the differences between observed and predicted values) for any patterns or deviations from assumptions. Conduct diagnostic tests, such as examining residual plots, testing for multicollinearity among independent variables, and assessing heteroscedasticity or autocorrelation, if applicable.

- Make predictions and draw conclusions: Once you have a satisfactory model, use it to make predictions on new or unseen data. Draw conclusions based on the results of the analysis, considering the limitations and potential implications of the findings.
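The model evaluation step in the methodology above can be sketched in a few lines. The functions below compute R-squared, adjusted R-squared, and RMSE from observed and predicted values; the function names and numbers are hypothetical, and RMSE is computed here by dividing by n, whereas some software divides by the residual degrees of freedom instead.

```python
import math

def regression_metrics(actual, predicted):
    """Return (r_squared, rmse) for observed versus predicted values."""
    n = len(actual)
    mean_y = sum(actual) / n
    residuals = [y - p for y, p in zip(actual, predicted)]
    ss_res = sum(e ** 2 for e in residuals)            # unexplained variation
    ss_tot = sum((y - mean_y) ** 2 for y in actual)    # total variation
    r_squared = 1 - ss_res / ss_tot
    rmse = math.sqrt(ss_res / n)
    return r_squared, rmse

def adjusted_r_squared(r_squared, n, k):
    """Penalize R-squared for the number of predictors k given n observations."""
    return 1 - (1 - r_squared) * (n - 1) / (n - k - 1)

# Hypothetical observed values and predictions from a one-predictor model
actual = [3, 5, 7, 9]
predicted = [2, 6, 6, 10]

r2, rmse = regression_metrics(actual, predicted)
adj_r2 = adjusted_r_squared(r2, n=len(actual), k=1)
```

Here the model explains 80 percent of the variation in the observed values, and adjusted R-squared is lower because it accounts for the predictor used to obtain that fit.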

Types of Regression Analysis

Types of Regression Analysis are as follows:

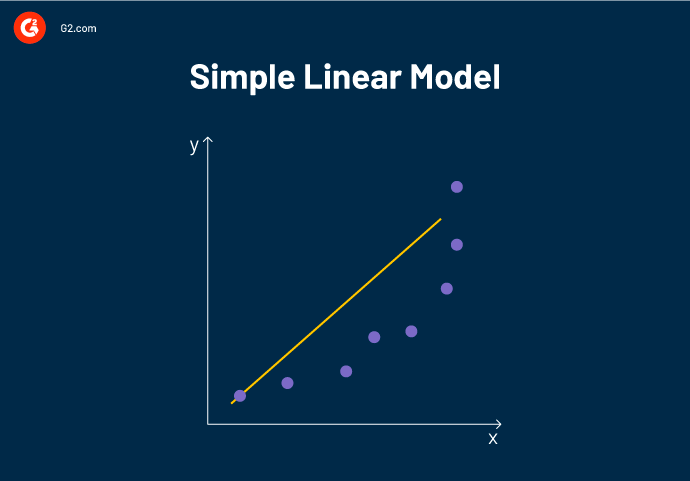

Linear Regression

Linear regression is the most basic and widely used form of regression analysis. It models the linear relationship between a dependent variable and one or more independent variables. The goal is to find the best-fitting line that minimizes the sum of squared differences between observed and predicted values.

Multiple Regression

Multiple regression extends linear regression by incorporating two or more independent variables to predict the dependent variable. It allows for examining the simultaneous effects of multiple predictors on the outcome variable.

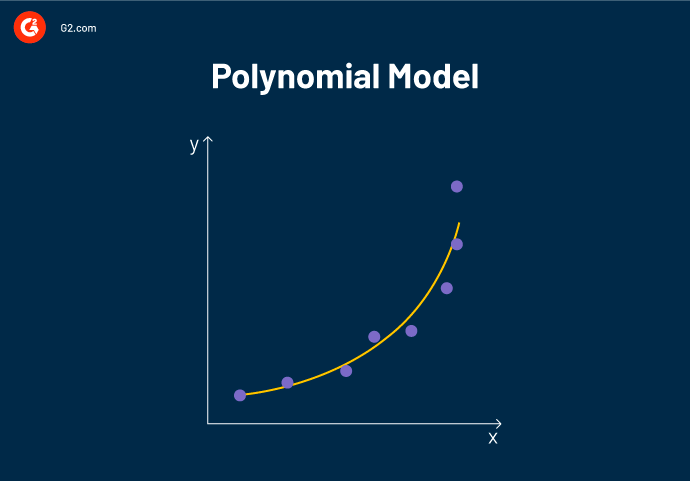

Polynomial Regression

Polynomial regression models non-linear relationships between variables by adding polynomial terms (e.g., squared or cubic terms) to the regression equation. It can capture curved or nonlinear patterns in the data.

Logistic Regression

Logistic regression is used when the dependent variable is binary or categorical. It models the probability of the occurrence of a certain event or outcome based on the independent variables. Logistic regression estimates the coefficients using the logistic function, which transforms the linear combination of predictors into a probability.

Ridge Regression and Lasso Regression

Ridge regression and Lasso regression are techniques used for addressing multicollinearity (high correlation between independent variables) and variable selection. Both methods introduce a penalty term to the regression equation to shrink or eliminate less important variables. Ridge regression uses L2 regularization, while Lasso regression uses L1 regularization.
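To see how the two penalties behave, consider the simplest possible case: one predictor, an unpenalized intercept, and a penalty weight `lam` added to the sum of squared errors. Under those assumptions both estimators have closed forms, sketched below with hypothetical function names and data. Ridge shrinks the slope toward zero, while Lasso can set it to exactly zero.

```python
def _centered_sums(xs, ys):
    """Return (Sxx, Sxy): squared and cross deviations from the means."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    return sxx, sxy

def ridge_slope(xs, ys, lam):
    """Slope minimizing squared error + lam * slope**2 (L2 penalty)."""
    sxx, sxy = _centered_sums(xs, ys)
    return sxy / (sxx + lam)

def lasso_slope(xs, ys, lam):
    """Slope minimizing squared error + lam * |slope| (L1 penalty)."""
    sxx, sxy = _centered_sums(xs, ys)
    shrunk = max(abs(sxy) - lam / 2.0, 0.0)  # soft-thresholding step
    sign = 1 if sxy >= 0 else -1
    return sign * shrunk / sxx

# Hypothetical data with an unpenalized least squares slope of about 2.01
xs = [1, 2, 3, 4, 5]
ys = [2.9, 5.2, 6.8, 9.1, 11.0]

ols = ridge_slope(xs, ys, 0.0)            # no penalty: ordinary least squares
ridge_10 = ridge_slope(xs, ys, 10.0)      # shrunk, but still nonzero
lasso_10 = lasso_slope(xs, ys, 10.0)      # shrunk by a fixed amount
lasso_50 = lasso_slope(xs, ys, 50.0)      # penalty large enough to zero it out
```

With several predictors there is no such simple formula for Lasso, and libraries rely on iterative solvers, but the shrink-versus-eliminate contrast carries over.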

Time Series Regression

Time series regression analyzes the relationship between a dependent variable and independent variables when the data is collected over time. It accounts for autocorrelation and trends in the data and is used in forecasting and studying temporal relationships.

Nonlinear Regression

Nonlinear regression models are used when the relationship between the dependent variable and independent variables is not linear. These models can take various functional forms and require estimation techniques different from those used in linear regression.

Poisson Regression

Poisson regression is employed when the dependent variable represents count data. It models the relationship between the independent variables and the expected count, assuming a Poisson distribution for the dependent variable.
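A small sketch of how the expected count is usually modeled: with the common log link (an assumption here, since the text does not name a link function), the expected count is the exponential of the linear predictor, so each one-unit increase in X multiplies the expected count by e^β1. The coefficients below are hypothetical.

```python
import math

def expected_count(b0, b1, x):
    """Expected count under a Poisson regression with a log link."""
    return math.exp(b0 + b1 * x)

# Hypothetical coefficients: baseline rate and effect of one predictor
b0, b1 = 0.5, 0.2

count_at_3 = expected_count(b0, b1, 3)
count_at_4 = expected_count(b0, b1, 4)
rate_ratio = count_at_4 / count_at_3  # multiplicative effect of +1 in x
```

The exponential keeps the expected count positive for any value of the linear predictor, which a plain linear model cannot guarantee.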

Generalized Linear Models (GLM)

GLMs are a flexible class of regression models that extend the linear regression framework to handle different types of dependent variables, including binary, count, and continuous variables. GLMs incorporate various probability distributions and link functions.

Regression Analysis Formulas

Regression analysis involves estimating the parameters of a regression model to describe the relationship between the dependent variable (Y) and one or more independent variables (X). Here are the basic formulas for linear regression, multiple regression, and logistic regression:

Linear Regression:

Simple Linear Regression Model: Y = β0 + β1X + ε

Multiple Linear Regression Model: Y = β0 + β1X1 + β2X2 + … + βnXn + ε

In both formulas:

- Y represents the dependent variable (response variable).

- X represents the independent variable(s) (predictor variable(s)).

- β0, β1, β2, …, βn are the regression coefficients or parameters that need to be estimated.

- ε represents the error term or residual (the difference between the observed and predicted values).

Multiple Regression:

Multiple regression extends the concept of simple linear regression by including multiple independent variables.

Multiple Regression Model: Y = β0 + β1X1 + β2X2 + … + βnXn + ε

The formulas are similar to those in linear regression, with the addition of more independent variables.

Logistic Regression:

Logistic regression is used when the dependent variable is binary or categorical. The logistic regression model applies a logistic or sigmoid function to the linear combination of the independent variables.

Logistic Regression Model: p = 1 / (1 + e^-(β0 + β1X1 + β2X2 + … + βnXn))

In the formula:

- p represents the probability of the event occurring (e.g., the probability of success or belonging to a certain category).

- X1, X2, …, Xn represent the independent variables.

- e is the base of the natural logarithm.

The logistic function ensures that the predicted probabilities lie between 0 and 1, allowing for binary classification.
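The formula above translates directly into code. The sketch below evaluates the logistic function for a given set of coefficients, using a numerically stable form for large negative inputs. The function name, the coefficients, and the 0.5 classification cutoff are assumptions made for illustration.

```python
import math

def predict_probability(coefs, xs):
    """coefs = [b0, b1, ..., bn]; xs = [x1, ..., xn]. Returns p in (0, 1)."""
    z = coefs[0] + sum(b * x for b, x in zip(coefs[1:], xs))
    # Two algebraically equal forms, chosen to avoid overflow in exp()
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)

# Hypothetical fitted coefficients: intercept -3 and slope 1.5
coefs = [-3.0, 1.5]

p_low = predict_probability(coefs, [0])   # linear predictor -3
p_mid = predict_probability(coefs, [2])   # linear predictor 0
p_high = predict_probability(coefs, [4])  # linear predictor 3

# A common (but adjustable) rule: predict the event when p is at least 0.5
predicted_class = 1 if p_high >= 0.5 else 0
```

When the linear combination equals zero the predicted probability is exactly 0.5, which is why the sign of the linear predictor decides the class under a 0.5 cutoff.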

Regression Analysis Examples

Regression Analysis Examples are as follows:

- Stock Market Prediction: Regression analysis can be used to predict stock prices based on various factors such as historical prices, trading volume, news sentiment, and economic indicators. Traders and investors can use this analysis to make informed decisions about buying or selling stocks.

- Demand Forecasting: In retail and e-commerce, regression analysis can help forecast demand for products. By analyzing historical sales data along with real-time data such as website traffic, promotional activities, and market trends, businesses can adjust their inventory levels and production schedules to meet customer demand more effectively.

- Energy Load Forecasting: Utility companies often use real-time regression analysis to forecast electricity demand. By analyzing historical energy consumption data, weather conditions, and other relevant factors, they can predict future energy loads. This information helps them optimize power generation and distribution, ensuring a stable and efficient energy supply.

- Online Advertising Performance: Regression analysis can be used to assess the performance of online advertising campaigns. By analyzing real-time data on ad impressions, click-through rates, conversion rates, and other metrics, advertisers can adjust their targeting, messaging, and ad placement strategies to maximize their return on investment.

- Predictive Maintenance: Regression analysis can be applied to predict equipment failures or maintenance needs. By continuously monitoring sensor data from machines or vehicles, regression models can identify patterns or anomalies that indicate potential failures. This enables proactive maintenance, reducing downtime and optimizing maintenance schedules.

- Financial Risk Assessment: Real-time regression analysis can help financial institutions assess the risk associated with lending or investment decisions. By analyzing real-time data on factors such as borrower financials, market conditions, and macroeconomic indicators, regression models can estimate the likelihood of default or assess the risk-return tradeoff for investment portfolios.

Importance of Regression Analysis

Importance of Regression Analysis is as follows:

- Relationship Identification: Regression analysis helps in identifying and quantifying the relationship between a dependent variable and one or more independent variables. It allows us to determine how changes in independent variables impact the dependent variable. This information is crucial for decision-making, planning, and forecasting.

- Prediction and Forecasting: Regression analysis enables us to make predictions and forecasts based on the relationships identified. By estimating the values of the dependent variable using known values of independent variables, regression models can provide valuable insights into future outcomes. This is particularly useful in business, economics, finance, and other fields where forecasting is vital for planning and strategy development.

- Causality Assessment: Correlation does not imply causation, and regression on its own does not prove it. Regression does, however, provide a framework for examining the direction and strength of the relationship between variables while controlling for other factors. This lets researchers isolate the association between a specific independent variable and the dependent variable, which can support a causal argument when combined with sound study design.

- Model Building and Variable Selection: Regression analysis aids in model building by determining the most appropriate functional form of the relationship between variables. It helps researchers select relevant independent variables and eliminate irrelevant ones, reducing complexity and improving model accuracy. This process is crucial for creating robust and interpretable models.

- Hypothesis Testing: Regression analysis provides a statistical framework for hypothesis testing. Researchers can test the significance of individual coefficients, assess the overall model fit, and determine if the relationship between variables is statistically significant. This allows for rigorous analysis and validation of research hypotheses.

- Policy Evaluation and Decision-Making: Regression analysis plays a vital role in policy evaluation and decision-making processes. By analyzing historical data, researchers can evaluate the effectiveness of policy interventions and identify the key factors contributing to certain outcomes. This information helps policymakers make informed decisions, allocate resources effectively, and optimize policy implementation.

- Risk Assessment and Control: Regression analysis can be used for risk assessment and control purposes. By analyzing historical data, organizations can identify risk factors and develop models that predict the likelihood of certain outcomes, such as defaults, accidents, or failures. This enables proactive risk management, allowing organizations to take preventive measures and mitigate potential risks.

When to Use Regression Analysis

- Prediction: Regression analysis is often employed to predict the value of the dependent variable based on the values of independent variables. For example, you might use regression to predict sales based on advertising expenditure, or to predict a student’s academic performance based on variables like study time, attendance, and previous grades.

- Relationship analysis: Regression can help determine the strength and direction of the relationship between variables. It can be used to examine whether there is a linear association between variables, identify which independent variables have a significant impact on the dependent variable, and quantify the magnitude of those effects.

- Causal inference: Regression analysis can be used to explore cause-and-effect relationships by controlling for other variables. For example, in a medical study, you might use regression to determine the impact of a specific treatment while accounting for other factors like age, gender, and lifestyle.

- Forecasting: Regression models can be utilized to forecast future trends or outcomes. By fitting a regression model to historical data, you can make predictions about future values of the dependent variable based on changes in the independent variables.

- Model evaluation: Regression analysis can be used to evaluate the performance of a model or test the significance of variables. You can assess how well the model fits the data, determine if additional variables improve the model’s predictive power, or test the statistical significance of coefficients.

- Data exploration: Regression analysis can help uncover patterns and insights in the data. By examining the relationships between variables, you can gain a deeper understanding of the data set and identify potential patterns, outliers, or influential observations.

Applications of Regression Analysis

Here are some common applications of regression analysis:

- Economic Forecasting: Regression analysis is frequently employed in economics to forecast variables such as GDP growth, inflation rates, or stock market performance. By analyzing historical data and identifying the underlying relationships, economists can make predictions about future economic conditions.

- Financial Analysis: Regression analysis plays a crucial role in financial analysis, such as predicting stock prices or evaluating the impact of financial factors on company performance. It helps analysts understand how variables like interest rates, company earnings, or market indices influence financial outcomes.

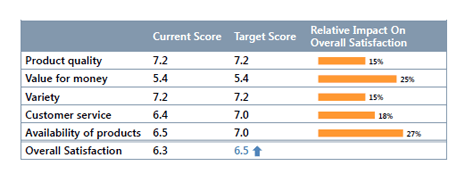

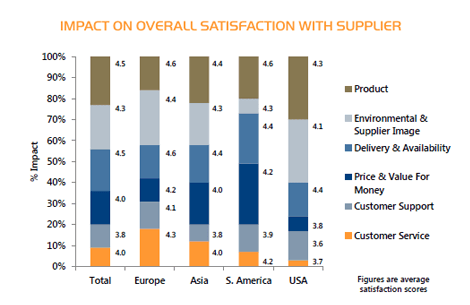

- Marketing Research: Regression analysis helps marketers understand consumer behavior and make data-driven decisions. It can be used to predict sales based on advertising expenditures, pricing strategies, or demographic variables. Regression models provide insights into which marketing efforts are most effective and help optimize marketing campaigns.

- Health Sciences: Regression analysis is extensively used in medical research and public health studies. It helps examine the relationship between risk factors and health outcomes, such as the impact of smoking on lung cancer or the relationship between diet and heart disease. Regression analysis also helps in predicting health outcomes based on various factors like age, genetic markers, or lifestyle choices.

- Social Sciences: Regression analysis is widely used in social sciences like sociology, psychology, and education research. Researchers can investigate the impact of variables like income, education level, or social factors on various outcomes such as crime rates, academic performance, or job satisfaction.

- Operations Research: Regression analysis is applied in operations research to optimize processes and improve efficiency. For example, it can be used to predict demand based on historical sales data, determine the factors influencing production output, or optimize supply chain logistics.

- Environmental Studies: Regression analysis helps in understanding and predicting environmental phenomena. It can be used to analyze the impact of factors like temperature, pollution levels, or land use patterns on phenomena such as species diversity, water quality, or climate change.

- Sports Analytics: Regression analysis is increasingly used in sports analytics to gain insights into player performance, team strategies, and game outcomes. It helps analyze the relationship between various factors like player statistics, coaching strategies, or environmental conditions and their impact on game outcomes.

Advantages and Disadvantages of Regression Analysis

| Advantages of Regression Analysis | Disadvantages of Regression Analysis |
|---|---|
| Provides a quantitative measure of the relationship between variables | Assumes a linear relationship between variables, which may not always hold true |
| Helps in predicting and forecasting outcomes based on historical data | Requires a large sample size to produce reliable results |
| Identifies and measures the significance of independent variables on the dependent variable | Assumes no multicollinearity, meaning that independent variables should not be highly correlated with each other |
| Provides estimates of the coefficients that represent the strength and direction of the relationship between variables | Assumes the absence of outliers or influential data points |
| Allows for hypothesis testing to determine the statistical significance of the relationship | Can be sensitive to the inclusion or exclusion of certain variables, leading to different results |
| Can handle both continuous and categorical variables | Assumes the independence of observations, which may not hold true in some cases |
| Offers a visual representation of the relationship through the use of scatter plots and regression lines | May not capture complex non-linear relationships between variables without appropriate transformations |
| Provides insights into the marginal effects of independent variables on the dependent variable | Requires the assumption of homoscedasticity, meaning that the variance of errors is constant across all levels of the independent variables |

About the author

Muhammad Hassan

Researcher, Academic Writer, Web developer


What is Regression Analysis?


The estimation of relationships between a dependent variable and one or more independent variables

Regression analysis is a set of statistical methods used for the estimation of relationships between a dependent variable and one or more independent variables. It can be utilized to assess the strength of the relationship between variables and for modeling the future relationship between them.

Regression analysis includes several variations, such as linear, multiple linear, and nonlinear. The most common models are simple linear and multiple linear. Nonlinear regression analysis is commonly used for more complicated data sets in which the dependent and independent variables show a nonlinear relationship.

Regression analysis offers numerous applications in various disciplines, including finance.

Regression Analysis – Linear Model Assumptions

Linear regression analysis is based on six fundamental assumptions:

- The dependent and independent variables show a linear relationship, described by the slope and the intercept.

- The independent variable is not random.

- The expected value (mean) of the residual (error) is zero.

- The variance of the residual (error) is constant across all observations.

- The residuals (errors) are not correlated across observations.

- The residual (error) values follow the normal distribution.

Regression Analysis – Simple Linear Regression

Simple linear regression is a model that assesses the relationship between a dependent variable and an independent variable. The simple linear model is expressed using the following equation:

Y = a + bX + ϵ

- Y – Dependent variable

- X – Independent (explanatory) variable

- a – Intercept

- b – Slope

- ϵ – Residual (error)

Regression Analysis – Multiple Linear Regression

Multiple linear regression analysis is essentially similar to the simple linear model, with the exception that multiple independent variables are used in the model. The mathematical representation of multiple linear regression is:

Y = a + bX1 + cX2 + dX3 + ϵ

- X1, X2, X3 – Independent (explanatory) variables

- b, c, d – Slopes

Multiple linear regression follows the same conditions as the simple linear model. However, since there are several independent variables in multiple linear analysis, there is another mandatory condition for the model:

- Non-collinearity: Independent variables should show minimal correlation with each other. If the independent variables are highly correlated with each other, it will be difficult to assess the true relationships between the dependent and independent variables.
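One common way to check the non-collinearity condition is the variance inflation factor (VIF). The following is a minimal sketch, assuming only two predictors, in which case the VIF reduces to 1 / (1 − r²), where r is the correlation between them. The data and the helper name `vif_two_predictors` are hypothetical and only serve as an illustration.

```python
import math


def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)


def vif_two_predictors(x1, x2):
    """VIF for the two-predictor case: 1 / (1 - r^2)."""
    r = pearson_r(x1, x2)
    return 1.0 / (1.0 - r ** 2)


# Hypothetical predictor values, invented for illustration only.
x1 = [1, 2, 3, 4, 5]
x2_correlated = [2, 4, 6, 8, 11]   # moves almost in lockstep with x1
x2_unrelated = [1, -1, 0, -1, 1]   # zero sample correlation with x1

print(vif_two_predictors(x1, x2_correlated))  # large value: collinearity concern
print(vif_two_predictors(x1, x2_unrelated))   # 1.0: no inflation
```

A frequently cited rule of thumb treats VIF values above roughly 5 to 10 as a sign that collinearity may be distorting the coefficient estimates.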

Regression Analysis in Finance

Regression analysis comes with several applications in finance. For example, the statistical method is fundamental to the Capital Asset Pricing Model (CAPM). Essentially, the CAPM equation is a model that describes the relationship between the expected return of an asset and the market risk premium.

The analysis is also used to forecast the returns of securities, based on different factors, or to forecast the performance of a business.

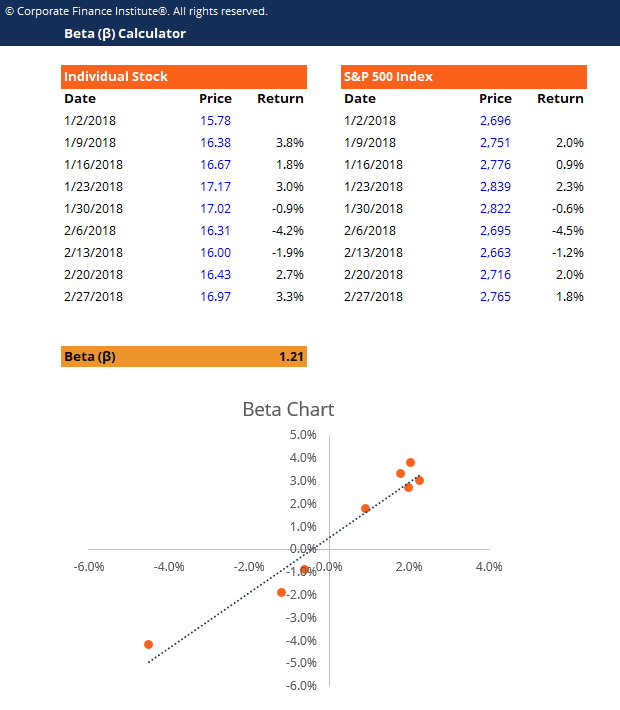

1. Beta and CAPM

In finance, regression analysis is used to calculate the Beta (volatility of returns relative to the overall market) for a stock. It can be done in Excel using the SLOPE function.
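As a rough sketch of what that slope calculation does, beta can be computed as the covariance of the stock's returns with the market's returns divided by the variance of the market's returns. The return series below are hypothetical and were constructed so that the stock moves 1.5 times as much as the market.

```python
def beta(stock_returns, market_returns):
    """Slope of stock returns regressed on market returns: cov / var."""
    n = len(market_returns)
    mean_m = sum(market_returns) / n
    mean_s = sum(stock_returns) / n
    cov = sum((m - mean_m) * (s - mean_s)
              for m, s in zip(market_returns, stock_returns))
    var = sum((m - mean_m) ** 2 for m in market_returns)
    return cov / var


# Hypothetical monthly returns, invented for illustration only.
market = [0.02, -0.01, 0.03, 0.00, -0.02, 0.04]
stock = [1.5 * m + 0.001 for m in market]  # built to move 1.5x the market

print(round(beta(stock, market), 4))  # 1.5
```

A beta above 1 suggests the stock has tended to move more than the market, while a beta below 1 suggests it has moved less.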

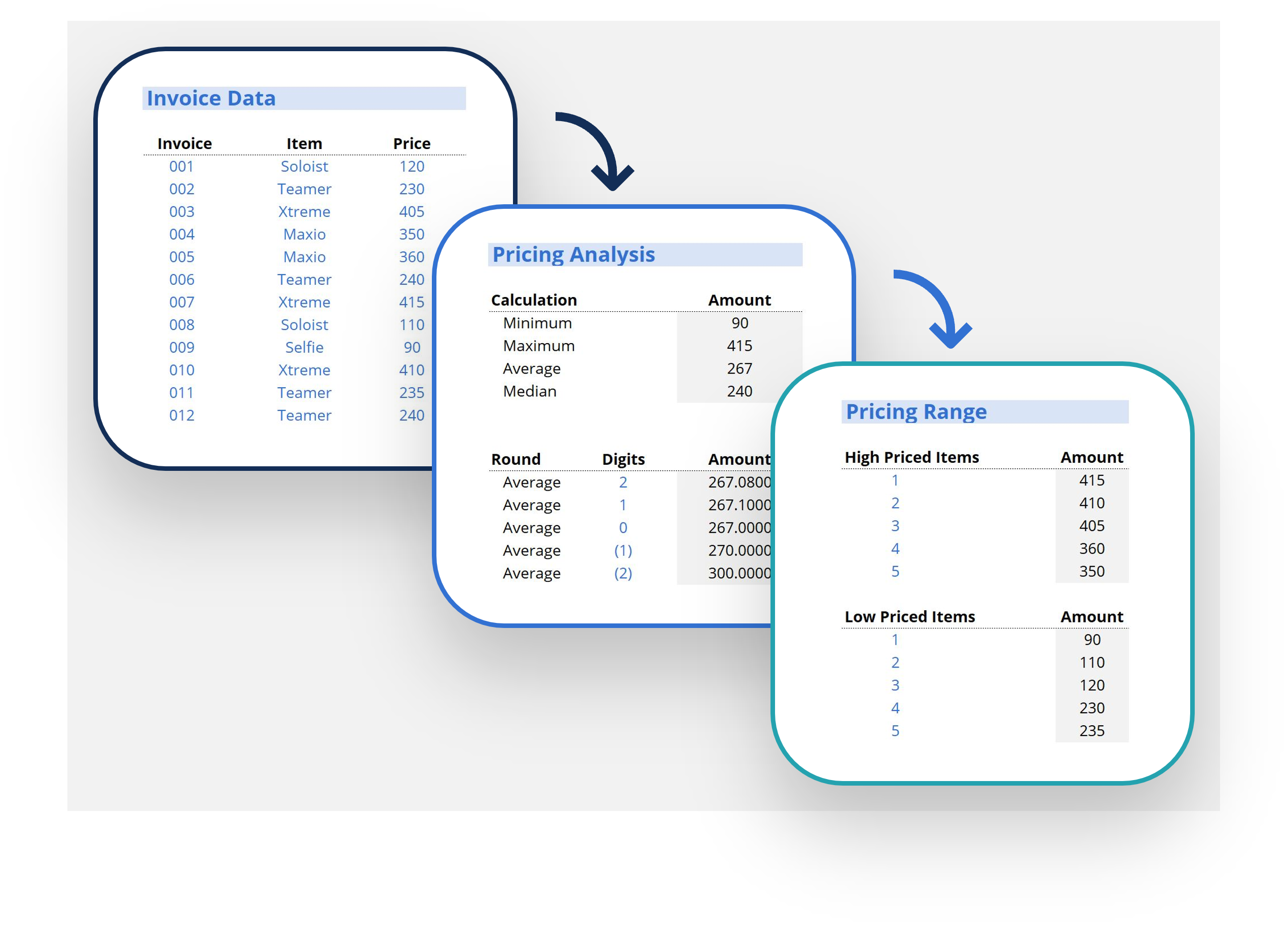

2. Forecasting Revenues and Expenses

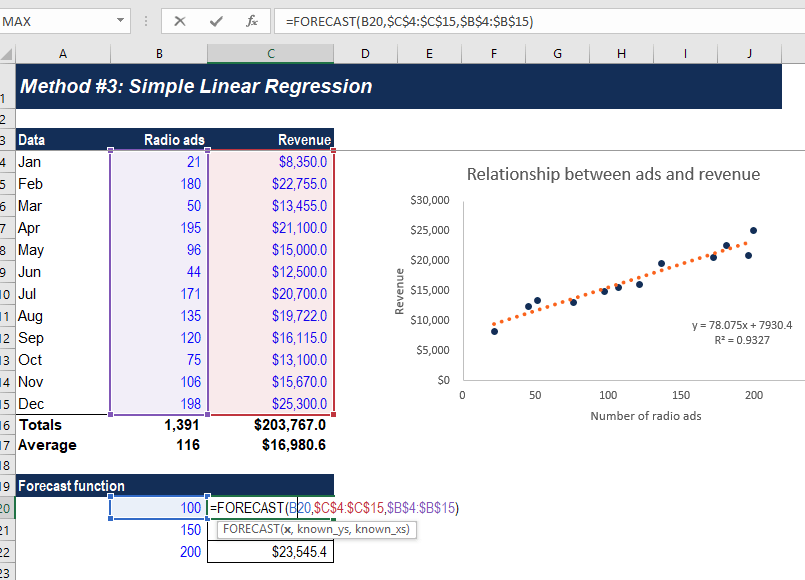

When forecasting financial statements for a company, it may be useful to do a multiple regression analysis to determine how changes in certain assumptions or drivers of the business will impact revenue or expenses in the future. For example, there may be a very high correlation between the number of salespeople employed by a company, the number of stores they operate, and the revenue the business generates.

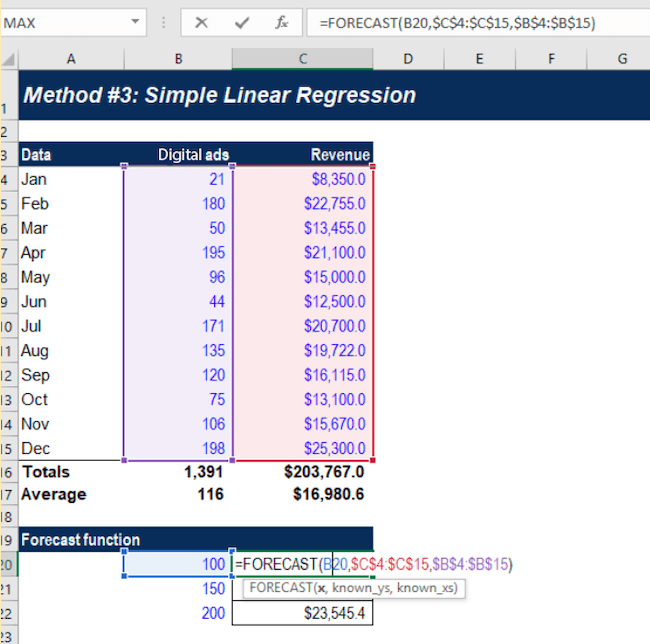

The above example shows how to use the Forecast function in Excel to calculate a company’s revenue, based on the number of ads it runs.

Excel remains a popular tool to conduct basic regression analysis in finance. However, there are many more advanced statistical tools that can be used.

Python and R are both powerful coding languages that have become popular for all types of financial modeling, including regression. These techniques form a core part of data science and machine learning, where models are trained to detect these relationships in data.

To learn more about related topics, check out the following free CFI resources:

- Cost Behavior Analysis

- Forecasting Methods

- Joseph Effect

- Variance Inflation Factor (VIF)

- High Low Method vs. Regression Analysis

What Is Regression Analysis? Types, Importance, and Benefits

In this post

- Regression analysis basics

- How does regression analysis work?

- Types of regression analysis

- When is regression analysis used?

- Benefits of regression analysis

- Applications of regression analysis

- Top statistical analysis software

Businesses collect data to make better decisions.

But when you count on data to build strategies, simplify processes, and improve customer experience, collecting it isn't enough. You need to understand and analyze it to draw valuable insights. Analyzing data helps you study what’s already happened and predict what may happen in the future.

Data analysis has many components, and while some are easy to understand and perform, others are rather complex. The good news is that many statistical analysis software tools offer meaningful insights from data in a few steps.

You have to understand the fundamentals before relying on a statistical program to give accurate results because, even though generating results is easy, interpreting them is another ballgame.

While interpreting data, considering the factors that affect the data becomes essential. Regression analysis helps you do just that. With the assistance of this statistical analysis method, you can find the most important and least important factors in any data set and understand how they relate.

This guide covers the fundamentals of regression analysis, its process, benefits, and applications.

What is regression analysis?

Regression analysis is a statistical process that helps assess the relationships between a dependent variable and one or more independent variables.

The primary purpose of regression analysis is to describe the relationship between variables, but it can also be used to:

- Estimate the value of one variable using the known values of other variables.

- Predict results and shifts in a variable based on its relationship with other variables.

- Control the influence of variables while exploring the relationship between variables.

To understand regression analysis comprehensively, you must build foundational knowledge of the statistical concepts.

Regression analysis helps identify the factors that impact data insights. You can use it to understand which factors play a role in creating an outcome and how significant they are. These factors are called variables.

You need to grasp two main types of variables.

- The main factor you're focusing on is the dependent variable. This variable is often measured as an outcome of analyses and depends on one or more other variables.

- The factors or variables that impact your dependent variable are called independent variables. Variables like these are often altered for analysis. They’re also called explanatory variables or predictor variables.

Correlation vs. causation

Causation indicates that one variable is the result of the occurrence of the other variable. Correlation suggests a connection between variables. Correlation and causation can coexist, but correlation does not imply causation.

Overfitting

Overfitting is a statistical modeling error that occurs when a function fits a limited set of data points too closely, capturing their noise rather than the underlying pattern. As a result, the model describes its initial data set well but predicts poorly on any other data set.

How does regression analysis work?

For a minute, let's imagine that you own an ice cream stand. In this case, we can consider “revenue” and “temperature” to be the two factors under analysis. The first step toward conducting a successful regression statistical analysis is gathering data on the variables.

You collect all your monthly sales numbers for the past two years and any data on the independent variables or explanatory variables you’re analyzing. In this case, it’s the average monthly temperature for the past two years.

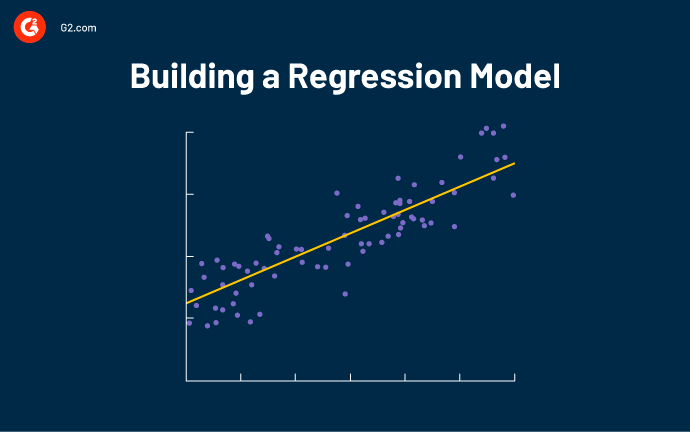

To begin to understand whether there’s a relationship between these two variables, you need to plot these data points on a graph that looks like the following theoretical example of a scatter plot:

The amount of sales is represented on the y-axis (vertical axis), and temperature is represented on the x-axis (horizontal axis). Each dot represents one month's data: the average temperature and sales in that same month.

Observing this data shows that sales are higher in months when the temperature is higher. But by how much? If the temperature goes higher, how much more do you sell? And what if the temperature drops?

Drawing a regression line roughly in the middle of all the data points helps you figure out how much you typically sell when it’s a specific temperature. Let’s use a theoretical scatter plot to depict a regression line:

The regression line describes the relationship between the independent variable and the predicted values of the dependent variable. It can be created using statistical analysis software or Microsoft Excel.

Your regression analysis tool should also display a formula that defines the line, including its slope. For example:

y = 100 + 2x + error term

From the formula, you can conclude that when x is 0, y equals 100, which means that when the temperature is zero, you make an average of 100 sales. Provided the other variables remain constant, you can use this to predict future sales. For every one-unit rise in the temperature, you make an average of two more sales.

A regression line always has an error term because an independent variable cannot be a perfect predictor of a dependent variable. Deciding whether this variable is worth your attention depends on the error term – the larger the error term, the less certain the regression line.
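To make the ice cream example concrete, here is a minimal sketch of fitting such a line by least squares. The temperatures and sales figures are hypothetical, chosen to sit close to y = 100 + 2x, and `fit_line` is an illustrative helper rather than part of any particular tool.

```python
def fit_line(xs, ys):
    """Least-squares intercept and slope for y = a + b*x."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    slope = (sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
             / sum((x - mean_x) ** 2 for x in xs))
    intercept = mean_y - slope * mean_x
    return intercept, slope


# Hypothetical average monthly temperatures and monthly sales.
temps = [10, 15, 20, 25, 30, 35]
sales = [121, 129, 141, 149, 161, 169]

a, b = fit_line(temps, sales)

# The residuals play the role of the error term for each month.
residuals = [y - (a + b * x) for x, y in zip(temps, sales)]

print(a, b)          # intercept near 100, slope near 2
print(a + b * 28)    # predicted sales for a month averaging 28 degrees
print(residuals)     # how far each month falls from the line
```

The size of the residuals gives a sense of how much trust to place in the line: the closer they are to zero, the better temperature alone explains sales.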

Types of regression analysis

Various types of regression analysis are at your disposal, but the ones mentioned below are among the most commonly used.

Linear regression

A linear regression model is defined as a straight line that attempts to predict the relationship between variables. It’s mainly classified into two types: simple and multiple linear regression.

We’ll discuss those in a moment, but let’s first cover the five fundamental assumptions made in the linear regression model.

- The dependent and independent variables display a linear relationship.

- The expected value (mean) of the residual is zero.

- The residuals are not correlated across observations.

- The residual is normally distributed.

- Residual errors are homoscedastic: they have a constant variance.

Simple linear regression analysis

Linear regression analysis helps predict a variable's value (dependent variable) based on the known value of one other variable (independent variable).

Linear regression fits a straight line, so a simple linear model attempts to define the relationship between two variables by estimating the coefficients of the linear equation.

Simple linear regression equation:

Y = a + bX + ϵ

Where:

- Y – Dependent variable (response variable)

- X – Independent variable (predictor variable)

- a – Intercept (y-intercept)

- b – Slope

- ϵ – Residual (error)

In such a linear regression model, a response variable has a single corresponding predictor variable that impacts its value. For example, consider the linear regression formula:

y = 5x + 4

If the value of x is defined as 3, only one outcome of y is possible: y = 5(3) + 4 = 19.

Multiple linear regression analysis

In most cases, simple linear regression analysis can't explain the connections between data. As the connection becomes more complex, the relationship between data is better explained using more than one variable.

Multiple regression analysis describes a response variable using more than one predictor variable. It is used when several independent variables each have a meaningful relationship with the dependent variable.

Multiple linear regression equation:

Y = a + bX1 + cX2 + dX3 + ϵ

Where:

- Y – Dependent variable

- X1, X2, X3 – Independent variables

- a – Intercept (y-intercept)

- b, c, d – Slopes

- ϵ – Residual (error)

Ordinary least squares

Ordinary Least Squares regression estimates the unknown parameters in a model. It estimates the coefficients of a linear regression equation by minimizing the sum of the squared differences between the actual values and the values predicted by the fitted line.
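The following is a minimal sketch of ordinary least squares for a model with two predictors, assuming NumPy is available. It solves the so-called normal equations and compares the result with NumPy's built-in least-squares solver. The data values are hypothetical.

```python
import numpy as np

# Hypothetical data: two predictors and one response.
x1 = np.array([0.0, 1.0, 2.0, 3.0, 4.0])
x2 = np.array([1.0, 0.0, 2.0, 1.0, 3.0])
y = np.array([4.1, 2.9, 11.2, 9.8, 18.1])

# Design matrix with a leading column of ones for the intercept.
X = np.column_stack([np.ones_like(x1), x1, x2])

# Normal equations: solve (X^T X) beta = X^T y.
beta_normal = np.linalg.solve(X.T @ X, X.T @ y)

# Same estimate from NumPy's least-squares solver.
beta_lstsq, *_ = np.linalg.lstsq(X, y, rcond=None)

residuals = y - X @ beta_normal
print(beta_normal)                    # [intercept, slope for x1, slope for x2]
print(float(residuals @ residuals))   # minimized sum of squared errors
```

Solving the normal equations directly is fine for small, well-behaved problems. Library solvers such as `np.linalg.lstsq` are generally the safer choice when predictors are strongly correlated.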

Polynomial regression

A linear regression algorithm only works when the relationship between the data is linear. What if the data distribution was more complex, as shown in the figure below?

As seen above, the data is nonlinear. A linear model can't be used to fit nonlinear data because it can't sufficiently define the patterns in the data.

Polynomial regression is a type of multiple linear regression used when data points are present in a nonlinear manner. It can determine the curvilinear relationship between independent and dependent variables having a nonlinear relationship.

Polynomial regression equation:

y = b0 + b1x1 + b2x1^2 + b3x1^3 + ... + bnx1^n
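To illustrate why polynomial regression can be treated as a form of multiple linear regression, the sketch below builds the columns 1, x, and x^2 and fits them with an ordinary least-squares solver. It assumes NumPy is available, and the data is hypothetical, generated without noise from y = 1 + 2x + 3x^2 so the recovered coefficients are easy to check.

```python
import numpy as np

# Hypothetical data generated from y = 1 + 2x + 3x^2 (no noise).
x = np.array([-2.0, -1.0, 0.0, 1.0, 2.0, 3.0])
y = 1 + 2 * x + 3 * x ** 2

# Columns are [1, x, x^2]; the model is still linear in b0, b1, b2.
X = np.vander(x, N=3, increasing=True)

coeffs, *_ = np.linalg.lstsq(X, y, rcond=None)
print(np.round(coeffs, 6))  # approximately [1. 2. 3.]
```

The curve is nonlinear in x, but the unknown coefficients still enter the equation linearly, which is why the same fitting machinery applies.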

Logistic regression

Logistic regression models the probability of a dependent variable as a function of independent variables. The dependent variable takes one of two values (0 or 1), and the model outputs a probability between 0 and 1 that an observation belongs to one of those classes.

Logistic regression is often used when binary data (yes or no; pass or fail) needs to be analyzed. In other words, using the logistic regression method to analyze your data is recommended if your dependent variable can have either one of two binary values.

Let’s say you need to determine whether an email is spam. We need to set up a threshold based on which the classification can be done. Using logistic regression here makes sense as the outcome is strictly bound to 0 (spam) or 1 (not spam) values.
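A minimal sketch of the thresholding step described above might look like the following. The single feature (a count of suspicious words) and the coefficients `b0` and `b1` are hypothetical; in practice the coefficients would be estimated from labeled emails. The labels follow the convention used here, with 1 meaning not spam.

```python
import math


def sigmoid(z):
    """Logistic function: maps any real number into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def prob_not_spam(suspicious_words, b0=2.0, b1=-1.0):
    """Probability of class 1 (not spam); b0 and b1 are made-up coefficients."""
    return sigmoid(b0 + b1 * suspicious_words)


def classify(suspicious_words, threshold=0.5):
    """Return 1 (not spam) if the probability clears the threshold, else 0."""
    return 1 if prob_not_spam(suspicious_words) >= threshold else 0


print(classify(0))  # 1: probability is about 0.88
print(classify(5))  # 0: probability is about 0.05
```

Raising or lowering the threshold trades off the two kinds of mistakes: flagging legitimate email as spam versus letting spam through.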

Bayesian linear regression

In other regression methods, the output is derived from one or more attributes. But what if those attributes are unavailable?

The Bayesian regression method is used when the dataset that needs to be analyzed has little or poorly distributed data because its output is derived from a probability distribution instead of point estimates. When data is absent, you can place a prior on the regression coefficients to substitute for the data. As we add more data points, the accuracy of the regression model improves.

Imagine a company launches a new product and wants to predict its sales. Due to the lack of available data, we can’t use a simple regression analysis model. But Bayesian regression analysis lets you set up a prior and calculate future projections.

Additionally, once new data from the new product sales come in, the prior is immediately updated. As a result, the forecast for the future is influenced by the latest and previous data.
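The updating idea can be sketched with a deliberately simplified model: a single slope b in y = b·x + noise, a normal prior on b, and a noise variance that is assumed to be known. Under those assumptions the posterior is also normal and has a closed form. The function name and all numbers below are hypothetical.

```python
def posterior_slope(prior_mean, prior_var, xs, ys, noise_var):
    """Posterior mean and variance of b in y = b*x + noise (normal prior)."""
    precision = 1.0 / prior_var + sum(x * x for x in xs) / noise_var
    mean = (prior_mean / prior_var
            + sum(x * y for x, y in zip(xs, ys)) / noise_var) / precision
    return mean, 1.0 / precision


# Before any sales data arrives, the posterior is just the prior.
print(posterior_slope(0.0, 1.0, [], [], noise_var=1.0))  # (0.0, 1.0)

# Hypothetical observations whose least-squares slope is 2.
mean, var = posterior_slope(0.0, 1.0, [1, 2, 3], [2, 4, 6], noise_var=1.0)
print(mean, var)  # mean moves from 0 toward 2; variance falls below 1
```

With more observations the data term dominates the prior term, so the estimate drifts toward what the data alone would suggest and the uncertainty shrinks.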

The Bayesian technique is mathematically robust. Because of this, it doesn’t require you to have any prior knowledge of the dataset during usage. However, its complexity means it takes time to draw inferences from the model, and using it doesn't make sense when you have too much data.

Quantile regression analysis

The linear regression method estimates a variable's mean based on the values of other predictor variables. But we don’t always need to calculate the conditional mean. In some situations, we only need the median, the 0.25 quantile, and so on. In cases like this, we can use quantile regression.

Quantile regression defines the relationship between one or more predictor variables and specific percentiles or quantiles of a response variable. It resists the influence of outlying observations. No assumptions about the distribution of the dependent variable are made in quantile regression, so you can use it when linear regression doesn’t satisfy its assumptions.
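Quantile regression is usually fit by minimizing the so-called pinball (quantile) loss instead of squared error. The sketch below is a simplified illustration with no predictors at all: it finds the single constant that minimizes the pinball loss, which turns out to be the corresponding sample quantile. The data is hypothetical and includes one outlier to show the resistance to outlying observations.

```python
def pinball_loss(residual, tau):
    """Quantile (pinball) loss for a single residual y - prediction."""
    return residual * (tau - (1 if residual < 0 else 0))


def best_constant(ys, tau):
    """Data value that minimizes total pinball loss at quantile level tau."""
    return min(sorted(ys),
               key=lambda c: sum(pinball_loss(y - c, tau) for y in ys))


scores = [1, 2, 3, 4, 100]  # hypothetical data with one outlier

print(best_constant(scores, 0.5))   # 3, the median
print(best_constant(scores, 0.25))  # 2
print(sum(scores) / len(scores))    # 22.0, the mean, pulled up by the outlier
```

A full quantile regression applies the same loss to the residuals of a line or other model rather than to a single constant.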

Let's consider two students who have taken an Olympiad exam open for all age groups. Student A scored 650, while student B scored 425. This data shows that student A has performed better than student B.

But quantile regression helps remind us that since the exam was open for all age groups, we have to factor in the student's age to determine the correct outcome in their individual conditional quantile spaces.

We know the variable causing such a difference in the data distribution. As a result, the scores of the students are compared for the same age groups.

What is regularization?

Regularization is a technique that prevents a regression model from overfitting by including extra information. It’s implemented by adding a penalty term to the model's loss function. It allows you to keep the same number of features while shrinking the magnitude of their coefficients toward zero.

The two types of regularization techniques are L1 and L2. A regression model using the L1 regularization technique is known as Lasso regression, and the one using the L2 regularization technique is called Ridge regression.

Ridge regression

Ridge regression is a regularization technique you would use to reduce the effects of correlation between independent variables (multicollinearity) or when the number of independent variables in a set exceeds the number of observations.

Ridge regression performs L2 regularization. The formula used to make predictions is the same as for ordinary least squares, but a penalty proportional to the sum of the squared regression coefficients is added to the loss being minimized. This is done so that each feature has as little effect on the outcome as possible.
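As a minimal sketch, the ridge estimate has a closed form that differs from ordinary least squares only by a term added to the diagonal. The example assumes NumPy is available and uses hypothetical, centered data so no intercept is needed. The penalty strength `lam` is an illustrative choice, not a recommended value.

```python
import numpy as np


def ridge_fit(X, y, lam):
    """Closed-form ridge estimate: solve (X^T X + lam * I) beta = X^T y."""
    n_features = X.shape[1]
    return np.linalg.solve(X.T @ X + lam * np.eye(n_features), X.T @ y)


# Hypothetical centered data with two strongly correlated predictors.
X = np.array([[-2.0, -1.9],
              [-1.0, -1.1],
              [0.0, 0.1],
              [1.0, 0.9],
              [2.0, 2.0]])
y = np.array([-4.1, -1.9, 0.2, 2.1, 3.7])

beta_ols = ridge_fit(X, y, lam=0.0)    # lam = 0 reduces to OLS
beta_ridge = ridge_fit(X, y, lam=1.0)  # lam > 0 shrinks the coefficients

print(beta_ols, np.linalg.norm(beta_ols))
print(beta_ridge, np.linalg.norm(beta_ridge))
```

In practice the penalty strength is usually chosen by cross-validation rather than set by hand.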

Lasso regression

Lasso stands for Least Absolute Shrinkage and Selection Operator.

Lasso regression is a regularized linear regression that uses an L1 penalty, which pushes regression coefficient values toward zero and can set some of them exactly to zero. By setting those coefficients to zero, it automatically selects the features that matter and helps avoid overfitting.

So if the dataset has highly correlated predictors (multicollinearity), or when you need to automate variable selection or parameter elimination, you can use lasso regression.
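To see how an L1 penalty can zero out coefficients, consider the simplified special case of orthonormal features with the objective ½·(sum of squared errors) + λ·(sum of absolute coefficients). In that case the lasso solution is known to be a "soft-thresholded" version of the OLS coefficients. The sketch below applies that operation to hypothetical coefficient values. General lasso solvers are more involved.

```python
def soft_threshold(z, lam):
    """Shrink z toward zero by lam; values within [-lam, lam] become 0."""
    if z > lam:
        return z - lam
    if z < -lam:
        return z + lam
    return 0.0


# Hypothetical OLS coefficients for four features.
ols_coefficients = [3.0, -0.5, 0.2, -2.0]
lasso_coefficients = [soft_threshold(b, lam=1.0) for b in ols_coefficients]

print(lasso_coefficients)  # [2.0, 0.0, 0.0, -1.0]
```

The two small coefficients are removed entirely, which is the automatic feature selection described above, while the large ones are kept but shrunk.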

Regression analysis is a powerful tool used to derive statistical inferences for the future using observations from the past. It identifies the connections between variables occurring in a dataset and determines the magnitude of these associations and their significance on outcomes.

Across industries, it’s a useful statistical analysis tool because it provides exceptional flexibility. So the next time someone at work proposes a plan that depends on multiple factors, perform a regression analysis to predict an accurate outcome.

In the real world, various factors determine how a business grows. Often these factors are interrelated, and a change in one can positively or negatively affect the other.

Using regression analysis to judge how changing variables will affect your business has two primary benefits.

- Making data-driven decisions: Businesses use regression analysis when planning for the future because it helps determine which variables have the most significant impact on the outcome according to previous results. Companies can better focus on the right things when forecasting and making data-backed predictions.

- Recognizing opportunities to improve: Since regression analysis shows the relationships between variables, businesses can use it to identify areas of improvement in terms of people, strategies, or tools by observing their interactions. For example, increasing the number of people on a project might positively impact revenue growth.

Both small and large industries are loaded with an enormous amount of data. To make better decisions and eliminate guesswork, many are now adopting regression analysis because it offers a scientific approach to management.

Using regression analysis, professionals can observe and evaluate the relationship between various variables and subsequently predict this relationship's future characteristics.

Companies can utilize regression analysis in numerous forms. Some of them:

- Many finance professionals use regression analysis to forecast future opportunities and risks. The capital asset pricing model (CAPM), which describes the relationship between an asset's expected return and the associated market risk premium, is an often-used regression model in finance for pricing assets and discovering capital costs. Regression analysis is also used to calculate beta (β), which measures the volatility of a stock's returns relative to the overall market.

- Insurance firms use regression analysis to forecast the creditworthiness of a policyholder. It can also help estimate the number of claims that may be raised in a specific period.

- Sales forecasting uses regression analysis to predict sales based on past performance. It can give you a sense of what has worked before, what kind of impact it has created, and what can improve to provide more accurate and beneficial future results.

- Another critical use of regression models is the optimization of business processes. Today, managers consider regression an indispensable tool for highlighting the areas that have the maximum impact on operational efficiency and revenues, deriving new insights, and correcting process errors.

Businesses with a data-driven culture use regression analysis to draw actionable insights from large datasets. For many leading industries with extensive data catalogs, it proves to be a valuable asset. As the data size increases, more executives lean into regression analysis to make informed business decisions with statistical significance.

While Microsoft Excel remains a popular tool for conducting fundamental regression data analysis, many more advanced statistical tools today drive more accurate and faster results. Check out the top statistical analysis software in 2023 here.

To be included in this category, the regression analysis software product must be able to:

- Execute a simple linear regression or a complex multiple regression analysis for various data sets.

- Provide graphical tools to study model estimation, multicollinearity, model fits, line of best fit, and other aspects typical of the type of regression.

- Possess a clean, intuitive, and user-friendly user interface (UI) design

*Below are the top 5 leading statistical analysis software solutions from G2’s Winter 2023 Grid® Report. Some reviews may be edited for clarity.

1. IBM SPSS Statistics

IBM SPSS Statistics allows you to predict the outcomes and apply various nonlinear regression procedures that can be used for business and analysis projects where standard regression techniques are limiting or inappropriate. With IBM SPSS Statistics, you can specify multiple regression models in a single command to observe the correlation between independent and dependent variables and expand regression analysis capabilities on a dataset.

What users like best :

"I have used a couple of different statistical softwares. IBM SPSS is an amazing software, a one-stop shop for all statistics-related analysis. The graphical user interface is elegantly built for ease. I was quickly able to learn and use it"

- IBM SPSS Statistics Review , Haince Denis P.

What users dislike:

"Some of the interfaces could be more intuitive. Thankfully much information is available from various sources online to help the user learn how to set up tests."

- IBM SPSS Statistics Review , David I.

2. Posit

To make data science more intuitive and collaborative, Posit provides users across key industries with R and Python-based tools, enabling them to leverage powerful analytics and gather valuable insights.

What users like best:

"Straightforward syntax, excellent built-in functions, and powerful libraries for everything else. Building anything from simple mathematical functions to complicated machine learning models is a breeze."

- Posit Review, Brodie G.

What users dislike:

"Its GUI could be more intuitive and user-friendly. One needs a lot of time to understand and implement it. Including a package manager would be a good idea, as it has become common in many modern IDEs. There must be an option to save console commands, which is currently unavailable."

- Posit Review , Tanishq G.

3. JMP

JMP is a data analysis software that helps make sense of your data using cutting-edge and modern statistical methods. Its products are intuitively interactive, visually compelling, and statistically profound.

What users like best:

"The instructional videos on the website are great; I had no clue what I was doing before I watched them. The videos make the application very user-friendly."

- JMP Review, Ashanti B.

What users dislike:

"Help function can be brief in terms of what the functionality entails, and that's disappointing because the way the software is set up to communicate data visually and intuitively suggests the presence of a logical and explainable scientific thought process, including an explanation of the "why.” The graph builder could also use more intuitive means to change layout features."

- JMP Review , Zeban K.

4. Minitab statistical software

Minitab Statistical Software is a data and statistical analysis tool used to help businesses understand their data and make better decisions. It allows companies to tap into the power of regression analysis by analyzing new and old data to discover trends, predict patterns, uncover hidden relationships between variables, and create stunning visualizations.

What users like best:

"The greatest program for learning and analyzing as it allows you to improve the settings with incredibly accurate graphs and regression charts. This platform allows you to analyze the outcomes or data with their ideal values."

- Minitab Statistical Software Review, Pratibha M.

What users dislike:

"The software price is steep, and licensing is troublesome. You are required to be online or connected to the company VPN for licensing, especially for corporate use. So without an internet connection, you cannot use it at all. Also, if you are in the middle of doing an analysis and happen to lose your internet connection, you will risk losing the project or the study you are working on."

- Minitab Statistical Software Review , Siew Kheong W.

5. EViews

EViews offers user-friendly tools to perform data modeling and forecasting. It operates with an innovative, easy-to-use object-oriented interface used by researchers, financial institutions, government agencies, and educators.

What users like best:

"As an economist, this software is handy as it assists me in conducting advanced research, analyzing data, and interpreting results for policy recommendations. I just cannot do without EViews. I like its recent updates that have also enhanced the UI."

- EViews Review, Thomas M.

What users dislike:

"In my experience, importing data from Excel is not easy using EViews compared to other statistical software. One needs to develop expertise while importing data into EViews from different formats. Moreover, the price of the software is very high."

- EViews Review , Md. Zahid H.

Collecting data gathers no moss.

Data collection has become easy in the modern world, but more than just gathering is required. Businesses must know how to get the most value from this data. Analysis helps companies to understand the available information, derive actionable insights, and make informed decisions. Businesses should thoroughly know the data analysis process inside and out to refine operations, improve customer service, and track performance.

Learn more about the various stages of data analysis and implement them to drive success.

Devyani Mehta

Devyani Mehta is a content marketing specialist at G2. She has worked with several SaaS startups in India, which has helped her gain diverse industry experience. At G2, she shares her insights on complex cybersecurity concepts like web application firewalls, RASP, and SSPM. Outside work, she enjoys traveling, cafe hopping, and volunteering in the education sector. Connect with her on LinkedIn.

Understanding regression analysis: overview and key uses

Last updated

22 August 2024

Reviewed by

Miroslav Damyanov

Regression analysis is a fundamental statistical method that helps us predict and understand how different factors (aka independent variables) influence a specific outcome (aka dependent variable).

Imagine you're trying to predict the value of a house. Regression analysis can help you create a formula to estimate the house's value by looking at variables like the home's size and the neighborhood's average income. This method is crucial because it allows us to predict and analyze trends based on data.

While that example is straightforward, the technique can be applied to more complex situations, offering valuable insights into fields such as economics, healthcare, marketing, and more.

- 3 uses for regression analysis in business

Businesses can use regression analysis to improve nearly every aspect of their operations. When used correctly, it's a powerful tool for learning how adjusting variables can improve outcomes. Here are three applications:

1. Prediction and forecasting

Predicting future scenarios can give businesses significant advantages. No method can guarantee absolute certainty, but regression analysis offers a reliable framework for forecasting future trends based on past data. Companies can apply this method to anticipate future sales for financial planning purposes and predict inventory requirements for more efficient space and cost management. Similarly, an insurance company can employ regression analysis to predict the likelihood of claims for more accurate underwriting.

2. Identifying inefficiencies and opportunities

Regression analysis can help us understand how the relationships between different business processes affect outcomes. Its ability to model complex relationships means that regression analysis can accurately highlight variables that lead to inefficiencies, which intuition alone may not do. Regression analysis allows businesses to improve performance significantly through targeted interventions. For instance, a manufacturing plant experiencing production delays, machine downtime, or labor shortages can use regression analysis to determine the underlying causes of these issues.

3. Making data-driven decisions

Regression analysis can enhance decision-making in any situation where an outcome depends on measurable factors. For example, a company can analyze the impact of various price points on sales volume to find the best pricing strategy for its products. Similarly, understanding the factors behind buying behavior can help segment customers into buyer personas for improved targeting and messaging.

- Types of regression models

There are several types of regression models, each suited to a particular purpose. Picking the right one is vital to getting the correct results.

Simple linear regression analysis is the simplest form of regression analysis. It examines the relationship between exactly one dependent variable and one independent variable, fitting a straight line to the data points on a graph.
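As a minimal sketch of what "fitting a straight line" means, the snippet below computes the least-squares slope and intercept by hand. The house sizes and prices are hypothetical numbers made up for illustration, and `fit_simple_linear` is an illustrative helper rather than a library function.

```python
def fit_simple_linear(xs, ys):
    """Return (slope, intercept) of the least-squares line through the points."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Slope = covariation of x and y divided by the variation of x
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    # The fitted line always passes through the point (mean_x, mean_y)
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Hypothetical data: home size (square feet) and sale price (thousands of dollars)
sizes = [1000, 1500, 2000, 2500, 3000]
prices = [200, 270, 340, 410, 480]

slope, intercept = fit_simple_linear(sizes, prices)

# Estimate the price of an 1,800-square-foot home from the fitted line
predicted_price = intercept + slope * 1800
print(f"price = {intercept:.1f} + {slope:.3f} * size")
print(f"estimated price for 1,800 sq ft: {predicted_price:.1f}")
```

The slope is the estimated change in price for each additional square foot, which is the kind of quantity a business reader usually cares about most.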

Multiple regression analysis examines how two or more independent variables affect a single dependent variable. It extends simple linear regression and requires a more complex algorithm.
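The sketch below shows one way to fit a multiple regression with NumPy's least-squares solver. The data is hypothetical: the prices are generated from a known formula with no noise, purely so the recovered coefficients are easy to check. Real data would not fit this cleanly.

```python
import numpy as np

# Hypothetical predictors: home size (sq ft) and neighborhood average income
# (thousands of dollars)
X = np.array([
    [1000, 50],
    [1500, 65],
    [2000, 55],
    [2500, 80],
    [3000, 70],
    [1800, 90],
], dtype=float)

# Assumed "true" relationship, used only to generate illustrative prices
y = 20 + 0.1 * X[:, 0] + 2.0 * X[:, 1]

# Add a column of ones so the model can estimate an intercept
design = np.column_stack([np.ones(len(X)), X])

# Solve the least-squares problem for all coefficients at once
coef, _, _, _ = np.linalg.lstsq(design, y, rcond=None)
intercept, b_size, b_income = coef

# Predict the price of a 2,200 sq ft home in a neighborhood with income 75
predicted = intercept + b_size * 2200 + b_income * 75
print(f"intercept={intercept:.2f}, size={b_size:.3f}, income={b_income:.2f}")
print(f"predicted price: {predicted:.1f}")
```

Each coefficient estimates the effect of one variable while the others are held fixed, which is what distinguishes this from running several simple regressions separately.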

Multivariate linear regression is suitable when there are multiple dependent variables. It allows you to analyze how the independent variables influence several outcomes at once.

Logistic regression is relevant when the dependent variable is categorical, such as binary outcomes (e.g., true/false or yes/no). Logistic regression estimates the probability of a category based on the independent variables.
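To make the idea of "estimating a probability" concrete, here is a minimal sketch of a one-variable logistic regression fitted with plain gradient descent. The data, learning rate, and step count are assumptions chosen for illustration. In practice you would likely use a library such as scikit-learn or statsmodels instead.

```python
import math


def sigmoid(z):
    """Map any real number to a probability between 0 and 1."""
    return 1.0 / (1.0 + math.exp(-z))


def fit_logistic(xs, ys, learning_rate=0.1, steps=5000):
    """Fit p(y=1 | x) = sigmoid(b0 + b1 * x) by gradient descent on the log loss."""
    b0, b1 = 0.0, 0.0
    n = len(xs)
    for _ in range(steps):
        grad0 = grad1 = 0.0
        for x, y in zip(xs, ys):
            error = sigmoid(b0 + b1 * x) - y  # predicted probability minus outcome
            grad0 += error
            grad1 += error * x
        b0 -= learning_rate * grad0 / n
        b1 -= learning_rate * grad1 / n
    return b0, b1


# Hypothetical data: a score from 1 to 8 and a yes/no outcome (1 = yes)
xs = [1, 2, 3, 4, 5, 6, 7, 8]
ys = [0, 0, 0, 1, 0, 1, 1, 1]

b0, b1 = fit_logistic(xs, ys)
p_low = sigmoid(b0 + b1 * 2)   # estimated probability of "yes" at a low score
p_high = sigmoid(b0 + b1 * 7)  # estimated probability of "yes" at a high score
print(f"P(yes | score=2) = {p_low:.2f}, P(yes | score=7) = {p_high:.2f}")
```

The output is a probability rather than a hard yes/no answer, so the analyst still has to choose a cutoff that suits the decision at hand.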

- 6 mistakes people make with regression analysis

Ignoring key variables is a common mistake when working with regression analysis. Here are a few more pitfalls to avoid:

1. Overfitting the model

If a model is too complex, it can become too flexible and lead to a problem known as overfitting. This is an especially significant problem when the independent variables don't actually affect the dependent variable, though it can happen whenever the model over-adjusts to fit all the variables. In such cases, the model starts memorizing noise rather than meaningful patterns. When this happens, the model fits the training data almost perfectly but fails to generalize to new, unseen data, rendering it ineffective for prediction or inference.

2. Underfitting the model

A simpler model is less likely to mistake noise for a real pattern. However, if the model is too simplistic, it will face the opposite problem: underfitting. In this case, the model will fail to capture the underlying patterns in the data, meaning it won't perform well on either the training data or new, unseen data. This lack of complexity prevents the model from making accurate predictions or drawing meaningful inferences.

3. Neglecting model validation

Model validation is how you can be sure that a model isn't overfitting or underfitting. Imagine teaching a child to read. If you always read the same book to the child, they might memorize it and recite it perfectly, making it seem like they’ve learned to read. However, if you give them a new book, they might struggle and be unable to read it.

This scenario is similar to a model that performs well on its training data but fails with new data. Model validation involves testing the model with data it hasn’t seen before. If the model performs well on this new data, that indicates it has truly learned to generalize. On the other hand, if the model performs well only on the training data and poorly on new data, it has overfitted to the training data, much like the child who can only recite the memorized book.
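The sketch below illustrates validation with a simple holdout set. It fits a straight line and a deliberately over-complex degree-7 polynomial to the same eight hypothetical training points, then scores both on points neither model has seen. The data and the fixed "noise" values are made up so the example is reproducible.

```python
import numpy as np


def mse(coefs, x, y):
    """Mean squared error of a polynomial (given by its coefficients) on data x, y."""
    return float(np.mean((np.polyval(coefs, x) - y) ** 2))


# Hypothetical training data: a linear trend (y = 2x + 1) plus fixed noise
x_train = np.arange(8, dtype=float)
noise_train = np.array([0.5, -0.4, 0.3, -0.6, 0.4, -0.3, 0.6, -0.5])
y_train = 2 * x_train + 1 + noise_train

# Hypothetical holdout data from the same trend, at points not used for fitting
x_test = np.arange(7) + 0.5
noise_test = np.array([0.2, -0.3, 0.1, -0.2, 0.3, -0.1, 0.2])
y_test = 2 * x_test + 1 + noise_test

simple_model = np.polyfit(x_train, y_train, deg=1)   # straight line
complex_model = np.polyfit(x_train, y_train, deg=7)  # passes through every training point

simple_train, simple_test = mse(simple_model, x_train, y_train), mse(simple_model, x_test, y_test)
complex_train, complex_test = mse(complex_model, x_train, y_train), mse(complex_model, x_test, y_test)

print(f"line:     train MSE={simple_train:.3f}, holdout MSE={simple_test:.3f}")
print(f"degree 7: train MSE={complex_train:.3f}, holdout MSE={complex_test:.3f}")
```

The complex model looks better on the training data but worse on the holdout data, which is the signature of overfitting that validation is meant to catch.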

4. Multicollinearity

Regression analysis works best when the independent variables are genuinely independent. However, sometimes, two or more variables are highly correlated. This multicollinearity can make it hard for the model to accurately determine each variable's impact.

If a model gives poor results, such as unstable coefficient estimates, checking for correlated variables may reveal the issue. You can fix it by removing one or more correlated variables or by using principal component analysis (PCA), which transforms the correlated variables into a set of uncorrelated components.
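One common way to check for correlated variables is the variance inflation factor (VIF), which measures how well each independent variable can be predicted from the others. The sketch below computes it with NumPy on hypothetical housing data. The `variance_inflation_factors` helper is illustrative, and the cutoff people use (often 5 or 10) is a rule of thumb, not a strict rule.

```python
import numpy as np


def variance_inflation_factors(X):
    """VIF_j = 1 / (1 - R^2_j), where R^2_j comes from regressing column j on the rest."""
    n, k = X.shape
    vifs = []
    for j in range(k):
        target = X[:, j]
        others = np.delete(X, j, axis=1)
        design = np.column_stack([np.ones(n), others])  # include an intercept
        coef, *_ = np.linalg.lstsq(design, target, rcond=None)
        residuals = target - design @ coef
        r_squared = 1 - residuals.var() / target.var()
        vifs.append(1.0 / (1.0 - r_squared))
    return vifs


# Hypothetical predictors: size (sq ft), number of rooms, and age of home (years).
# Size and rooms move together; age is largely unrelated to both.
X = np.array([
    [1000, 3, 30],
    [1500, 4, 5],
    [2000, 4, 22],
    [2500, 6, 12],
    [3000, 6, 40],
    [3500, 8, 8],
], dtype=float)

vifs = variance_inflation_factors(X)
for name, vif in zip(["size", "rooms", "age"], vifs):
    print(f"{name}: VIF = {vif:.1f}")
```

Here size and rooms should show high VIFs because each largely duplicates the other, which suggests keeping only one of them or combining them.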

5. Misinterpreting coefficients

Errors are not always due to the model itself; human error is common. These mistakes often involve misinterpreting the results. For example, someone might misunderstand the units of measure and draw incorrect conclusions. Another frequent issue in scientific analysis is confusing correlation and causation. On its own, regression analysis reveals associations between variables; it does not prove that one causes the other.

6. Poor data quality

The adage “garbage in, garbage out” strongly applies to regression analysis. When low-quality data is fed into a model, it analyzes noise rather than meaningful patterns. Poor data quality can manifest as missing values, unrepresentative data, outliers, and measurement errors. Additionally, the dataset may exclude essential variables that significantly affect the results. All these issues can distort the relationships between variables and lead to misleading results.

- What are the assumptions that must hold for regression models?

To correctly interpret the output of a regression model, the following key assumptions about the underlying data process must hold:

The relationship between variables is linear.

There must be homoscedasticity, meaning the variance of the error term remains constant across all values of the explanatory variables.

The explanatory variables are not highly correlated with one another (no multicollinearity).

The error terms (residuals) are normally distributed.
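Assumptions like constant variance can be checked by looking at the residuals, the gaps between the observed values and the fitted line. The sketch below is a rough heuristic, not a formal statistical test. It fits a line to hypothetical data whose scatter grows with x, then compares the residual spread in the upper half of the x range with the lower half. Formal alternatives, such as the Breusch-Pagan test, are available in statistical packages.

```python
def fit_line(xs, ys):
    """Least-squares slope and intercept for one independent variable."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    slope = (sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
             / sum((x - mean_x) ** 2 for x in xs))
    return slope, mean_y - slope * mean_x


def mean_square(values):
    return sum(v * v for v in values) / len(values)


# Hypothetical data, sorted by x: the scatter around the trend grows as x increases
xs = list(range(1, 11))
noise = [0.1, -0.1, 0.2, -0.2, 0.3, -1.5, 2.0, -2.5, 3.0, -3.5]
ys = [3 * x + e for x, e in zip(xs, noise)]

slope, intercept = fit_line(xs, ys)
residuals = [y - (intercept + slope * x) for x, y in zip(xs, ys)]

# Compare residual spread for large x against small x.
# A ratio far from 1 hints that the constant-variance assumption may not hold.
half = len(residuals) // 2
spread_ratio = mean_square(residuals[half:]) / mean_square(residuals[:half])
print(f"residual spread ratio (upper half / lower half): {spread_ratio:.1f}")
```

A ratio close to 1 is what you would hope to see. A large ratio like the one here suggests the model's error estimates should be treated with caution.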

- Real-life examples of regression analysis

Let's turn our attention to how a few industries use regression analysis to improve their outcomes:

Regression analysis has many applications in healthcare, but two of the most common are improving patient outcomes and optimizing resources.

Hospitals need to use resources effectively to ensure the best patient outcomes. Regression models can help forecast patient admissions, equipment and supply usage, and more. These models allow hospitals to plan and maximize their resources.

The finance industry benefits from being able to predict stock prices, economic trends, and financial risks. Regression analysis can help finance professionals make informed decisions about these topics.

For example, analysts often use regression analysis to assess how changes to GDP, interest rates, and unemployment rates impact stock prices. Armed with this information, they can make more informed portfolio decisions.

The banking industry also uses regression analysis. When a loan underwriter determines whether to grant a loan, regression analysis allows them to calculate the probability that a potential borrower will repay the loan.

Imagine how much more effective a company's marketing efforts could be if it could predict customer behavior. Regression analysis allows marketers to do so with a degree of accuracy. For example, they can analyze how price, advertising spend, and product features together influence sales. Once they've identified key sales drivers, they can adjust their strategy to maximize revenue. They may approach this analysis in stages.

For instance, if they determine that ad spend is the biggest driver, they can apply regression analysis to data specific to advertising efforts. Doing so allows them to improve the ROI of ads. The opposite may also be true. If ad spending has little to no impact on sales, something is wrong that regression analysis might help identify.

- Regression analysis tools and software

Regression analysis by hand isn't practical: the process involves large datasets and complex calculations. Computers make even the most complex regression analysis possible, and many machine learning algorithms can be viewed as elaborate extensions of regression. Many tools exist to help users run these analyses.

MATLAB is a commercial programming language and numeric computing environment, and the open-source project Octave aims to implement much of the same functionality. Both are designed for complex mathematical operations, including regression analysis. MATLAB's tools for computation and visualization have made it very popular in academia, engineering, and industry for calculating regressions and displaying the results. MATLAB also integrates with add-on toolboxes, so developers can extend its functionality and build application-specific solutions.

Python is a more general-purpose programming language than the previous examples, but many libraries are available that extend its functionality. For regression analysis, packages like scikit-learn and statsmodels provide the computational tools necessary for the job, while packages like pandas and Matplotlib can handle large amounts of data and display the results. Python is a simple-to-learn, easy-to-read programming language, which can give it a leg up over the more dedicated math and statistics languages.

SAS (Statistical Analysis System) is a commercial software suite for advanced analytics, multivariate analysis, business intelligence, and data management. It includes a procedure called PROC REG that allows users to efficiently perform regression analysis on their data. The software is well-known for its data-handling capabilities, extensive documentation, and technical support. These factors make it a common choice for large-scale enterprise use and industries requiring rigorous statistical analysis.

Stata is another statistical software package. It provides an integrated environment for data analysis, data management, and graphics, and it includes commands for a range of regression analysis tasks. Its popularity is due to its ease of use, reproducibility, and ability to handle complex datasets intuitively. The extensive documentation helps beginners get started quickly. Stata is widely used in academic research, economics, sociology, and political science.

Most people know Excel, but you might not know that Microsoft's spreadsheet software has an add-in called Analysis ToolPak that can perform basic linear regression and visualize the results. Excel is not an excellent choice for more complex regression or very large datasets. But for those with basic needs who only want to analyze smaller datasets quickly, it's a convenient option already in many tech stacks.

SPSS (Statistical Package for the Social Sciences) is a versatile statistical analysis software widely used in social science, business, and health. It offers tools for various analyses, including regression, making it accessible to users through its user-friendly interface. SPSS enables users to manage and visualize data, perform complex analyses, and generate reports without coding. Its extensive documentation and support make it popular in academia and industry, allowing for efficient handling of large datasets and reliable results.

What is a regression analysis in simple terms?

Regression analysis is a statistical method used to estimate and quantify the relationship between a dependent variable and one or more independent variables. It helps determine the strength and direction of these relationships, allowing predictions about the dependent variable based on the independent variables and providing insights into how each independent variable impacts the dependent variable.

What are the main types of variables used in regression analysis?

Dependent variables: typically continuous (e.g., house price) or binary (e.g., yes/no outcomes).

Independent variables: can be continuous, categorical, binary, or ordinal.

What does a regression analysis tell you?

Regression analysis identifies the relationships between a dependent variable and one or more independent variables. It quantifies the strength and direction of these relationships, allowing you to predict the dependent variable based on the independent variables and understand the impact of each independent variable on the dependent variable.


What Is Regression?
