
CarryCKW/AES-NPCR


Automated-essay-scoring-via-pairwise-contrastive-regression.

Created by Jiayi Xie*, Kaiwei Cai*, Li Kong, Junsheng Zhou, and Weiguang Qu. This repository contains the ASAP dataset and the PyTorch implementation for Automated Essay Scoring (COLING 2022, Oral).

We use 5-fold cross-validation and split the ASAP dataset into 5 folds, as shown in the path "./dataset/asap".
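A 5-fold split of this kind can be sketched as follows. This is a minimal illustration, assuming essays are indexed 0..n-1 and shuffled with a fixed seed; `k_fold_indices` and `cross_validation_splits` are hypothetical names, and the repository's actual fold files under ./dataset/asap may be organized differently (for example, with a separate dev split per fold).

```python
import random


def k_fold_indices(n_items, k=5, seed=42):
    """Shuffle item indices with a fixed seed and deal them into k folds.

    Fold sizes differ by at most one item.
    """
    if k < 2 or k > n_items:
        raise ValueError("need 2 <= k <= n_items")
    indices = list(range(n_items))
    random.Random(seed).shuffle(indices)
    return [indices[i::k] for i in range(k)]


def cross_validation_splits(folds):
    """Yield (train, test) index lists, holding out each fold exactly once."""
    for held_out, test in enumerate(folds):
        train = [idx for f, fold in enumerate(folds) if f != held_out for idx in fold]
        yield train, test


if __name__ == "__main__":
    folds = k_fold_indices(n_items=23, k=5)
    for fold_id, (train, test) in enumerate(cross_validation_splits(folds)):
        print(fold_id, len(train), len(test))
```

Dealing shuffled indices round-robin keeps the folds balanced without extra bookkeeping; a stratified split by score would be a reasonable alternative if score classes are imbalanced.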

Code for AES-NPCR

Requirements

PyTorch 1.7.1, Python 3.7.9

Pretrained Model

BERT, RoBERTa, and XLNet can be used; the default is BERT.

The code will be refactored.


Automated Essay Scoring by Capturing Relative Writing Quality


Hongbo Chen, Jungang Xu, Ben He, Automated Essay Scoring by Capturing Relative Writing Quality , The Computer Journal , Volume 57, Issue 9, September 2014, Pages 1318–1330, https://doi.org/10.1093/comjnl/bxt117


Automated essay-scoring (AES) systems utilize computer techniques and algorithms to automatically rate essays written in an educational setting, by which the workload of human raters is greatly reduced. AES is usually addressed as a classification or regression problem, where classical machine learning algorithms such as K-nearest neighbor and support vector machines are applied. In this paper, we argue that essay rating is based on the comparison of writing quality between essays and treat AES rather as a ranking problem by capturing the difference in writing quality between essays. We propose a rank-based approach that trains an essay-rating model by learning to rank algorithms, which have been widely used in many information retrieval and social Web mining tasks. Various linguistic and statistical features are utilized to facilitate the learning algorithms. Extensive experiments on two public English essay datasets, Automated Student Assessment Prize and Chinese Learners English Corpus, show that our proposed approach based on pairwise learning outperforms previous classification or regression-based methods on all 15 topics. Finally, analysis on the importance of the features extracted reveals that content, organization and structure are the main factors that affect the ratings of essays written by native English speakers, while non-native speakers are prone to losing ratings on improper term usage, syntactic complexity and grammar errors.
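The pairwise idea in this abstract can be illustrated with a minimal sketch: every pair of essays with different scores becomes a training example on the difference of their feature vectors, and a linear model learns which essay of the pair should rank higher. This is a generic pairwise logistic (RankNet-style) ranker on made-up two-dimensional features, not necessarily the learning-to-rank algorithm or the feature set used by Chen et al.; all function names are hypothetical.

```python
import math
from itertools import combinations
from typing import List, Sequence, Tuple


def pairwise_examples(features: Sequence[Sequence[float]],
                      scores: Sequence[float]) -> List[Tuple[List[float], float]]:
    """Turn scored essays into (feature difference, label) pairs.

    The label is 1.0 when the first essay of the pair has the higher score.
    Tied pairs are skipped because they carry no ordering information.
    """
    examples = []
    for i, j in combinations(range(len(scores)), 2):
        if scores[i] == scores[j]:
            continue
        diff = [a - b for a, b in zip(features[i], features[j])]
        examples.append((diff, 1.0 if scores[i] > scores[j] else 0.0))
    return examples


def train_pairwise_ranker(features, scores, lr=0.1, epochs=200) -> List[float]:
    """Fit weights w so that w.x_i > w.x_j whenever essay i outscored essay j."""
    w = [0.0] * len(features[0])
    examples = pairwise_examples(features, scores)
    for _ in range(epochs):
        for diff, label in examples:
            margin = sum(wk * dk for wk, dk in zip(w, diff))
            prob_first_better = 1.0 / (1.0 + math.exp(-margin))
            grad = prob_first_better - label  # derivative of the log loss w.r.t. the margin
            w = [wk - lr * grad * dk for wk, dk in zip(w, diff)]
    return w


def rank_score(w: Sequence[float], x: Sequence[float]) -> float:
    """Higher means the essay is predicted to be better written."""
    return sum(wk * xk for wk, xk in zip(w, x))


if __name__ == "__main__":
    # Hypothetical feature vectors (e.g., normalized length and vocabulary richness).
    toy_features = [[1.0, 0.0], [2.0, 0.5], [3.0, 0.2], [4.0, 1.0]]
    toy_scores = [1, 2, 3, 4]
    weights = train_pairwise_ranker(toy_features, toy_scores)
    order = sorted(range(len(toy_scores)), key=lambda k: rank_score(weights, toy_features[k]))
    print(order)
```

The model only learns a relative ordering; mapping ranks back to grades on a rubric scale is a separate step that this sketch does not attempt.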


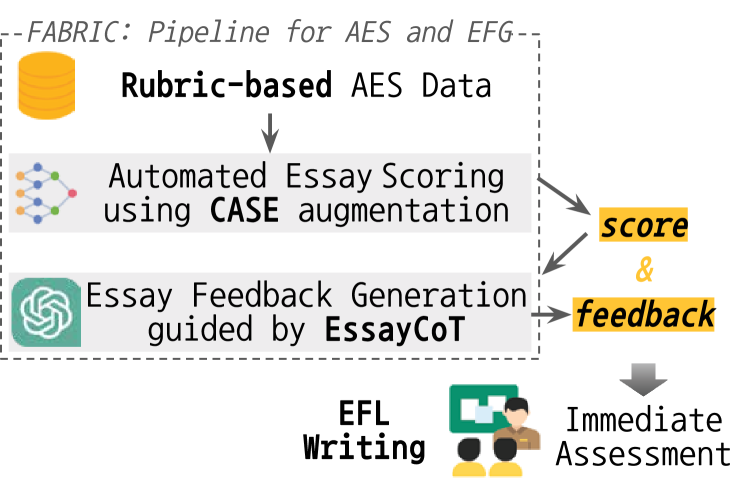

FABRIC: Automated Scoring and Feedback Generation for Essays

Automated essay scoring (AES) provides a useful tool for students and instructors in writing classes by generating essay scores in real time. However, previous AES models provide neither specific rubric-based scores nor feedback on how to improve the essays, which can be even more important for learning than the overall scores. We present FABRIC, a pipeline that helps students and instructors in English writing classes by automatically generating 1) overall scores, 2) specific rubric-based scores, and 3) detailed feedback on how to improve the essays. Under the guidance of English education experts, we chose content, organization, and language as the rubrics for the specific scores. The first component of the FABRIC pipeline is DREsS, a real-world Dataset for Rubric-based Essay Scoring. The second component is CASE, a Corruption-based Augmentation Strategy for Essays, with which we improve the accuracy of the baseline model by 45.44%. The third component is EssayCoT, the Essay Chain-of-Thought prompting strategy, which uses scores predicted by the AES model to generate better feedback. We evaluate the new dataset DREsS and the augmentation strategy CASE quantitatively and show significant improvements over models trained with existing datasets. We evaluate the feedback generated by EssayCoT with English education experts and show significant improvements in the helpfulness of the feedback across all rubrics. Lastly, we evaluate the FABRIC pipeline with students in a college English writing class, who rated the generated scores and feedback with an average of 6 on a 7-point Likert scale.

1 Introduction

In writing education, automated essay scoring (AES) benefits both students and instructors by scoring students' essays in real time. Many students fear exposing their errors to instructors; therefore, immediate assessment of their essays with AES can reduce their anxiety and help them improve their writing (Sun and Fan, 2022). For instructors, AES models can ease the burdensome process of evaluation and offer a means to validate their own evaluation, ensuring accuracy and consistency in assessment.

Existing AES models provide valuable overall scores, but they are insufficient for both learners and instructors desiring more details. Several studies have underscored English learners’ preference for specific and direct feedback Sidman-Taveau and Karathanos-Aguilar ( 2015 ); Karathanos and Mena ( 2009 ) . As students rarely seek clarifications on unclear feedback and may even disregard it, scoring and feedback must be clear and specific for easy comprehension Sidman-Taveau and Karathanos-Aguilar ( 2015 ) . However, existing AES models cannot be trained to provide detailed rubric-based scores because the datasets either do not have any rubric-specific scores, or when they do, the rubrics and criteria for scoring vary significantly among different datasets.

We introduce FABRIC, Feedback generation guided with AES By Rubric-based dataset Incorporating ChatGPT, which combines an AES model with an LLM. FABRIC comprises three major contributions: DREsS, a real-world Dataset for Rubric-based Essay Scoring; CASE, a Corruption-based Augmentation Strategy for Essays; and EssayCoT, the Essay Chain-of-Thought prompting strategy for feedback generation.

DREsS includes 1,782 essays collected from EFL learners, each scored by instructors according to three rubrics: content, organization, and language. Furthermore, we rescale existing rubric-based datasets to align with our three primary rubrics. We propose this combination of a newly collected real-classroom dataset and existing datasets rescaled to the same rubrics and standards as a standard rubric-based AES dataset. CASE is a novel data augmentation method that enhances the performance of the AES model. It employs three rubric-specific strategies to augment the essay dataset with corruption, and training with CASE yields a model whose quadratic weighted kappa (QWK) score exceeds that of the baseline model by 26.37%. EssayCoT is a prompting strategy that guides essay feedback generation, a new task built on top of AES. Instead of relying on manually composed few-shot exemplars, EssayCoT leverages essay scores automatically predicted by the AES model when generating feedback. According to an assessment by 13 English education experts, feedback generated with EssayCoT prompting is significantly preferred over, and more helpful than, feedback from standard prompting. Lastly, we deploy FABRIC in an essay editing platform for 33 English as a Foreign Language (EFL) students.

In summary, the main contributions of this work are as follows:

- We propose a standard rubric-based dataset that combines our newly collected real-classroom DREsS dataset (1.7K) with unified samples of existing datasets (2.9K).

- We introduce corruption-based augmentation strategies for essays (CASE), building 3.9K content, 15.7K organization, and 0.9K language synthetic samples for AES model training.

- We introduce EssayCoT prompting for essay feedback generation, which significantly improves the helpfulness of feedback.

- We propose FABRIC, a pipeline that generates both scores and feedback by leveraging DREsS, CASE, and EssayCoT, and we deploy it to explore its practical application in English writing education.

2 Related Work

2.1 Automated Essay Scoring

Automated essay scoring (AES) systems evaluate and score student essays written in response to a given prompt. However, only a few rubric-based AES datasets are available, and their utility is limited because their rubrics are inconsistent. Furthermore, AES datasets must be annotated by experts in English education, since scoring requires not only proficiency in English but also pedagogical knowledge of English writing. To the best of our knowledge, no real-world AES dataset has yet been established, as existing AES datasets use scores annotated by non-experts in English education.

2.1.1 AES Datasets

The ASAP dataset (https://www.kaggle.com/c/asap-aes), which includes eight different prompts, is widely used in AES tasks. Six of the eight prompt sets (P1-6) have a single overall score, and only two (P7-8) are rubric-based. These two rubric-based prompt sets consist of 1,569 and 723 essays, respectively. The two sets also have distinct rubrics and score ranges, which makes it difficult to leverage both for training rubric-based models. Although the essays were written by Grade 7-10 students in the US, they were graded by non-expert annotators.

Mathias and Bhattacharyya (2018) manually annotated different attributes of the essays in ASAP Prompts 1 to 6, which originally have only a single overall score, producing ASAP++. ASAP++ P1-2 are argumentative essays, while P3-6 are source-dependent essays. However, most samples in ASAP++ were annotated by a single non-expert annotator, and the annotators included non-native speakers of English. Moreover, each prompt set of ASAP++ has different attributes, which would need to be made more generalizable to fully leverage the dataset for AES models.

ICNALE Edited Essays

ICNALE Edited Essays (EE) v3.0, released by Ishikawa (2018), presents rubric-based essay evaluation scores and fully edited versions of essays written by EFL learners from 10 countries in Asia. The essays were evaluated on 5 rubrics (content, organization, vocabulary, language use, and mechanics), following the ESL Composition Profile of Jacobs et al. (1981). Although the essays were written by EFL learners, they were rated and edited by only five native English speakers who are not experts in English writing education. In addition, the dataset is not openly accessible and consists of only 639 samples.

The TOEFL11 corpus from ETS, introduced by Blanchard et al. (2013), contains 12K TOEFL iBT essays, which are no longer publicly accessible. TOEFL11 provides only a general score for each essay at 3 levels (low/mid/high), which is insufficient for building a well-performing AES system.

2.1.2 AES Models

Recent AES models fall into two distinct types: holistic scoring models and rubric-based scoring models.

Holistic AES

Most previous studies used the ASAP dataset for training and evaluation, aiming to predict only the overall score of the essay (Tay et al., 2018; Cozma et al., 2018; Wang et al., 2018; Yang et al., 2020). The Enhanced AI Scoring Engine (EASE, https://github.com/edx/ease) is a commonly used open-source AES system based on feature extraction and statistical methods. In addition, Taghipour and Ng (2016) and Xie et al. (2022) released models based on recurrent neural networks and neural pairwise contrastive regression (NPCR), respectively. However, only a few studies have publicly released their models and code, highlighting the need for more publicly available data and further validation of existing models.
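To make the pairwise contrastive idea concrete, here is a minimal sketch of the inference step as we understand NPCR from Xie et al. (2022): the model predicts the score difference between a target essay and several reference essays with known scores, and the target's score is the average of the reference scores shifted by those differences. The neural network that produces the differences is omitted, and `npcr_style_prediction` is a hypothetical name, not the repository's API.

```python
from statistics import mean
from typing import Sequence


def npcr_style_prediction(predicted_diffs: Sequence[float],
                          reference_scores: Sequence[float]) -> float:
    """Combine pairwise score differences into a single score for a target essay.

    predicted_diffs[k] is a model's estimate of (target score - reference_scores[k]),
    so each reference essay yields its own estimate of the target score; the
    estimates are averaged to reduce the variance of any single comparison.
    """
    if not predicted_diffs or len(predicted_diffs) != len(reference_scores):
        raise ValueError("need one predicted difference per reference essay")
    return mean(ref + diff for ref, diff in zip(reference_scores, predicted_diffs))


if __name__ == "__main__":
    # Hypothetical differences for three reference essays scored 3.0, 4.5, and 3.5.
    print(npcr_style_prediction([0.5, -1.0, 0.0], [3.0, 4.5, 3.5]))
```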

Rubric-based AES

The scarcity of publicly available rubric-based AES datasets poses significant obstacles to the advancement of AES research. There are industry-driven services such as IntelliMetric® Rudner et al. ( 2006 ) and E-rater® Attali and Burstein ( 2006 ) and datasets from ETS Blanchard et al. ( 2013 ) , but none of them are accessible to the public. In order to facilitate AES research in the academic community, it is crucial to release a publicly available rubric-based AES dataset and baseline model.

2.2 Essay Feedback Generation

Feedback Generation

Although recent studies suggest that LLMs can facilitate educational innovation by providing real-time and individualized feedback (Yan et al., 2023; Kasneci et al., 2023), to the best of our knowledge, no study has addressed detailed approaches to feedback generation in education using LLMs. Peng et al. (2023) demonstrate that LLM performance on task-oriented dialogue and open-domain question answering improves dramatically with access to golden knowledge, suggesting the benefit of providing LLMs with more specific and targeted knowledge. We therefore assume that appropriate golden knowledge, such as rubric explanations and accurate essay scores, can nudge LLMs to generate better feedback on essay writing.

Feedback Quality Evaluation

Zheng et al. (2023) evaluate the quality of LLM-based assistants' responses to open-ended questions holistically, considering four criteria: helpfulness, relevance, accuracy, and level of detail. Wang et al. (2023) evaluate responses generated by current LLMs in terms of helpfulness and acceptability, indicating which response is better as a win, tie, or loss. Jia et al. (2021) classify the features of peer-review comments into three types: suggestion, problem, and positive tone.

3 FABRIC Pipeline

We have examined the specific needs of the stakeholders in EFL education for both scores and feedback on essays through a group interview with six students and a written interview with three instructors. The interview details are in Appendix A.1 . Along with AES for essay scores, we propose an essay feedback generation task to meet the needs of EFL learners for immediate and specific feedback on their essays. Specifically, the feedback generation task involves understanding a student’s essay and generating feedback under three rubrics: content, organization, and language Cumming ( 1990 ); Ozfidan and Mitchell ( 2022 ) . The objective is to provide feedback that is helpful, relevant, accurate, and specific Zheng et al. ( 2023 ) for both students and instructors. In this section, we present FABRIC, a serial combination of rubric-based AES models (§ 3.1 ) and rubric-based feedback generation using EssayCoT (§ 3.2 ).

3.1 Rubric-based AES Models

We fine-tune BERT for each rubric using 1.7K essays from DREsS (§ 3.1.1), 2.9K essays from standardized data (§ 3.1.2), and 1.3K essays augmented by CASE (§ 3.1.3). BERT-based architectures (Devlin et al., 2019) are the state-of-the-art approach in AES, and other pre-trained language models (PLMs) bring no significant improvements in AES (Xie et al., 2022). Experimental results of rubric-based AES with different PLMs are provided in Appendix B.2.

3.1.1 Dataset Collection

Dataset Details

DREsS includes 1,782 essays on 22 prompts, with an average of 313.36 words and 21.19 sentences per essay. Each sample in DREsS includes the student's essay, the essay prompt, rubric-based scores (content, organization, language), the total score, the class division (intermediate, advanced), and the test type (pre-test, post-test). The essays are scored on a range of 1 to 5, in increments of 0.5, on three rubrics: content, organization, and language. We chose these three rubrics as standard criteria for scoring EFL essays, following previous studies in language education (Cumming, 1990; Ozfidan and Mitchell, 2022). Detailed explanations of the rubrics are shown in Table 1. The essays were written by undergraduate students enrolled in EFL writing courses at a college in South Korea from 2020 to 2023. Most students are Korean, and their ages range from 18 to 22, with an average of 19.7. This college offers two divisions of the EFL writing class, intermediate and advanced, assigned according to students' TOEFL writing scores (15-18 for intermediate and 19-21 for advanced). During the course, students write a 40-minute timed in-class essay at both the start (pre-test) and the end (post-test) of the semester to measure their improvement.
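The sample structure described above can be sketched as a small record type that validates the score grid. This is a minimal sketch: `DressRecord` and its field names are hypothetical, not the released file format, and the check that the total equals the sum of the three rubric scores is an assumption (consistent with the 3 to 15 total range reported in §4.1).

```python
from dataclasses import dataclass

RUBRICS = ("content", "organization", "language")
DIVISIONS = ("intermediate", "advanced")
TEST_TYPES = ("pre-test", "post-test")


def on_rubric_grid(score: float) -> bool:
    """True if the score lies in [1.0, 5.0] and is a multiple of 0.5."""
    return 1.0 <= score <= 5.0 and (score * 2).is_integer()


@dataclass(frozen=True)
class DressRecord:
    prompt: str
    essay: str
    content: float
    organization: float
    language: float
    total: float
    division: str   # class division: "intermediate" or "advanced"
    test_type: str  # "pre-test" or "post-test"

    def __post_init__(self):
        for rubric in RUBRICS:
            value = getattr(self, rubric)
            if not on_rubric_grid(value):
                raise ValueError(f"{rubric} score {value} is not in 1.0-5.0 with 0.5 steps")
        # Assumption: the total score is the sum of the three rubric scores.
        if abs(self.total - (self.content + self.organization + self.language)) > 1e-9:
            raise ValueError("total does not equal the sum of the rubric scores")
        if self.division not in DIVISIONS:
            raise ValueError(f"unknown division {self.division!r}")
        if self.test_type not in TEST_TYPES:
            raise ValueError(f"unknown test type {self.test_type!r}")
```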

| Rubric | Description |
|---|---|
| Content | Paragraphs are well-developed and relevant to the argument, supported with strong reasons and examples. |
| Organization | The argument is very effectively structured and developed, making it easy for the reader to follow the ideas and understand how the writer is building the argument. Paragraphs use coherence devices effectively while focusing on a single main idea. |
| Language | The writing displays sophisticated control of a wide range of vocabulary and collocations. The essay follows grammar and usage rules throughout the paper. Spelling and punctuation are correct throughout the paper. |

Annotator Details

We collect scoring data from 11 instructors who taught the students who wrote the essays. All annotators are experts in English education or linguistics and are qualified to teach EFL writing courses at a college in South Korea. To ensure consistent and reliable scoring, all instructors participated in training sessions with a scoring guide and in norming sessions, where they developed a consensus on scores using two sample essays. Additionally, a one-way ANOVA with Tukey's HSD test found no significant difference among the instructors' score distributions across the whole dataset at a significance level of 0.05.

3.1.2 Standardizing the Existing Data

We standardize three existing rubric-based datasets to align with the three rubrics in DREsS: content, organization, and language. We unify ASAP sets 7 and 8, which are the only rubric-based sets in ASAP. ASAP prompt set 7 includes four rubrics (ideas, organization, style, and convention), while prompt set 8 contains six rubrics (ideas and content, organization, voice, word choice, sentence fluency, and convention). Both sets provide scores ranging from 0 to 3. For the language rubric, we first create synthetic labels based on a weighted average: in set 7, we assign a weight of 0.66 to style and 0.33 to convention, and in set 8, we assign equal weights to voice, word choice, sentence fluency, and convention. For the content and organization rubrics, we use the corresponding existing rubrics (ideas for content, and organization for organization). We then rescale the scores of all rubrics to a range of 1 to 5. We repeat the same process with ASAP++ sets 1 and 2, which have the same attributes as ASAP sets 7 and 8. Similarly, for the ICNALE EE dataset, we unify vocabulary, language use, and mechanics into the language rubric with weights of 0.4, 0.5, and 0.1, respectively. In consolidating the writing assessment criteria, we consulted EFL education experts and grouped together components that evaluate similar aspects.
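The two standardization steps, a weighted average followed by rescaling, can be sketched as below. This is a minimal sketch under stated assumptions: the weights are normalized by their sum (so 0.66/0.33 behaves as 2/3 and 1/3), the rescaling to 1 to 5 is assumed to be linear min-max since the text does not specify it, and whether labels are then rounded to the 0.5 grid is left open. All function names are hypothetical.

```python
from typing import Sequence


def weighted_average(values: Sequence[float], weights: Sequence[float]) -> float:
    """Weighted mean, normalized by the sum of the weights."""
    if not values or len(values) != len(weights):
        raise ValueError("values and weights must be non-empty and the same length")
    return sum(v * w for v, w in zip(values, weights)) / sum(weights)


def rescale(score: float, old_min: float, old_max: float,
            new_min: float = 1.0, new_max: float = 5.0) -> float:
    """Linearly map a score from [old_min, old_max] onto [new_min, new_max]."""
    return new_min + (score - old_min) * (new_max - new_min) / (old_max - old_min)


def asap7_language(style: float, convention: float) -> float:
    """Synthetic language label for ASAP set 7 (traits scored 0-3), on the 1-5 scale."""
    return rescale(weighted_average([style, convention], [0.66, 0.33]), 0.0, 3.0)


def asap8_language(voice: float, word_choice: float,
                   sentence_fluency: float, convention: float) -> float:
    """Synthetic language label for ASAP set 8 with equal weights, assuming a 0-3 trait range."""
    raw = weighted_average([voice, word_choice, sentence_fluency, convention], [1, 1, 1, 1])
    return rescale(raw, 0.0, 3.0)


if __name__ == "__main__":
    print(asap7_language(style=3.0, convention=0.0))
    print(asap8_language(3.0, 3.0, 0.0, 0.0))
```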

3.1.3 Synthetic Data Construction

To overcome data scarcity, we construct synthetic data for rubric-based AES. We introduce a corruption-based augmentation strategy for essays (CASE), which starts with a well-written essay and introduces a certain proportion of sentence-level errors into the resulting synthetic essay. In the subsequent experiments, we define well-written essays as essays that scored 4.5 or 5.0 out of 5.0 on each criterion.

(1)

Here, $\text{n}(S_c)$ is the number of corrupted sentences in the synthetic essay, $\text{n}(S_E)$ is the number of sentences in the well-written essay that serves as the basis for the synthetic essay, and $x_i$ denotes the score of the synthetic essay.

Content

We substitute randomly sampled sentences in well-written essays with out-of-domain sentences from essays on different prompts. This is based on the assumption that sentences in well-written essays support the given prompt's content, so sentences from essays on different prompts convey different content. Therefore, more substitutions imply a higher level of corruption in the content of the essay.

Organization

We swap two randomly sampled sentences in a well-written essay and repeat this process according to the synthetic score, assuming that sentences in well-written essays are systematically ordered. More swaps imply a higher level of corruption in the organization of the essay.

Language

We substitute randomly sampled sentences with ungrammatical sentences and repeat this process according to the synthetic score. We extract 605 ungrammatical sentences from the BEA-2019 shared task data for grammatical error correction (GEC) (Bryant et al., 2019). We define ungrammatical sentences as those with more than 10 edits, which corresponds to the 98th percentile. More substitutions introduce more corruption into the grammar of the essay. We set a high threshold for ungrammatical sentences because current GEC datasets may contain inherent noise, such as erroneous or incomplete corrections (Rothe et al., 2021).
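The three corruption operations can be sketched as two helpers: sentence substitution (content uses a pool of sentences from essays on other prompts, language uses a pool of ungrammatical sentences) and sentence swapping (organization). This is a minimal sketch, not the authors' code: the number of operations is passed in directly rather than computed from the synthetic score via Equation (1), and the helper names are hypothetical.

```python
import random
from typing import List, Sequence


def substitute_sentences(sentences: Sequence[str], pool: Sequence[str],
                         n_corrupt: int, rng: random.Random) -> List[str]:
    """Replace n_corrupt distinct, randomly chosen positions with sentences drawn from pool.

    Content corruption: pool holds sentences from essays on other prompts.
    Language corruption: pool holds ungrammatical sentences (e.g., more than 10 GEC edits).
    """
    corrupted = list(sentences)  # never mutate the source essay
    for position in rng.sample(range(len(corrupted)), n_corrupt):
        corrupted[position] = rng.choice(pool)
    return corrupted


def swap_sentences(sentences: Sequence[str], n_swaps: int,
                   rng: random.Random) -> List[str]:
    """Organization corruption: swap two randomly chosen sentences, n_swaps times."""
    corrupted = list(sentences)
    if len(corrupted) < 2:
        return corrupted
    for _ in range(n_swaps):
        i, j = rng.sample(range(len(corrupted)), 2)
        corrupted[i], corrupted[j] = corrupted[j], corrupted[i]
    return corrupted


if __name__ == "__main__":
    rng = random.Random(0)
    essay = ["S1.", "S2.", "S3.", "S4.", "S5."]
    print(substitute_sentences(essay, ["Off-topic sentence."], 2, rng))
    print(swap_sentences(essay, 3, rng))
```

Sampling positions without replacement guarantees that exactly `n_corrupt` sentences are replaced, whereas repeated swaps may occasionally undo each other, which is one reason the organization strategy can tolerate more operations.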

3.1.4 Data Statistics

| Dataset | Content | Organization | Language |
|---|---|---|---|
| DREsS | 1,782 | 1,782 | 1,782 |
| ASAP P7 | 1,569 | 1,569 | 1,569 |
| ASAP P8 | 723 | 723 | 723 |
| ASAP++ P1 | 1,785 | 1,785 | 1,785 |
| ASAP++ P2 | 1,800 | 1,800 | 1,800 |
| ICNALE EE | 639 | 639 | 639 |
| CASE | 3,924 | 15,696 | 981 |
| Total | 12,222 | 23,994 | 9,279 |

Table 2 shows the number of samples per rubric, which we use for training and validating our AES model. The data consist of our newly released DREsS dataset, unified samples of existing datasets (ASAP Prompts 7-8, ASAP++ Prompts 1-2, and ICNALE EE), and synthetic data augmented with CASE. The amount of CASE synthetic data was determined through an ablation study exploring the optimal number of samples.

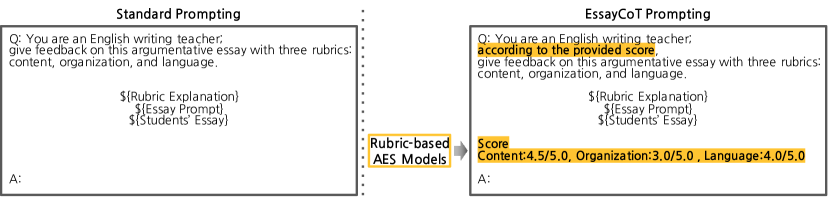

3.2 EssayCoT

We introduce EssayCoT (Figure 2), a simple but efficient prompting method that enhances essay feedback generation. Chain-of-Thought (CoT) prompting (Wei et al., 2022) is a few-shot technique that improves problem-solving by incorporating intermediate reasoning steps that guide LLMs toward the final answer. However, writing few-shot examples by hand requires significant time and effort. In particular, CoT may be inefficient for essay feedback generation, given the substantial length of essays and feedback. In contrast, EssayCoT performs CoT in a zero-shot setting without additional human effort, since it leverages essay scores automatically predicted by the AES model. It uses the three rubric-based scores on content, organization, and language as the rationale for essay feedback generation.
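The core of EssayCoT, supplying predicted rubric scores as the rationale in a zero-shot prompt, can be sketched as a prompt builder. The template wording, `build_essaycot_prompt`, and its parameters are hypothetical; the actual prompt used with gpt-3.5-turbo is the one shown in Figure 2, and the call to the LLM itself is omitted.

```python
from typing import Dict


def build_essaycot_prompt(essay_prompt: str, essay: str,
                          scores: Dict[str, float],
                          rubric_descriptions: Dict[str, str]) -> str:
    """Assemble a zero-shot prompt that supplies AES-predicted rubric scores as the rationale."""
    lines = [
        f"Essay prompt: {essay_prompt}",
        f"Student essay: {essay}",
        "",
        "Scores predicted by an automated essay scoring model (1.0 to 5.0):",
    ]
    for rubric, score in scores.items():
        lines.append(f"- {rubric}: {score:.1f} ({rubric_descriptions[rubric]})")
    lines += [
        "",
        "Using these scores as the rationale, write specific feedback on the essay's "
        "content, organization, and language, explaining how the student can improve.",
    ]
    return "\n".join(lines)


if __name__ == "__main__":
    predicted = {"content": 3.5, "organization": 3.0, "language": 2.5}  # from the AES model
    descriptions = {
        "content": "Paragraphs are well-developed and relevant to the argument.",
        "organization": "The argument is effectively structured and easy to follow.",
        "language": "Vocabulary, grammar, spelling, and punctuation are well controlled.",
    }
    print(build_essaycot_prompt("Is technology making us lonelier?", "Nowdays ...", predicted, descriptions))
```

Because the scores come from the AES model, no human-written exemplars are needed; a low language score, for instance, signals to the LLM that the language feedback should focus on errors rather than praise.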

4 Experimental Results

In this section, we present the performance of the AES model with CASE (§ 4.1) and of essay feedback generation with EssayCoT (§ 4.2).

4.1 Automated Essay Scoring

| Model | Data | Content | Organization | Language | Total |
|---|---|---|---|---|---|
| gpt-3.5-turbo | N/A | 0.239 | 0.371 | 0.246 | 0.307 |
| EASE (SVR) | DREsS | - | - | - | 0.360 |
| NPCR | DREsS | - | - | - | 0.507 |
| BERT | DREsS | 0.414 | 0.311 | 0.487 | 0.471 |
| | + unified ASAP, ASAP++, ICNALE EE | 0.599 | 0.593 | 0.587 | 0.551 |
| | + synthetic data from CASE | 0.642 | 0.750 | 0.607 | 0.685 |

The performance of AES models is mainly evaluated by the agreement between predicted scores and gold-standard scores, conventionally measured with the quadratic weighted kappa (QWK). Table 3 shows the experimental results of augmenting the combination of DREsS and the unified datasets (ASAP, ASAP++, and ICNALE EE) with CASE. Detailed experimental settings are described in Appendix B.1. Fine-tuned BERT improves consistently as the training data expand. The model trained with the combination of our approaches reaches a total-score QWK of 0.685, a 45.44% relative improvement over the BERT baseline trained only on DREsS (0.471), and it also outperforms the other baselines, demonstrating its effectiveness.
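For reference, QWK can be computed from scratch as below. This is a standard implementation of Cohen's kappa with quadratic disagreement weights, offered as a sketch rather than the evaluation script used in the paper; the helper `dress_score_to_class` (a hypothetical name) maps DREsS rubric scores from 1.0 to 5.0 in 0.5 steps onto integer classes 0 to 8 so they can be compared.

```python
from typing import Sequence


def quadratic_weighted_kappa(rater_a: Sequence[int], rater_b: Sequence[int],
                             min_rating: int, max_rating: int) -> float:
    """Cohen's kappa with quadratic disagreement weights between two integer ratings."""
    if not rater_a or len(rater_a) != len(rater_b):
        raise ValueError("ratings must be non-empty and the same length")
    n_classes = max_rating - min_rating + 1
    if n_classes < 2:
        raise ValueError("need at least two rating classes")
    for rating in list(rater_a) + list(rater_b):
        if not min_rating <= rating <= max_rating:
            raise ValueError(f"rating {rating} outside [{min_rating}, {max_rating}]")
    # Observed agreement matrix: rows are rater A's class, columns rater B's.
    observed = [[0] * n_classes for _ in range(n_classes)]
    for a, b in zip(rater_a, rater_b):
        observed[a - min_rating][b - min_rating] += 1
    total = len(rater_a)
    hist_a = [sum(row) for row in observed]
    hist_b = [sum(observed[i][j] for i in range(n_classes)) for j in range(n_classes)]
    numerator = denominator = 0.0
    for i in range(n_classes):
        for j in range(n_classes):
            weight = (i - j) ** 2 / (n_classes - 1) ** 2
            expected = hist_a[i] * hist_b[j] / total  # agreement expected by chance
            numerator += weight * observed[i][j]
            denominator += weight * expected
    if denominator == 0:
        raise ValueError("kappa is undefined when expected disagreement is zero")
    return 1.0 - numerator / denominator


def dress_score_to_class(score: float) -> int:
    """Map a 1.0-5.0 rubric score in 0.5 steps to an integer class 0..8."""
    return round((score - 1.0) * 2)
```

Quadratic weights penalize a prediction two classes away four times as much as one class away, which suits ordinal essay scores better than plain accuracy.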

The results of existing holistic AES models underscore the need to examine them on new datasets. The QWK scores of EASE and NPCR drop from 0.699 to 0.360 and from 0.817 to 0.507, respectively, compared with the scores of the same models trained on ASAP. This suggests that (1) our dataset may be more complex, since ASAP has 4 to 6 score classes whereas DREsS has 9 classes per rubric (scores from 1 to 5 in increments of 0.5) and 25 classes for the total score (3 to 15), and (2) the existing models might be overfitted to ASAP. Another limitation of these models is that they cannot compute rubric-based scores.
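The class counts quoted above follow from the score ranges and the 0.5 increment; a small helper (hypothetical name) makes the arithmetic explicit.

```python
def num_score_classes(low: float, high: float, step: float) -> int:
    """Number of distinct scores from low to high (inclusive) in increments of step."""
    return round((high - low) / step) + 1


if __name__ == "__main__":
    print(num_score_classes(1.0, 5.0, 0.5))   # each DREsS rubric: 9
    print(num_score_classes(3.0, 15.0, 0.5))  # DREsS total score: 25
```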

Asking gpt-3.5-turbo to score essays yielded the worst performance of all models, with high variance among essays that share the same ground-truth score. Detailed results for ChatGPT under different prompt settings are provided in Table 7 in Appendix B.3.

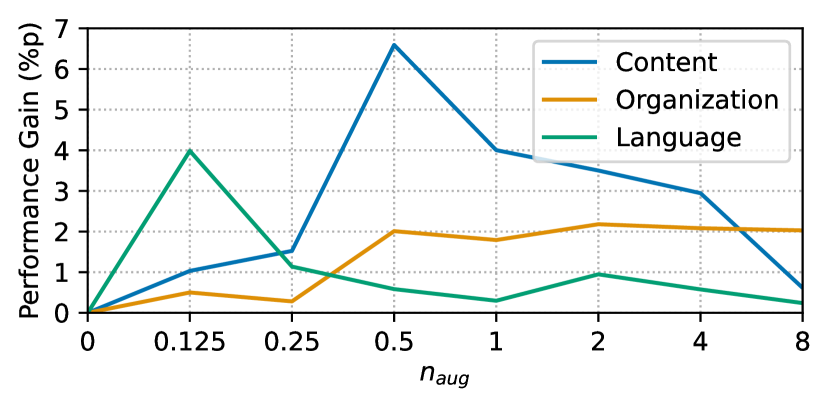

We perform an ablation study to explore the effects of CASE and to find the optimal number of CASE operations for each rubric. In Figure 3, we investigate how $n_{aug}$, the number of synthetic samples generated per score class for each original sample, affects performance on all rubrics for $n_{aug} \in \{0.125, 0.25, 0.5, 1, 2, 4, 8\}$. CASE on the content, organization, and language rubrics performs best at $n_{aug}$ = 0.5, 2, and 0.125, respectively, generating 4.5, 18, and 1.125 times as many synthetic essay-score pairs as original samples. We suppose that the rubric-specific augmentation strategies and the small size of the original data affect the optimal number of CASE operations. Organization, whose corruption happens within the essay and is independent of the size of the original data, shows the highest $n_{aug}$. Content, whose corrupted sentences are sampled from 874 well-written essays with 21.2 sentences on average, shows a higher $n_{aug}$ than language, whose corrupted sentences are sampled from 605 ungrammatical sentences.
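The multipliers 4.5, 18, and 1.125 follow from multiplying $n_{aug}$ by the 9 score classes of a rubric. The sketch below reproduces that arithmetic under this reading; the constant and function names are hypothetical.

```python
NUM_RUBRIC_CLASSES = 9  # rubric scores 1.0-5.0 in steps of 0.5


def synthetic_pairs_per_original(n_aug: float, num_classes: int = NUM_RUBRIC_CLASSES) -> float:
    """Synthetic essay-score pairs produced per original essay when n_aug pairs are made per score class."""
    return n_aug * num_classes


if __name__ == "__main__":
    best_n_aug = {"content": 0.5, "organization": 2, "language": 0.125}
    for rubric, n_aug in best_n_aug.items():
        print(rubric, synthetic_pairs_per_original(n_aug))
```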

4.2 Essay Feedback Generation

We adapt the criteria that Zheng et al. (2023) used to evaluate LLM responses and redefine them for the domain of feedback generation. To overcome the limitation of previous research, which relied on holistic evaluation, we assess feedback quality on each criterion separately:

- Level of detail: the feedback is specific and supported with details.
- Accuracy: the feedback content provides accurate information about the essay.
- Relevance: the feedback is provided in accordance with the essay criteria.
- Helpfulness: the feedback helps students improve the quality of their writing.

| Standard Prompting | EssayCoT Prompting |
|---|---|
| The language used in the essay is sophisticated and displays a wide range of vocabulary and collocations. The writer follows grammar and usage rules throughout the paper, and spelling and punctuation are correct. The essay is well-written and easy to read. Overall, the language used in the essay is strong and effectively communicates the writer’s argument. | The essay displays some control of vocabulary and grammar, but there are also several errors and awkward phrasings throughout. For example, the writer uses the phrase (‘nowdays’ instead of ‘nowadays’), and there are several instances of awkward phrasing and word choice. Additionally, there are several errors in punctuation and capitalization. The essay would benefit from more careful proofreading and editing, as well as more attention to precise and effective language use. |

Table 4 shows two language feedback examples for the same essay, which has a language score of 2.5 out of 5.0. These examples were generated with different prompts: a standard prompt without the score and an EssayCoT prompt with the score. The green text indicates detailed support and examples drawn from the essay (level of detail), and the blue text describes the overall evaluation of the essay with respect to the language criterion. Comparing the blue text, EssayCoT suggests improvements (helpfulness) such as ‘errors and awkward phrasing’ and ‘punctuation and capitalization’, while standard prompting only praises language use such as ‘vocabulary and collocations’. Given that the essay's language score is 2.5 out of 5.0, the feedback generated by EssayCoT appears more accurate. The orange text in the feedback generated by the standard prompt is irrelevant to the language criterion (relevance) and uses expressions similar to the organization explanation in Table 1.
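The only difference between the two settings is whether the rubric score is part of the prompt. A minimal sketch of that difference is shown below. The template wording, `HEADER`, and `build_feedback_prompt` are hypothetical illustrations, not the prompts used in the paper; the essay text is a placeholder.

```python
from typing import Optional

# Hypothetical wording for illustration only; not the paper's actual prompts.
HEADER = (
    "You are an instructor in an EFL writing course.\n"
    "Rubric criterion: {criterion}\n"
    "Essay:\n{essay}\n"
)


def build_feedback_prompt(essay: str, criterion: str,
                          score: Optional[float] = None,
                          max_score: float = 5.0) -> str:
    """Standard prompt when score is None; EssayCoT-style prompt otherwise."""
    prompt = HEADER.format(criterion=criterion, essay=essay)
    if score is None:
        # Standard prompting: the model sees only the essay and the criterion.
        prompt += "Write feedback on the essay for this criterion.\n"
    else:
        # EssayCoT-style: the score is stated first and the model is asked to
        # justify it, so the generated feedback is conditioned on the score.
        prompt += f"Score for this criterion: {score} out of {max_score}\n"
        prompt += ("Explain why the essay received this score and suggest "
                   "specific improvements.\n")
    return prompt


standard = build_feedback_prompt("<student essay text>", "Language")
essay_cot = build_feedback_prompt("<student essay text>", "Language", score=2.5)
```

Conditioning on a low score such as 2.5 gives the model a reason to look for weaknesses, which matches the observation that standard prompting drifts toward generic praise.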

To evaluate the quality of the feedback generated by these two prompting techniques (standard vs. EssayCoT), we recruited 13 English education experts holding a Secondary School Teacher’s Certificate (Grade II) for English Language, licensed by the Ministry of Education, Korea. The annotators rated both types of rubric-based feedback for the same essay on a 7-point Likert scale for each rubric. They then indicated their overall preference between the two feedback types by choosing one of three options: A is better, B is better, or no difference. We randomly sampled 20 essays balanced across total scores and assigned 7 annotators to each essay.

Results show that 52.86% of the annotators preferred feedback from EssayCoT prompting, compared to only 28.57% who preferred feedback from standard prompting. The remaining 18.57% reported no difference between the two types of feedback. This difference is statistically significant (p < 0.05, chi-squared test). Figure 4 presents further evaluation results for the two types of feedback. EssayCoT prompting performs better in terms of accuracy, relevance, and especially helpfulness, where its advantage is statistically significant across all rubrics. Without essay scores, standard prompting tends to generate general compliments rather than criticisms and suggestions, whereas EFL learners prefer constructive corrective feedback to positive feedback, according to the qualitative interview described in Appendix A.1.
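The reported percentages are consistent with 140 votes (20 essays × 7 annotators) split 74 / 40 / 26. The sketch below reconstructs those counts and runs a Pearson chi-squared goodness-of-fit test against a uniform distribution over the three options. This test design is an assumption: the paper does not specify, for example, whether "no difference" votes were included. For 2 degrees of freedom the chi-squared survival function has the closed form $e^{-x/2}$, so no statistics library is needed.

```python
import math


def chi_squared_uniform(counts):
    """Pearson chi-squared statistic against a uniform expected distribution."""
    n = sum(counts)
    expected = n / len(counts)
    return sum((c - expected) ** 2 / expected for c in counts)


def chi2_sf_df2(x):
    """Survival function P(X >= x) of the chi-squared distribution with df = 2."""
    return math.exp(-x / 2)


# Counts reconstructed from the reported percentages (assumption: 140 votes)
votes = {"EssayCoT": 74, "Standard": 40, "No difference": 26}
total = sum(votes.values())
shares = {k: round(100 * v / total, 2) for k, v in votes.items()}
stat = chi_squared_uniform(list(votes.values()))  # about 26.11
p_value = chi2_sf_df2(stat)                       # about 2e-6, well below 0.05
```

With `scipy` available, `scipy.stats.chisquare(list(votes.values()))` computes the same statistic and p-value.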

The only area where standard prompting performed better was the level of detail in content feedback. This suggests that prompting without scores gives the model more freedom, which enables it to generate more detailed feedback. Nevertheless, because standard prompting scored lower on all other criteria, we suppose that this freedom is not particularly helpful for essay feedback generation. The comparison of content feedback in Appendix B.4 shows that standard prompting provided only a specific summary of the essay instead of suggestions or criticisms. Furthermore, it even provided inaccurate information in language feedback: as shown in Table 4, the feedback generated with standard prompting incorrectly stated that the spelling is correct.

5 Prototype Deployment and Evaluation

We deployed our pipeline in the college’s EFL writing courses using the RECIPE (Han et al., 2023) platform to investigate how students and instructors use and perceive it. The participants were 33 students from EFL writing courses (intermediate: 11, advanced: 22). The cohort comprised 32 Korean students and 1 Italian student, of whom 12 were female and 21 were male.

Students were asked to self-assess their essays, given a short description of each rubric as a guide. They then received the scores and feedback generated by our system, evaluated the helpfulness of the scores and feedback, and engaged further by conversing with ChatGPT to better understand the feedback and improve their essays. The full set of questions posed to students is described in Appendix C.1.1.

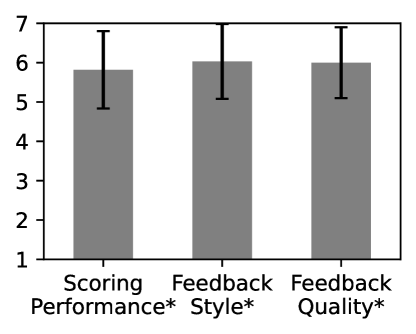

Figure 5 presents the EFL writing course students’ responses regarding the perceived performance of our pipeline and their learning experiences with its outputs. On average, students rated the performance of the AES model, as well as the style and quality of the generated feedback, at 6 out of 7 (Figure 5(a)). They reported that their confidence in their essay quality and their understanding of each writing rubric improved significantly after engaging with our platform embedded with FABRIC (Figure 5(b)).

6 Discussion

In this work, we propose a fully automated pipeline that scores students’ essays and generates feedback on them. As investigated in § A.1 and simulated in § 5, this pipeline could assist both EFL learners and instructors by promptly generating rubric-based scores and feedback. In this section, we discuss plausible usage scenarios for advancing FABRIC and integrating the pipeline into general education contexts.

Human-in-the-Loop Pipeline

Although our main findings show the potential for fully automating essay scoring and feedback generation, we also suggest a direct extension of our work: integrating a human-in-the-loop component into the pipeline to enhance the teaching and learning experience. Because instructors can modify the style or content of the feedback, FABRIC could be enhanced with a personalized feedback generation model that aligns with instructors’ pedagogical objectives and teaching styles. Students could then receive more trustworthy and reliable feedback, which empowers them to engage actively in the learning process. In addition, feedback generation could be extended to provide personalized feedback that aligns with students’ different needs and learning styles.

Check for Students’ Comprehension

Instructors can incorporate our pipeline into their class materials to identify recurring issues in students’ essays, potentially saving significant time compared to manual review. Our pipeline can be used to detect similar feedback given to many different students, which often indicates common areas of difficulty. By identifying these common issues, instructors can create targeted, individualized educational content that addresses the specific needs of their students, thereby enhancing the overall learning experience.
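One simple way to surface "similar feedback given to many different students" is to compare generated feedback texts pairwise. The sketch below uses word-set Jaccard similarity as a deliberately simple stand-in. It is not part of FABRIC as described. The threshold and all names are hypothetical, and sentence embeddings would likely group paraphrased feedback better.

```python
import re
from itertools import combinations


def tokens(text):
    """Lowercased word set of a feedback string."""
    return set(re.findall(r"[a-z']+", text.lower()))


def jaccard(a, b):
    """Overlap of two word sets (0.0 when both are empty)."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def similar_feedback_pairs(feedback, threshold=0.5):
    """Return pairs of student IDs whose feedback overlaps at or above threshold."""
    toks = {sid: tokens(text) for sid, text in feedback.items()}
    return [
        (a, b)
        for a, b in combinations(sorted(toks), 2)
        if jaccard(toks[a], toks[b]) >= threshold
    ]


feedback = {
    "s1": "Several errors in punctuation and capitalization.",
    "s2": "There are several errors in punctuation and capitalization.",
    "s3": "The thesis is clear and well supported.",
}
pairs = similar_feedback_pairs(feedback)
```

Pairs that recur across many students could then be grouped, for example with a union-find pass, to point instructors to common problem areas.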

7 Conclusion

In conclusion, this paper contributes to English writing education by releasing new data, introducing novel augmentation strategies for automated essay scoring, and proposing EssayCoT prompting for essay feedback generation. Recognizing the limitations of previous holistic AES studies, we present DREsS, a dataset specifically designed for rubric-based essay scoring. Additionally, we propose CASE, corruption-based augmentation strategies for essays, which use DREsS to generate pairs of synthetic essays and corresponding scores by injecting feasible sentence-level errors. Through in-depth focus group interviews with EFL learners, we identify a strong demand for both scores and feedback in EFL writing education, which leads us to define a novel task, essay feedback generation. To address this task, we propose FABRIC, a comprehensive pipeline for generating scores and feedback on student essays, which uses essay scores for feedback generation through Essay Chain-of-Thought (EssayCoT) prompting. Our results show that data augmented with CASE significantly improves AES performance, reaching QWK scores of about 0.6, and that English education experts significantly prefer feedback generated by EssayCoT prompting with essay scores over feedback from standard prompting. Finally, we deployed FABRIC in real-world EFL writing education to explore students’ practical use of AI-generated scores and feedback. We envision several scenarios for implementing our pipeline in real-world classrooms, taking human-computer interaction into consideration. We hope this work inspires researchers and practitioners to delve deeper into NLP-driven innovation in English writing education, with the ultimate goal of advancing the field.

Limitations

Our augmentation strategy mainly starts from well-written essays and generates erroneous essays with their corresponding scores, so it is difficult to synthesize well-written essays with our method. We believe that LLMs, which have demonstrated strong writing capabilities, especially in English, could reliably produce well-written essays.

We utilize ChatGPT, a black-box language model, for feedback generation. As a result, our pipeline lacks transparency and does not provide explicit justifications or rationales for the feedback generated. We acknowledge the need for further research to develop models that produce more explainable feedback, leaving room for future exploration.

Ethics Statement

We expect that this paper can considerably contribute to the development of NLP for good within the field of NLP-driven assistance in EFL writing education. All studies in this research project were performed under our institutional review board (IRB) approval. We have thoroughly addressed ethical considerations throughout our study, focusing on (1) collecting essays from students, (2) validating our pipeline in EFL courses, and (3) releasing the data.

To prevent any potential effect on their scores or grades, we asked students to share the essays they had written during the EFL courses only after the courses had ended.

There was no discrimination based on any demographic attribute, including gender and age, when recruiting and selecting EFL students and instructors. We set the wage per session above the 2023 minimum wage in the Republic of Korea (KRW 9,260 ≈ USD 7.25; https://www.minimumwage.go.kr/). Participants were free to participate in or drop out of the experiment, and their decision did not affect the scores or grades they received.

We carefully considered the potential risks of releasing a dataset containing human-written essays with respect to privacy and personal information. To address this concern, we will filter out all sensitive information related to privacy and personal information through (1) rule-based code and (2) human inspection. In addition, we will require a checklist, and only researchers or practitioners who submit the checklist will be able to access our data.

- Attali and Burstein (2006) Yigal Attali and Jill Burstein. 2006. Automated essay scoring with e-rater® v.2. The Journal of Technology, Learning and Assessment, 4(3).

- Beltagy et al. (2020) Iz Beltagy, Matthew E. Peters, and Arman Cohan. 2020. Longformer: The long-document transformer.

- Black et al. (2022) Sidney Black, Stella Biderman, Eric Hallahan, Quentin Anthony, Leo Gao, Laurence Golding, Horace He, Connor Leahy, Kyle McDonell, Jason Phang, Michael Pieler, Usvsn Sai Prashanth, Shivanshu Purohit, Laria Reynolds, Jonathan Tow, Ben Wang, and Samuel Weinbach. 2022. GPT-NeoX-20B: An open-source autoregressive language model. In Proceedings of BigScience Episode #5 – Workshop on Challenges & Perspectives in Creating Large Language Models, pages 95–136, virtual+Dublin. Association for Computational Linguistics.

- Blanchard et al. (2013) Daniel Blanchard, Joel Tetreault, Derrick Higgins, Aoife Cahill, and Martin Chodorow. 2013. TOEFL11: A corpus of non-native English. ETS Research Report Series, 2013(2):i–15.

- Bryant et al. (2019) Christopher Bryant, Mariano Felice, Øistein E. Andersen, and Ted Briscoe. 2019. The BEA-2019 shared task on grammatical error correction. In Proceedings of the Fourteenth Workshop on Innovative Use of NLP for Building Educational Applications, pages 52–75, Florence, Italy. Association for Computational Linguistics.

- Cozma et al. (2018) Mădălina Cozma, Andrei Butnaru, and Radu Tudor Ionescu. 2018. Automated essay scoring with string kernels and word embeddings. In Proceedings of the 56th Annual Meeting of the Association for Computational Linguistics (Volume 2: Short Papers), pages 503–509, Melbourne, Australia. Association for Computational Linguistics.

- Cumming (1990) Alister Cumming. 1990. Expertise in evaluating second language compositions. Language Testing, 7(1):31–51.

- Devlin et al. (2019) Jacob Devlin, Ming-Wei Chang, Kenton Lee, and Kristina Toutanova. 2019. BERT: Pre-training of deep bidirectional transformers for language understanding. In Proceedings of the 2019 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Volume 1 (Long and Short Papers), pages 4171–4186, Minneapolis, Minnesota. Association for Computational Linguistics.

- Han et al. (2023) Jieun Han, Haneul Yoo, Yoonsu Kim, Junho Myung, Minsun Kim, Hyunseung Lim, Juho Kim, Tak Yeon Lee, Hwajung Hong, So-Yeon Ahn, and Alice Oh. 2023. RECIPE: How to integrate ChatGPT into EFL writing education.

- Ishikawa (2018) Shinichiro Ishikawa. 2018. The ICNALE edited essays: A dataset for analysis of L2 English learner essays based on a new integrative viewpoint. English Corpus Studies, 25:117–130.

- Jacobs et al. (1981) Holly Jacobs, Stephen Zinkgraf, Deanna Wormuth, V. Hartfiel, and Jane Hughey. 1981. Testing ESL Composition: A Practical Approach. ERIC.

- Jia et al. (2021) Qinjin Jia, Jialin Cui, Yunkai Xiao, Chengyuan Liu, Parvez Rashid, and Edward F. Gehringer. 2021. All-in-one: Multi-task learning BERT models for evaluating peer assessments.

- Karathanos and Mena (2009) K Karathanos and DD Mena. 2009. Enhancing the academic writing skills of ELL future educators: A faculty action research project. English learners in higher education: Strategies for supporting students across academic disciplines, pages 1–13.

- Kasneci et al. (2023) Enkelejda Kasneci, Kathrin Sessler, Stefan Küchemann, Maria Bannert, Daryna Dementieva, Frank Fischer, Urs Gasser, Georg Groh, Stephan Günnemann, Eyke Hüllermeier, Stephan Krusche, Gitta Kutyniok, Tilman Michaeli, Claudia Nerdel, Jürgen Pfeffer, Oleksandra Poquet, Michael Sailer, Albrecht Schmidt, Tina Seidel, Matthias Stadler, Jochen Weller, Jochen Kuhn, and Gjergji Kasneci. 2023. ChatGPT for good? On opportunities and challenges of large language models for education. Learning and Individual Differences, 103:102274.

- Mathias and Bhattacharyya (2018) Sandeep Mathias and Pushpak Bhattacharyya. 2018. ASAP++: Enriching the ASAP automated essay grading dataset with essay attribute scores. In Proceedings of the Eleventh International Conference on Language Resources and Evaluation (LREC 2018), Miyazaki, Japan. European Language Resources Association (ELRA).

- Ozfidan and Mitchell (2022) Burhan Ozfidan and Connie Mitchell. 2022. Assessment of students’ argumentative writing: A rubric development. Journal of Ethnic and Cultural Studies, 9(2):121–133.

- Peng et al. (2023) Baolin Peng, Michel Galley, Pengcheng He, Hao Cheng, Yujia Xie, Yu Hu, Qiuyuan Huang, Lars Liden, Zhou Yu, Weizhu Chen, and Jianfeng Gao. 2023. Check your facts and try again: Improving large language models with external knowledge and automated feedback.

- Rothe et al. (2021) Sascha Rothe, Jonathan Mallinson, Eric Malmi, Sebastian Krause, and Aliaksei Severyn. 2021. A simple recipe for multilingual grammatical error correction. In Proceedings of the 59th Annual Meeting of the Association for Computational Linguistics and the 11th International Joint Conference on Natural Language Processing (Volume 2: Short Papers), pages 702–707, Online. Association for Computational Linguistics.

- Rudner et al. (2006) Lawrence M. Rudner, Veronica Garcia, and Catherine Welch. 2006. An evaluation of IntelliMetric™ essay scoring system. The Journal of Technology, Learning and Assessment, 4(4).

- Sidman-Taveau and Karathanos-Aguilar (2015) Rebekah Sidman-Taveau and Katya Karathanos-Aguilar. 2015. Academic writing for graduate-level English as a second language students: Experiences in education. The CATESOL Journal, 27(1):27–52.

- Sun and Fan (2022) Bo Sun and Tingting Fan. 2022. The effects of an AWE-aided assessment approach on business English writing performance and writing anxiety: A contextual consideration. Studies in Educational Evaluation, 72:101123.

- Taghipour and Ng (2016) Kaveh Taghipour and Hwee Tou Ng. 2016. A neural approach to automated essay scoring. In Proceedings of the 2016 Conference on Empirical Methods in Natural Language Processing, pages 1882–1891, Austin, Texas. Association for Computational Linguistics.

- Tay et al. (2018) Yi Tay, Minh Phan, Luu Anh Tuan, and Siu Cheung Hui. 2018. SkipFlow: Incorporating neural coherence features for end-to-end automatic text scoring. Proceedings of the AAAI Conference on Artificial Intelligence, 32(1).

- Wang et al. (2023) Hongru Wang, Rui Wang, Fei Mi, Zezhong Wang, Ruifeng Xu, and Kam-Fai Wong. 2023. Chain-of-thought prompting for responding to in-depth dialogue questions with LLM.

- Wang et al. (2018) Yucheng Wang, Zhongyu Wei, Yaqian Zhou, and Xuanjing Huang. 2018. Automatic essay scoring incorporating rating schema via reinforcement learning. In Proceedings of the 2018 Conference on Empirical Methods in Natural Language Processing, pages 791–797, Brussels, Belgium. Association for Computational Linguistics.

- Wei et al. (2022) Jason Wei, Xuezhi Wang, Dale Schuurmans, Maarten Bosma, brian ichter, Fei Xia, Ed Chi, Quoc V Le, and Denny Zhou. 2022. Chain-of-thought prompting elicits reasoning in large language models. In Advances in Neural Information Processing Systems, volume 35, pages 24824–24837. Curran Associates, Inc.

- Xie et al. (2022) Jiayi Xie, Kaiwei Cai, Li Kong, Junsheng Zhou, and Weiguang Qu. 2022. Automated essay scoring via pairwise contrastive regression. In Proceedings of the 29th International Conference on Computational Linguistics, pages 2724–2733, Gyeongju, Republic of Korea. International Committee on Computational Linguistics.

- Yan et al. (2023) Lixiang Yan, Lele Sha, Linxuan Zhao, Yuheng Li, Roberto Martinez-Maldonado, Guanliang Chen, Xinyu Li, Yueqiao Jin, and Dragan Gašević. 2023. Practical and ethical challenges of large language models in education: A systematic literature review.

- Yang et al. (2020) Ruosong Yang, Jiannong Cao, Zhiyuan Wen, Youzheng Wu, and Xiaodong He. 2020. Enhancing automated essay scoring performance via fine-tuning pre-trained language models with combination of regression and ranking. In Findings of the Association for Computational Linguistics: EMNLP 2020, pages 1560–1569, Online. Association for Computational Linguistics.

- Zaheer et al. (2020) Manzil Zaheer, Guru Guruganesh, Kumar Avinava Dubey, Joshua Ainslie, Chris Alberti, Santiago Ontanon, Philip Pham, Anirudh Ravula, Qifan Wang, Li Yang, and Amr Ahmed. 2020. Big Bird: Transformers for longer sequences. In Advances in Neural Information Processing Systems, volume 33, pages 17283–17297. Curran Associates, Inc.

- Zheng et al. (2023) Lianmin Zheng, Wei-Lin Chiang, Ying Sheng, Siyuan Zhuang, Zhanghao Wu, Yonghao Zhuang, Zi Lin, Zhuohan Li, Dacheng Li, Eric P. Xing, Hao Zhang, Joseph E. Gonzalez, and Ion Stoica. 2023. Judging LLM-as-a-judge with MT-Bench and Chatbot Arena.

Appendix A Qualitative Interview

A.1 Content of the Interview

While most existing NLP-driven EFL writing assistance tools focus on automated scoring, we were interested in how much EFL learners and instructors also want feedback (i.e., rationales for an essay score). We conducted a group interview with six EFL learners who had taken at least one undergraduate EFL writing course. Although only two of them had previously received feedback from their instructors, all of them expressed a strong need for both rubric-based scores and feedback. Four of the six students preferred specific and constructive feedback for improving their essays over positive remarks without critical analysis. In addition, they were particularly interested in receiving scores and feedback instantly, so that they could learn the weaknesses of their essays in the same writing context and refine them through an iterative process.

To examine the need for feedback generation from the perspective of EFL instructors, we conducted a written interview with three instructors currently teaching at a college EFL center. We provided a sample essay accompanied by four different sets of AI-generated scores and feedback, which varied in whether a score was provided, whether feedback was given, and the type of feedback. The instructors expressed diverse preferences regarding giving scores and providing auto-generated feedback. Two of them were concerned that the feedback might be irrelevant to the class topic or fail to consider the open-ended nature of essay feedback. Another concern was that giving too much feedback might overwhelm students and thus lower its educational effect. Despite these concerns, all the instructors expressed a strong need for objective scoring. P1 and P3 mentioned that they would like to use AI-generated scores and feedback as a point of comparison to double-check whether their own scores need adjustment.

A.2 Interview Questionnaire

For the midterm and final essays, most of the instructors provide only scores without any feedback. Please tell us the pros and cons of this. Please share your various needs for grade provision.

Which of the following six types of feedback do you prefer and why? (Direct feedback, Indirect feedback, Metalinguistic CF, The focus of the feedback, Electronic feedback, Reformulation)

What kind of feedback would you like to receive among the feedback listed below? (Positive feedback, Constructive feedback, Questioning feedback, Reflective feedback, Specific feedback, Comparative feedback, Collaborative feedback)

What was the hardest thing about evaluating students’ essays? What efforts, other than rubrics and norming sessions, were needed to minimize the subjectivity of scores? Please feel free to tell us about ways to improve the shortcomings you mentioned.

The example consists of a student essay and AI scoring results. Please rank the four scoring and feedback examples above by how much they would help improve students’ learning.

If the AI model provides both a score and feedback for a student’s essay, what advantages do you think it would have over providing only a score? If the AI model provides both a score and feedback for a student’s essay, what advantages do you think it will have over providing feedback alone?

What kinds of feedback can be good for enhancing the learning effect? Are there any concerns about providing score and feedback through AI?

Which part of the grading process would you like AI to help with?

After looking at the score and feedback generated by the AI model, do you think your score could change after seeing them? How do you think it will be used for scoring?

Appendix B Supplemental Results

B.1 Experimental Settings

We split our data into train/dev/test sets in a 6:2:2 ratio with random seed 22. The AES experiments were conducted on 4 GeForce RTX 2080 Ti GPUs with 128 GiB of system memory and an Intel(R) Xeon(R) Silver 4114 CPU @ 2.20GHz (20 CPU cores), using the hyperparameters listed in Table 5.

| Hyperparameter | Value |
|---|---|
| Batch Size | 32 |
| Number of Epochs | 10 |
| Early Stopping Patience | 5 |
| Learning Rate | 2e-5 |
| Learning Rate Scheduler | Linear |
| Optimizer | AdamW |
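The split and the Table 5 settings can be summarized in a framework-free sketch. Several details here are assumptions: the paper gives the 6:2:2 ratio and seed 22 but not the shuffling method, so `random.Random(22)` will not reproduce the authors' split. The linear schedule is assumed to have no warmup, and the early-stopping metric is assumed to be a dev score where higher is better, such as dev QWK. All function and class names are hypothetical.

```python
import random
from dataclasses import dataclass


def split_train_dev_test(items, seed=22):
    """Shuffle with a fixed seed, then split 6:2:2 into train/dev/test."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)
    n = len(shuffled)
    n_train = n * 6 // 10  # integer arithmetic avoids float rounding surprises
    n_dev = n * 2 // 10
    return (shuffled[:n_train],
            shuffled[n_train:n_train + n_dev],
            shuffled[n_train + n_dev:])


@dataclass(frozen=True)
class TrainConfig:
    # Values from Table 5
    batch_size: int = 32
    num_epochs: int = 10
    early_stopping_patience: int = 5
    learning_rate: float = 2e-5
    lr_scheduler: str = "linear"
    optimizer: str = "AdamW"


def linear_lr(step, total_steps, base_lr):
    """Linear decay from base_lr to 0 over total_steps (no warmup assumed)."""
    return base_lr * max(0.0, 1.0 - step / total_steps)


class EarlyStopping:
    """Signal a stop once the dev metric fails to improve for `patience` epochs."""

    def __init__(self, patience):
        self.patience = patience
        self.best = float("-inf")
        self.epochs_without_improvement = 0

    def step(self, metric):
        if metric > self.best:
            self.best = metric
            self.epochs_without_improvement = 0
        else:
            self.epochs_without_improvement += 1
        return self.epochs_without_improvement >= self.patience
```

In a PyTorch training loop, these settings would typically map onto `torch.optim.AdamW` and a linear scheduler such as `transformers.get_linear_schedule_with_warmup` with zero warmup steps. With 10 epochs and a patience of 5, early stopping can end training no earlier than epoch 6.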

B.2 Rubric-based AES with Different LMs

| Model | Content | Organization | Language | Total |
|---|---|---|---|---|
| BERT (Devlin et al., 2019) | 0.414 | 0.311 | 0.487 | 0.471 |
| Longformer (Beltagy et al., 2020) | 0.409 | 0.312 | 0.475 | 0.463 |
| BigBird (Zaheer et al., 2020) | 0.412 | 0.317 | 0.473 | 0.469 |
| GPT-NeoX (Black et al., 2022) | 0.410 | 0.313 | 0.446 | 0.475 |

Table 6 provides experimental results of rubric-based AES with different LMs, showing no significant difference among them. Xie et al. (2022) also observed that the choice of LM has no significant effect on AES performance, and most state-of-the-art AES methods use BERT (Devlin et al., 2019).

B.3 Rubric-based AES with ChatGPT

Table 7 shows the AES results of ChatGPT with the different prompts described in Table 8. Given the substantial length of the essays and feedback, we could provide at most 2 shots in the prompt to gpt-3.5-turbo. To construct the 2-shot prompts, we divided the samples into two distinct groups and computed the average total score of each group. We then randomly sampled one essay from each group whose total score matched the group's mean.

| Prompt | Content | Organization | Language | Total |
|---|---|---|---|---|
| (A) | 0.320 | 0.248 | 0.359 | 0.336 |
| (B) | 0.330 | 0.328 | 0.306 | 0.346 |
| (C) | 0.357 | 0.278 | 0.342 | 0.364 |
| (D) | 0.336 | 0.361 | 0.272 | 0.385 |
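The 2-shot exemplar selection described in B.3 can be sketched as follows. The paper does not say how the two groups were formed, so splitting at the median total score is an assumption. Because scores move in 0.5-point steps, no essay may hit the group mean exactly; the sketch therefore picks randomly among the essays closest to the mean. The function name is hypothetical, and the sketch assumes the data contain at least two distinct total scores so that both groups are non-empty.

```python
import random
from statistics import mean, median


def pick_two_shot_exemplars(essays, seed=0):
    """essays: list of (essay_id, total_score) pairs.
    Returns one exemplar per score group whose total score is closest to the
    group's mean (exactly equal when such an essay exists)."""
    # Assumption: the two groups are formed by splitting at the median total score.
    cut = median(score for _, score in essays)
    groups = ([e for e in essays if e[1] <= cut],
              [e for e in essays if e[1] > cut])
    rng = random.Random(seed)
    exemplars = []
    for group in groups:
        target = mean(score for _, score in group)
        best_gap = min(abs(score - target) for _, score in group)
        candidates = [e for e in group if abs(e[1] - target) == best_gap]
        exemplars.append(rng.choice(candidates))  # random choice among ties
    return exemplars


essays = [("a", 6.0), ("b", 7.0), ("c", 8.0), ("d", 11.0), ("e", 12.0), ("f", 13.0)]
shots = pick_two_shot_exemplars(essays)
```

Taking one exemplar near the mean of a low-scoring group and one near the mean of a high-scoring group gives the model a reference point on each side of the score range within the 2-shot budget.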

IMAGES

VIDEO

COMMENTS

Abstract. Automated essay scoring (AES) involves the prediction of a score relating to the writing quality of an essay. Most existing works in AES utilize regression objectives or ranking objectives respectively. However, the two types of methods are highly complementary. To this end, in this paper we take inspiration from contrastive learning ...

1 Introduction. Automated Essay Scoring (AES) is to evaluate the quality of essays and score automatically by using computer technologies. Notably, reasonable grad-ing can solve problems that consume much time and require a lot of human effort. What's more, providing feedback to learners can promote self improvement.

A novel unified Neural Pairwise Contrastive Regression model in which both objectives are optimized simultaneously as a single loss is proposed, achieving the state-of-the-art average performance for the AES task. Automated essay scoring (AES) involves the prediction of a score relating to the writing quality of an essay. Most existing works in AES utilize regression objectives or ranking ...

Automated-Essay-Scoring-via-Pairwise-Contrastive-Regression. Created by Jiayi Xie*, Kaiwei Cai*, Li Kong, Junsheng Zhou, Weiguang Qu. This repository contains the ASAP dataset and Pytorch implementation for Automated Essay Scoring. (Coling 2022, Oral)

Automated Essay Scoring (AES) is a critical text regression task that automatically assigns scores to essays based on their writing quality. Recently, the performance of sentence prediction tasks has been largely improved by using Pre-trained Language Models via fusing representations from different layers, constructing an auxiliary sentence ...

a is greatly reduced.1 INTRODUCTIONAutomatic Essay Scoring (AES) is the technique to automatically score an es. ay over some specific marking scale. AES has been an eye-catching problem in machine learning due to it. promising application in education. It can free tremendous amount of repetitive labour,

Experiments show that the proposed rankbased approach outperforms the state-of-the-art algorithms, and achieves performance comparable to professional human raters, which suggests the effectiveness of the proposed method for automated essay scoring. Previous approaches for automated essay scoring (AES) learn a rating model by minimizing either the classification, regression, or pairwise ...

Extensive experiments on two public English essay datasets, Automated Student Assessment Prize and Chinese Learners English Corpus, show that our proposed approach based on pairwise learning outperforms previous classification or regression-based methods on all 15 topics.

Automated essay scoring via pairwise contrastive regression. In Proceedings of the 29th International Conference on Computational Linguistics, pages 2724-2733, Gyeongju, Republic of Korea. International Committee on Computational Linguistics.

Automated essay scoring (AES) involves predicting a score that reflects the writing quality of an essay. Most existing AES systems produce only a single overall score. However, users and L2 learners expect scores across different dimensions (e.g., vocabulary, grammar, coherence) for English essays in real-world applications. To address this need, we have developed two models that automatically ...

Automated Essay Scoring via Pairwise Contrastive Regression. Jiayi Xie, Kaiwei Cai, Li Kong, Junsheng Zhou, Weiguang Qu. Automated Essay Scoring via Pairwise Contrastive Regression. In Nicoletta Calzolari, Chu-Ren Huang, Hansaem Kim, James Pustejovsky, Leo Wanner, Key-Sun Choi, Pum-Mo Ryu, Hsin-Hsi Chen, Lucia Donatelli, Heng Ji, Sadao ...

Automated essay scoring (AES) involves the prediction of a score relating to the writing quality of an essay. Most existing works in AES utilize regression objectives or ranking objectives respectively. However, the two types of methods are highly complementary. To this end, in this paper we take inspiration from contrastive learning and ...

@inproceedings{xie-etal-2022-automated, title = "Automated Essay Scoring via Pairwise Contrastive Regression", author = "Xie, Jiayi and Cai, Kaiwei and Kong, Li and Zhou, Junsheng and Qu, Weiguang", editor = "Calzolari, Nicoletta and Huang, Chu-Ren and Kim, Hansaem and Pustejovsky, James and Wanner, Leo and Choi, Key-Sun and Ryu, Pum-Mo and Chen, Hsin-Hsi and Donatelli, Lucia and Ji, Heng and ...

Automated essay scoring via pairwise contrastive regression. In Proceedings of the 29th International Conference on Computational Linguistics, pages 2724-2733. Yang et al. (2020) Ruosong Yang, Jiannong Cao, Zhiyuan Wen, Youzheng Wu, and Xiaodong He. 2020. Enhancing automated essay scoring performance via fine-tuning pre-trained language ...

The automated essay scoring model is a topic of in-terest in both linguistics and Machine Learning. The model systematically classi es our varying degrees of. CS224N Final Project, Shihui Song, Jason Zhao. [email protected] [email protected]. speech and can be applied in both academia and large industrial organizations to improve ...

Automated essay scoring aims to evaluate the quality of an essay automatically. It is one of the main educational applications in the field of natural language processing. Recently, the research scope has been extended from prompt-special scoring to cross-prompt scoring and further concentrating on scoring different traits. However, cross-prompt trait scoring requires identifying inner ...

Conference or Workshop Paper, 2022-10-13. Jiayi Xie, Kaiwei Cai, Li Kong, Junsheng Zhou, Weiguang Qu: Automated Essay Scoring via Pairwise Contrastive Regression. COLING 2022: 2724-2733. Last updated on 2022-10-13 17:29 CEST.

1. Introduction. Automated essay scoring (AES), which aims to automatically evaluate and score essays, is a typical application of natural language processing (NLP) techniques in the field of education []. In earlier studies, handcrafted features were combined with statistical machine learning [2,3], and with the development of deep learning, neural network-based approaches ...

Automated essay scoring plays an important role in judging students' language abilities in education. Traditional approaches score essays with handcrafted features, which are time-consuming and complicated to design. Recently, neural network approaches have improved performance without any feature engineering. Unlike other natural language processing tasks, only a small number of datasets are publicly available ...


Fine-tuning pre-trained language models with multiple losses for the same task is found to improve AES performance, and the resulting model outperforms state-of-the-art neural models by nearly 3 percent as well as the latest statistical model. Automated Essay Scoring (AES) is a critical text regression task that automatically assigns scores to essays based on their writing quality ...
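The idea of fine-tuning with several losses for the same task can be illustrated with a minimal sketch that adds a mean-squared-error regression term to a pairwise hinge ranking term over a batch of essays. This is an assumption made for illustration, not the cited work's exact formulation: the ranking term is a simple pairwise hinge chosen for clarity, and the names `combined_loss`, `alpha` and `margin` are hypothetical. A real system would compute these terms on tensors produced by an encoder inside a framework such as PyTorch.

```python
from itertools import combinations


def mse_loss(preds, golds):
    """Regression objective: mean squared error between predicted and gold scores."""
    return sum((p - g) ** 2 for p, g in zip(preds, golds)) / len(preds)


def pairwise_ranking_loss(preds, golds, margin=0.0):
    """Ranking objective (illustrative pairwise hinge, an assumption).

    For every pair whose gold scores differ, the essay with the higher gold
    score should also receive a prediction at least `margin` higher:
    loss_ij = max(0, margin - sign_ij * (pred_i - pred_j)).
    """
    total, count = 0.0, 0
    for i, j in combinations(range(len(preds)), 2):
        if golds[i] == golds[j]:
            continue  # tied gold scores carry no ordering signal
        sign = 1.0 if golds[i] > golds[j] else -1.0
        total += max(0.0, margin - sign * (preds[i] - preds[j]))
        count += 1
    return total / count if count else 0.0


def combined_loss(preds, golds, alpha=0.5, margin=0.0):
    """Hypothetical weighted sum of the regression and ranking objectives."""
    return alpha * mse_loss(preds, golds) + (1 - alpha) * pairwise_ranking_loss(
        preds, golds, margin
    )


if __name__ == "__main__":
    # Normalized scores for a toy batch of three essays.
    preds = [0.2, 0.5, 0.9]
    golds = [0.0, 0.5, 1.0]
    print(combined_loss(preds, golds, alpha=0.5))
```

In this toy batch the predictions are already correctly ordered, so only the regression term contributes; swapping two predictions would make the ranking term non-zero even if their squared errors stayed small, which is why the two objectives are described as complementary.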

Automated Essay Scoring via Pairwise Contrastive Regression. Jiayi Xie, Kaiwei Cai, Li Kong, Junsheng Zhou, Weiguang Qu. ... 2022. TLDR: A novel unified Neural Pairwise Contrastive Regression model, in which both objectives are optimized simultaneously as a single loss, is proposed, achieving state-of-the-art average performance on the AES task. ...
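One way to read "both objectives optimized as a single loss" is sketched below, under stated assumptions: the model regresses the score *difference* between a target essay and a reference essay whose score is known, and at inference the target's score is estimated by averaging (reference score + predicted difference) over several references. The sign of each difference carries the ranking information and its magnitude carries the regression target. The `predict_diff` callable stands in for a fine-tuned pair encoder (for example BERT over the concatenated essays) and is hypothetical, as are the function names; this is not the authors' released implementation.

```python
from statistics import mean
from typing import Callable, Sequence, Tuple

# Hypothetical signature: given (target_text, reference_text), return the
# predicted difference target_score - reference_score.
DiffPredictor = Callable[[str, str], float]


def pair_loss(predicted_diffs: Sequence[float], gold_diffs: Sequence[float]) -> float:
    """Single training loss: squared error on score differences.

    A correct sign means the pair is ranked correctly; a correct magnitude
    means the regression target is matched.
    """
    return mean((p - g) ** 2 for p, g in zip(predicted_diffs, gold_diffs))


def score_with_references(
    target: str,
    references: Sequence[Tuple[str, float]],
    predict_diff: DiffPredictor,
) -> float:
    """Estimate the target score as the mean of (reference score + predicted diff)."""
    return mean(
        ref_score + predict_diff(target, ref_text) for ref_text, ref_score in references
    )


if __name__ == "__main__":
    # Toy stand-in for a trained model (purely illustrative): longer essays
    # are assumed to score 0.1 higher per extra word.
    def toy_diff(target: str, reference: str) -> float:
        return (len(target.split()) - len(reference.split())) * 0.1

    refs = [("a b", 2.0), ("a b c d", 2.2)]
    print(score_with_references("a b c", refs, toy_diff))
```

Averaging over several references is a design choice that smooths out the error of any single pairwise comparison; how references are sampled (for example, per score band) is left open here.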
