- Open access

- Published: 09 October 2023

Sign language recognition using the fusion of image and hand landmarks through multi-headed convolutional neural network

- Refat Khan Pathan 1 ,

- Munmun Biswas 2 ,

- Suraiya Yasmin 3 ,

- Mayeen Uddin Khandaker ORCID: orcid.org/0000-0003-3772-294X 4 , 5 ,

- Mohammad Salman 6 &

- Ahmed A. F. Youssef 6

Scientific Reports volume 13, Article number: 16975 (2023)

18k Accesses

16 Citations

- Computational science

- Image processing

Sign language recognition is a breakthrough for communication within the deaf-mute community and has been a critical research topic for years. Although some previous studies have successfully recognized sign language, they require many costly instruments, including sensors, devices, and high-end processing power. Such drawbacks can be overcome by employing artificial intelligence-based techniques. Because capturing video or images with a camera is now easy on modern mobile devices, this study demonstrates a cost-effective technique to detect American Sign Language (ASL) from an image dataset. The “Finger Spelling, A” dataset has been used, covering 24 letters (j and z are excluded because they involve motion). The main reason for using this dataset is that its images have complex backgrounds with different environments and scene colors. Two layers of image processing have been used: in the first layer, the images are processed as a whole for training, and in the second layer, the hand landmarks are extracted. A multi-headed convolutional neural network (CNN) model has been proposed to train on these two layers and has been tested on 30% of the dataset. To avoid overfitting, data augmentation and dynamic learning rate reduction have been used. With the proposed model, a test accuracy of 98.981% has been achieved. It is expected that this study may help to develop an efficient human–machine communication system for the deaf-mute community.

Introduction

Spoken language is the medium of communication for the majority of the population, and it allows most people to communicate with one another. However, a section of the population cannot communicate with most others through speech. Mute people cannot convey their meaning using spoken language. Hearing impairment weakens or removes a person's ability to hear, while muteness impairs or prevents speech. People with these disabilities are limited in hearing or speaking but can otherwise do many other things; communication is the main barrier that isolates them from the rest of society 1 . Because there are so many spoken languages in the world, a distinct language is needed through which they can express their thoughts and opinions in a way others can understand, and such a language is called sign language. Understanding sign language is an arduous task, a skill that must be acquired through training.

Many methods are available that use different data sources, such as images (2D, 3D), sensor data (hand glove 2 , Kinect sensor 3 , neuromorphic sensor 4 ), and videos. Captured images are often excessively noisy, so a high level of pre-processing is required. The available online datasets are usually already processed or captured in a lab environment, which makes it easy for recent advanced AI models to train and evaluate on them but leaves those models prone to errors in real-life applications with different kinds of noise. Accordingly, there is a basic need for a model that can deal with noisy images and still deliver good results. Different kinds of machine learning methods can be used to classify and recognize images. Apart from recognizing static images, work has also been done on depth-camera detection and video processing 5 , 6 , 7 . Various components of such systems have been implemented in different programming languages to make the final system as effective as possible. The problem can be approached in three broad ways: first, using static image recognition techniques and pre-processing procedures; second, using deep learning models; and third, using Hidden Markov Models.

Sign language serves this part of the community and enables smooth communication among people with speech and hearing impairments. Signers use hand signals along with facial expressions and body movements to interact. Yet, although sign language is used worldwide, few people learn to communicate through sign language gestures 8 . Hand motions make up a significant part of sign language vocabulary, while facial expressions and body movements emphasize the words and phrases expressed by the hands. Hand motions can be static or dynamic 9 , 10 . Some approaches detect motion using a dynamic vision sensor (DVS). For example, Amir et al. 11 presented an event-based gesture recognition system that processes the event stream on IBM's natively event-based TrueNorth processor. They used a temporal filter cascade to create spatio-temporal frames that are classified by a CNN running on the event-based processor, and they reported an accuracy of 96.46%. However, in real-life scenarios the background is not static, so the reported power-saving process might not work properly. Jun Haeng Lee et al. 12 proposed a motion classification method that uses two DVSs as a stereo-vision system. They used spiking neurons to process the incoming events, but the approach faces the same real-life issue. Static hand signals, also called hand postures, are formed by different shapes and orientations of the hands and carry no movement information. Dynamic hand motions comprise a sequence of hand postures with associated movement information 13 . Using facial expressions, static hand shapes, and hand signals, sign language provides the means to communicate just as spoken languages do; there are also different kinds of sign languages 14 .

In this work, we apply a fusion of traditional image processing and extracted hand landmarks, trained with a multi-headed CNN so that the two inputs can complement each other's weights at the concatenation layer. The main objective is to achieve a better detection rate than a traditional single-channel CNN. This approach has previously been shown to work well with less computational power and fewer epochs on medical image datasets 15 . The rest of the paper is organized as follows: the literature review is given in the "Literature review" section; materials and methods in the "Materials and methods" section, with three subsections: dataset description in "Dataset description", image pre-processing in "Pre-processing of image dataset", and working procedure in "Working procedure"; result analysis in the "Result analysis" section; and the conclusion in the "Conclusion" section.

Literature review

State-of-the-art techniques have focused on deep learning models to improve accuracy and reduce execution time. CNNs have shown major improvements in visual object recognition 16 , natural language processing 17 , scene labeling 18 , medical image processing 15 , and so on. Despite these accomplishments, there has been little work on applying CNNs to video classification, partly because of the difficulty of adapting CNNs to combine spatial and temporal information. One model used special hardware, a depth camera, to obtain the depth variation in the image as an extra feature for correlation and then built a CNN on top of it 19 , but its accuracy remained low. An innovative technique that does not need a pre-trained model was created using a capsule network and adaptive pooling 11 .

Furthermore, it was reported that reducing the number of CNN layers with a greedy approach and developing a deep belief network produced superior outcomes compared to other fundamental methodologies 20 . Feature extraction using the scale-invariant feature transform (SIFT), combined with classification by neural networks, was developed to obtain good results 21 . In another method, the images were converted into an RGB scheme, the data were extended with motion and depth channels, and finally a 3D recurrent convolutional neural network (3DRCNN) was used to build a working system 5 . In ref. 22 , Canny edge detection and Oriented FAST and Rotated BRIEF (ORB) were used, with ORB feature detection and the K-means clustering algorithm building a bag-of-features model over all descriptors. However, this approach relies on plain backgrounds with easily detected edges and depends entirely on those edges; if the edges carry wrong information, accuracy drops, which remains the main problem to solve.

In recent years, deep learning approaches have become standard for improving the recognition accuracy of sign language models. Using the Faster Region-based Convolutional Neural Network (Faster R-CNN) 23 , a CNN model is applied for hand recognition in the image. Rastgoo et al. 24 proposed a method in which images were cropped properly, RGB and depth images were fused using a restricted Boltzmann machine (RBM), two types of noise (Gaussian and salt-and-pepper) were added, and the data were prepared for training. As a biologically inspired deep learning model, CNNs carry out all three stages within a single framework trained end to end from raw pixel values to classifier outputs, but extreme computational power is needed. The authors of ref. 25 proposed 3D CNNs in which the third dimension combines spatial and temporal information. The network accepts several adjacent frames as input and performs 3D convolution in the convolutional layers. Along the same lines, the study reported in 26 proposed regularizing the outputs with high-level features and combining the predictions of a wide range of models. They applied the developed models to recognize human actions and achieved better performance than benchmark methods. However, it is not certain that this works for hand gestures, as they detected the face first and then the body movement 27 .

On the other hand, Microsoft and Leap Motion have developed distinct approaches to identifying and tracking a user’s hand and body movement by introducing the Kinect and the Leap Motion Controller (LMC), respectively. The Kinect recognizes the body skeleton and tracks the hands, whereas the LMC detects and tracks hands with its built-in cameras and infrared sensors 3 , 28 . Using the Kinect, Sykora et al. 7 captured depth data of 10 hand motions and classified them with the speeded-up robust features (SURF) technique, reaching 82.8% accuracy; however, the method was not tested on a larger database, and the feature extraction methods used (SIFT, SURF) may not be invariant to gesture orientation. Likewise, Huang et al. 29 proposed a 10-word ASL recognition system using the Kinect, evaluated with tenfold cross-validation and an SVM, that achieved a precision of 97% using a set of frame-independent features; the most significant problem with this method is segmentation.

The literature shows that most of the models used in this application either depend on a single input or require high computational power. Also, the datasets chosen for training and validating these models have plain backgrounds, which are easier to handle. Our main aim is to show how to reduce the computational power needed for training and the dependency of model training on a single input layer.

Materials and methods

Dataset description

Using a generalized single-color background to classify sign language is very common. We intended to avoid a single-color background and instead use complex backgrounds with many users’ hand images to increase the detection complexity. That is why we have used the “ASL Finger Spelling” dataset 30 , which has images of different sizes, orientations, and complex backgrounds, with over 500 images per sign (24 signs in total) from 4 users (non-native signers). This dataset contains separate RGB and depth images; we have worked with the RGB images in this research. The photos were taken in 5 sessions with the same background and lighting. The dataset details are shown in Table 1 , and some sample images are shown in Fig. 1 .

Sample images from a dataset containing 24 signs from the same user.

Pre-processing of image dataset

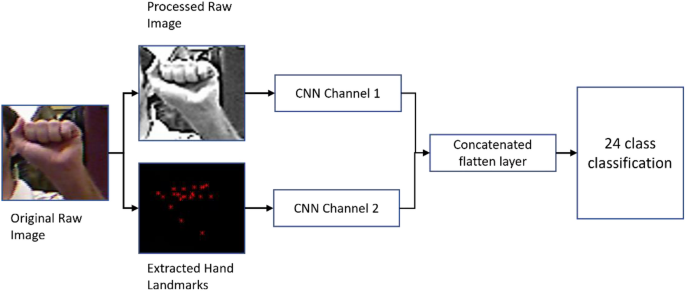

Images were pre-processed for two operations: preparing the original image training set and extracting the hand landmarks. A traditional CNN has one input data channel and one output channel. We use two input data channels and one output channel, so the data must be prepared for each input separately.

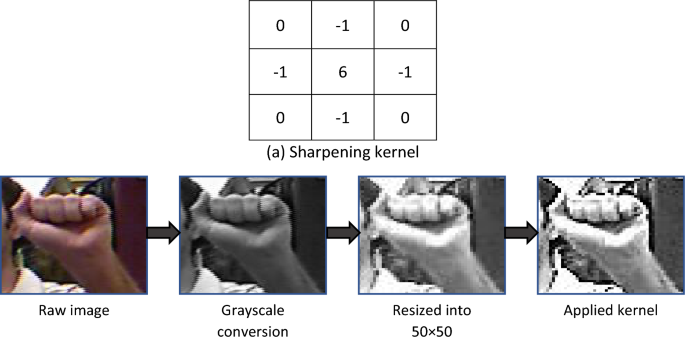

Raw image processing

In raw image processing, we converted the images from RGB to grayscale to reduce color complexity. Then we applied a 2D sharpening kernel to the images, as shown in Fig. 2 . After that, we resized the images to 50 × 50 pixels for the CNN. Finally, we normalized the grayscale values (0–255) by dividing the pixel values by 255, so the new pixel array contains values in the range 0–1. The primary advantage of this normalization is that the CNN trains more efficiently on inputs in the 0–1 range than on the raw 0–255 range.
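The raw-image branch can be sketched in plain Python. This is an illustration under stated assumptions, not the authors' code: the sharpening kernel in Fig. 2 is not reproduced in the text, so the common 3 × 3 kernel below is a stand-in; grayscale conversion uses the ITU-R BT.601 luma weights (the ones OpenCV applies for RGB-to-gray); resizing is nearest-neighbour; and the image is assumed to be a list of rows of `(R, G, B)` tuples.

```python
# Sketch of the raw-image branch: grayscale -> sharpen -> resize to 50x50 -> scale to [0, 1].
# Assumptions (not from the paper): BT.601 luma weights, the kernel below, nearest-neighbour resizing.

SHARPEN = [[0, -1, 0],
           [-1, 5, -1],
           [0, -1, 0]]  # hypothetical stand-in for the kernel shown in Fig. 2


def to_grayscale(rgb):
    """Convert rows of (R, G, B) tuples (0-255) to rows of gray levels (0-255)."""
    return [[0.299 * r + 0.587 * g + 0.114 * b for (r, g, b) in row] for row in rgb]


def sharpen(gray, kernel=SHARPEN):
    """Apply a 3x3 kernel with edge-replicated borders, clipping results to 0-255.

    The kernel is symmetric, so correlating with it (as done here) equals convolving.
    """
    h, w = len(gray), len(gray[0])
    out = []
    for y in range(h):
        row = []
        for x in range(w):
            acc = 0.0
            for dy in (-1, 0, 1):
                for dx in (-1, 0, 1):
                    yy = min(max(y + dy, 0), h - 1)  # replicate the border rows
                    xx = min(max(x + dx, 0), w - 1)  # replicate the border columns
                    acc += kernel[dy + 1][dx + 1] * gray[yy][xx]
            row.append(min(max(acc, 0.0), 255.0))
        out.append(row)
    return out


def resize_nearest(img, size=50):
    """Nearest-neighbour resize to size x size."""
    h, w = len(img), len(img[0])
    return [[img[y * h // size][x * w // size] for x in range(size)] for y in range(size)]


def preprocess(rgb, size=50):
    """Grayscale, sharpen, resize, then divide by 255 so values lie in [0, 1]."""
    gray = sharpen(to_grayscale(rgb))
    small = resize_nearest(gray, size)
    return [[v / 255.0 for v in row] for row in small]
```

In practice a library such as OpenCV or NumPy would perform each step in a single call; the explicit loops only make the arithmetic visible.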

Raw image pre-processing with (a) sharpening kernel.

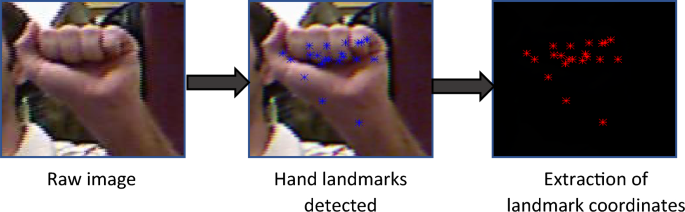

Hand landmark detection

Google’s hand landmark model takes an RGB input with an image size of (224 × 224 × 3). We therefore took the RGB images, converted the pixel values to float32, and resized all images to (256 × 256 × 3). Applied to an image, the model returns 21 three-dimensional landmark coordinates. The landmark detection process is shown in Fig. 3 .
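For the second head, each image is reduced to its 21 landmark coordinates. The text calls this "Google’s hand landmark model"; assuming it is MediaPipe Hands, its legacy Python Solutions API (`mediapipe.solutions.hands.Hands(static_image_mode=True)` followed by `.process(rgb_image)`) returns, for each detected hand, 21 landmarks with normalized `x`, `y` and a relative depth `z`. The hypothetical helper below does not call MediaPipe; it only shows one way to turn those 21 triples into a fixed-length vector. Expressing the points relative to the wrist, and using an all-zero vector when no hand is found, are assumptions rather than steps described in the paper.

```python
# Hypothetical helpers: turn 21 (x, y, z) hand landmarks into a fixed-length feature vector.
NUM_LANDMARKS = 21  # wrist plus four joints for each of the five fingers


def landmarks_to_vector(landmarks, wrist_relative=True):
    """Flatten 21 (x, y, z) triples into a 63-element list.

    If wrist_relative is True (an assumption, not stated in the paper), every point
    is expressed relative to landmark 0, the wrist, so the vector does not depend
    on where the hand sits in the frame.
    """
    if len(landmarks) != NUM_LANDMARKS:
        raise ValueError(f"expected {NUM_LANDMARKS} landmarks, got {len(landmarks)}")
    ox, oy, oz = landmarks[0] if wrist_relative else (0.0, 0.0, 0.0)
    vector = []
    for x, y, z in landmarks:
        vector.extend((x - ox, y - oy, z - oz))
    return vector


def missing_hand_vector():
    """All-zero placeholder for images where no hand is detected (one possible policy)."""
    return [0.0] * (NUM_LANDMARKS * 3)
```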

Hand landmark detection and extraction of 21 coordinates.

Working procedure

The whole work is divided into two main parts: raw image processing and hand landmark extraction. After both had been completed, a custom, lightweight multi-headed CNN model was built to train on both data streams. Before the fully connected classification layers, we merged the features of both channels so that the model could learn to rely on whichever features are most informative. This working procedure is illustrated in Fig. 4 .
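The merge step can be illustrated with a tiny pure-Python forward pass. It is a simplified sketch: the paper places a dense and a dropout layer between the concatenation and the 24-unit softmax output, whereas this hypothetical `fused_prediction` feeds the concatenated vector straight into a single softmax layer with hand-picked weights.

```python
import math


def softmax(logits):
    """Numerically stable softmax: subtract the max before exponentiating."""
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def dense(x, weights, bias):
    """Fully connected layer; weights holds one row per output unit."""
    return [sum(w * v for w, v in zip(row, x)) + b for row, b in zip(weights, bias)]


def fused_prediction(image_features, landmark_features, weights, bias):
    """Concatenate both heads' flattened features, then classify with one softmax layer."""
    fused = list(image_features) + list(landmark_features)
    if any(len(row) != len(fused) for row in weights):
        raise ValueError("each weight row must match the concatenated feature length")
    return softmax(dense(fused, weights, bias))
```

Because the output layer sees both feature sets at once, a strong landmark feature can outweigh a misleading image feature (or vice versa), which is the complementarity the procedure relies on.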

Flow diagram of working procedure.

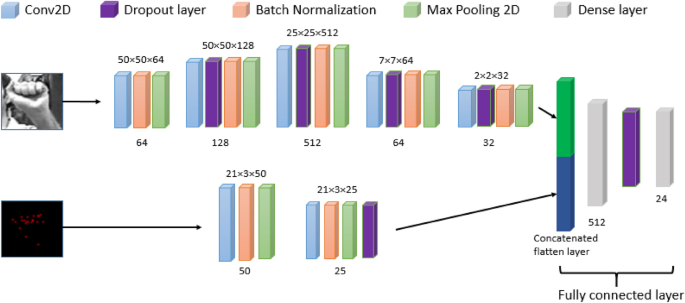

Model building

In this research, we have used a multi-headed CNN, meaning our model has two input data channels. Beforehand, we trained separate models on the processed images and on the hand landmarks for comparison. Google’s model is not the best choice for “in the wild” situations, so we needed the original images to compensate for the landmark model's occasional failures. The first head of the model takes the processed images as input, and the second head takes the hand landmark data. On the hand landmark side, two-dimensional convolutional layers with 50 and 25 filters, a (3, 3) kernel, ReLU activation, and stride 1; 2D max pooling with pool size (2, 2); batch normalization; and a dropout layer have been used. On the image side, 2D convolutional layers with 32, 64, 128, and 512 filters, a (3, 3) kernel, and ReLU activation; 2D max pooling with pool size (2, 2); batch normalization; and dropout layers have been used. After each head is flattened, the two heads are concatenated and pass through a dense layer and a dropout layer. Finally, the output dense layer has 24 units with softmax activation. The model was compiled with the Adam optimizer and MSE loss and trained for 50 epochs. Figure 5 illustrates the proposed CNN architecture, and Table 2 shows the model details.
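Table 2 holds the actual layer shapes. As a rough cross-check, the sketch below traces the spatial size through the image head, assuming one 3 × 3 convolution per filter count, each followed by 2 × 2 max pooling, with "valid" (unpadded) layers, which is the Keras default. Padding is not stated in the text, so with "same" padding the numbers would differ. Batch normalization and dropout do not change shapes and are omitted.

```python
def conv_out(n, kernel=3, stride=1):
    """Spatial size after a 'valid' (unpadded) convolution."""
    return (n - kernel) // stride + 1


def pool_out(n, pool=2):
    """Spatial size after 'valid' max pooling whose stride equals the pool size."""
    return (n - pool) // pool + 1


def image_head_shape(size=50, filters=(32, 64, 128, 512)):
    """Trace (height, width, channels) through conv(3x3) -> maxpool(2x2) blocks.

    Assumes one convolution per block and no padding; raises if the map collapses.
    """
    h = w = size
    channels = 1  # grayscale input
    for f in filters:
        h, w = conv_out(h), conv_out(w)
        h, w = pool_out(h), pool_out(w)
        if h < 1 or w < 1:
            raise ValueError("feature map collapsed; use 'same' padding or fewer blocks")
        channels = f
    return h, w, channels
```

Under these assumptions the 50 × 50 grayscale input shrinks 50 → 24 → 11 → 4 → 1, giving a 1 × 1 × 512 map (512 flattened features from the image head); a fifth block would collapse the map, which the check reports.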

Proposed multi-headed CNN architecture. Bottom values are the number of filters and top values are output shapes.

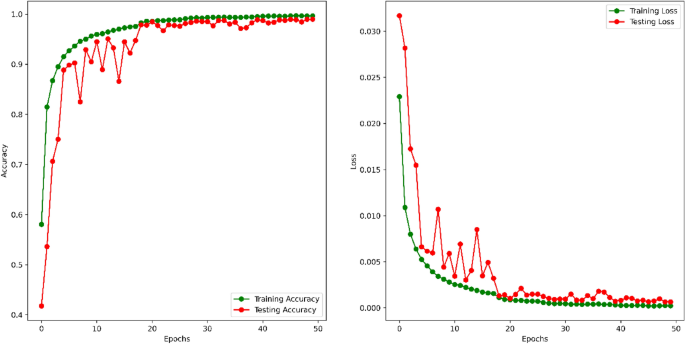

Training and testing

The input images were augmented to make training more difficult so that the model would not overfit. The Image Data Generator performed augmentation with 10° rotation, a 0.1 zoom range, 0.1 width and height shift ranges, and horizontal flips. To further guard against overfitting, we used a dynamic learning rate, monitoring the validation accuracy with a patience of 5, a factor of 0.5, and a minimum learning rate of 0.00001. For training, we used 46,023 images, and for testing, 19,725 images. The training versus testing accuracy and loss over 50 epochs are shown in Fig. 6 .
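The learning-rate rule above behaves like a reduce-on-plateau schedule. In Keras, the corresponding settings would plausibly be `ImageDataGenerator(rotation_range=10, zoom_range=0.1, width_shift_range=0.1, height_shift_range=0.1, horizontal_flip=True)` and `ReduceLROnPlateau(monitor="val_accuracy", factor=0.5, patience=5, min_lr=0.00001)`, although the paper does not show its code. The pure-Python class below mimics the schedule so the arithmetic is visible; the starting rate of 0.001 (Adam's usual default) is an assumption, and unlike Keras it ignores `min_delta` and `cooldown`.

```python
class PlateauScheduler:
    """Halve the learning rate when validation accuracy stops improving (higher is better)."""

    def __init__(self, lr=1e-3, factor=0.5, patience=5, min_lr=1e-5):
        self.lr = lr            # assumed initial rate, not stated in the paper
        self.factor = factor    # multiply the rate by this on a plateau
        self.patience = patience
        self.min_lr = min_lr
        self.best = float("-inf")
        self.wait = 0           # epochs since the last improvement

    def step(self, val_accuracy):
        """Record one epoch's validation accuracy and return the rate for the next epoch."""
        if val_accuracy > self.best:
            self.best = val_accuracy
            self.wait = 0
        else:
            self.wait += 1
            if self.wait >= self.patience:
                self.lr = max(self.lr * self.factor, self.min_lr)
                self.wait = 0
        return self.lr
```

With these settings the rate can be halved at most seven times (0.001 to the 0.00001 floor), so late in a 50-epoch run the schedule simply holds the minimum rate.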

Training versus testing accuracy and loss for 50 epochs.

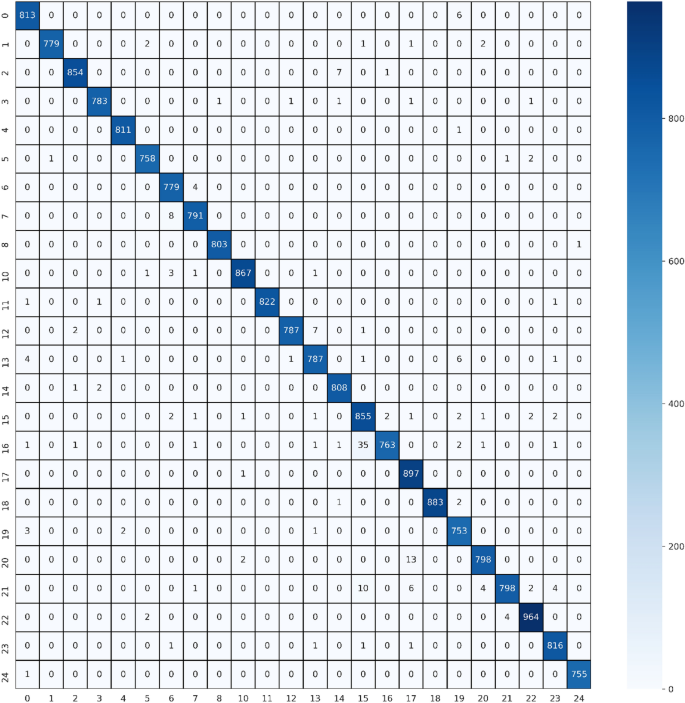

For further evaluation, we calculated the precision, recall, and F1 score of the proposed multi-headed CNN model, which show excellent performance. To compute these values, we first calculated the confusion matrix (shown in Fig. 7 ). When a sample is positive and classified as positive, it is called a true positive (TP). When a sample is negative and classified as negative, it is called a true negative (TN). If a sample is negative but classified as positive, it is called a false positive (FP). When a sample is positive but classified as negative, it is called a false negative (FN). From these, precision, recall, and F1 score are defined as follows:

Confusion matrix of the testing dataset. Numerical values on the X and Y axes denote the letters in sequence from A = 0 to Y = 24; numbers 9 and 25 are missing because the dataset does not include the letters J and Z.

Precision: the ratio of TP to all predicted positive observations, Precision = TP / (TP + FP).

Recall: the ratio of TP to all actual positive observations of the class, Recall = TP / (TP + FN).

F1 score: the harmonic mean of precision and recall, F1 = 2 × Precision × Recall / (Precision + Recall).

The Precision, Recall, and F1 score for 24 classes are shown in Table 3 .
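The three definitions translate directly into code. The sketch assumes that rows of the confusion matrix are true classes and columns are predicted classes (the convention of scikit-learn's `confusion_matrix`); a class that is never predicted gets a precision of 0 here, which is one common choice rather than the only one.

```python
def per_class_metrics(confusion):
    """Return a list of (precision, recall, f1) tuples, one per class.

    confusion[i][j] counts samples whose true class is i and predicted class is j.
    """
    n = len(confusion)
    results = []
    for k in range(n):
        tp = confusion[k][k]
        fp = sum(confusion[i][k] for i in range(n)) - tp  # predicted k, actually another class
        fn = sum(confusion[k]) - tp                        # actually k, predicted another class
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
        results.append((precision, recall, f1))
    return results
```

Averaging the per-class values (a macro average) gives a single summary figure across the 24 classes.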

Result analysis

In human action recognition tasks, sign language has an extra advantage because it can be used to communicate efficiently. Many techniques have been developed using image processing, sensor data processing, and motion detection, applying different dynamic algorithms and methods such as machine learning and deep learning. Depending on their methodologies, researchers have proposed different ways of classifying sign languages. As technologies develop, we can explore the limitations of previous works and improve accuracy. Ref. 13 proposes a technique for recognizing hand motions, which are an essential part of sign language vocabulary, based on an efficient deep convolutional neural network (CNN) architecture. The proposed CNN design eliminates the need to detect and segment hands in the captured images, reducing the computational burden faced during hand pose recognition with classical approaches. In our method, we used two input channels, for the images and the hand landmarks, to obtain more robust data, and made the process more efficient with dynamic learning rate adjustment. In ref. 14 , the presented results were obtained by retraining and testing a convolutional neural network based on Inception v3 on a sign language gesture dataset. The model comprises multiple convolution filter inputs that are processed on the same input. A capsule-based deep neural network sign posture translator for American Sign Language (ASL) fingerspelling (postures) 20 has been introduced, in which capsules and pooling are used simultaneously in the network. This work confirms that using pooling and capsule routing in the same network can improve the network's accuracy and convergence speed. In our method, we used Google's pre-trained model to extract the hand landmarks, which is similar to transfer learning. We have shown that using two input channels can also improve accuracy.

Moreover, ref. 5 proposed a 3DRCNN model integrating a 3D convolutional neural network (3DCNN) and an enhanced fully connected recurrent neural network (FC-RNN), where the 3DCNN learns multi-modal features from RGB, motion, and depth channels, and the FC-RNN captures the temporal information among short video clips divided from the original video. Consecutive clips with the same semantic meaning are singled out by applying a sliding-window approach to segment the clips across the whole video sequence. A method combining a CNN with traditional feature extractors achieves accurate, real-time hand posture recognition 26 ; the architecture is evaluated on three benchmark datasets and compared with state-of-the-art convolutional neural networks. Extensive experiments are conducted using binary, grayscale, and depth data and two different validation techniques. The proposed feature fusion-based CNN 31 is shown to perform better across combinations of validation procedures and image representations. Similarly, a fusion-based CNN is shown to improve the recognition rate in our study.

After global motion analysis, the hand gesture image sequence was analyzed for keyframe selection. The video sequences of a given gesture were segmented in the RGB color space before feature extraction. This step took advantage of the colored gloves worn by the signers. Samples of pixel vectors representative of the glove’s color were used to estimate the mean and covariance matrix of the color being segmented, so the segmentation process was automated with no user intervention. In the color object tracking method, the video frames were converted into the HSV (Hue-Saturation-Value) color space. Then the pixels with the tracked color were identified and marked, and the resulting images were converted to binary images. The system identifies image regions corresponding to human skin by binarizing the input image with a suitable threshold value. Small regions were then removed from the binarized image by applying a morphological operator, and the remaining regions were selected as hand candidates.
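The skin-segmentation pipeline described above (HSV conversion, thresholding into a binary mask, and a morphological clean-up) can be sketched with Python's standard `colorsys` module. The threshold values are hypothetical placeholders chosen for illustration, not values from the cited work, and a single 3 × 3 erosion stands in for the unspecified morphological operator. The sketch also shows why this approach struggles when the background shares the hand's color: any skin-colored background pixel passes the same test.

```python
import colorsys

# Hypothetical thresholds for illustration only; real systems tune these per camera and lighting.
HUE_MAX = 50 / 360           # accept hues from 0 to 50 degrees (reddish to orange)
SAT_MIN, SAT_MAX = 0.15, 0.70
VAL_MIN = 0.35


def skin_mask(rgb):
    """Binarize rows of (R, G, B) tuples (0-255): 1 where the pixel's HSV falls in the skin range."""
    mask = []
    for row in rgb:
        bits = []
        for r, g, b in row:
            h, s, v = colorsys.rgb_to_hsv(r / 255, g / 255, b / 255)  # all in [0, 1]
            bits.append(int(h <= HUE_MAX and SAT_MIN <= s <= SAT_MAX and v >= VAL_MIN))
        mask.append(bits)
    return mask


def erode(mask):
    """3x3 binary erosion: a pixel survives only if every in-bounds neighbour is set.

    This removes isolated specks, standing in for the unspecified morphological operator.
    """
    h, w = len(mask), len(mask[0])
    return [
        [
            int(all(mask[yy][xx]
                    for yy in range(max(y - 1, 0), min(y + 2, h))
                    for xx in range(max(x - 1, 0), min(x + 2, w))))
            for x in range(w)
        ]
        for y in range(h)
    ]
```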

In the proposed method, we have used a two-headed CNN to train on the processed inputs. Although a single image input stream is widely used, two input streams have an advantage: in the classification layers of the CNN, if one stream gives a false result, it can be complemented by the other stream's weights, so combining both may yield a correct outcome. We used this idea and improved the final validation and test results. Before combining the image and hand landmark inputs, we tested each individually and obtained test accuracies of 96.29% for the images and 98.42% for the hand landmarks. We did not use binarization, as it would affect images whose background color is similar to the skin color of the hand. This method is also suitable for in-the-wild situations, as it does not depend entirely on the hand's position in the image frame. A comparison of the literature and our work is shown in Table 4 , which shows that our method outperforms most current approaches in accuracy.

Table 5 shows that the combined model, while having more parameters and consuming more memory, achieves the highest accuracy of 98.98%. This suggests that the combined approach, which incorporates both image and hand landmark information, is effective when accuracy is the priority. On the other hand, the hand landmarks model, despite having fewer parameters and lower memory consumption, also performs impressively with an accuracy of 98.42%. However, it also depends on Google's landmark model, which carries its own error rate and memory consumption. The image model, while consuming less memory, has a slightly lower accuracy of 96.29%. The choice between these models depends on the specific application requirements, the trade-off between accuracy and resource utilization, and the importance of execution time.

Conclusion

This work proposes a methodology for sign language recognition. Sign language is the core medium of communication between deaf-mute people and others. It is highly applicable in real-world scenarios such as communication, human–computer interaction, security, and advanced AI. For a long time, researchers have been working in this field to build a reliable, low-cost, and publicly available SLR system using different sensors, images, videos, and other techniques. Many datasets have been used, including numeric sensor, motion, and image datasets. Most datasets are prepared under good lab conditions for experiments, which may not reflect the real world. Therefore, to reflect real-world situations, the Fingerspelling dataset has been used, which contains real-world conditions such as complex backgrounds and uneven image shapes. First, the raw images are processed and resized to 50 × 50. Then, the hand landmark points are detected and extracted from these hand images. Because each image goes through two processing techniques, there are two data channels, and a multi-headed CNN architecture has been proposed for them. All data have been augmented to avoid overfitting, and dynamic learning rate adjustment has been used. The prepared data were split 70–30% into training and test sets. On the 30% split, a validation accuracy of 98.98% has been achieved. Given the size of the dataset, this accuracy is considerably more reliable.
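The reported counts match a 70–30 split: 46,023 + 19,725 = 65,748 images, and 19,725 / 65,748 ≈ 0.300. The paper does not say how the split was drawn; the sketch below assumes a per-class (stratified) random split with a fixed seed, which keeps all 24 letters represented in both sets in roughly the same proportions.

```python
import random
from collections import defaultdict


def stratified_split(labels, test_fraction=0.3, seed=42):
    """Return sorted (train_indices, test_indices), holding out about test_fraction of each class."""
    by_class = defaultdict(list)
    for idx, label in enumerate(labels):
        by_class[label].append(idx)
    rng = random.Random(seed)  # fixed seed so the split is reproducible
    train, test = [], []
    for label in sorted(by_class):
        idxs = by_class[label]
        rng.shuffle(idxs)
        n_test = round(len(idxs) * test_fraction)
        test.extend(idxs[:n_test])
        train.extend(idxs[n_test:])
    return sorted(train), sorted(test)
```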

Compared with the literature, the proposed method has some limitations. Some methods may work with small image datasets, but because we use a simple CNN model, this method requires a good number of training images. The proposed method also depends on the hand landmark extraction model; a different hand landmark model may produce different results. In raw image processing, it is possible to detect the hand region to reduce the image size, which may improve recognition and reduce training time; we may try this in future work. Currently, raw image processing takes a considerable amount of training time because the whole image is used for training.

Data availability

The dataset used in this paper (ASL Fingerspelling Images (RGB & Depth)) is publicly available at Kaggle on this URL: https://www.kaggle.com/datasets/mrgeislinger/asl-rgb-depth-fingerspelling-spelling-it-out .

Anderson, R., Wiryana, F., Ariesta, M. C. & Kusuma, G. P. Sign language recognition application systems for deaf-mute people: A review based on input-process-output. Proced. Comput. Sci. 116 , 441–448. https://doi.org/10.1016/j.procs.2017.10.028 (2017).

Mummadi, C. et al. Real-time and embedded detection of hand gestures with an IMU-based glove. Informatics 5 (2), 28. https://doi.org/10.3390/informatics5020028 (2018).

Hickeys Kinect for Windows - Windows apps. (2022). Accessed 01 January 2023. https://learn.microsoft.com/en-us/windows/apps/design/devices/kinect-for-windows

Rivera-Acosta, M., Ortega-Cisneros, S., Rivera, J. & Sandoval-Ibarra, F. American sign language alphabet recognition using a neuromorphic sensor and an artificial neural network. Sensors 17 (10), 2176. https://doi.org/10.3390/s17102176 (2017).

Ye, Y., Tian, Y., Huenerfauth, M., & Liu, J. Recognizing American Sign Language Gestures from Within Continuous Videos. In 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW) , 2145–214509 (IEEE, 2018). https://doi.org/10.1109/CVPRW.2018.00280 .

Ameen, S. & Vadera, S. A convolutional neural network to classify American Sign Language fingerspelling from depth and colour images. Expert Syst. 34 (3), e12197. https://doi.org/10.1111/exsy.12197 (2017).

Sykora, P., Kamencay, P. & Hudec, R. Comparison of SIFT and SURF methods for use on hand gesture recognition based on depth map. AASRI Proc. 9 , 19–24. https://doi.org/10.1016/j.aasri.2014.09.005 (2014).

Sahoo, A. K., Mishra, G. S. & Ravulakollu, K. K. Sign language recognition: State of the art. ARPN J. Eng. Appl. Sci. 9 (2), 116–134 (2014).

Mitra, S. & Acharya, T. Gesture recognition: A survey. IEEE Trans. Syst. Man Cybern. Part C 37 (3), 311–324. https://doi.org/10.1109/TSMCC.2007.893280 (2007).

Rautaray, S. S. & Agrawal, A. Vision based hand gesture recognition for human computer interaction: A survey. Artif. Intell. Rev. 43 (1), 1–54. https://doi.org/10.1007/s10462-012-9356-9 (2015).

Amir, A. et al. A low power, fully event-based gesture recognition system. In 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR) , 7388–7397 (IEEE, 2017). https://doi.org/10.1109/CVPR.2017.781 .

Lee, J. H. et al. Real-time gesture interface based on event-driven processing from stereo silicon retinas. IEEE Trans. Neural Netw. Learn Syst. 25 (12), 2250–2263. https://doi.org/10.1109/TNNLS.2014.2308551 (2014).

Adithya, V. & Rajesh, R. A deep convolutional neural network approach for static hand gesture recognition. Proc. Comput. Sci. 171 , 2353–2361. https://doi.org/10.1016/j.procs.2020.04.255 (2020).

Das, A., Gawde, S., Suratwala, K., & Kalbande, D. Sign language recognition using deep learning on custom processed static gesture images. In 2018 International Conference on Smart City and Emerging Technology (ICSCET) , 1–6 (IEEE, 2018). https://doi.org/10.1109/ICSCET.2018.8537248 .

Pathan, R. K. et al. Breast cancer classification by using multi-headed convolutional neural network modeling. Healthcare 10 (12), 2367. https://doi.org/10.3390/healthcare10122367 (2022).

LeCun, Y., Bottou, L., Bengio, Y. & Haffner, P. Gradient-based learning applied to document recognition. Proc. IEEE 86(11), 2278–2324. https://doi.org/10.1109/5.726791 (1998).

Collobert, R. & Weston, J. A unified architecture for natural language processing. In Proceedings of the 25th International Conference on Machine Learning (ICML ’08), 160–167 (ACM Press, 2008). https://doi.org/10.1145/1390156.1390177.

Farabet, C., Couprie, C., Najman, L. & LeCun, Y. Learning hierarchical features for scene labeling. IEEE Trans. Pattern Anal. Mach. Intell. 35(8), 1915–1929. https://doi.org/10.1109/TPAMI.2012.231 (2013).

Xie, B., He, X. & Li, Y. RGB-D static gesture recognition based on convolutional neural network. J. Eng. 2018(16), 1515–1520. https://doi.org/10.1049/joe.2018.8327 (2018).

Jalal, M. A., Chen, R., Moore, R. K. & Mihaylova, L. American sign language posture understanding with deep neural networks. In 2018 21st International Conference on Information Fusion (FUSION), 573–579 (IEEE, 2018).

Shanta, S. S., Anwar, S. T. & Kabir, M. R. Bangla sign language detection using SIFT and CNN. In 2018 9th International Conference on Computing, Communication and Networking Technologies (ICCCNT), 1–6 (IEEE, 2018). https://doi.org/10.1109/ICCCNT.2018.8493915.

Sharma, A., Mittal, A., Singh, S. & Awatramani, V. Hand gesture recognition using image processing and feature extraction techniques. Proc. Comput. Sci. 173, 181–190. https://doi.org/10.1016/j.procs.2020.06.022 (2020).

Ren, S., He, K., Girshick, R. & Sun, J. Faster R-CNN: Towards real-time object detection with region proposal networks. Adv. Neural Inf. Process. Syst. 28 (2015).

Rastgoo, R., Kiani, K. & Escalera, S. Multi-modal deep hand sign language recognition in still images using restricted Boltzmann machine. Entropy 20(11), 809. https://doi.org/10.3390/e20110809 (2018).

Jhuang, H., Serre, T., Wolf, L. & Poggio, T. A biologically inspired system for action recognition. In 2007 IEEE 11th International Conference on Computer Vision, 1–8 (IEEE, 2007). https://doi.org/10.1109/ICCV.2007.4408988.

Ji, S., Xu, W., Yang, M. & Yu, K. 3D convolutional neural networks for human action recognition. IEEE Trans. Pattern Anal. Mach. Intell. 35(1), 221–231. https://doi.org/10.1109/TPAMI.2012.59 (2013).

Huang, J., Zhou, W., Li, H. & Li, W. Sign language recognition using 3D convolutional neural networks. In 2015 IEEE International Conference on Multimedia and Expo (ICME), 1–6 (IEEE, 2015). https://doi.org/10.1109/ICME.2015.7177428.

Ultraleap. Digital worlds that feel human. https://www.leapmotion.com/ (accessed 01 January 2023).

Huang, F. & Huang, S. Interpreting American sign language with Kinect. Journal of Deaf Studies and Deaf Education (Oxford University Press, 2011).

Pugeault, N. & Bowden, R. Spelling it out: Real-time ASL fingerspelling recognition. In 2011 IEEE International Conference on Computer Vision Workshops (ICCV Workshops), 1114–1119 (IEEE, 2011). https://doi.org/10.1109/ICCVW.2011.6130290.

Rahim, M. A., Islam, M. R. & Shin, J. Non-touch sign word recognition based on dynamic hand gesture using hybrid segmentation and CNN feature fusion. Appl. Sci. 9(18), 3790. https://doi.org/10.3390/app9183790 (2019).

ASL Alphabet. Kaggle. https://www.kaggle.com/grassknoted/asl-alphabet (accessed 01 January 2023).


Funding was provided by the American University of the Middle East, Egaila, Kuwait.

Author information

Authors and affiliations

Department of Computing and Information Systems, School of Engineering and Technology, Sunway University, 47500, Bandar Sunway, Selangor, Malaysia

Refat Khan Pathan

Department of Computer Science and Engineering, BGC Trust University Bangladesh, Chittagong, 4381, Bangladesh

Munmun Biswas

Department of Computer and Information Science, Graduate School of Engineering, Tokyo University of Agriculture and Technology, Koganei, Tokyo, 184-0012, Japan

Suraiya Yasmin

Centre for Applied Physics and Radiation Technologies, School of Engineering and Technology, Sunway University, 47500, Bandar Sunway, Selangor, Malaysia

Mayeen Uddin Khandaker

Faculty of Graduate Studies, Daffodil International University, Daffodil Smart City, Birulia, Savar, Dhaka, 1216, Bangladesh

Mayeen Uddin Khandaker

College of Engineering and Technology, American University of the Middle East, Egaila, Kuwait

Mohammad Salman & Ahmed A. F. Youssef


Contributions

R.K.P. and M.B., conceptualization; R.K.P., methodology; R.K.P., software and coding; M.B. and R.K.P., validation; R.K.P. and M.B., formal analysis; R.K.P., S.Y., and M.B., investigation; S.Y. and R.K.P., resources; R.K.P. and M.B., data curation; S.Y., R.K.P., and M.B., writing (original draft preparation); S.Y., R.K.P., M.B., M.U.K., M.S., and A.A.F.Y., writing (review and editing); R.K.P. and M.U.K., visualization; M.U.K. and M.B., supervision; M.B., M.S., and A.A.F.Y., project administration; M.S. and A.A.F.Y., funding acquisition.

Corresponding author

Correspondence to Mayeen Uddin Khandaker .

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/ .


About this article

Cite this article

Pathan, R.K., Biswas, M., Yasmin, S. et al. Sign language recognition using the fusion of image and hand landmarks through multi-headed convolutional neural network. Sci Rep 13 , 16975 (2023). https://doi.org/10.1038/s41598-023-43852-x


Received : 04 March 2023

Accepted : 29 September 2023

Published : 09 October 2023

DOI : https://doi.org/10.1038/s41598-023-43852-x




Sign language recognition using the electromyographic signal: a systematic literature review.

1. Introduction

2. Background and Objectives

2.1. Background

- The first type is surface EMG (sEMG), which is recorded via non-invasive electrodes placed on the skin and is often used to obtain data on the intensity or the timing of superficial muscle activation [ 7 ].

- The second type is intramuscular EMG, which is recorded via invasive electrodes inserted into the muscle [ 8 ].
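Because sEMG amplitude is the usual proxy for the intensity of superficial muscle activation mentioned above, a minimal sketch of two standard amplitude measures, the mean absolute value (MAV) and the root mean square (RMS), may help. It assumes one channel already cut into a window of samples; the synthetic "rest" and "contraction" segments are hypothetical and do not come from any reviewed study.

```python
import math
import random
from typing import Sequence


def mean_absolute_value(window: Sequence[float]) -> float:
    """MAV: mean of |x| over the window, a common sEMG amplitude estimate."""
    return sum(abs(x) for x in window) / len(window)


def root_mean_square(window: Sequence[float]) -> float:
    """RMS: square root of the mean squared value (signal power) over the window."""
    return math.sqrt(sum(x * x for x in window) / len(window))


if __name__ == "__main__":
    rng = random.Random(0)
    # Hypothetical segments: low-amplitude noise (rest) vs. higher-amplitude activity.
    rest = [rng.gauss(0.0, 0.05) for _ in range(200)]
    contraction = [rng.gauss(0.0, 0.5) for _ in range(200)]
    for name, seg in (("rest", rest), ("contraction", contraction)):
        print(f"{name}: MAV={mean_absolute_value(seg):.3f} RMS={root_mean_square(seg):.3f}")
```

Both measures scale linearly with signal amplitude, and RMS is never smaller than MAV for the same window, so either can serve as a simple activation-intensity feature.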

2.2. Objectives

- Systematically searched, identified, and critically evaluated the relevant literature on sign language recognition using EMG signals.

- Investigated the various data acquisition methods and devices used to capture EMG signals and their impact on recognition performance.

- Analyzed the different feature extraction and classification techniques applied to EMG signals for sign language recognition.

- Identified the most commonly used datasets and evaluated their relevance and suitability for sign language recognition using EMG signals.

- Assessed the current state of research in terms of the sample size and the diversity of the participants in the studies.

- Provided a summary of the current state of research on sign language recognition using EMG signals and made recommendations for future research.

- Identified the challenges and limitations of using EMG signals for sign language recognition, including problems related to signal quality, feature extraction, and classification.

3. Data Acquisition and Devices


4. Feature Extraction

5. Classification Approaches

5.1. k-Nearest Neighbor-Based Approaches

5.2. Support Vector Machine-Based Approaches

5.3. Hidden Markov Model-Based Approaches

5.4. Artificial Neural Network-Based Approaches

5.5. Convolutional Neural Network-Based Approaches

5.6. Long Short-Term Memory-Based Approaches

5.7. Other Proposed Approaches

6. Discussion

7. Conclusions

Author Contributions

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

| Ref. | Sensors | Device | EMG Channels | Sampling Rate | Hand |
|---|---|---|---|---|---|
| [ ] | sEMG + accelerometer + gyroscope + magnetometer | Myo armband | 8 | 200 Hz | Right |
| [ ] | sEMG + accelerometer | - | 5 | 1000 Hz | Right |
| [ ] | sEMG + 2 accelerometers | - | 8 | 1000 Hz | Right/both |
| [ ] | sEMG + 2 accelerometers + 2 gyroscopes | - | 8 | 1000 Hz | Right |
| [ ] | sEMG + accelerometer + gyroscope | Myo armband | 8 | 200 Hz | - |
| [ ] | sEMG | Custom device: conductor muscle electrical sensor, Arduino UNO | 6 | - | - |
| [ ] | sEMG + accelerometer | Delsys Trigno Lab Wireless System | 4 | 1927 Hz | Right |
| [ ] | sEMG | Delsys Trigno | 8 | 1926 Hz | Right |
| [ ] | sEMG + accelerometer | Custom device | 4 | 1000 Hz | Both |
| [ ] | sEMG + accelerometer | Delsys Trigno Wireless EMG System | 3 | 2000 Hz | Right |
| [ ] | sEMG | Myo armband | 8 | 200 Hz | - |
| [ ] | sEMG + accelerometer | Custom device: sEMG sensors, MMA7361 | 4 | 1000 Hz | Both |
| [ ] | sEMG + accelerometer + gyroscope + magnetometer | Myo armband | 8 | 100 Hz | - |
| [ ] | sEMG + 2 accelerometers | Custom device: NI PCI-6036E (National Instruments) | 8 | 1000 Hz | Both |
| [ ] | sEMG + accelerometer + gyroscope | Myo armband | 8 | 200 Hz | Right |
| [ ] | sEMG + accelerometer + gyroscope | Custom device | 6 | 500 Hz | Right |
| [ ] | sEMG + accelerometer + gyroscope | Custom device | 4 | 1000 Hz | Both |
| [ ] | sEMG + accelerometer + gyroscope | Myo armband | 8 | 200 Hz | Both |
| [ ] | sEMG + accelerometer | Custom device | 8 | 1000 Hz | Both |
| [ ] | sEMG | Delsys Trigno Lab Wireless System | 6 | 2000 Hz | - |
| [ ] | sEMG + accelerometer + gyroscope + magnetometer | Myo armband | 8 | 200 Hz | Right |
| [ ] | sEMG + accelerometer + gyroscope + gravity sensor | Smartwatch + Myo armband | 8 | 200 Hz | Dominant |
| [ ] | sEMG + accelerometer + gyroscope + magnetometer | Custom device: InvenSense MPU9150, TI ADS1299 | 4 | 1000 Hz | Right |
| [ ] | sEMG + accelerometer + gyroscope + magnetometer | Custom device: InvenSense MPU9150, TI ADS1299 | 4 | 1000 Hz | Right |
| [ ] | sEMG | Myo armband | 8 | 200 Hz | Dominant |
| [ ] | sEMG + accelerometer + gyroscope + magnetometer | Myo armband | 8 | 200 Hz | Right/both |
| [ ] | sEMG | Myo armband | 8 | 200 Hz | - |
| [ ] | sEMG + accelerometer + gyroscope + magnetometer | 2 Myo armbands | 8 per armband | 200 Hz | Both |
| | sEMG + accelerometer + gyroscope | | | | |
| | sEMG + accelerometer + magnetometer | | | | |
| | sEMG + accelerometer + magnetometer | | | | |
| | sEMG + accelerometer | | | | |
| | sEMG + gyroscope + magnetometer | | | | |
| | sEMG | | | | |
| [ ] | sEMG + accelerometer | 2 Myo armbands | 8 | 200 Hz | Both |
| [ ] | sEMG | Myo armband | 8 | 200 Hz | Right |
| [ ] | sEMG + FMG | Custom device: Texas Instruments ADS1299 | 8 | 1000 Hz | - |
| [ ] | sEMG + accelerometer + gyroscope + magnetometer | Myo armband | 8 | 200 Hz | - |
| [ ] | sEMG + accelerometer + gyroscope + magnetometer | Myo armband | 8 | 200 Hz | Both |
| [ ] | sEMG + accelerometer + gyroscope | Myo armband | 8 | 200 Hz | Both |
| [ ] | HD-sEMG | Custom device | 8 × 16 | 400 Hz | Right |
| [ ] | sEMG + accelerometer + gyroscope + magnetometer | Myo armband | 8 | 200 Hz | - |
| [ ] | sEMG + accelerometer + flex sensor | Custom device | 2 | - | - |
| [ ] | sEMG | Myo armband | 8 | 200 Hz | - |
| [ ] | sEMG + accelerometer + gyroscope + magnetometer + Leap Motion + VIVE HMD | Myo armband | 8 | 200 Hz | - |
| [ ] | sEMG | BioRadio 150 (CleveMed) | 8 | 960 Hz | Right |
| [ ] | sEMG + accelerometer + gyroscope | Myo armband | 8 | 200 Hz | Both |
| [ ] | sEMG | Delsys Trigno Lab Wireless System | 6 | 2000 Hz | Both |
| [ ] | sEMG | BIOPAC MP45 | 4 | 1000 Hz | Right |
| [ ] | sEMG | Delsys Trigno Wireless EMG | 3 | 1111 Hz | Dominant |
| | Accelerometer + sEMG | | | | |
| [ ] | sEMG + accelerometer + gyroscope + magnetometer | - | 3 | 1000 Hz | Both |
| [ ] | sEMG + accelerometer + gyroscope | Delsys Trigno Lab Wireless System | 3 | 1000 Hz | Both |
| [ ] | sEMG | Delsys Trigno Lab Wireless System | 5 | 1000 Hz | - |
| [ ] | sEMG + accelerometer + gyroscope | Delsys Trigno Lab Wireless System | 2 | 1000 Hz | Dominant |
| [ ] | sEMG | Delsys Trigno Lab Wireless System | 3 | 1000 Hz | Dominant |
| [ ] | sEMG | Custom device | 1 | 1000 Hz | Right |
| [ ] | sEMG | Delsys Trigno Lab Wireless System | 3 | 1100 Hz | Right |
| [ ] | sEMG + accelerometer + gyroscope | Delsys Trigno Lab Wireless System | 3 | 900 Hz | Both |
| [ ] | sEMG | Myo armband | 8 | 200 Hz | - |
| [ ] | sEMG + accelerometer + gyroscope + magnetometer | Myo armband | 8 | 200 Hz | - |
| [ ] | sEMG + accelerometer + gyroscope | Myo armband | 8 | 200 Hz | Right |
| [ ] | sEMG | Myo armband | 8 | 200 Hz | - |
| [ ] | sEMG + accelerometer + gyroscope + magnetometer | Myo armband | 8 | 200 Hz | - |
| [ ] | sEMG | Custom device | 4 | - | - |
| [ ] | sEMG | Myo armband | 8 | 100 Hz | - |
| [ ] | sEMG + pressure | Custom device | 3 | - | - |
| [ ] | Leap Motion + sEMG | Myo armband | 8 | 200 Hz | Both |
| [ ] | sEMG + accelerometer + gyroscope + magnetometer | Myo armband | 8 | 200 Hz | - |
| [ ] | sEMG + accelerometer + gyroscope + magnetometer | Myo armband | 8 | 200 Hz | - |
| [ ] | sEMG + accelerometer + gyroscope + magnetometer | Myo armband | 8 | 200 Hz | Both |
| [ ] | sEMG | Myo armband | 8 | 200 Hz | Right |
| [ ] | sEMG | Custom device | 3 | 500 Hz | Right |
| [ ] | sEMG | Myo armband | 8 | 200 Hz | Right |
| [ ] | sEMG + accelerometer + gyroscope + magnetometer | Myo armband | 8 | 200 Hz | Right |
| [ ] | sEMG | Myo armband | 8 | 200 Hz | - |
| [ ] | sEMG | Myo armband | 8 | 200 Hz | - |
| [ ] | sEMG | Myo armband | 8 | 200 Hz | Dominant |
| [ ] | sEMG + accelerometer + gyroscope + magnetometer | Myo armband | 8 | 200 Hz | Right |
| [ ] | sEMG | sEMG armband | 8 | 600 Hz | Right |
| [ ] | sEMG | BIOPAC | 3 | - | Dominant |
| [ ] | sEMG | BIOPAC | 3 | - | - |
| [ ] | sEMG | Myo armband | 8 | 200 Hz | - |
| [ ] | sEMG + accelerometer + gyroscope + magnetometer | Myo armband | 8 | 200 Hz | - |
| [ ] | sEMG + accelerometer + gyroscope + force-sensing resistor | Custom device | 1 | 10 Hz | Right |
| [ ] | sEMG | Custom device | 4 | 100 Hz | Left |
| [ ] | sEMG + accelerometer + gyroscope + magnetometer | Myo armband | 8 | 200 Hz | Dominant |
| [ ] | sEMG + accelerometer | Custom device | 1 | - | - |
| [ ] | EMG | Myo armband | 8 | 200 Hz | Right |
| [ ] | sEMG + accelerometer + gyroscope | Custom device | 4 | - | Right |
| [ ] | sEMG + accelerometer | BTS FREEMG | 4 | 1000 Hz | Right |
| [ ] | sEMG | TMSi Porti | 8 | 1000 Hz | - |
| [ ] | sEMG + accelerometer + gyroscope + magnetometer | Myo armband | 8 | 200 Hz | Right |
| [ ] | sEMG + accelerometer + gyroscope + magnetometer | Myo armband | 8 | 200 Hz | - |
| [ ] | sEMG + accelerometer | Bioplux8 | 5 | 1000 Hz | Right |
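Most rows in the device table use the Myo armband, which streams 8 sEMG channels at 200 Hz. Before features such as MAV or RMS can be computed, such a stream is typically cut into short, overlapping analysis windows. The sketch below shows one way to do this; the 200 ms window and 50% overlap are hypothetical choices for illustration, not parameters taken from any of the listed studies, and `sliding_windows` is an illustrative helper, not a library function.

```python
from typing import List, Sequence

Sample = Sequence[float]  # one reading per EMG channel


def sliding_windows(stream: Sequence[Sample], fs_hz: float,
                    window_ms: float, overlap: float) -> List[List[Sample]]:
    """Cut a multichannel stream into fixed-length, overlapping windows.

    fs_hz: sampling rate in Hz; window_ms: window length in milliseconds;
    overlap: fraction of a window shared with the next one (0 <= overlap < 1).
    Trailing samples that do not fill a whole window are dropped.
    """
    if not 0.0 <= overlap < 1.0:
        raise ValueError("overlap must be in [0, 1)")
    window = int(round(fs_hz * window_ms / 1000.0))  # samples per window
    if window < 1:
        raise ValueError("window must contain at least one sample")
    step = max(1, int(round(window * (1.0 - overlap))))  # hop between window starts
    return [list(stream[start:start + window])
            for start in range(0, len(stream) - window + 1, step)]


if __name__ == "__main__":
    fs = 200  # Myo armband sampling rate, as listed in the table
    one_second = [[0.0] * 8 for _ in range(fs)]  # hypothetical: 1 s of 8-channel data
    windows = sliding_windows(one_second, fs_hz=fs, window_ms=200, overlap=0.5)
    print(len(windows), "windows of", len(windows[0]), "samples x", len(windows[0][0]), "channels")
```

Dropping incomplete trailing windows keeps every window the same length, so each one yields a feature vector of fixed size. Note that the same window duration corresponds to very different sample counts across devices (40 samples at 200 Hz versus 400 at 2000 Hz).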

| Ref. | Target | Type | Classes | Dataset Size | EMG Channels | Electrode Placement | Sampling Rate (Hz) | Subjects | Accuracy |
|---|---|---|---|---|---|---|---|---|---|
| [ ] | Chinese SL | Word | 86 | 85,424 | 8 | Armband on arm muscles: Extensor carpi radialis longus, Flexor carpi ulnaris, Flexor carpi radialis, Brachioradialis, Extensor digitorum, Extensor digiti minimi | 200 | 20 | 94.72–98.92% |
| [ ] | Chinese SL | Word | 72 | - | 5 | Extensor digiti minimi, Palmaris longus, Extensor carpi ulnaris, Extensor carpi radialis, Brachioradialis | 1000 | 2 | 93.1% |
| [ ] | Chinese SL | Subword | 121 | 2420 | 8 | Extensor digiti minimi, Palmaris, Extensor carpi ulnaris, Extensor carpi radialis | 1000 | 1 | 95.78% |
| [ ] | Chinese SL | Subword | 150 | 3750 | 8 | Extensor digiti minimi, Palmaris longus, Extensor carpi ulnaris, Extensor carpi radialis | 1000 | 8 | 88.2–95.1% |
| [ ] | Chinese SL | Word | 48 | 4800 | 8 | Armband on arm muscles, as in [ ] | 200 | 1 | 97.12% |
| [ ] | Chinese SL | Alphabet | 4 | 800 | 6 | Pronator quadratus, Flexor digitorum superficialis, Flexor carpi ulnaris, Palmaris longus, Flexor carpi radialis, Brachioradialis, Pronator teres | - | 4 | 86% |
| [ ] | Chinese SL | Word | 53 | 26,500 | 4 | Extensor digitorum, Palmaris longus, Extensor carpi radialis longus, Flexor carpi ulnaris, Extensor pollicis longus, Extensor pollicis brevis; the hybrid sensor (EMG + IMU) is placed on the extensor digiti minimi | 1927 | 5 | 96.01 ± 0.83% |
| | | Alphabet | 23 | 8028 | | | | | 92.73 ± 1.47% |
| [ ] | Chinese SL | Alphabet | 30 | 600 | 8 | Extensor carpi radialis brevis, Extensor digitorum, Brachioradialis, Extensor carpi ulnaris | 1926 | 4 | 95.48% |
| [ ] | Chinese SL | Subword | 120 | 14,200 | 4 | - | 1000 | 5 | 91.51% |
| [ ] | Chinese SL | Word | 18 | 864 | 3 | Extensor carpi radialis longus, Extensor carpi ulnaris, Flexor carpi radialis longus, Extensor digitorum, Tendons of extensor digitorum/lumbricals | 2000 | 8 | 84.9–91.4% |
| [ ] | Chinese SL | Word | 15 | 5250 | 8 | Armband on arm muscles, as in [ ] | 200 | 10 | 88.7% |
| [ ] | Chinese SL | Subword | 121 | 1452 | 4 | Extensor digiti minimi, Palmaris longus, Extensor carpi ulnaris, Extensor carpi radialis | 1000 | 5 | 98.25% |
| [ ] | Chinese SL | Word | 35 | 4480 | 8 | Armband on arm muscles, as in [ ] | 100 | 8 | 98.12% |
| [ ] | Chinese SL | Word | 120 | 975 | 4 | Extensor digiti minimi, Palmaris longus, Extensor carpi ulnaris, Extensor carpi radialis | 1000 | 5 | 96.5% |
| | | Sentence | 200 | | | | | | 86.7% |
| [ ] | Chinese SL | Word | 50 | 2780 | 8 | Armband on arm muscles, as in [ ] | 200 | 10 | 89% |
| [ ] | Chinese SL | Word | 5 | 5000 | 6 | Extensor digitorum, Flexor carpi radialis longus, Extensor carpi radialis longus, Extensor carpi ulnaris | 500 | 4 | 91.2% |
| [ ] | Chinese SL | Word | 150 | 30,000 | 4 | Extensor digiti minimi, Palmaris longus, Extensor carpi ulnaris, Extensor carpi radialis | 1000 | 8 | 90% |
| [ ] | Chinese SL | Word | 60 | 20,400 | 8 | Armband on arm muscles, as in [ ] | 200 | 34 | WER 19.7 |
| [ ] | Chinese SL | Subword | 116 | 27,840 | 8 | Extensor digiti minimi, Palmaris longus, Extensor carpi ulnaris, Extensor carpi radialis | 1000 | 2 | 97.55% |
| [ ] | Chinese SL | Hand shape | 13 | 780 | 6 | Extensor carpi radialis longus, Extensor digitorum and flexor carpi ulnaris, Palmaris longus, Extensor pollicis longus, Abductor pollicis longus, Extensor digiti minimi | 1927 | 10 | 78.15% |
| [ ] | Chinese SL | Word | 10 | 20,000 | 8 | Armband on arm muscles, as in [ ] | 200 | 10 | 98.66% |
| [ ] | American SL | Sentence | 250 | 10,625 | 8 | Armband on arm muscles, as in [ ] | 200 | 15 | WER 0.29% |
| [ ] | American SL | Word | 80 | 24,000 | 4 | Extensor digitorum, Flexor carpi radialis longus, Extensor carpi radialis longus, Extensor carpi ulnaris | 1000 | 4 | 85.24–96.16% |
| [ ] | American SL | Word | 40 | 4000 | 4 | Extensor digitorum, Flexor carpi radialis longus, Extensor carpi radialis longus, Extensor carpi ulnaris | 1000 | 4 | 95.94% |
| [ ] | American SL | Word | 27 | 2080 | 8 | Armband on arm muscles, as in [ ] | 200 | 1 | 60.85–80% |
| | | | | 10,400 | | | | 10 | 34.00–51.54% |
| [ ] | American SL | Word | 70 | - | 8 | Armband on arm muscles, as in [ ] | 200 | 15 | 93.7% |
| | | Sentence | 100 | | | | | | |
| [ ] | American SL | Word | 8 | 1300 | 8 | Armband on arm muscles, as in [ ] | 200 | 50 | 99.31% |
| | | Alphabet | 5 | | | | | | |
| [ ] | American SL | Word | 9 | SCEPTRE database | 8 | Armband on arm muscles, as in [ ] | 200 | 3 | 47.4–100% |
| [ ] | American SL | Word | 50 | 10,000 | 8 | Armband on arm muscles, as in [ ] | 200 | 10 | 33.66% |
| [ ] | American SL | Word | 20 | 4000 | 8 | Armband on arm muscles, as in [ ] | 200 | 20 | 97.9% |
| [ ] | American SL | Digit | 10 | 250 | 8 | Armband on arm muscles, as in [ ] | 1000 | 5 | 91.6 ± 3.5% |
| [ ] | American SL | Alphabet | 24 | 240 | 8 | Armband on arm muscles, as in [ ] | 200 | 1 | 80% |
| [ ] | American SL | Word | 13 | 390 | 8 | Armband on arm muscles, as in [ ] | 200 | 3 | 81.20% |
| [ ] | American SL | Word | 13 | 26,000 | 8 | Armband on arm muscles, as in [ ] | 200 | 3 | 93.79% |
| [ ] | American SL | Hand shape | 10 | 120 | 8 × 16 | Intrinsic muscles, Extrinsic muscles | 400 | 4 | 78% |
| [ ] | American SL | Alphabet | 26 | 936 | 8 | Armband on arm muscles, as in [ ] | 200 | 8 | 95.36% |
| [ ] | American SL | Alphabet | 26 | 130 | 2 | Flexor carpi radialis, Extensor carpi radialis longus | - | 1 | 95% |
| [ ] | American SL | Word | 10 | 3000 | 8 | Armband on arm muscles, as in [ ] | 200 | - | 95% |
| [ ] | American SL | Alphabet | 25 | 33,600 | 8 | Armband on arm muscles, as in [ ] | 200 | 7 | 100% |
| [ ] | American SL | Alphabet | 26 | 2080 | 8 | - | 960 | 1 | 92% |
| [ ] | American SL | Word | 20 | - | 8 | Armband on arm muscles, as in [ ] | 200 | 10 | 97.72% |
| [ ] | American SL | Word | 20 | 1800 | 6 | - | 2000 | 3 | 97% |
| [ ] | Indian SL | Word | 5 | 250 | 4 | Flexor carpi radialis, Extensor carpi radialis longus; reference electrode: palm | 1000 | 6 | 90% |
| [ ] | Indian SL | Word | 10 | 1200 | 3 | Extensor carpi radialis longus, Extensor digitorum, Flexor carpi radialis | 1111 | 6 | 87.5% |
| [ ] | Indian SL | Digit/word | 9 | 180 | 3 | Sites where maximum forelimb muscle movement is observed | 1000 | - | 91.1% |
| [ ] | Indian SL | Word | 100 | 16,000 | 3 | - | 1000 | 10 | 97% |
| [ ] | Indian SL | Hand shape | 15 | 1200 | 5 | - | 1111 | - | 100% |
| [ ] | Indian SL | Hand shape + word | 12 | 2400 | 2 | Flexor digitorum, Extensor carpi radialis | 1111 | 10 | 88.25% |
| [ ] | Indian SL | Digit | 9 | 900 | 3 | Flexor digitorum, Extensor carpi radialis, Brachioradialis | 1111 | 5 | 90.10% |
| [ ] | Indian SL | Hand shape | 4 | 120 | 1 | Flexor carpi radialis | 1000 | - | 97.50% |
| [ ] | Indian SL | Hand shape + word | 10 | 800 | 3 | Flexor carpi ulnaris, Extensor carpi radialis, Brachioradialis | 1100 | 4 | 92.37% |
| [ ] | Indian SL | Word | 100 | 20,000 | 3 | - | 900 | 10 | 90.73% |
| [ ] | Brazilian SL | Alphabet | 20 | 2200 | 8 | Armband on arm muscles, as in [ ] | 200 | 1 | 4–95% |
| [ ] | Brazilian SL | Alphabet | 26 | 520 | 8 | Armband on arm muscles, as in [ ] | 200 | 10 | 89.11% |
| [ ] | Brazilian SL | Alphabet | 26 | - | 8 | Armband on arm muscles, as in [ ] | 200 | 15 | 99.06% |
| [ ] | Brazilian SL | Alphabet | 20 | 840 | 8 | Extensor carpi ulnaris, Flexor carpi radialis | 200 | 1 | 81.60% |
| [ ] | General | Hand shape | 12 | - | 8 | Brachial | 200 | - | - |
| [ ] | General | Digit/alphabet | 36 | - | 4 | Lumbrical muscles, Hypothenar muscles, Thenar muscles, Flexor carpi radialis | - | - | - |
| [ ] | General | Hand shape | 6 | 3600 | 8 | Armband on arm muscles, as in [ ] | 100 | 3 | 94% |
| [ ] | General | Digit | 10 | 500 | 3 | - | - | - | 86.80% |
| [ ] | Indonesian SL | Word + alphabet | 10 | 200 | 8 | Armband on arm muscles, as in [ ] | 200 | 1 | 98.63% |
| [ ] | Indonesian SL | Alphabet | 26 | 260 | 8 | Armband on arm muscles, as in [ ] | 200 | 1 | 93.08% |
| [ ] | Indonesian SL | Word + alphabet | 52 | 5200 | 8 | Armband on arm muscles, as in [ ] | 200 | 20 | 86.75% |
| [ ] | Indonesian SL | Alphabet | 26 | 260 | 8 | Armband on arm muscles, as in [ ] | 200 | - | 82.31% |
| [ ] | Arabic SL | Alphabet | 28 | 33,600 | 8 | Armband on arm muscles, as in [ ] | 200 | 3 | 98.49% |
| [ ] | Arabic SL | Word | 5 | 150 | 3 | - | 500 | 1 | 90.66% |
| [ ] | Arabic SL | Alphabet | 28 | 15,000 | 8 | Armband on arm muscles, as in [ ] | 200 | 8 | 97.4% |
| [ ] | Italian SL | Alphabet | 26 | 780 | 8 | Armband on arm muscles, as in [ ] | 200 | 30 | 97% |
| [ ] | Italian SL | Alphabet | - | 780 | 8 | Armband on arm muscles, as in [ ] | 200 | 1 | 93.5% |
| [ ] | Italian SL | Alphabet | 26 | 780 | 8 | Armband on arm muscles, as in [ ] | 200 | 1 | - |
| [ ] | Korean SL | Word | 3 | 1200 | 8 | Armband on arm muscles, as in [ ] | 200 | 1 | 94% |
| [ ] | Korean SL | Word | 30 | - | 8 | Armband on arm muscles, as in [ ] | 200 | 1 | 99.6% |
| [ ] | Korean SL | Word | 38 | 300 | 8 | Armband on arm muscles, as in [ ], with the reference channel on the flexor carpi radialis | 600 | 17 | 97.4% |
| [ ] | Pakistani SL | Alphabet | 26 | 780 | 3 | Flexor carpi radialis, Flexor digitorum superficialis | - | 1 | 81% |
| [ ] | Pakistani SL | Sentence | 11 | 550 | 3 | - | - | 5 | 85.40% |
| [ ] | Turkish SL | Number | 11 | 1656 | 8 | Armband on arm muscles, as in [ ] | 200 | 9 | 86.61% |
| [ ] | Turkish SL | Hand shape | 36 | - | 8 | - | 200 | 10 | 78% |
| [ ] | Malaysian SL | Word | 5 | 150 | 1 | Extensor carpi ulnaris | 10 | 3 | 91% |
| [ ] | Peruvian SL | Alphabet | 27 | 135 | 4 | - | 100 | 1 | 93.9% |
| [ ] | Polish SL | Word | 18 | 21,420 | 8 | Armband on arm muscles, as in [ ] | 200 | 14 | 91% |
| [ ] | German SL | Word | 7 | 560 | 1 | Flexor carpi radialis, near the wrist | - | 8 | 96.31% |
| [ ] | French SL | Alphabet | 7 | 2480 | 8 | Armband on arm muscles, as in [ ] | 200 | 4 | 90% |
| [ ] | Persian SL | Word | 20 | 2000 | 4 | Extensor digitorum communis, Flexor carpi radialis longus, Extensor carpi radialis longus, Extensor carpi ulnaris | - | 10 | 96.13% |
| [ ] | Colombian SL | Word | 12 | 360 | 4 | Extensor digitorum communis, Extensor carpi ulnaris, Flexor carpi ulnaris, Flexor carpi radialis | 1000 | 3 | 96.66% |
| [ ] | Thai SL | Alphabet | 10 | 2000 | 8 | Armband on arm muscles, as in [ ] | 1000 | 1 | 95% |
| [ ] | Sinhala SL | Word | 12 | 360 | 8 | Armband on arm muscles, as in [ ] | 200 | 6 | 94.4% |
| [ ] | Irish SL | Alphabet | 26 | 1560 | 8 | Armband on arm muscles, as in [ ], focusing on the extensor digitorum (posterior forearm) and the flexor carpi ulnaris (anterior forearm) | 200 | 12 | 78% |
| [ ] | Greek SL | Word | 60 | - | 5 | Flexor carpi ulnaris, Flexor digitorum superficialis, Flexor carpi radialis, Extensor digitorum communis, Extensor carpi ulnaris | 1000 | - | 92% |
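Two rows of the study table report word error rate (WER) rather than accuracy. WER is conventionally computed as (S + D + I) / N, where S, D, and I are the substitutions, deletions, and insertions in a minimum-edit alignment between the recognized and reference word sequences, and N is the number of reference words. The sketch below uses the standard Levenshtein dynamic program; the example sentences are hypothetical and are not drawn from the reviewed studies.

```python
from typing import Sequence


def word_error_rate(reference: Sequence[str], hypothesis: Sequence[str]) -> float:
    """WER = (S + D + I) / N, computed as the word-level Levenshtein distance / N."""
    n, m = len(reference), len(hypothesis)
    if n == 0:
        raise ValueError("reference must contain at least one word")
    # prev[j] holds the edit distance between reference[:i-1] and hypothesis[:j].
    prev = list(range(m + 1))
    for i in range(1, n + 1):
        curr = [i] + [0] * m
        for j in range(1, m + 1):
            cost = 0 if reference[i - 1] == hypothesis[j - 1] else 1
            curr[j] = min(prev[j] + 1,         # deletion of a reference word
                          curr[j - 1] + 1,     # insertion of a hypothesis word
                          prev[j - 1] + cost)  # substitution, or match if cost == 0
        prev = curr
    return prev[m] / n


if __name__ == "__main__":
    ref = "I want to drink water".split()
    hyp = "I want drink hot water".split()
    print(f"WER = {word_error_rate(ref, hyp):.2f}")
```

Here one deletion ("to") and one insertion ("hot") over five reference words give a WER of 0.40. Because insertions are counted, WER can exceed 1, which is one reason it is not directly comparable with the accuracy figures in the same column.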

- Aviles, M.; Rodríguez-Reséndiz, J.; Ibrahimi, D. Optimizing EMG Classification through Metaheuristic Algorithms. Technologies 2023, 11, 87.

- Aviles, M.; Sánchez-Reyes, L.M.; Fuentes-Aguilar, R.Q.; Toledo-Pérez, D.C.; Rodríguez-Reséndiz, J. A Novel Methodology for Classifying EMG Movements Based on SVM and Genetic Algorithms. Micromachines 2022, 13, 2108.

- Toledo-Pérez, D.C.; Martínez-Prado, M.A.; Gómez-Loenzo, R.A.; Paredes-García, W.J.; Rodríguez-Reséndiz, J. A study of movement classification of the lower limb based on up to 4-EMG channels. Electronics 2019, 8, 259.

- Toledo-Pérez, D.C.; Rodríguez-Reséndiz, J.; Gómez-Loenzo, R.A.; Jauregui-Correa, J.C. Support vector machine-based EMG signal classification techniques: A review. Appl. Sci. 2019, 9, 4402.

- Amor, A.B.H.; Ghoul, O.; Jemni, M. Toward sign language handshapes recognition using Myo armband. In Proceedings of the 2017 6th International Conference on Information and Communication Technology and Accessibility (ICTA), Muscat, Oman, 19–21 December 2017; IEEE: Piscataway, NJ, USA, 2017; pp. 1–6.

- Kim, J.; Mastnik, S.; André, E. EMG-based hand gesture recognition for realtime biosignal interfacing. In Proceedings of the 13th International Conference on Intelligent User Interfaces, Gran Canaria, Spain, 13–16 January 2008; pp. 30–39.

- Farina, D.; Negro, F. Accessing the neural drive to muscle and translation to neurorehabilitation technologies. IEEE Rev. Biomed. Eng. 2012, 5, 3–14.

- Merletti, R.; De Luca, C.J. New techniques in surface electromyography. Comput. Aided Electromyogr. Expert Syst. 1989, 9, 115–124.

- Ahsan, M.R.; Ibrahimy, M.I.; Khalifa, O.O. EMG signal classification for human computer interaction: A review. Eur. J. Sci. Res. 2009, 33, 480–501.

- Di Pino, G.; Guglielmelli, E.; Rossini, P.M. Neuroplasticity in amputees: Main implications on bidirectional interfacing of cybernetic hand prostheses. Prog. Neurobiol. 2009, 88, 114–126.

- Kallenberg, L.A.C. Multi-Channel Array EMG in Chronic Neck-Shoulder Pain. Ph.D. Thesis, Roessingh Research and Development, University of Twente, Enschede, The Netherlands, 2007.

- Galván-Ruiz, J.; Travieso-González, C.M.; Tejera-Fettmilch, A.; Pinan-Roescher, A.; Esteban-Hernández, L.; Domínguez-Quintana, L. Perspective and evolution of gesture recognition for sign language: A review. Sensors 2020, 20, 3571.

- Moher, D.; Shamseer, L.; Clarke, M.; Ghersi, D.; Liberati, A.; Petticrew, M.; Shekelle, P.; Stewart, L.A. Preferred reporting items for systematic review and meta-analysis protocols (PRISMA-P) 2015 statement. Syst. Rev. 2015, 4, 1.

- Wang, F.; Zhao, S.; Zhou, X.; Li, C.; Li, M.; Zeng, Z. A recognition–verification mechanism for real-time Chinese sign language recognition based on multi-information fusion. Sensors 2019, 19, 2495.

- Zhang, X.; Chen, X.; Li, Y.; Lantz, V.; Wang, K.; Yang, J. A framework for hand gesture recognition based on accelerometer and EMG sensors. IEEE Trans. Syst. Man Cybern. Part A Syst. Hum. 2011, 41, 1064–1076.

- Li, Y.; Chen, X.; Tian, J.; Zhang, X.; Wang, K.; Yang, J. Automatic recognition of sign language subwords based on portable accelerometer and EMG sensors. In Proceedings of the International Conference on Multimodal Interfaces and the Workshop on Machine Learning for Multimodal Interaction, Beijing, China, 8–10 November 2010; pp. 1–7.

- Yu, Y.; Chen, X.; Cao, S.; Zhang, X.; Chen, X. Exploration of Chinese sign language recognition using wearable sensors based on deep belief net. IEEE J. Biomed. Health Inform. 2019, 24, 1310–1320.

- Jane, S.P.Y.; Sasidhar, S. Sign language interpreter: Classification of forearm emg and imu signals for signing exact english. In Proceedings of the 2018 IEEE 14Th International Conference on Control and Automation (ICCA), Anchorage, AK, USA, 12–15 June 2018; IEEE: Piscataway, NJ, USA, 2018; pp. 947–952. [ Google Scholar ]

- Chen, H.; Qin, T.; Zhang, Y.; Guan, B. Recognition of American Sign Language Gestures Based on Electromyogram (EMG) Signal with XGBoost Machine Learning. In Proceedings of the 2021 3rd International Conference on Machine Learning, Big Data and Business Intelligence (MLBDBI), Taiyuan, China, 3–5 December 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 24–29. [ Google Scholar ]

- Cheng, J.; Chen, X.; Liu, A.; Peng, H. A novel phonology-and radical-coded Chinese sign language recognition framework using accelerometer and surface electromyography sensors. Sensors 2015 , 15 , 23303–23324. [ Google Scholar ] [ CrossRef ]

- Yuan, S.; Wang, Y.; Wang, X.; Deng, H.; Sun, S.; Wang, H.; Huang, P.; Li, G. Chinese sign language alphabet recognition based on random forest algorithm. In Proceedings of the 2020 IEEE International Workshop on Metrology for Industry 4.0 & IoT, Rome, Italy, 3–5 June 2020; IEEE: Piscataway, NJ, USA, 2020; pp. 340–344. [ Google Scholar ]

- Ma, D.; Chen, X.; Li, Y.; Cheng, J.; Ma, Y. Surface electromyography and acceleration based sign language recognition using hidden conditional random fields. In Proceedings of the 2012 IEEE-EMBS Conference on Biomedical Engineering and Sciences, Langkawi, Malaysia, 17–19 December 2012; IEEE: Piscataway, NJ, USA, 2012; pp. 535–540. [ Google Scholar ]

- Zhuang, Y.; Lv, B.; Sheng, X.; Zhu, X. Towards Chinese sign language recognition using surface electromyography and accelerometers. In Proceedings of the 2017 24Th International Conference on Mechatronics and Machine Vision in Practice (m2VIP), Auckland, New Zealand, 21–23 November 2017; IEEE: Piscataway, NJ, USA, 2017; pp. 1–5. [ Google Scholar ]

- Zhang, Z.; Su, Z.; Yang, G. Real-time Chinese Sign Language Recognition based on artificial neural networks. In Proceedings of the 2019 IEEE International Conference on Robotics and Biomimetics (ROBIO), Dali, China, 6–8 December 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 1413–1417. [ Google Scholar ]

- Su, R.; Chen, X.; Cao, S.; Zhang, X. Random forest-based recognition of isolated sign language subwords using data from accelerometers and surface electromyographic sensors. Sensors 2016 , 16 , 100. [ Google Scholar ] [ CrossRef ]

- Li, M.; Wang, F.; Jia, K.; Zhao, S.; Li, C. A Sign Language Interactive System based on Multi-feature Fusion. In Proceedings of the 2019 IEEE 9th Annual International Conference on CYBER Technology in Automation, Control, and Intelligent Systems (CYBER), Suzhou, China, 29 July–2 August 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 237–241. [ Google Scholar ]

- Li, Y.; Chen, X.; Zhang, X.; Wang, K.; Wang, Z.J. A sign-component-based framework for Chinese sign language recognition using accelerometer and sEMG data. IEEE Trans. Biomed. Eng. 2012 , 59 , 2695–2704. [ Google Scholar ]

- Zeng, Z.; Wang, F. An Attention Based Chinese Sign Language Recognition Method Using sEMG Signal. In Proceedings of the 2022 12th International Conference on CYBER Technology in Automation, Control, and Intelligent Systems (CYBER), Baishan, China, 27–31 July 2022; IEEE: Piscataway, NJ, USA, 2022; pp. 457–461. [ Google Scholar ]

- Wang, N.; Ma, Z.; Tang, Y.; Liu, Y.; Li, Y.; Niu, J. An optimized scheme of mel frequency cepstral coefficient for multi-sensor sign language recognition. In Smart Computing and Communication: Proceedings of the First International Conference, SmartCom 2016, Shenzhen, China, 17–19 December 2016 ; Proceedings 1; Springer International Publishing: Berlin/Heidelberg, Germany, 2016; pp. 224–235. [ Google Scholar ]

- Yang, X.; Chen, X.; Cao, X.; Wei, S.; Zhang, X. Chinese sign language recognition based on an optimized tree-structure framework. IEEE J. Biomed. Health Inform. 2016 , 21 , 994–1004. [ Google Scholar ] [ CrossRef ]

- Wang, Z.; Zhao, T.; Ma, J.; Chen, H.; Liu, K.; Shao, H.; Wang, Q.; Ren, J. Hear sign language: A real-time end-to-end sign language recognition system. IEEE Trans. Mob. Comput. 2020 , 21 , 2398–2410. [ Google Scholar ] [ CrossRef ]

- Li, Y.; Chen, X.; Zhang, X.; Wang, K.; Yang, J. Interpreting sign components from accelerometer and sEMG data for automatic sign language recognition. In Proceedings of the 2011 Annual International Conference of the IEEE Engineering in Medicine and Biology Society, Boston, MA, USA, 30 August–3 September 2011; IEEE: Piscataway, NJ, USA, 2011; pp. 3358–3361. [ Google Scholar ]

- Xue, B.; Wu, L.; Wang, K.; Zhang, X.; Cheng, J.; Chen, X.; Chen, X. Multiuser gesture recognition using sEMG signals via canonical correlation analysis and optimal transport. Comput. Biol. Med. 2021 , 130 , 104188. [ Google Scholar ] [ CrossRef ]

- Li, J.; Meng, J.; Gong, H.; Fan, Z. Research on Continuous Dynamic Gesture Recognition of Chinese Sign Language Based on Multi-Mode Fusion. IEEE Access 2022 , 10 , 106946–106957. [ Google Scholar ] [ CrossRef ]

- Qian, Z.; JiaZhen, J.; Dong, W.; Run, Z. WearSign: Pushing the Limit of Sign Language Translation Using Inertial and EMG Wearables. ACM Interact. Mob. Wearable Ubiquitous Technol. 2022 , 35 , 1–27. [ Google Scholar ]

- Wu, J.; Sun, L.; Jafari, R. A wearable system for recognizing American sign language in real-time using IMU and surface EMG sensors. IEEE J. Biomed. Health Inform. 2016 , 20 , 1281–1290. [ Google Scholar ] [ CrossRef ]

- Wu, J.; Tian, Z.; Sun, L.; Estevez, L.; Jafari, R. Real-time American sign language recognition using wrist-worn motion and surface EMG sensors. In Proceedings of the 2015 IEEE 12th International Conference on Wearable and Implantable Body Sensor Networks (BSN), Cambridge, MA, USA, 9–12 June 2015; IEEE: Piscataway, NJ, USA, 2015; pp. 1–6. [ Google Scholar ]

- Savur, C.; Sahin, F. American Sign Language Recognition system by using surface EMG signal. In Proceedings of the 2016 IEEE International Conference on Systems, Man, and Cybernetics (SMC), Budapest, Hungary, 9–12 October 2016; IEEE: Piscataway, NJ, USA, 2016; pp. 002872–002877. [ Google Scholar ]

- Zhang, Q.; Wang, D.; Zhao, R.; Yu, Y. MyoSign: Enabling end-to-end sign language recognition with wearables. In Proceedings of the 24th International Conference on Intelligent User Interfaces, Marina del Ray, CA, USA, 17–20 March 2019; pp. 650–660. [ Google Scholar ]

- Andronache, C.; Negru, M.; Neacsu, A.; Cioroiu, G.; Radoi, A.; Burileanu, C. Towards extending real-time EMG-based gesture recognition system. In Proceedings of the 2020 43rd International Conference on Telecommunications and Signal Processing (TSP), Milan, Italy, 7–9 July 2020; IEEE: Piscataway, NJ, USA, 2020; pp. 301–304. [ Google Scholar ]

- Rodríguez-Tapia, B.; Ochoa-Zezzatti, A.; Marrufo, A.I.S.; Arballo, N.C.; Carlos, P.A. Sign Language Recognition Based on EMG Signals through a Hibrid Intelligent System. Res. Comput. Sci. 2019 , 148 , 253–262. [ Google Scholar ] [ CrossRef ]

- Derr, C.; Sahin, F. Signer-independent classification of American sign language word signs using surface EMG. In Proceedings of the 2017 IEEE International Conference on Systems, Man, and Cybernetics (SMC), Banff, AB, Canada, 5–8 October 2017; IEEE: Piscataway, NJ, USA, 2017; pp. 665–670. [ Google Scholar ]

- Tateno, S.; Liu, H.; Ou, J. Development of sign language motion recognition system for hearing-impaired people using electromyography signal. Sensors 2020 , 20 , 5807. [ Google Scholar ] [ CrossRef ]

- Jiang, S.; Gao, Q.; Liu, H.; Shull, P.B. A novel, co-located EMG-FMG-sensing wearable armband for hand gesture recognition. Sens. Actuators A Phys. 2020 , 301 , 111738. [ Google Scholar ] [ CrossRef ]

- Catalan-Salgado, E.A.; Lopez-Ramirez, C.; Zagal-Flores, R. American Sign Language Electromiographic Alphabet Sign Translator. In Telematics and Computing: Proceedings of the 8th International Congress, WITCOM 2019, Merida, Mexico, 4–8 November 2019 ; Proceedings 8; Springer International Publishing: Berlin/Heidelberg, Germany, 2019; pp. 162–170. [ Google Scholar ]

- Fatmi, R.; Rashad, S.; Integlia, R.; Hutchison, G. American Sign Language Recognition using Hidden Markov Models and Wearable Motion Sensors. Trans. Mach. Learn. Data Min. 2017 , 10 , 41–55. [ Google Scholar ]

- Fatmi, R.; Rashad, S.; Integlia, R. Comparing ANN, SVM, and HMM based machine learning methods for American sign language recognition using wearable motion sensors. In Proceedings of the 2019 IEEE 9th annual computing and communication workshop and conference (CCWC), Las Vegas, NV, USA, 7–9 January 2018; pp. 290–297. [ Google Scholar ]

- Serdana, F.I. Controlling 3D Model of Human Hand Exploiting Synergistic Activation of The Upper Limb Muscles. In Proceedings of the 2022 International Electronics Symposium (IES), Surabaya, Indonesia, 9–11 August 2022; IEEE: Piscataway, NJ, USA, 2022; pp. 142–149. [ Google Scholar ]

- Paudyal, P.; Lee, J.; Banerjee, A.; Gupta, S.K. Dynamic feature selection and voting for real-time recognition of fingerspelled alphabet using wearables. In Proceedings of the 22nd International Conference on Intelligent User Interfaces, Limassol, Cyprus, 13–16 March 2017; pp. 457–467. [ Google Scholar ]

- Anetha, K.; Rejina Parvin, J. Hand talk-a sign language recognition based on accelerometer and SEMG data. Int. J. Innov. Res. Comput. Commun. Eng. 2014 , 2 , 206–215. [ Google Scholar ]

- Shakeel, Z.M.; So, S.; Lingga, P.; Jeong, J.P. MAST: Myo Armband Sign-Language Translator for Human Hand Activity Classification. In Proceedings of the 2020 International Conference on Information and Communication Technology Convergence (ICTC), Jeju, Republic of Korea, 21–23 October 2020; IEEE: Piscataway, NJ, USA, 2020; pp. 494–499. [ Google Scholar ]

- Amrani, M.Z.; Borst, C.W.; Achour, N. Multi-sensory assessment for hand pattern recognition. Biomed. Signal Process. Control 2022 , 72 , 103368. [ Google Scholar ] [ CrossRef ]

- Savur, C.; Sahin, F. Real-time american sign language recognition system using surface emg signal. In Proceedings of the 2015 IEEE 14th International Conference on Machine Learning and Applications (ICMLA), Miami, FL, USA, 9–11 December 2015; IEEE: Piscataway, NJ, USA, 2015; pp. 497–502. [ Google Scholar ]

- Paudyal, P.; Banerjee, A.; Gupta, S.K. Sceptre: A pervasive, non-invasive, and programmable gesture recognition technology. In Proceedings of the 21st International Conference on Intelligent User Interfaces, Sonoma, CA, USA, 7–10 March 2016; pp. 282–293. [ Google Scholar ]

- Qi, S.; Wu, X.; Chen, W.H.; Liu, J.; Zhang, J.; Wang, J. sEMG-based recognition of composite motion with convolutional neural network. Sens. Actuators A Phys. 2020 , 311 , 112046. [ Google Scholar ] [ CrossRef ]

- Divya, B.; Delpha, J.; Badrinath, S. Public speaking words (Indian sign language) recognition using EMG. In Proceedings of the 2017 International Conference on Smart Technologies for Smart Nation (SmartTechCon), Bengaluru, India, 17–19 August 2017; IEEE: Piscataway, NJ, USA, 2017; pp. 798–800. [ Google Scholar ]

- Gupta, R. A quantitative performance assessment of surface EMG and accelerometer in sign language recognition. In Proceedings of the 2019 9th Annual Information Technology, Electromechanical Engineering and Microelectronics Conference (IEMECON), Jaipur, India, 13–15 March 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 242–246. [ Google Scholar ]